Songcheng Xu

DConAD: A Differencing-based Contrastive Representation Learning Framework for Time Series Anomaly Detection

Apr 19, 2025

Abstract: Time series anomaly detection holds notable importance for risk identification and fault detection across diverse application domains. Unsupervised learning methods have become popular because they have no requirement for labels. However, due to the challenges posed by the multiplicity of abnormal patterns, the sparsity of anomalies, and the growth of data scale and complexity, these methods often fail to capture robust and representative dependencies within the time series for identifying anomalies. To enhance the ability of models to capture normal patterns of time series and avoid the retrogression of modeling ability triggered by the dependencies on high-quality prior knowledge, we propose a differencing-based contrastive representation learning framework for time series anomaly detection (DConAD). Specifically, DConAD generates differential data to provide additional information about time series and utilizes transformer-based architecture to capture spatiotemporal dependencies, which enhances the robustness of unbiased representation learning ability. Furthermore, DConAD implements a novel KL divergence-based contrastive learning paradigm that only uses positive samples to avoid deviation from reconstruction and deploys the stop-gradient strategy to compel convergence. Extensive experiments on five public datasets show the superiority and effectiveness of DConAD compared with nine baselines. The code is available at https://github.com/shaieesss/DConAD.

Efficient Prompting Methods for Large Language Models: A Survey

Apr 01, 2024

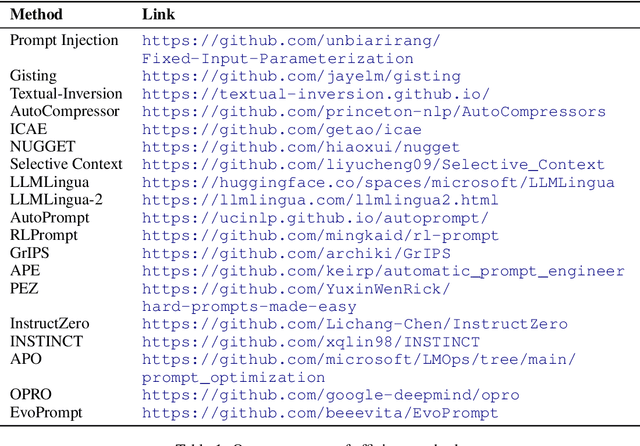

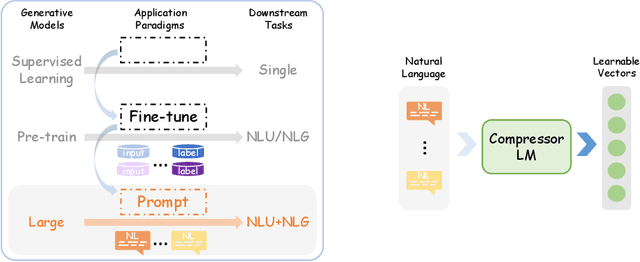

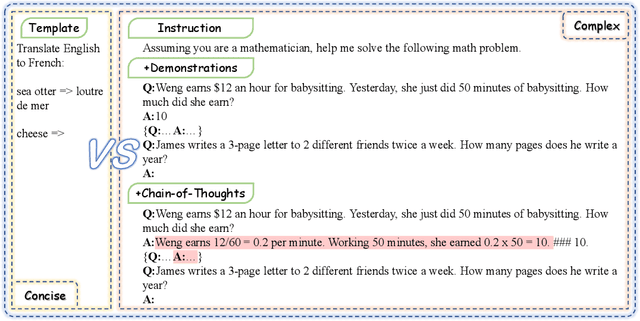

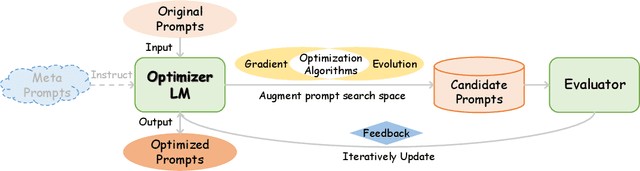

Abstract: Prompting has become a mainstream paradigm for adapting large language models (LLMs) to specific natural language processing tasks. While this approach opens the door to in-context learning of LLMs, it brings the additional computational burden of model inference and human effort of manual-designed prompts, particularly when using lengthy and complex prompts to guide and control the behavior of LLMs. As a result, the LLM field has seen a remarkable surge in efficient prompting methods. In this paper, we present a comprehensive overview of these methods. At a high level, efficient prompting methods can broadly be categorized into two approaches: prompting with efficient computation and prompting with efficient design. The former involves various ways of compressing prompts, and the latter employs techniques for automatic prompt optimization. We present the basic concepts of prompting, review the advances for efficient prompting, and highlight future research directions.
