Slawomir Grzonkowski

Deep Detector Health Management under Adversarial Campaigns

Nov 19, 2019

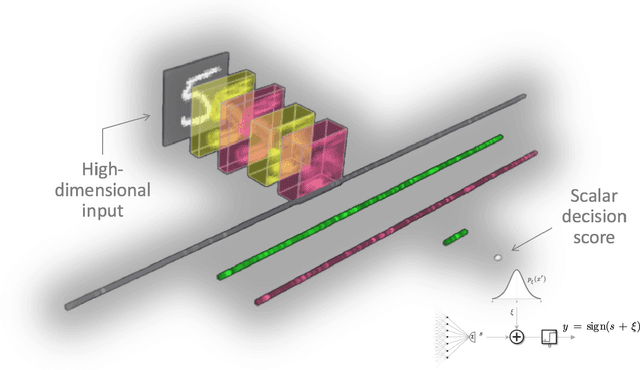

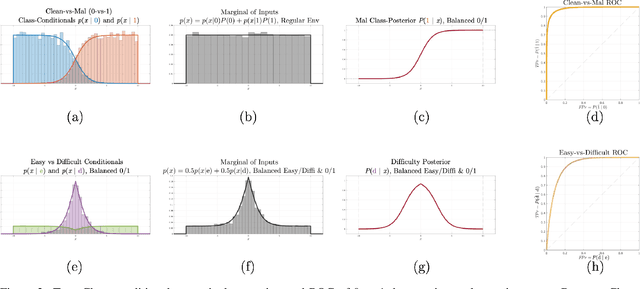

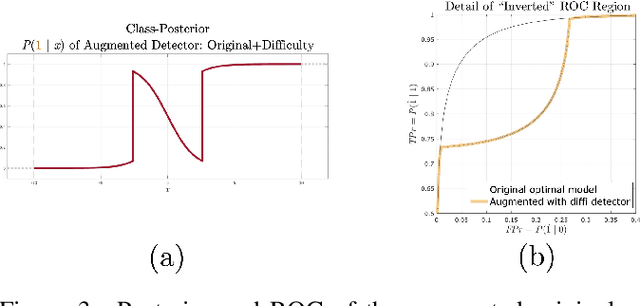

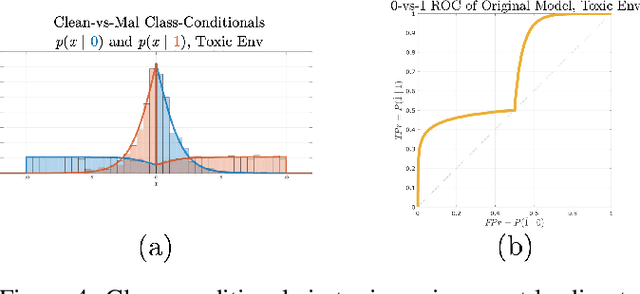

Abstract: Machine learning models are vulnerable to adversarial inputs that induce seemingly unjustifiable errors. As automated classifiers are increasingly used in industrial control systems and machinery, these adversarial errors could grow to be a serious problem. Despite numerous studies over the past few years, the field of adversarial ML is still considered alchemy, with no practical unbroken defenses demonstrated to date, leaving PHM practitioners with few meaningful ways of addressing the problem. We introduce turbidity detection as a practical superset of the adversarial input detection problem, coping with adversarial campaigns rather than statistically invisible one-offs. This perspective is coupled with ROC-theoretic design guidance that prescribes an inexpensive domain adaptation layer at the output of a deep learning model during an attack campaign. The result aims to approximate the Bayes optimal mitigation that ameliorates the detection model's degraded health. A proactively reactive type of prognostics is achieved via Monte Carlo simulation of various adversarial campaign scenarios, by sampling from the model's own turbidity distribution to quickly deploy the correct mitigation during a real-world campaign.

Finding Rats in Cats: Detecting Stealthy Attacks using Group Anomaly Detection

May 20, 2019

Abstract: Advanced attack campaigns span multiple stages and stay stealthy for long periods of time. There is a growing trend of attackers using off-the-shelf tools and pre-installed system applications (such as *powershell* and *wmic*) to evade detection, because system administrators and security analysts use the same tools for legitimate routine tasks. To start investigations, event logs can be collected from operational systems; however, these logs are so generic that it is often impossible to attribute a potential attack to a specific attack group. Recent approaches in the literature have used anomaly detection techniques, which aim to distinguish between malicious and normal behavior of computers or network systems. Unfortunately, anomaly detection systems based on point anomalies are too rigid, in the sense that they can miss malicious activity and fail to classify the attack as an outlier. Better detection of malicious activity therefore remains a research challenge. To address this challenge, in this paper we leverage Group Anomaly Detection (GAD), which detects anomalous collections of individual data points. Our approach is to build a neural network model utilizing an Adversarial Autoencoder (AAE-$\alpha$) to detect the activity of an attacker who leverages off-the-shelf tools and system applications. In addition, we build *Behavior2Vec* and *Command2Vec*, sentence embedding deep learning models specific to feature extraction tasks. We conduct extensive experiments to evaluate our models on real-world datasets collected over a period of two months. The empirical results demonstrate that our approach is effective and robust in discovering targeted attacks, pen-tests, and attack campaigns leveraging custom tools.
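The contrast between point anomalies and group anomalies drawn in this abstract can be illustrated with a toy example. The sketch below is not the AAE-$\alpha$ model from the paper; it swaps in a simple z-test on a group mean, and the baseline values, the `session` data, and the function names are all hypothetical. It only shows how a collection of events can be anomalous as a group even though no single event crosses a per-point threshold.

```python
import math

def point_flags(values, mu, sigma, threshold=3.0):
    """Per-point check: flag a value only if it lies more than
    `threshold` standard deviations from the baseline mean."""
    return [abs(v - mu) / sigma > threshold for v in values]

def group_z(values, mu, sigma):
    """Group check: z-score of the group's mean under the baseline,
    using the standard error sigma / sqrt(n)."""
    n = len(values)
    mean = sum(values) / n
    return (mean - mu) / (sigma / math.sqrt(n))

# Hypothetical baseline for some per-event feature (assumed, not from the paper).
baseline_mu, baseline_sigma = 10.0, 2.0

# Hypothetical session: every event is only mildly elevated.
session = [13.0, 12.5, 13.5, 13.0, 12.0, 13.0, 13.5, 12.5]

no_point_alarm = not any(point_flags(session, baseline_mu, baseline_sigma))
group_score = group_z(session, baseline_mu, baseline_sigma)
group_alarm = group_score > 3.0
```

In this toy setting the per-point detector stays silent while the group-level score exceeds the threshold, which is the kind of gap group anomaly detection is meant to close.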

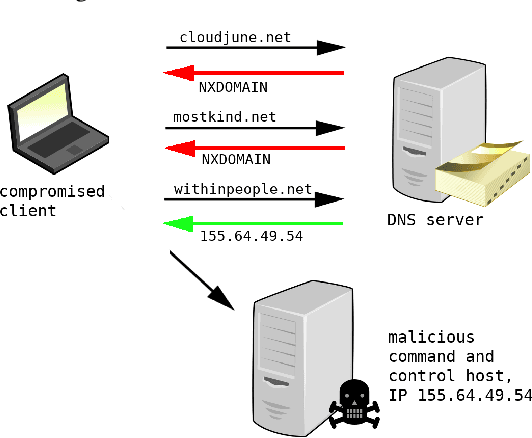

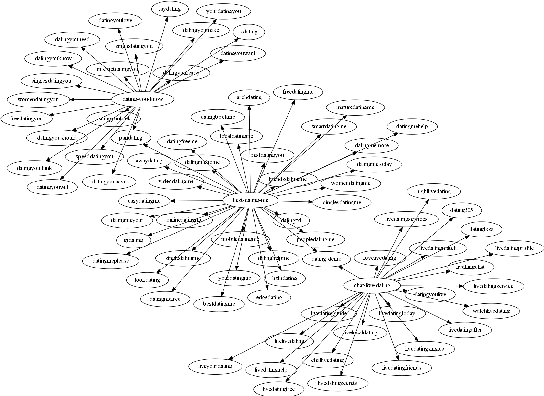

Detecting DGA domains with recurrent neural networks and side information

Oct 04, 2018

Abstract: Modern malware typically makes use of a domain generation algorithm (DGA) to avoid command and control domains or IPs being seized or sinkholed. This means that an infected system may attempt to access many domains in an attempt to contact the command and control server. Therefore, the automatic detection of DGA domains is an important task, both for the sake of blocking malicious domains and identifying compromised hosts. However, many DGAs use English wordlists to generate plausibly clean-looking domain names; this makes automatic detection difficult. In this work, we devise a notion of difficulty for DGA families called the smashword score; this measures how much a DGA family looks like English words. We find that this measure accurately reflects how much a DGA family's domains look like they are made from natural English words. We then describe our new modeling approach, which is a combination of a novel recurrent neural network architecture with domain registration side information. Our experiments show the model is capable of effectively identifying domains generated by difficult DGA families. Our experiments also show that our model outperforms existing approaches, and is able to reliably detect difficult DGA families such as matsnu, suppobox, rovnix, and others. The model's performance compared to the state of the art is best for DGA families that resemble English words. We believe that this model could be used either in a standalone DGA domain detector, such as an endpoint security application, or as part of a larger malware detection system.
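The abstract does not define the smashword score, so the following is only an illustrative stand-in for the general idea of scoring how word-like a DGA family's domains are. It computes a hypothetical "word coverage" measure: the largest fraction of a domain label's characters that can be covered by non-overlapping dictionary words. The function names, the tiny wordlist, and the sample domains are assumptions made for this sketch.

```python
def word_coverage(label, words, min_len=3, max_len=20):
    """Largest fraction of characters in `label` that can be covered by
    non-overlapping entries of `words` (each at least `min_len` long)."""
    n = len(label)
    if n == 0:
        return 0.0
    # best[i] = maximum number of covered characters within label[:i]
    best = [0] * (n + 1)
    for i in range(1, n + 1):
        best[i] = best[i - 1]  # option: leave character i-1 uncovered
        for j in range(max(0, i - max_len), i - min_len + 1):
            if label[j:i] in words:
                best[i] = max(best[i], best[j] + (i - j))
    return best[n] / n

def family_score(domains, words):
    """Mean word coverage over the first label of each domain in a family."""
    labels = [d.split(".")[0].lower() for d in domains]
    return sum(word_coverage(label, words) for label in labels) / len(labels)

# Hypothetical wordlist and domains, for illustration only.
wordlist = {"west", "coast", "paper", "house"}
wordy_family = ["westcoast.com", "paperhouse.net"]
random_family = ["xkqzvbnw.com", "qqzzjjxx.org"]

wordy = family_score(wordy_family, wordlist)
randomish = family_score(random_family, wordlist)
```

Under this stand-in measure, a family built from concatenated dictionary words scores near 1 and a family of random character strings scores near 0, which matches the intuition that wordlist-based families are the harder ones to separate from benign domains.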

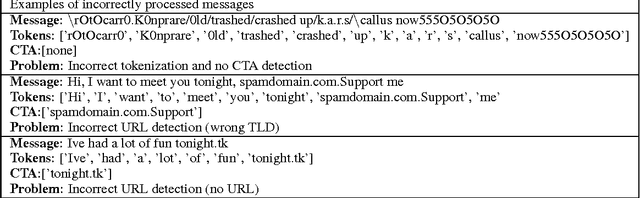

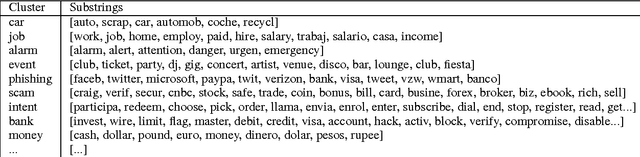

On Detecting Messaging Abuse in Short Text Messages using Linguistic and Behavioral patterns

Aug 18, 2014

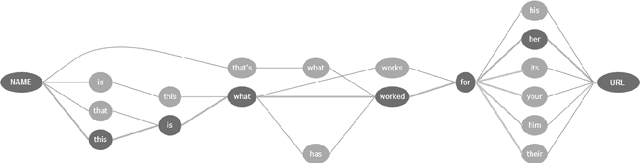

Abstract: The use of short text messages in social media and instant messaging has become a popular communication channel in recent years. This rising popularity has caused an increase in messaging threats such as spam, phishing, and malware. Processing these short text message threats poses additional challenges, such as the presence of lexical variants, SMS-like contractions, or advanced obfuscations, which can degrade the performance of traditional filtering solutions. Using a real-world SMS data set from a large telecommunications operator in the US and a social media corpus, in this paper we analyze the effectiveness of machine learning filters based on linguistic and behavioral patterns in detecting short text spam and abusive users in the network. We have also explored different ways to deal with short text message challenges such as tokenization and entity detection by using text normalization and substring clustering techniques. The obtained results show the validity of the proposed solution, which improves on baseline approaches.
