Siyuan He

Balanced Anomaly-guided Ego-graph Diffusion Model for Inductive Graph Anomaly Detection

Feb 05, 2026

Abstract:Graph anomaly detection (GAD) is crucial in applications like fraud detection and cybersecurity. Despite recent advancements using graph neural networks (GNNs), two major challenges persist. At the model level, most methods adopt a transductive learning paradigm, which assumes static graph structures, making them unsuitable for dynamic, evolving networks. At the data level, the extreme class imbalance, where anomalous nodes are rare, leads to biased models that fail to generalize to unseen anomalies. These challenges are interdependent: static transductive frameworks limit effective data augmentation, while imbalance exacerbates model distortion in inductive learning settings. To address these challenges, we propose a novel data-centric framework that integrates dynamic graph modeling with balanced anomaly synthesis. Our framework features: (1) a discrete ego-graph diffusion model, which captures the local topology of anomalies to generate ego-graphs aligned with the anomalous structural distribution, and (2) a curriculum anomaly augmentation mechanism, which dynamically adjusts synthetic data generation during training, focusing on underrepresented anomaly patterns to improve detection and generalization. Experiments on five datasets demonstrate the effectiveness of our framework.

T-Retriever: Tree-based Hierarchical Retrieval Augmented Generation for Textual Graphs

Jan 08, 2026

Abstract:Retrieval-Augmented Generation (RAG) has significantly enhanced Large Language Models' ability to access external knowledge, yet current graph-based RAG approaches face two critical limitations in managing hierarchical information: they impose rigid layer-specific compression quotas that damage local graph structures, and they prioritize topological structure while neglecting semantic content. We introduce T-Retriever, a novel framework that reformulates attributed graph retrieval as tree-based retrieval using a semantic and structure-guided encoding tree. Our approach features two key innovations: (1) Adaptive Compression Encoding, which replaces artificial compression quotas with a global optimization strategy that preserves the graph's natural hierarchical organization, and (2) Semantic-Structural Entropy ($S^2$-Entropy), which jointly optimizes for both structural cohesion and semantic consistency when creating hierarchical partitions. Experiments across diverse graph reasoning benchmarks demonstrate that T-Retriever significantly outperforms state-of-the-art RAG methods, providing more coherent and contextually relevant responses to complex queries.

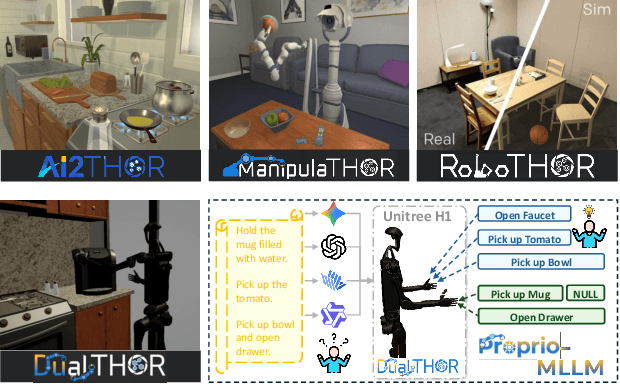

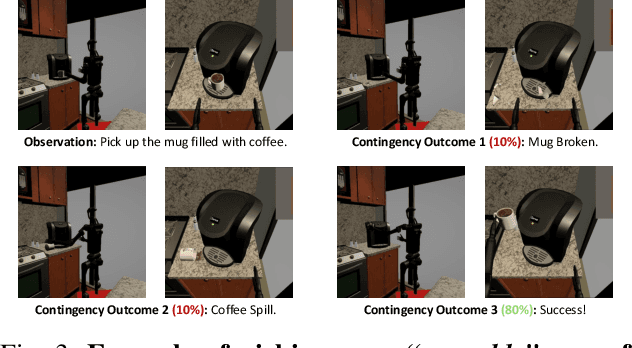

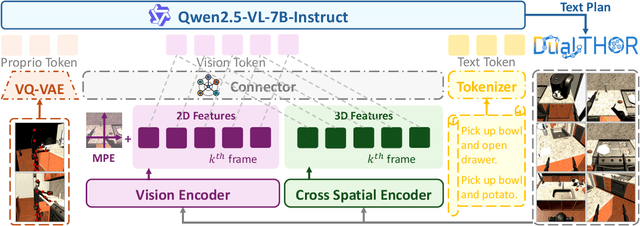

Towards Proprioception-Aware Embodied Planning for Dual-Arm Humanoid Robots

Oct 09, 2025

Abstract:In recent years, Multimodal Large Language Models (MLLMs) have demonstrated the ability to serve as high-level planners, enabling robots to follow complex human instructions. However, their effectiveness, especially in long-horizon tasks involving dual-arm humanoid robots, remains limited. This limitation arises from two main challenges: (i) the absence of simulation platforms that systematically support task evaluation and data collection for humanoid robots, and (ii) the insufficient embodiment awareness of current MLLMs, which hinders reasoning about dual-arm selection logic and body positions during planning. To address these issues, we present DualTHOR, a new dual-arm humanoid simulator, with continuous transition and a contingency mechanism. Building on this platform, we propose Proprio-MLLM, a model that enhances embodiment awareness by incorporating proprioceptive information with motion-based position embedding and a cross-spatial encoder. Experiments show that, while existing MLLMs struggle in this environment, Proprio-MLLM achieves an average improvement of 19.75% in planning performance. Our work provides both an essential simulation platform and an effective model to advance embodied intelligence in humanoid robotics. The code is available at https://anonymous.4open.science/r/DualTHOR-5F3B.

Adaptive Low Rank Adaptation of Segment Anything to Salient Object Detection

Aug 10, 2023

Abstract:Foundation models, such as OpenAI's GPT-3 and GPT-4, Meta's LLaMA, and Google's PaLM2, have revolutionized the field of artificial intelligence. A notable paradigm shift has been the advent of the Segment Anything Model (SAM), which has exhibited a remarkable capability to segment real-world objects, trained on 1 billion masks and 11 million images. Although SAM excels in general object segmentation, it lacks the intrinsic ability to detect salient objects, resulting in suboptimal performance in this domain. To address this challenge, we present the Segment Salient Object Model (SSOM), an innovative approach that adaptively fine-tunes SAM for salient object detection by harnessing the low-rank structure inherent in deep learning. Comprehensive qualitative and quantitative evaluations across five challenging RGB benchmark datasets demonstrate the superior performance of our approach, surpassing state-of-the-art methods.
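The low-rank idea mentioned in the abstract above can be illustrated with a minimal sketch. This is a generic low-rank adapter on a single frozen weight matrix, not SSOM's actual method: the abstract does not describe how the adaptation is made adaptive, and the class name `LowRankAdapter`, the `scale` parameter, and the initialization choices are assumptions made for illustration.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LowRankAdapter:
    """Hypothetical adapter: effective weight = W + scale * (B @ A), with W frozen."""

    def __init__(self, weight, rank, scale=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen pretrained weight, shape d_out x d_in
        # A starts small and random, B starts at zero, so the update B @ A is
        # exactly zero before any training (an assumed, commonly used choice).
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = scale

    def effective_weight(self):
        delta = matmul(self.B, self.A)  # d_out x d_in, rank at most `rank`
        return [
            [w + self.scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(self.weight, delta)
        ]

    def forward(self, x):
        """Apply the adapted linear map to one input vector of length d_in."""
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.effective_weight()]

    def trainable_params(self):
        """Count only the entries of A and B; W is never updated."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


# Toy 4 x 6 weight standing in for one layer of a pretrained model.
W = [[float(i + j) for j in range(6)] for i in range(4)]
adapter = LowRankAdapter(W, rank=2)
x = [1.0, 0.0, 2.0, 0.0, 0.0, 1.0]
base_out = [sum(w * xi for w, xi in zip(row, x)) for row in W]
```

In this toy setting the adapter trains 20 values (2 x 6 for `A` plus 4 x 2 for `B`) instead of the 24 entries of `W`; the saving grows quickly with layer size because the adapter scales with `rank * (d_in + d_out)` rather than `d_in * d_out`.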

A Trustworthy Framework for Medical Image Analysis with Deep Learning

Dec 06, 2022

Abstract:Computer vision and machine learning are playing an increasingly important role in computer-assisted diagnosis; however, the application of deep learning to medical imaging has challenges in data availability and data imbalance, and it is especially important that models for medical imaging are built to be trustworthy. Therefore, we propose TRUDLMIA, a trustworthy deep learning framework for medical image analysis, which adopts a modular design, leverages self-supervised pre-training, and utilizes a novel surrogate loss function. Experimental evaluations indicate that models generated from the framework are both trustworthy and high-performing. It is anticipated that the framework will support researchers and clinicians in advancing the use of deep learning for dealing with public health crises including COVID-19.

Performance or Trust? Why Not Both. Deep AUC Maximization with Self-Supervised Learning for COVID-19 Chest X-ray Classifications

Dec 14, 2021

Abstract:Effective representation learning is key to improving model performance for medical image analysis. In training deep learning models, a compromise often must be made between performance and trust, both of which are essential for medical applications. Moreover, models optimized with cross-entropy loss tend to suffer from unwarranted overconfidence in the majority class and over-cautiousness in the minority class. In this work, we integrate a new surrogate loss with self-supervised learning for computer-aided screening of COVID-19 patients using radiography images. In addition, we adopt a new quantification score to measure a model's trustworthiness. An ablation study of feature learning methods and loss functions is conducted for both performance and trust. Comparisons show that leveraging the new surrogate loss on self-supervised models can produce label-efficient networks that are both high-performing and trustworthy.

* 3 pages
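To give a rough sense of what an AUC surrogate loss looks like, here is a minimal sketch of a generic pairwise squared-hinge surrogate. It is not the loss used in the paper above, whose exact form the abstract does not state; the function names and the `margin` parameter are assumptions for illustration. The point it shows is that the loss depends on how positives rank against negatives, so it is insensitive to how many negatives there are per positive.

```python
def _split_by_label(scores, labels):
    """Separate scores into positives (label 1) and negatives (label 0)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    return pos, neg


def pairwise_auc_surrogate(scores, labels, margin=1.0):
    """Mean squared hinge over all (positive, negative) score pairs.

    A pair contributes zero once the positive outscores the negative
    by at least `margin`; otherwise it is penalized quadratically.
    """
    pos, neg = _split_by_label(scores, labels)
    total = sum(max(0.0, margin - (p - n)) ** 2 for p in pos for n in neg)
    return total / (len(pos) * len(neg))


def empirical_auc(scores, labels):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos, neg = _split_by_label(scores, labels)
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

Because the surrogate is differentiable almost everywhere in the scores, it can be minimized by gradient descent, whereas the empirical AUC itself is a step function of the scores.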

Simulation of Skin Stretching around the Forehead Wrinkles in Rhytidectomy

Jan 01, 2020

Abstract:Objective: Skin stretching around the forehead wrinkles is an important method in rhytidectomy. Proper parameters are required to evaluate the surgical effect. In this paper, a simulation method is proposed to obtain these parameters. Methods: Three-dimensional point cloud data with a resolution of 50 μm were employed. First, a smooth supporting contour under the wrinkled forehead was generated via B-spline interpolation and extrapolation to constrain the deformation of the wrinkled zone. Then, based on the vector form intrinsic finite element (VFIFE) algorithm, the deformation of wrinkled forehead skin during stretching was simulated in MATLAB. Finally, the stress distribution and the residual wrinkles of the forehead skin were used to evaluate the surgical effect. Results: Although the residual wrinkles are similar when forehead wrinkles are finitely stretched, their stress distribution changes greatly. This indicates that the stress distribution in the skin is effective for evaluating the surgical effect, and that forehead wrinkles are easily overstretched, which may lead to skin injuries. Conclusion: The simulation method can predict stress distribution and residual wrinkles after forehead wrinkle stretching surgery, and could potentially be used to control the surgical process and further reduce the risk of skin injury.
