Stephane Tremblay

Improving Classification Model Performance on Chest X-Rays through Lung Segmentation

Feb 22, 2022

Abstract: Chest radiography is an effective screening tool for diagnosing pulmonary diseases. In computer-aided diagnosis, extracting the relevant region of interest, i.e., isolating the lung region of each radiography image, can be an essential step towards improved performance in diagnosing pulmonary disorders. Methods: In this work, we propose a deep learning approach to enhance abnormal chest x-ray (CXR) identification performance through lung segmentation. Our approach is designed in a cascaded manner and incorporates two modules: a deep neural network with criss-cross attention modules (XLSor) for localizing the lung region in CXR images, and a CXR classification model whose backbone is a self-supervised momentum contrast (MoCo) model pre-trained on large-scale CXR data sets. The proposed pipeline is evaluated on the Shenzhen Hospital (SH) data set for the segmentation module, and on the COVIDx data set for both the segmentation and classification modules. A novel statistical analysis is conducted in addition to regular evaluation metrics for the segmentation module. Furthermore, the results of the optimized approach are analyzed with gradient-weighted class activation mapping (Grad-CAM) to investigate the rationale behind the classification decisions and to interpret the model's choices. Results and Conclusion: Different data sets, methods, and scenarios for each module of the proposed pipeline are examined to design an optimized approach, which achieves an accuracy of 0.946 in distinguishing abnormal CXR images (i.e., Pneumonia and COVID-19) from normal ones. Numerical and visual validations suggest that applying automated segmentation as a pre-processing step for classification improves the generalization capability and the performance of the classification models.
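The cascaded idea in this abstract (segment the lungs first, then classify only the lung region) can be illustrated with a minimal sketch. This is not the authors' code: the function names `apply_lung_mask` and `dice_score`, the 0.5 threshold, and the choice of the Dice coefficient as the "regular" segmentation metric are all assumptions made for illustration. The segmentation network itself (XLSor in the paper) is represented only by its output, a per-pixel lung probability map.

```python
import numpy as np

def apply_lung_mask(image, mask_prob, threshold=0.5):
    """Zero out every pixel outside the predicted lung region.

    `mask_prob` stands in for the per-pixel lung probability produced by a
    segmentation model; the threshold value is an assumption.
    """
    image = np.asarray(image, dtype=np.float32)
    mask_prob = np.asarray(mask_prob, dtype=np.float32)
    if image.shape != mask_prob.shape:
        raise ValueError("image and mask must have the same shape")
    binary_mask = mask_prob >= threshold
    return np.where(binary_mask, image, 0.0).astype(np.float32)

def dice_score(pred_mask, true_mask, eps=1e-7):
    """Dice overlap between two binary masks (1.0 means identical masks)."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    intersection = np.logical_and(pred, true).sum()
    return float((2.0 * intersection + eps) / (pred.sum() + true.sum() + eps))

if __name__ == "__main__":
    # Toy 2x2 "image" and probability map, purely illustrative values.
    cxr = [[1.0, 2.0], [3.0, 4.0]]
    lung_prob = [[0.9, 0.1], [0.4, 0.6]]
    print(apply_lung_mask(cxr, lung_prob))
```

The masked image would then be passed to the classifier in place of the raw radiograph, so that features outside the lungs cannot drive the prediction.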

Performance or Trust? Why Not Both. Deep AUC Maximization with Self-Supervised Learning for COVID-19 Chest X-ray Classifications

Dec 14, 2021

Abstract: Effective representation learning is key to improving model performance in medical image analysis. In training deep learning models, a compromise often must be made between performance and trust, both of which are essential for medical applications. Moreover, models optimized with cross-entropy loss tend to suffer from unwarranted overconfidence in the majority class and over-cautiousness in the minority class. In this work, we integrate a new surrogate loss with self-supervised learning for computer-aided screening of COVID-19 patients using radiography images. In addition, we adopt a new quantification score to measure a model's trustworthiness. An ablation study is conducted on both performance and trust across feature learning methods and loss functions. Comparisons show that leveraging the new surrogate loss on self-supervised models can produce label-efficient networks that are both high-performing and trustworthy.
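The abstract does not specify the surrogate loss, so the sketch below only illustrates the general idea behind AUC maximization: AUC counts how often a positive sample is scored above a negative one, and a differentiable pairwise surrogate penalizes positive/negative pairs that are not separated by a margin. The pairwise squared hinge used here is a generic textbook surrogate chosen for illustration, not the loss proposed in the paper, and the function names are hypothetical.

```python
import numpy as np

def _split_scores(scores, labels):
    """Return (positive scores, negative scores) for binary labels in {0, 1}."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    pos, neg = scores[labels == 1], scores[labels == 0]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative sample")
    return pos, neg

def empirical_auc(scores, labels):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos, neg = _split_scores(scores, labels)
    diff = pos[:, None] - neg[None, :]
    return float(((diff > 0) + 0.5 * (diff == 0)).mean())

def pairwise_squared_hinge(scores, labels, margin=1.0):
    """Illustrative AUC surrogate: mean of max(0, margin - (s_pos - s_neg))^2."""
    pos, neg = _split_scores(scores, labels)
    diff = pos[:, None] - neg[None, :]
    return float((np.maximum(0.0, margin - diff) ** 2).mean())

if __name__ == "__main__":
    s = [0.9, 0.8, 0.3, 0.1]
    y = [1, 0, 1, 0]
    print(empirical_auc(s, y), pairwise_squared_hinge(s, y))
```

Unlike cross-entropy, a loss of this shape depends only on the relative ordering of positive and negative scores, which is one reason AUC-style objectives are often considered for imbalanced screening data.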

* 3 pages

COVID-19 Detection from Chest X-ray Images using Imprinted Weights Approach

May 04, 2021

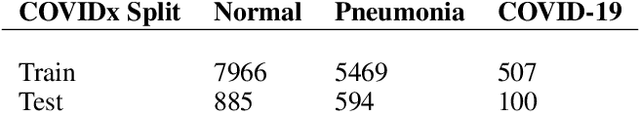

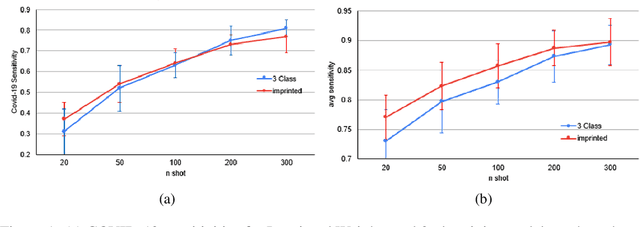

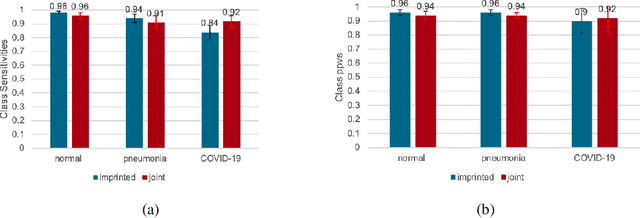

Abstract: The COVID-19 pandemic has had devastating effects on the well-being of the global population. The pandemic has been so prominent partly due to the high infection rate of the virus and its variants. In response, one of the most effective ways to stop infection is rapid diagnosis. The mainstream screening method, reverse transcription-polymerase chain reaction (RT-PCR), is time-consuming, laborious and in short supply. Chest radiography is an alternative screening method for COVID-19, and computer-aided diagnosis (CAD) has proven to be a viable, fast and low-cost solution; however, one of the challenges in training CAD models is the limited amount of training data, especially at the onset of the pandemic. This limitation is most acute precisely when quick and cheap diagnosis is critically needed for flattening the infection curve. To address this challenge, we propose the use of a low-shot learning approach named imprinted weights, taking advantage of the abundance of samples from known illnesses such as pneumonia to improve the detection performance on COVID-19.
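As a rough illustration of the imprinted-weights idea named in this abstract, the sketch below follows the general low-shot recipe: embeddings of the few available novel-class samples are L2-normalized, averaged, re-normalized, and appended as a new row of a cosine-similarity classifier that already covers the well-sampled base classes. The function names, the two-dimensional toy embeddings, and the scale factor are assumptions for illustration only; the paper's actual architecture and training details are not given in the abstract.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors along `axis` to unit Euclidean length."""
    x = np.asarray(x, dtype=np.float64)
    norm = np.linalg.norm(x, axis=axis, keepdims=True)
    return x / np.maximum(norm, eps)

def imprint_weights(base_weights, novel_embeddings):
    """Append one classifier row for a novel class.

    The new row is the normalized mean of the normalized embeddings of the
    few novel-class samples, so no gradient training is needed to add it.
    """
    prototype = l2_normalize(l2_normalize(novel_embeddings).mean(axis=0))
    return np.vstack([l2_normalize(base_weights), prototype])

def cosine_logits(embeddings, weights, scale=10.0):
    """Scaled cosine similarity between embeddings and class weight rows."""
    return scale * l2_normalize(embeddings) @ weights.T

if __name__ == "__main__":
    # Hypothetical base classes (e.g. normal, pneumonia) in a toy 2-D space.
    base = np.array([[1.0, 0.0], [0.0, 1.0]])
    few_covid_shots = np.array([[1.0, 1.0], [2.0, 2.0]])
    weights = imprint_weights(base, few_covid_shots)
    print(cosine_logits(np.array([[3.0, 3.0]]), weights).argmax(axis=1))
```

Because the new class row is computed directly from a handful of embeddings, the classifier can recognize the novel class immediately, and the imprinted row can optionally serve as an initialization for later fine-tuning.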
