Sixun Ouyang

Graphusion: A RAG Framework for Knowledge Graph Construction with a Global Perspective

Oct 23, 2024

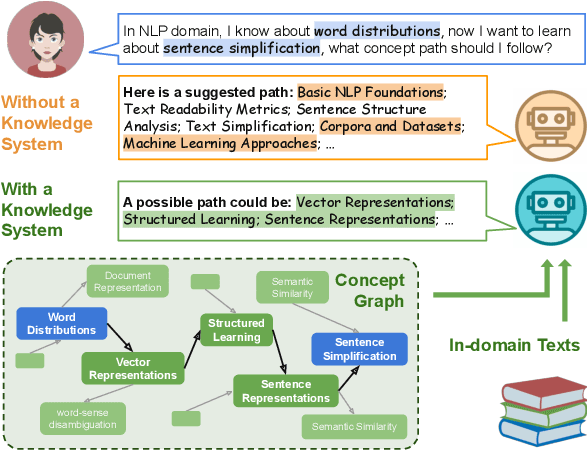

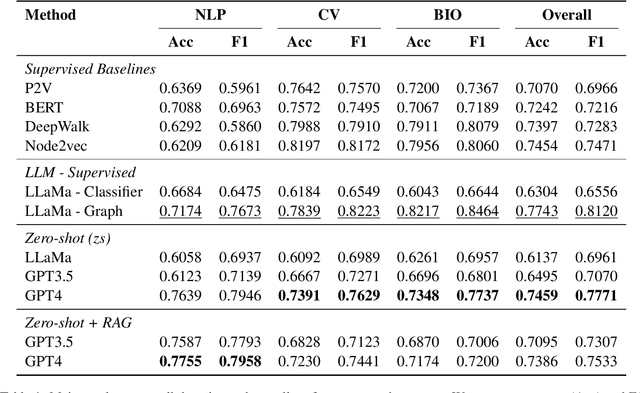

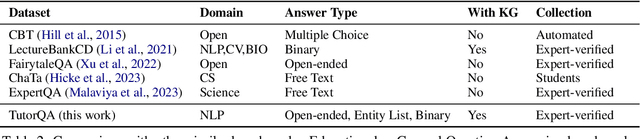

Abstract: Knowledge Graphs (KGs) are crucial in the field of artificial intelligence and are widely used in downstream tasks, such as question-answering (QA). The construction of KGs typically requires significant effort from domain experts. Large Language Models (LLMs) have recently been used for Knowledge Graph Construction (KGC). However, most existing approaches focus on a local perspective, extracting knowledge triplets from individual sentences or documents, missing a fusion process to combine the knowledge in a global KG. This work introduces Graphusion, a zero-shot KGC framework from free text. It contains three steps: in Step 1, we extract a list of seed entities using topic modeling to guide the final KG to include the most relevant entities; in Step 2, we conduct candidate triplet extraction using LLMs; in Step 3, we design the novel fusion module that provides a global view of the extracted knowledge, incorporating entity merging, conflict resolution, and novel triplet discovery. Results show that Graphusion achieves scores of 2.92 and 2.37 out of 3 for entity extraction and relation recognition, respectively. Moreover, we showcase how Graphusion could be applied to the Natural Language Processing (NLP) domain and validate it in an educational scenario. Specifically, we introduce TutorQA, a new expert-verified benchmark for QA, comprising six tasks and a total of 1,200 QA pairs. Using the Graphusion-constructed KG, we achieve a significant improvement on the benchmark, for example, a 9.2% accuracy improvement on sub-graph completion.
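The fusion idea in Step 3 (entity merging plus conflict resolution) can be illustrated with a toy sketch. This is not the paper's implementation: the abstract describes an LLM-driven module, whereas the sketch below swaps in a hand-written alias table and majority voting. The `ALIASES` table, the `canonical` and `fuse` helpers, and the relation names are all hypothetical, and novel triplet discovery is not covered.

```python
from collections import Counter, defaultdict

# Hypothetical alias table standing in for LLM-driven entity merging.
ALIASES = {"nn": "neural network", "neural networks": "neural network"}


def canonical(entity):
    """Map an entity mention to one canonical, lower-cased name."""
    name = entity.strip().lower()
    return ALIASES.get(name, name)


def fuse(candidate_triplets):
    """Merge entities, then keep the most frequent relation per entity pair.

    Majority voting is an assumption made for this sketch; it is a simple
    stand-in for the conflict resolution the abstract mentions.
    """
    votes = defaultdict(Counter)
    for head, relation, tail in candidate_triplets:
        votes[(canonical(head), canonical(tail))][relation] += 1

    fused = []
    for (head, tail), counter in sorted(votes.items()):
        relation, _count = counter.most_common(1)[0]
        fused.append((head, relation, tail))
    return fused


# Candidate triplets as they might come from separate documents (made up).
candidates = [
    ("NN", "prerequisite_of", "LSTM"),
    ("neural networks", "prerequisite_of", "LSTM"),
    ("Neural Network", "part_of", "LSTM"),
]
fused_graph = fuse(candidates)
print(fused_graph)
```

The three mentions collapse into a single entity, and the conflicting `part_of` relation loses the vote, which is the kind of global view that sentence-level extraction alone does not provide.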

Graphusion: Leveraging Large Language Models for Scientific Knowledge Graph Fusion and Construction in NLP Education

Jul 15, 2024

Abstract: Knowledge graphs (KGs) are crucial in the field of artificial intelligence and are widely applied in downstream tasks, such as enhancing Question Answering (QA) systems. The construction of KGs typically requires significant effort from domain experts. Recently, Large Language Models (LLMs) have been used for knowledge graph construction (KGC); however, most existing approaches focus on a local perspective, extracting knowledge triplets from individual sentences or documents. In this work, we introduce Graphusion, a zero-shot KGC framework from free text. The core fusion module provides a global view of triplets, incorporating entity merging, conflict resolution, and novel triplet discovery. We showcase how Graphusion could be applied to the natural language processing (NLP) domain and validate it in the educational scenario. Specifically, we introduce TutorQA, a new expert-verified benchmark for graph reasoning and QA, comprising six tasks and a total of 1,200 QA pairs. Our evaluation demonstrates that Graphusion surpasses supervised baselines by up to 10% in accuracy on link prediction. Additionally, it achieves average scores of 2.92 and 2.37 out of 3 in human evaluations for concept entity extraction and relation recognition, respectively.

Leveraging Large Language Models for Concept Graph Recovery and Question Answering in NLP Education

Feb 22, 2024

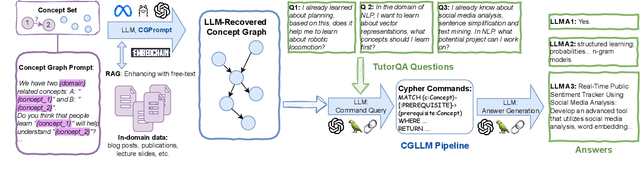

Abstract: In the domain of Natural Language Processing (NLP), Large Language Models (LLMs) have demonstrated promise in text-generation tasks. However, their educational applications, particularly for domain-specific queries, remain underexplored. This study investigates LLMs' capabilities in educational scenarios, focusing on concept graph recovery and question-answering (QA). We assess LLMs' zero-shot performance in creating domain-specific concept graphs and introduce TutorQA, a new expert-verified NLP-focused benchmark for scientific graph reasoning and QA. TutorQA consists of five tasks with 500 QA pairs. To tackle TutorQA queries, we present CGLLM, a pipeline integrating concept graphs with LLMs for answering diverse questions. Our results indicate that LLMs' zero-shot concept graph recovery is competitive with supervised methods, showing an average 3% F1 score improvement. In TutorQA tasks, LLMs achieve up to 26% F1 score enhancement. Moreover, human evaluation and analysis show that CGLLM generates answers with more fine-grained concepts.

Improving Explainable Recommendations with Synthetic Reviews

Jul 18, 2018

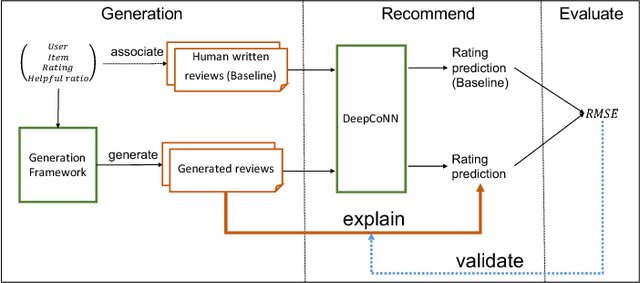

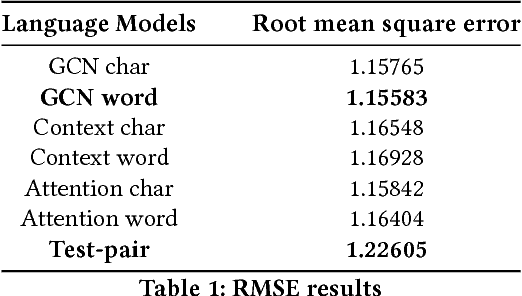

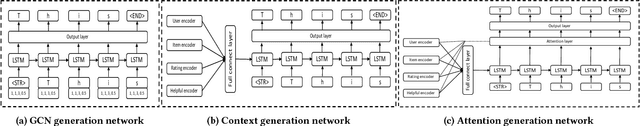

Abstract: An important task for a recommender system is to provide interpretable explanations for the user. This is important for the credibility of the system. Current interpretable recommender systems tend to focus on certain features known to be important to the user and offer their explanations in a structured form. It is well known that user-generated reviews and feedback from reviewers have strong leverage over the users' decisions. On the other hand, recent text generation works have been shown to generate text of similar quality to human-written text, and we aim to show that generated text can be successfully used to explain recommendations. In this paper, we propose a framework consisting of popular review-oriented generation models aiming to create personalised explanations for recommendations. The interpretations are generated at both character and word levels. We build a dataset containing reviewers' feedback from the Amazon books review dataset. Our cross-domain experiments are designed to bridge from natural language processing to the recommender system domain. Besides language model evaluation methods, we employ DeepCoNN, a novel review-oriented recommender system using a deep neural network, to evaluate the recommendation performance of generated reviews by root mean square error (RMSE). We demonstrate that the synthetic personalised reviews have better recommendation performance than human-written reviews. To our knowledge, this presents the first machine-generated natural language explanations for rating prediction.
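For readers unfamiliar with the metric, RMSE is the square root of the mean squared difference between predicted and observed ratings, so lower is better. The following is a minimal sketch of that standard formula only; the `rmse` helper and the rating values are made up for illustration and are not taken from the paper or from DeepCoNN.

```python
import math


def rmse(predicted, actual):
    """Root mean square error between two equal-length rating sequences."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Made-up ratings on a 1-5 scale, purely for illustration.
predicted_ratings = [4.0, 3.0, 5.0, 2.0]
actual_ratings = [5.0, 3.0, 4.0, 2.0]
score = rmse(predicted_ratings, actual_ratings)
print(round(score, 4))
```

Because errors are squared before averaging, a few large rating misses raise RMSE more than many small ones.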

Automatic Generation of Natural Language Explanations

Jul 04, 2017

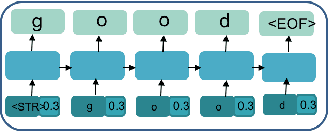

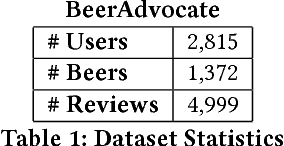

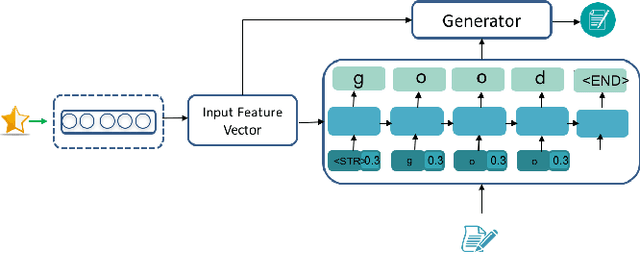

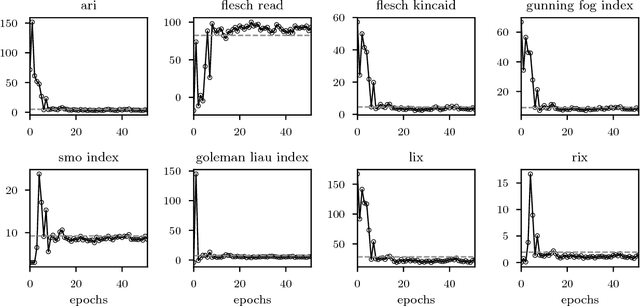

Abstract: An important task for a recommender system is to generate explanations according to a user's preferences. Most of the current methods for explainable recommendations use structured sentences to provide descriptions along with the recommendations they produce. However, those methods have neglected the review-oriented way of writing a text, even though it is known that these reviews have a strong influence over users' decisions. In this paper, we propose a method for the automatic generation of natural language explanations, for predicting how a user would write about an item, based on user ratings from different items' features. We design a character-level recurrent neural network (RNN) model, which generates an item's review explanations using long short-term memory (LSTM). The model generates text reviews given a combination of the review and ratings score that express opinions about different factors or aspects of an item. Our network is trained on a sub-sample from the large real-world dataset BeerAdvocate. Our empirical evaluation using natural language processing metrics shows the generated text's quality is close to a real user-written review, identifying negation, misspellings, and domain-specific vocabulary.
