Peter Dolog

The Limits of Graph Samplers for Training Inductive Recommender Systems: Extended results

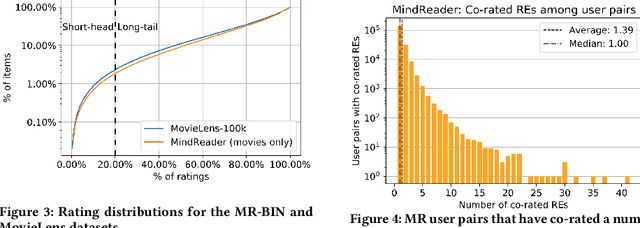

May 20, 2025

Abstract: Inductive recommender systems can produce recommendations for new users and new items, avoiding the need to retrain when new data reaches the system. However, these methods are still trained on all the available data, requiring multiple days to train a single model, not counting hyperparameter tuning. In this work, we focus on graph-based recommender systems, i.e., systems that model the data as a heterogeneous network. In other applications, graph sampling makes it possible to study a subgraph and generalize the findings to the original graph. Thus, we investigate the applicability of sampling techniques for this task. We test on three real-world datasets, with three state-of-the-art inductive methods and six different sampling methods. We find that it is possible to maintain performance using only 50% of the training data with up to an 86% decrease in training time; however, using less training data leads to far worse performance. Further, we find that when it comes to data for recommendations, graph sampling should also account for the temporal dimension. Therefore, we find that if higher data reduction is needed, new graph-based sampling techniques should be studied and new inductive methods should be designed.
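To make the idea of training on a 50% sample concrete, here is a minimal sketch of two ways to subsample an interaction graph. The abstract does not name the six samplers that were evaluated, so both functions below are illustrative assumptions and not the paper's methods: `sample_uniform` keeps a random fraction of edges, while `sample_most_recent` keeps the newest edges, as one simple way to account for the temporal dimension. The `(user, item, timestamp)` layout and all names are hypothetical.

```python
import random
from typing import List, Tuple

# Hypothetical edge layout: (user_id, item_id, timestamp).
Interaction = Tuple[str, str, int]


def sample_uniform(
    interactions: List[Interaction], fraction: float, seed: int = 0
) -> List[Interaction]:
    """Keep a uniformly random fraction of the interaction edges."""
    rng = random.Random(seed)
    k = int(len(interactions) * fraction)
    return rng.sample(interactions, k)


def sample_most_recent(
    interactions: List[Interaction], fraction: float
) -> List[Interaction]:
    """Keep the most recent fraction of edges, ordered by timestamp."""
    k = int(len(interactions) * fraction)
    if k == 0:
        return []
    return sorted(interactions, key=lambda edge: edge[2])[-k:]


# Toy data, invented for illustration only.
interactions = [
    ("u1", "i1", 1),
    ("u1", "i2", 5),
    ("u2", "i1", 2),
    ("u2", "i3", 8),
    ("u3", "i2", 3),
    ("u3", "i4", 9),
]

half_random = sample_uniform(interactions, 0.5)
half_recent = sample_most_recent(interactions, 0.5)
```

Uniform edge sampling ignores when an interaction happened, whereas the time-based variant keeps only recent behavior; comparing the two on the same data is one way to probe how much the temporal dimension matters.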

Simple and Powerful Architecture for Inductive Recommendation Using Knowledge Graph Convolutions

Sep 13, 2022

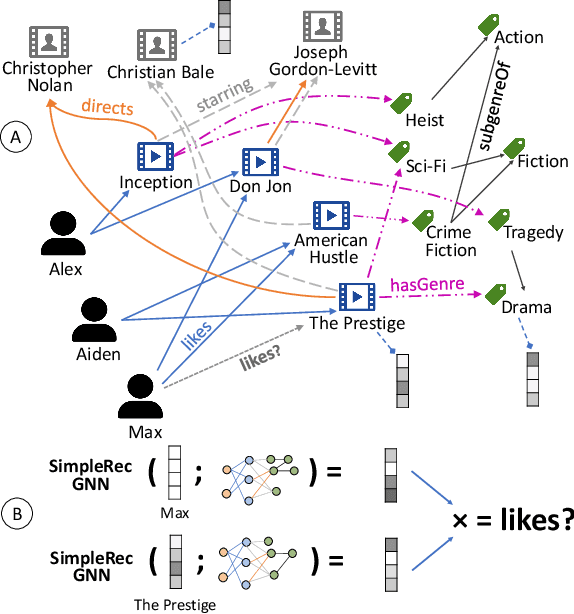

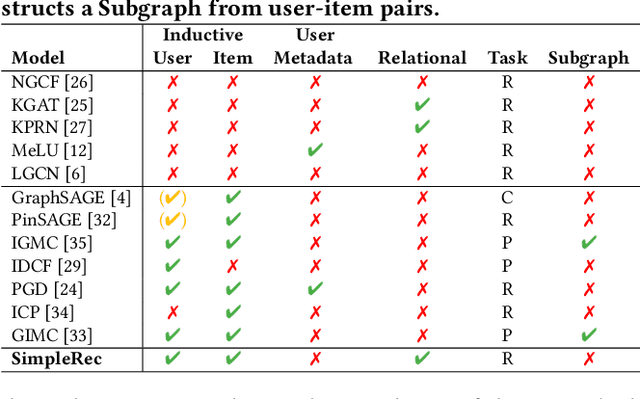

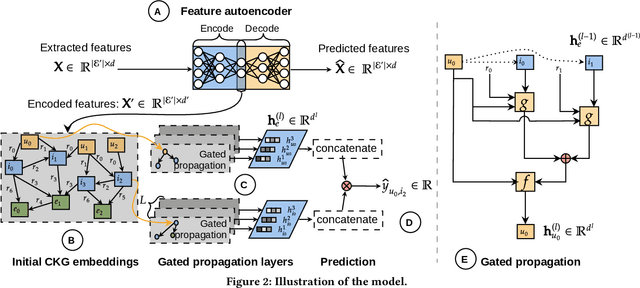

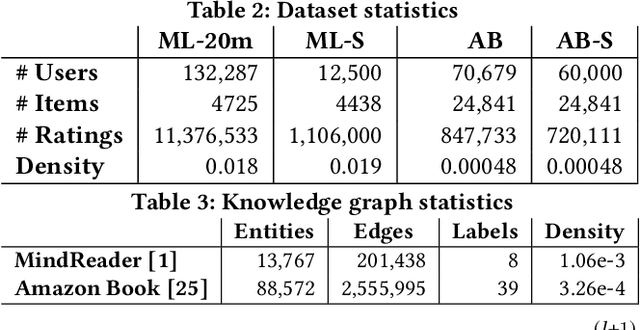

Abstract: Using graph models with relational information in recommender systems has shown promising results. Yet, most methods are transductive, i.e., they are based on dimensionality reduction architectures. Hence, they require heavy retraining every time new items or users are added. Conversely, inductive methods promise to solve these issues. Nonetheless, all inductive methods rely only on interactions, making recommendations for users with few interactions sub-optimal and even impossible for new items. Therefore, we focus on inductive methods able to also exploit knowledge graphs (KGs). In this work, we propose SimpleRec, a strong baseline that uses a graph neural network and a KG to provide better recommendations than related inductive methods for new users and items. We show that it is unnecessary to create complex model architectures for user representations, but it is enough to allow users to be represented by the few ratings they provide and the indirect connections among them without any user metadata. As a result, we re-evaluate state-of-the-art methods, identify better evaluation protocols, highlight unwarranted conclusions from previous proposals, and showcase a novel, stronger baseline for this task.

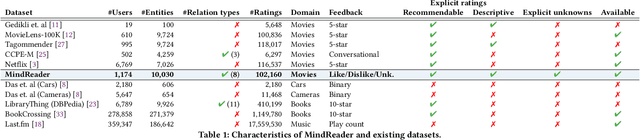

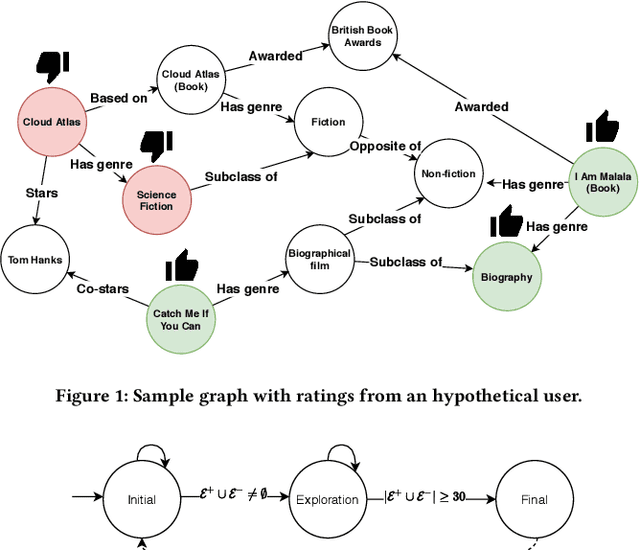

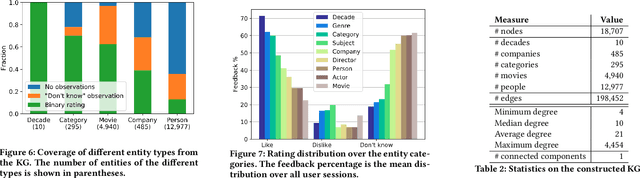

MindReader: Recommendation over Knowledge Graph Entities with Explicit User Ratings

Jun 08, 2021

Abstract: Knowledge Graphs (KGs) have been integrated in several models of recommendation to augment the informational value of an item by means of its related entities in the graph. Yet, existing datasets only provide explicit ratings on items and no information is provided about user opinions of other (non-recommendable) entities. To overcome this limitation, we introduce a new dataset, called MindReader, providing explicit user ratings both for items and for KG entities. In this first version, the MindReader dataset provides more than 102 thousand explicit ratings collected from 1,174 real users on both items and entities from a KG in the movie domain. This dataset has been collected through an online interview application that we also release as open source. As a demonstration of the importance of this new dataset, we present a comparative study of the effect of the inclusion of ratings on non-item KG entities in a variety of state-of-the-art recommendation models. In particular, we show that most models, whether designed specifically for graph data or not, see improvements in recommendation quality when trained on explicit non-item ratings. Moreover, for some models, we show that non-item ratings can effectively replace item ratings without loss of recommendation quality. This finding, thanks also to an observed greater familiarity of users with common KG entities than with long-tail items, motivates the use of KG entities for both warm and cold-start recommendations.

Improving Explainable Recommendations with Synthetic Reviews

Jul 18, 2018

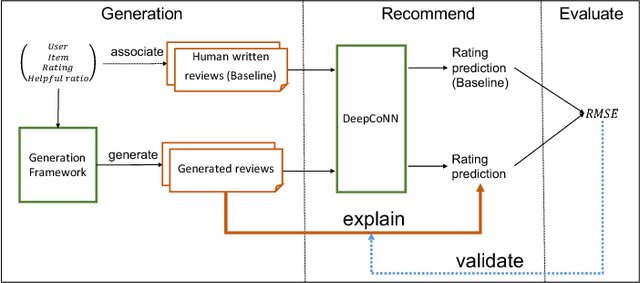

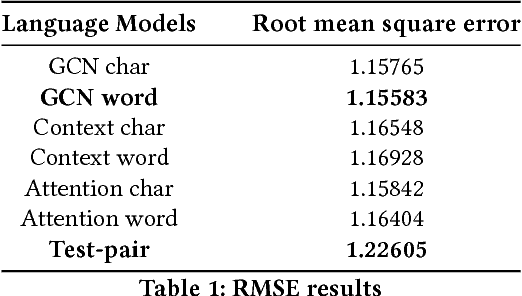

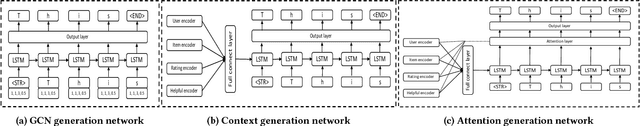

Abstract: An important task for a recommender system is to provide interpretable explanations for the user. This is important for the credibility of the system. Current interpretable recommender systems tend to focus on certain features known to be important to the user and offer their explanations in a structured form. It is well known that user-generated reviews and feedback from reviewers have strong leverage over users' decisions. On the other hand, recent work on text generation has been shown to produce text of similar quality to human-written text, and we aim to show that generated text can be successfully used to explain recommendations. In this paper, we propose a framework consisting of popular review-oriented generation models aiming to create personalised explanations for recommendations. The interpretations are generated at both character and word levels. We build a dataset containing reviewers' feedback from the Amazon books review dataset. Our cross-domain experiments are designed to bridge from natural language processing to the recommender system domain. Besides language model evaluation methods, we employ DeepCoNN, a novel review-oriented recommender system using a deep neural network, to evaluate the recommendation performance of generated reviews by root mean square error (RMSE). We demonstrate that the synthetic personalised reviews have better recommendation performance than human-written reviews. To our knowledge, this presents the first machine-generated natural language explanations for rating prediction.
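For reference, the RMSE metric mentioned above is the square root of the mean squared difference between predicted and observed ratings. The sketch below is a plain-Python illustration; the function name `rmse` and the toy ratings are assumptions for the example and are not taken from the paper or from DeepCoNN.

```python
import math
from typing import Sequence


def rmse(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Root mean square error between predicted and observed ratings."""
    if len(predicted) != len(actual) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Toy ratings, invented for illustration only.
predicted_ratings = [4.0, 2.0]
observed_ratings = [2.0, 4.0]
error = rmse(predicted_ratings, observed_ratings)
```

A lower RMSE means the predicted ratings are closer to the observed ones, which is the sense in which the abstract compares synthetic and human-written reviews.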

Automatic Generation of Natural Language Explanations

Jul 04, 2017

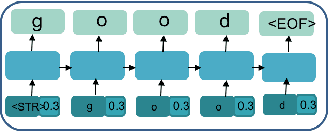

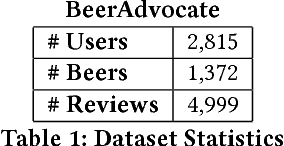

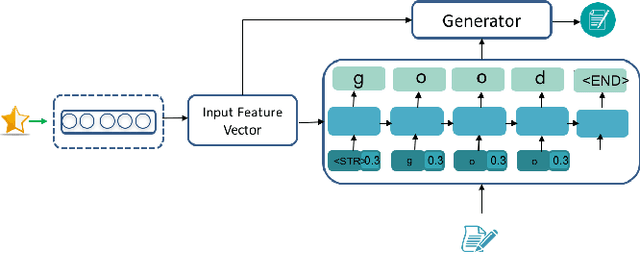

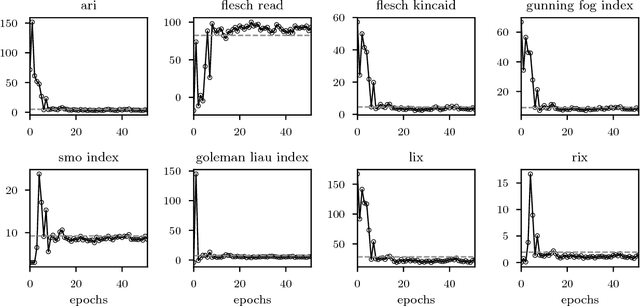

Abstract: An important task for a recommender system is to generate explanations according to a user's preferences. Most of the current methods for explainable recommendations use structured sentences to provide descriptions along with the recommendations they produce. However, those methods have neglected the review-oriented way of writing a text, even though it is known that these reviews have a strong influence over users' decisions. In this paper, we propose a method for the automatic generation of natural language explanations, for predicting how a user would write about an item, based on user ratings from different items' features. We design a character-level recurrent neural network (RNN) model, which generates an item's review explanations using long short-term memory (LSTM). The model generates text reviews given a combination of the review and rating scores that express opinions about different factors or aspects of an item. Our network is trained on a sub-sample from the large real-world dataset BeerAdvocate. Our empirical evaluation using natural language processing metrics shows that the generated text's quality is close to that of a real user-written review, identifying negation, misspellings, and domain-specific vocabulary.
