Siqing Li

Multivariate Gaussian Representation Learning for Medical Action Evaluation

Nov 13, 2025

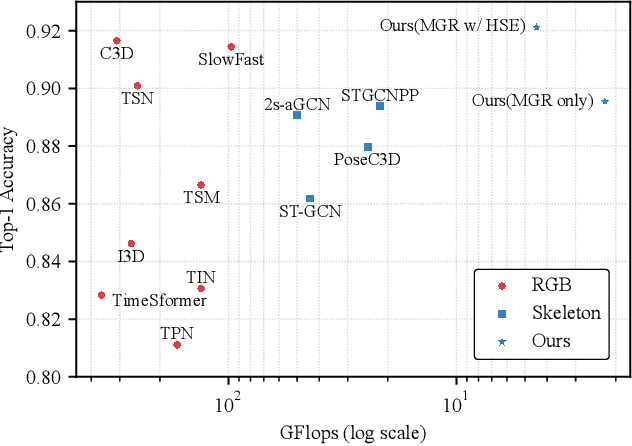

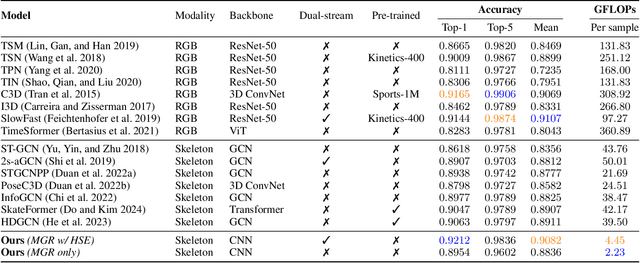

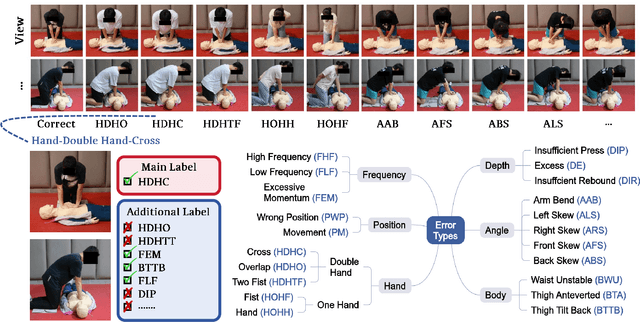

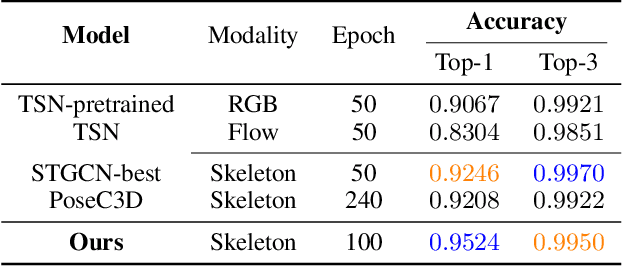

Abstract: Fine-grained action evaluation in medical vision faces unique challenges due to the unavailability of comprehensive datasets, stringent precision requirements, and insufficient spatiotemporal dynamic modeling of very rapid actions. To support development and evaluation, we introduce CPREval-6k, a multi-view, multi-label medical action benchmark containing 6,372 expert-annotated videos with 22 clinical labels. Using this dataset, we present GaussMedAct, a multivariate Gaussian encoding framework, to advance medical motion analysis through adaptive spatiotemporal representation learning. Multivariate Gaussian Representation projects the joint motions to a temporally scaled multi-dimensional space, and decomposes actions into adaptive 3D Gaussians that serve as tokens. These tokens preserve motion semantics through anisotropic covariance modeling while maintaining robustness to spatiotemporal noise. Hybrid Spatial Encoding, employing a Cartesian and Vector dual-stream strategy, effectively utilizes skeletal information in the form of joint and bone features. The proposed method achieves 92.1% Top-1 accuracy with real-time inference on the benchmark, outperforming the ST-GCN baseline by +5.9% accuracy with only 10% FLOPs. Cross-dataset experiments confirm the superiority of our method in robustness.
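
As a rough illustration of the idea of turning a joint's motion into a 3D Gaussian with an anisotropic covariance, the sketch below fits a mean and a full covariance matrix to one joint's (x, y, scaled t) samples. This is not the GaussMedAct implementation: the function name `gaussian_token`, the `time_scale` parameter, and the use of a single Gaussian per trajectory segment are assumptions made only for this example.

```python
from typing import List, Sequence, Tuple

Vector3 = Tuple[float, float, float]
Matrix3 = List[List[float]]


def gaussian_token(
    xs: Sequence[float],
    ys: Sequence[float],
    ts: Sequence[float],
    time_scale: float = 1.0,
) -> Tuple[Vector3, Matrix3]:
    """Fit one 3D Gaussian (mean, covariance) to a joint trajectory.

    Hypothetical helper: time is rescaled by `time_scale` so that it is
    comparable to the spatial axes before the Gaussian is fitted.
    """
    if not (len(xs) == len(ys) == len(ts)):
        raise ValueError("xs, ys and ts must have the same length")
    if len(xs) < 2:
        raise ValueError("need at least two samples to estimate a covariance")

    # Project each sample into the temporally scaled (x, y, t) space.
    points = [(x, y, time_scale * t) for x, y, t in zip(xs, ys, ts)]
    n = len(points)

    mean = tuple(sum(p[d] for p in points) / n for d in range(3))

    # Full (anisotropic) sample covariance: off-diagonal terms are kept,
    # so the Gaussian can be elongated along the direction of motion.
    cov = [
        [
            sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in points) / (n - 1)
            for j in range(3)
        ]
        for i in range(3)
    ]
    return mean, cov


if __name__ == "__main__":
    mean, cov = gaussian_token([0.0, 1.0, 2.0], [0.0, 0.0, 0.0], [0.0, 1.0, 2.0], time_scale=0.5)
    print(mean)
    print(cov)
```

In this toy trajectory the joint moves only along x over time, so the covariance has a non-zero x-t term and zero variance along y, which is the kind of directional structure an isotropic Gaussian would discard.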

LAMP: Learnable Meta-Path Guided Adversarial Contrastive Learning for Heterogeneous Graphs

Sep 10, 2024

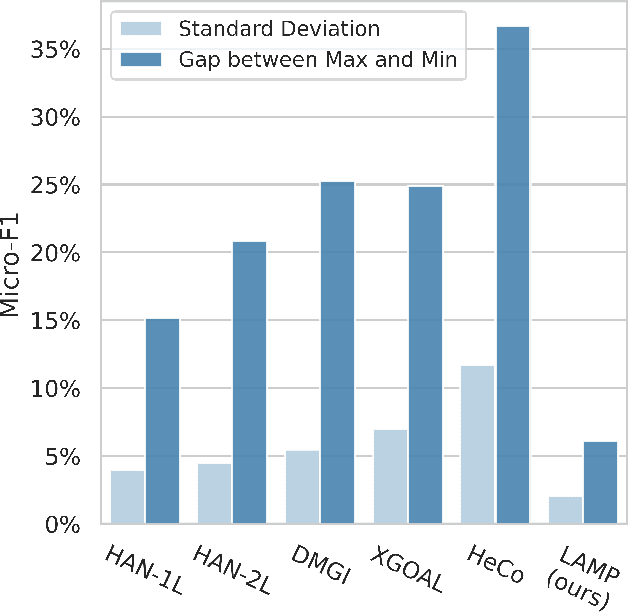

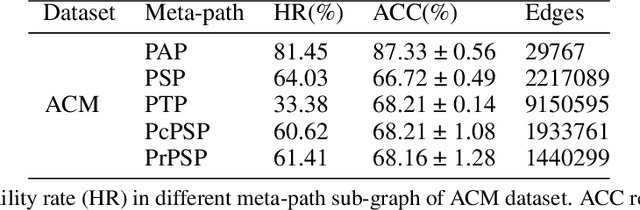

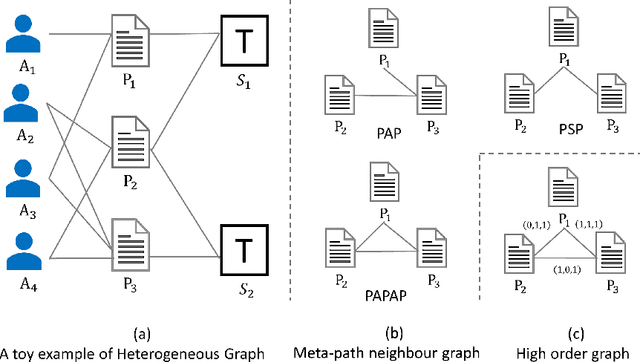

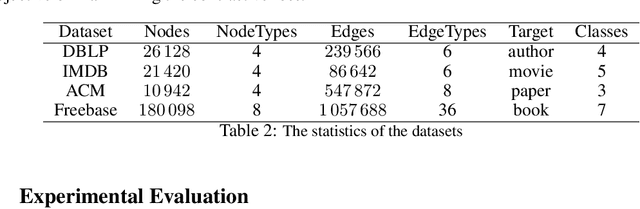

Abstract: Heterogeneous graph neural networks (HGNNs) have significantly propelled the information retrieval (IR) field. Still, the effectiveness of HGNNs heavily relies on high-quality labels, which are often expensive to acquire. This challenge has shifted attention towards Heterogeneous Graph Contrastive Learning (HGCL), which usually requires pre-defined meta-paths. However, our findings reveal that meta-path combinations significantly affect performance in unsupervised settings, an aspect often overlooked in current literature. Existing HGCL methods have considerable variability in outcomes across different meta-path combinations, thereby challenging the optimization process to achieve consistent and high performance. In response, we introduce LAMP (LearnAble Meta-Path), a novel adversarial contrastive learning approach that integrates various meta-path sub-graphs into a unified and stable structure, leveraging the overlap among these sub-graphs. To address the denseness of this integrated sub-graph, we propose an adversarial training strategy for edge pruning, maintaining sparsity to enhance model performance and robustness. LAMP aims to maximize the difference between meta-path and network schema views for guiding contrastive learning to capture the most meaningful information. Our extensive experimental study conducted on four diverse datasets from the Heterogeneous Graph Benchmark (HGB) demonstrates that LAMP significantly outperforms existing state-of-the-art unsupervised models in terms of accuracy and robustness.

Revisiting Attention Weights as Interpretations of Message-Passing Neural Networks

Jun 07, 2024

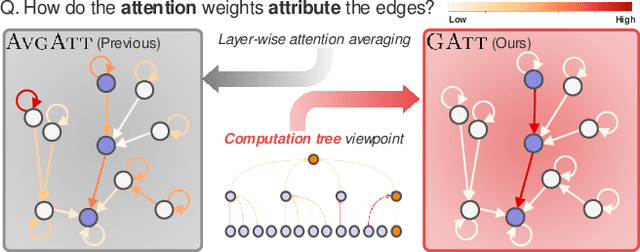

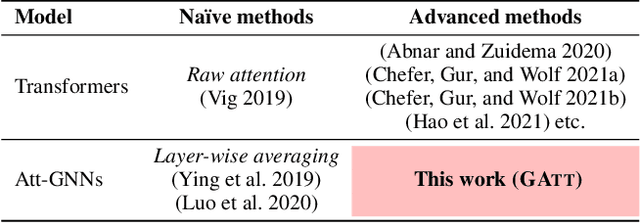

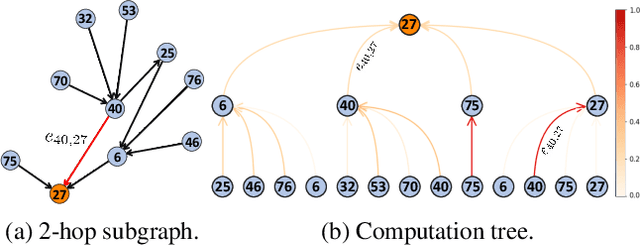

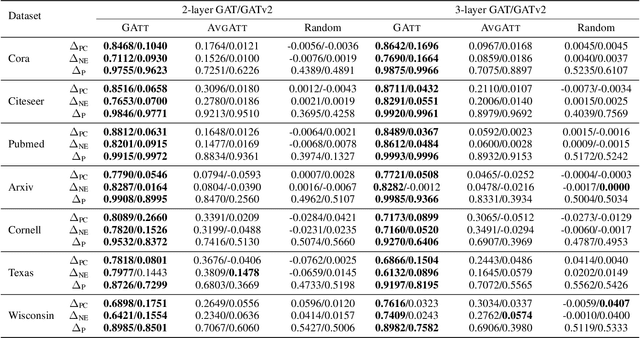

Abstract: The self-attention mechanism has been adopted in several widely-used message-passing neural networks (MPNNs) (e.g., GATs), which adaptively controls the amount of information that flows along the edges of the underlying graph. This usage of attention has made such models a baseline for studies on explainable AI (XAI) since interpretations via attention have been popularized in various domains (e.g., natural language processing and computer vision). However, existing studies often use naive calculations to derive attribution scores from attention, and do not take the precise and careful calculation of edge attribution into consideration. In our study, we aim to fill the gap between the widespread usage of attention-enabled MPNNs and their potential in largely under-explored explainability, a topic that has been actively investigated in other areas. To this end, as the first attempt, we formalize the problem of edge attribution from attention weights in GNNs. Then, we propose GATT, an edge attribution calculation method built upon the computation tree. Through comprehensive experiments, we demonstrate the effectiveness of our proposed method when evaluating attributions from GATs. Conversely, we empirically validate that simply averaging attention weights over graph attention layers is insufficient to interpret the GAT model's behavior. Code is publicly available at https://github.com/jordan7186/GAtt/tree/main.
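
For concreteness, the naive baseline that the abstract reports as insufficient (averaging each edge's attention weight over the attention layers) can be sketched as follows. This is not GATT, whose computation-tree-based calculation is not specified in the abstract; the function name `average_attention` and the dictionary representation of per-layer attention are assumptions for this example only.

```python
from typing import Dict, List, Tuple

Edge = Tuple[int, int]  # (source node, target node)


def average_attention(layers: List[Dict[Edge, float]]) -> Dict[Edge, float]:
    """Naive edge attribution: mean attention weight of each edge over layers.

    Hypothetical helper. Each element of `layers` maps every edge of the
    graph to the attention weight that one attention layer assigned to it.
    """
    if not layers:
        raise ValueError("at least one attention layer is required")

    edges = set(layers[0])
    for layer in layers:
        if set(layer) != edges:
            raise ValueError("all layers must score the same set of edges")

    # Every layer contributes equally, regardless of where the edge sits
    # in the computation tree of the node being explained.
    return {edge: sum(layer[edge] for layer in layers) / len(layers) for edge in edges}


if __name__ == "__main__":
    layer_1 = {(0, 1): 0.2, (2, 1): 0.8}
    layer_2 = {(0, 1): 0.6, (2, 1): 0.4}
    print(average_attention([layer_1, layer_2]))
```

The averaging treats all layers identically, which is one plausible reading of why the abstract argues for a more careful attribution calculation.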

Criteria Tell You More than Ratings: Criteria Preference-Aware Light Graph Convolution for Effective Multi-Criteria Recommendation

Jun 06, 2023

Abstract: The multi-criteria (MC) recommender system, which leverages MC rating information in a wide range of e-commerce areas, is ubiquitous nowadays. Surprisingly, although graph neural networks (GNNs) have been widely applied to develop various recommender systems due to GNNs' high expressive capability in learning graph representations, how to design MC recommender systems with GNNs remains unexplored. In light of this, we make the first attempt towards designing a GNN-aided MC recommender system. Specifically, rather than straightforwardly adopting existing GNN-based recommendation methods, we devise a novel criteria preference-aware light graph convolution (CPA-LGC) method, which is capable of precisely capturing the criteria preference of users as well as the collaborative signal in complex high-order connectivities. To this end, we first construct an MC expansion graph that transforms user-item MC ratings into an expanded bipartite graph to potentially learn from the collaborative signal in MC ratings. Next, to strengthen the capability of criteria preference awareness, CPA-LGC incorporates newly characterized embeddings, including user-specific criteria-preference embeddings and item-specific criterion embeddings, into our graph convolution model. Through comprehensive evaluations using four real-world datasets, we demonstrate (a) the superiority over benchmark MC recommendation methods and benchmark recommendation methods using GNNs with tremendous gains, (b) the effectiveness of core components in CPA-LGC, and (c) the computational efficiency.
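
One way to picture the MC expansion graph is sketched below: every (item, criterion) pair becomes its own node on the item side, and each criterion rating becomes an edge from the user to that node, so the result stays bipartite. The abstract does not give the exact construction, so the node naming, the `build_mc_expansion_graph` function, and the choice to keep the rating value as an edge weight are all assumptions for illustration.

```python
from typing import Dict, Hashable, List, Tuple

UserNode = Tuple[str, Hashable]
ItemCriterionNode = Tuple[str, Hashable, str]
WeightedEdge = Tuple[UserNode, ItemCriterionNode, float]


def build_mc_expansion_graph(
    ratings: Dict[Tuple[Hashable, Hashable], Dict[str, float]],
) -> List[WeightedEdge]:
    """Expand user-item multi-criteria ratings into bipartite edges.

    Hypothetical helper. `ratings` maps (user, item) to a dictionary of
    criterion name -> rating value.
    """
    edges: List[WeightedEdge] = []
    for (user, item), per_criterion in ratings.items():
        for criterion, value in per_criterion.items():
            user_node = ("user", user)
            # One node per (item, criterion) pair on the item side.
            item_node = ("item-criterion", item, criterion)
            edges.append((user_node, item_node, float(value)))
    return edges


if __name__ == "__main__":
    ratings = {
        ("alice", "hotel_1"): {"overall": 4, "cleanliness": 5},
        ("bob", "hotel_1"): {"overall": 3},
    }
    for edge in build_mc_expansion_graph(ratings):
        print(edge)
```

With this layout, two users who rated the same criterion of the same item share a neighbor, which is the kind of collaborative signal a graph convolution can then propagate.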

Knowledge-Enhanced Personalized Review Generation with Capsule Graph Neural Network

Oct 04, 2020

Abstract: Personalized review generation (PRG) aims to automatically produce review text reflecting user preference, which is a challenging natural language generation task. Most previous studies do not explicitly model factual descriptions of products, tending to generate uninformative content. Moreover, they mainly focus on word-level generation, but cannot accurately reflect more abstractive user preference in multiple aspects. To address the above issues, we propose a novel knowledge-enhanced PRG model based on capsule graph neural network (Caps-GNN). We first construct a heterogeneous knowledge graph (HKG) for utilizing rich item attributes. We adopt Caps-GNN to learn graph capsules for encoding underlying characteristics from the HKG. Our generation process contains two major steps, namely aspect sequence generation and sentence generation. First, based on graph capsules, we adaptively learn aspect capsules for inferring the aspect sequence. Then, conditioned on the inferred aspect label, we design a graph-based copy mechanism to generate sentences by incorporating related entities or words from the HKG. To our knowledge, we are the first to utilize a knowledge graph for the PRG task. The incorporated KG information is able to enhance user preference at both aspect and word levels. Extensive experiments on three real-world datasets have demonstrated the effectiveness of our model on the PRG task.
