Zhicheng Wei

Denoising Distantly Supervised Named Entity Recognition via a Hypergeometric Probabilistic Model

Jun 17, 2021

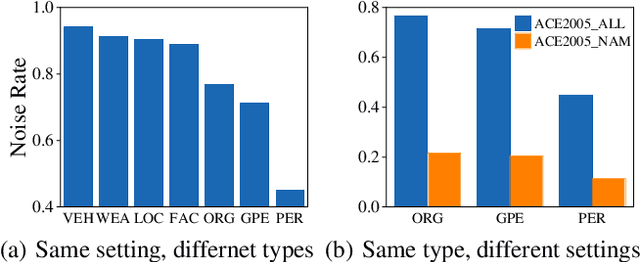

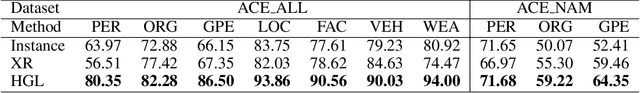

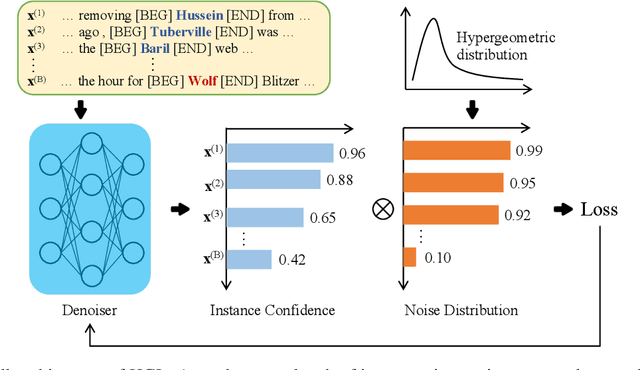

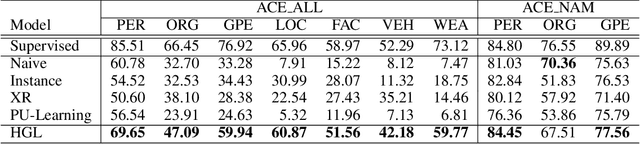

Abstract: Denoising is an essential step in distantly supervised named entity recognition (NER). Previous denoising methods are mostly based on instance-level confidence statistics, which ignore how the underlying noise distribution varies across datasets and entity types. This makes them difficult to adapt to settings with high noise rates. In this paper, we propose Hypergeometric Learning (HGL), a denoising algorithm for distantly supervised NER that takes both the noise distribution and instance-level confidence into consideration. Specifically, during neural network training, we model the noisy samples in each batch as following a hypergeometric distribution parameterized by the noise rate. Each instance in the batch is then regarded as either correct or noisy according to its label confidence derived from the previous training step, as well as the noise distribution in the sampled batch. Experiments show that HGL can effectively denoise the weakly labeled data retrieved from distant supervision, and therefore yields significant improvements in the trained models.
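
The batch-level idea in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the example confidences, and the rule of flagging the lowest-confidence instances are assumptions made for illustration. The only part taken from the abstract is that the number of noisy instances in a batch is treated as hypergeometric, which is what drawing a batch without replacement from a dataset with a fixed share of noisy items produces.

```python
import random


def sample_noisy_count(dataset_size, noise_rate, batch_size, rng):
    """Draw how many noisy instances land in one batch.

    Sampling a batch without replacement from a dataset that holds
    round(noise_rate * dataset_size) noisy items makes this count
    hypergeometric by construction.
    """
    num_noisy = round(noise_rate * dataset_size)
    batch = rng.sample(range(dataset_size), batch_size)
    # Indices below num_noisy stand in for the (hypothetical) noisy items.
    return sum(1 for idx in batch if idx < num_noisy)


def split_batch(confidences, noisy_count):
    """Flag the noisy_count lowest-confidence instances as noisy.

    This thresholding rule is an assumption for illustration only.
    """
    order = sorted(range(len(confidences)), key=lambda i: confidences[i])
    noisy = set(order[:noisy_count])
    return [i in noisy for i in range(len(confidences))]


rng = random.Random(0)
# Hypothetical label confidences carried over from a previous training step.
confidences = [0.95, 0.20, 0.80, 0.55, 0.10, 0.90, 0.35, 0.70]
k = sample_noisy_count(dataset_size=1000, noise_rate=0.3,
                       batch_size=len(confidences), rng=rng)
is_noisy = split_batch(confidences, k)
print(k, is_noisy)
```

Because the count is redrawn for every batch, the number of instances treated as noisy varies from batch to batch instead of being fixed by a single global threshold.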

Few-shot Knowledge Graph-to-Text Generation with Pretrained Language Models

Jun 03, 2021

Abstract: This paper studies how to automatically generate natural language text that describes the facts in a knowledge graph (KG). Considering the few-shot setting, we leverage the strong capacities of pretrained language models (PLMs) in language understanding and generation. We make three major technical contributions: representation alignment for bridging the semantic gap between KG encodings and PLMs, relation-biased KG linearization for deriving better input representations, and multi-task learning for learning the correspondence between KG and text. Extensive experiments on three benchmark datasets demonstrate the effectiveness of our model on the KG-to-text generation task. In particular, our model outperforms all comparison methods in both fully supervised and few-shot settings. Our code and datasets are available at https://github.com/RUCAIBox/Few-Shot-KG2Text.

Knowledge-based Review Generation by Coherence Enhanced Text Planning

May 09, 2021

Abstract: Generating informative and coherent review text is a challenging natural language generation task. To enhance the informativeness of the generated text, existing solutions typically learn to copy entities or triples from knowledge graphs (KGs). However, they give little overall consideration to how the incorporated knowledge is selected and arranged, which tends to cause text incoherence. To address this issue, we focus on improving the entity-centric coherence of the generated reviews by leveraging the semantic structure of KGs. In this paper, we propose a novel Coherence Enhanced Text Planning model (CETP) based on KGs to improve both global and local coherence for review generation. The proposed model learns a two-level text plan for generating a document: (1) the document plan is modeled as an ordered sequence of sentence plans, and (2) each sentence plan is modeled as an entity-based subgraph of the KG. Local coherence can be naturally enforced by KG subgraphs through intra-sentence correlations between entities. For global coherence, we design a hierarchical self-attentive architecture with both subgraph-level and node-level attention to enhance the correlations between subgraphs. To our knowledge, we are the first to utilize a KG-based text planning model to enhance text coherence for review generation. Extensive experiments on three datasets confirm the effectiveness of our model in improving the content coherence of generated texts.
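
The two-level text plan described above can be pictured as a simple data structure. The sketch below shows only the shape of such a plan and is not the CETP model: the class names, fields, helper methods, and the movie entities are all hypothetical, and the learned attention components are omitted entirely.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SentencePlan:
    """One sentence plan: an entity-based subgraph of the KG (hypothetical layout)."""
    entities: frozenset
    edges: tuple  # (head, relation, tail) triples

    def is_valid_subgraph(self):
        # Every edge must connect two entities that belong to this plan.
        return all(h in self.entities and t in self.entities
                   for h, _, t in self.edges)


@dataclass
class DocumentPlan:
    """Document plan: sentence plans kept in generation order."""
    sentence_plans: list = field(default_factory=list)

    def shared_entities(self):
        # Entities shared by consecutive sentence plans, a rough proxy
        # (an assumption here) for links between neighbouring sentences.
        pairs = zip(self.sentence_plans, self.sentence_plans[1:])
        return [a.entities & b.entities for a, b in pairs]


# Hypothetical movie-review example.
s1 = SentencePlan(frozenset({"Inception", "Christopher Nolan"}),
                  (("Inception", "directed_by", "Christopher Nolan"),))
s2 = SentencePlan(frozenset({"Inception", "Hans Zimmer"}),
                  (("Inception", "music_by", "Hans Zimmer"),))
plan = DocumentPlan([s1, s2])
print(plan.shared_entities())
```

In this picture, the edges inside one `SentencePlan` correspond to the intra-sentence entity correlations the abstract mentions, while the ordering and overlap of plans in `DocumentPlan` relate to document-level coherence.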

Knowledge-Enhanced Personalized Review Generation with Capsule Graph Neural Network

Oct 04, 2020

Abstract: Personalized review generation (PRG) aims to automatically produce review text that reflects user preferences, which is a challenging natural language generation task. Most previous studies do not explicitly model the factual description of products and tend to generate uninformative content. Moreover, they mainly focus on word-level generation and cannot accurately reflect more abstract user preferences across multiple aspects. To address these issues, we propose a novel knowledge-enhanced PRG model based on a capsule graph neural network (Caps-GNN). We first construct a heterogeneous knowledge graph (HKG) to utilize rich item attributes. We adopt Caps-GNN to learn graph capsules that encode underlying characteristics from the HKG. Our generation process contains two major steps: aspect sequence generation and sentence generation. First, based on graph capsules, we adaptively learn aspect capsules for inferring the aspect sequence. Then, conditioned on the inferred aspect label, we design a graph-based copy mechanism to generate sentences by incorporating related entities or words from the HKG. To our knowledge, we are the first to utilize a knowledge graph for the PRG task. The incorporated KG information is able to enhance user preference modeling at both the aspect and word levels. Extensive experiments on three real-world datasets demonstrate the effectiveness of our model on the PRG task.
