Silvia Cruciani

Polyhedral Friction Cone Estimator for Object Manipulation

Nov 26, 2020

Abstract: A polyhedral friction cone is a set of reaction wrenches that an object can experience while in contact with its environment. This polyhedron is a powerful tool for controlling an object's motion and its interaction with the environment. It can be derived analytically, given knowledge of the object and environment geometries, contact point locations, and friction coefficients. We propose to estimate the polyhedral friction cone so that a priori knowledge of these quantities is no longer required. Additionally, we introduce a solution to transform the estimated friction cone to avoid re-estimation while the object moves. We present an analysis of the estimated polyhedral friction cone and demonstrate its application for manipulating an object in simulation and with a real robot.
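To illustrate the analytical case mentioned in the abstract, the following is a minimal sketch of a polyhedral approximation of a Coulomb friction cone at a single point contact. It is not the estimator proposed in the paper: it covers contact forces only (not full wrenches), and it assumes a known friction coefficient `mu` and a contact normal along +z. The function names and the `n_edges` parameter are hypothetical.

```python
import math

def friction_cone_generators(mu, n_edges=4):
    """Edge forces of a polyhedral cone inscribed in the Coulomb cone.

    Assumption: the contact normal is +z and each generator has a unit
    normal component.
    """
    gens = []
    for k in range(n_edges):
        theta = 2.0 * math.pi * k / n_edges
        gens.append((mu * math.cos(theta), mu * math.sin(theta), 1.0))
    return gens

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def in_polyhedral_cone(force, mu, n_edges=4, tol=1e-9):
    """Return True if `force` lies inside the polyhedral cone.

    Each face is spanned by two consecutive generators; their cross
    product (in counter-clockwise order) is an inward-pointing normal
    when n_edges >= 3.
    """
    gens = friction_cone_generators(mu, n_edges)
    for k in range(n_edges):
        normal = _cross(gens[k], gens[(k + 1) % n_edges])
        if _dot(normal, force) < -tol:
            return False
    return True
```

With four edges the approximation is conservative: a force such as `(0.3, 0.3, 1.0)` with `mu = 0.5` satisfies the exact Coulomb condition but falls outside the inscribed pyramid. Increasing `n_edges` tightens the approximation.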

Benchmarking In-Hand Manipulation

Jan 09, 2020

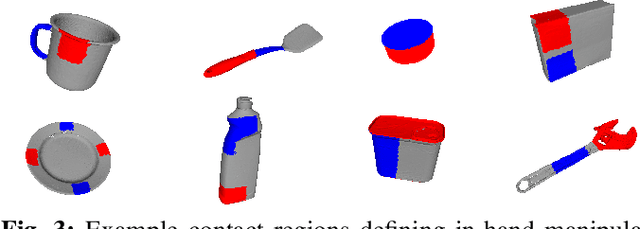

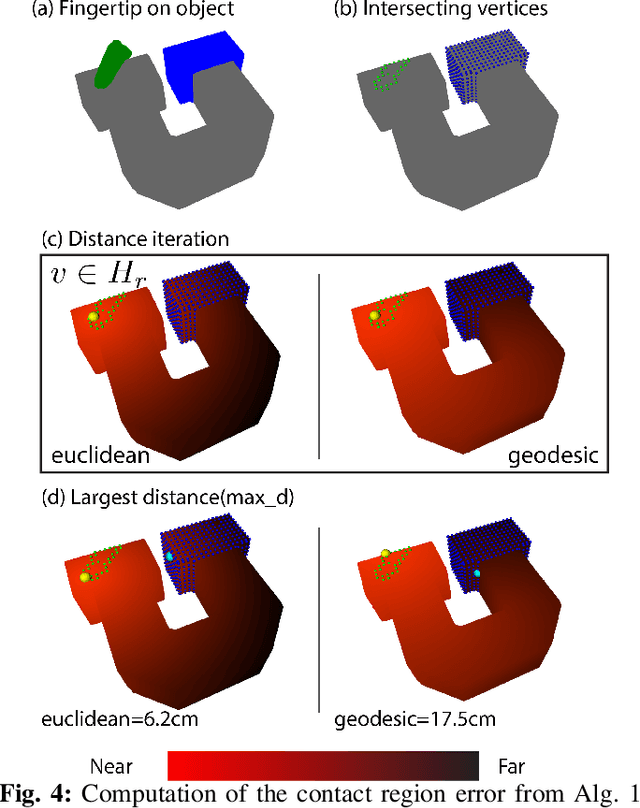

Abstract: The purpose of this benchmark is to evaluate the planning and control aspects of robotic in-hand manipulation systems. The goal is to assess the system's ability to change the pose of a hand-held object by using the fingers, the environment, or a combination of both. Given an object surface mesh from the YCB dataset, we provide examples of initial and goal states (i.e., static object poses and fingertip locations) for various in-hand manipulation tasks. We further propose metrics that measure the error in reaching the goal state from a specific initial state, which, when aggregated across all tasks, also serve as a measure of the system's in-hand manipulation capability. We provide supporting software, task examples, and evaluation results associated with the benchmark. All the supporting material is available at https://robot-learning.cs.utah.edu/project/benchmarking_in_hand_manipulation
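The abstract does not spell out the metrics, so the following is only an illustrative sketch of one common way to measure the error between a reached and a goal object pose: Euclidean distance for position and the rotation angle between unit quaternions for orientation. The function name, the `(w, x, y, z)` quaternion convention, and the choice of metric are assumptions, not the benchmark's definitions.

```python
import math

def pose_error(pos_reached, quat_reached, pos_goal, quat_goal):
    """Return (position error, orientation error in radians).

    Assumptions: positions are 3-tuples, quaternions are unit-norm
    4-tuples in (w, x, y, z) order.
    """
    position_error = math.dist(pos_reached, pos_goal)
    # |q1 . q2| handles the double cover (q and -q are the same rotation);
    # clamp to 1.0 to guard against rounding before acos.
    dot = abs(sum(a * b for a, b in zip(quat_reached, quat_goal)))
    orientation_error = 2.0 * math.acos(min(1.0, dot))
    return position_error, orientation_error
```

Per-task errors of this kind could then be averaged across tasks to obtain an aggregate score.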

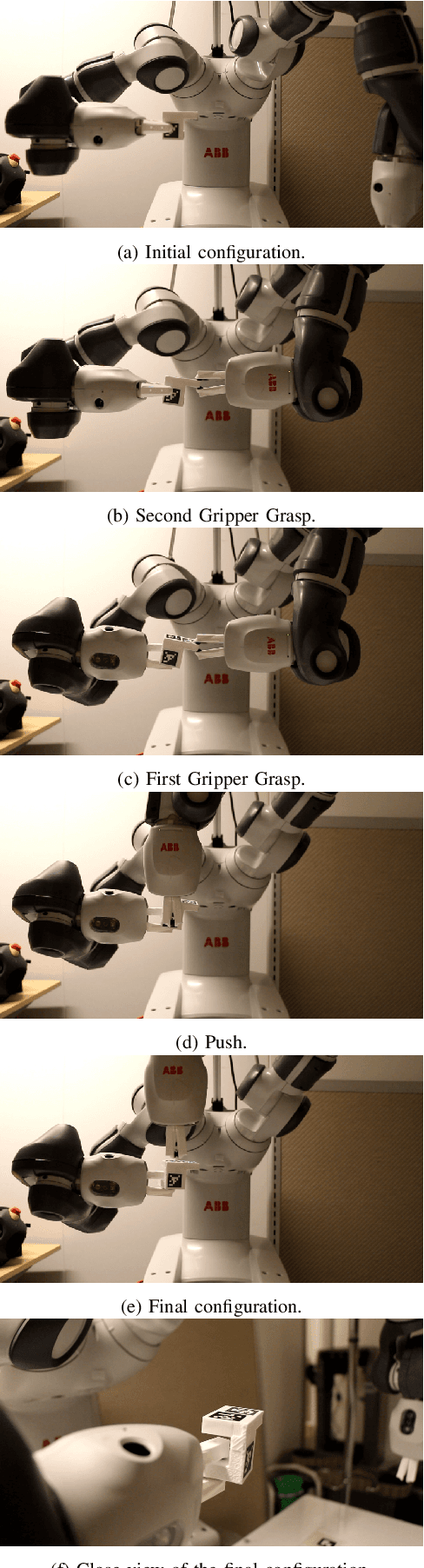

Dual-Arm In-Hand Manipulation and Regrasping Using Dexterous Manipulation Graphs

May 16, 2019

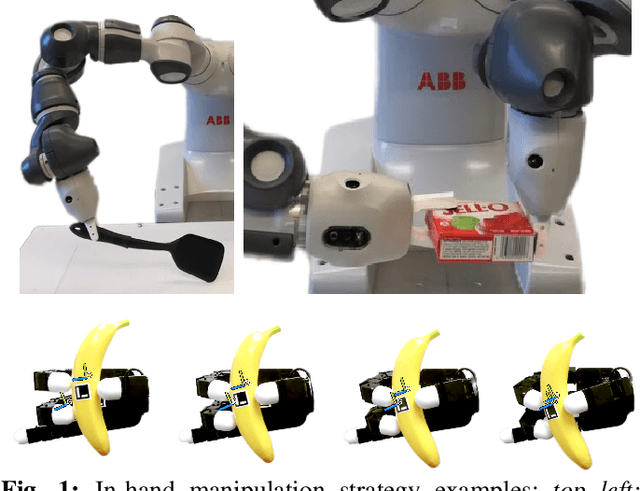

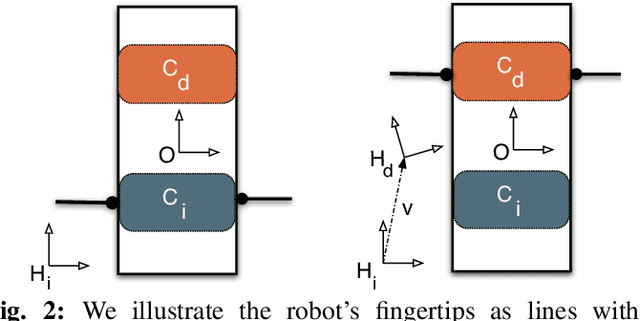

Abstract: This work focuses on the problem of in-hand manipulation and regrasping of objects with parallel grippers. We propose the Dexterous Manipulation Graph (DMG) as a representation on which we define planning for in-hand manipulation and regrasping. The DMG is a disconnected undirected graph that represents the possible motions of a finger along the object's surface. We formulate the in-hand manipulation and regrasping problem as a graph search problem from the initial to the final configuration. The resulting plan is a sequence of coordinated in-hand pushing and regrasping movements. We propose a dual-arm system for the execution of the sequence, in which both hands are used interchangeably. We demonstrate our approach on an ABB Yumi robot tasked with different grasp reconfigurations.
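The graph-search formulation can be sketched with a breadth-first search over a toy undirected graph. This is not the paper's planner: the node labels and adjacency structure are hypothetical, and a real DMG node would carry contact and orientation information. The sketch only shows how a search inside one connected component yields a sequence of finger motions, while a goal in a different component yields no path, which is where a regrasp would be required.

```python
from collections import deque

def shortest_path(graph, start, goal):
    """Breadth-first search on an undirected graph given as an adjacency dict.

    Returns the list of nodes from start to goal, or None if the goal
    lies in a different connected component.
    """
    if start == goal:
        return [start]
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, ()):
            if neighbor not in parents:
                parents[neighbor] = node
                if neighbor == goal:
                    # Walk back through the parents to rebuild the path.
                    path = [goal]
                    while parents[path[-1]] is not None:
                        path.append(parents[path[-1]])
                    return path[::-1]
                queue.append(neighbor)
    return None

# Hypothetical graph with two disconnected components: {a, b, c} and {d, e}.
toy_graph = {
    "a": ["b"],
    "b": ["a", "c"],
    "c": ["b"],
    "d": ["e"],
    "e": ["d"],
}
```

Here `shortest_path(toy_graph, "a", "c")` stays inside one component, whereas `shortest_path(toy_graph, "a", "e")` returns `None`, signalling that sliding alone cannot reach the goal.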

Dexterous Manipulation Graphs

Mar 01, 2018

Abstract: We propose the Dexterous Manipulation Graph as a tool to address in-hand manipulation and to reposition an object inside a robot's end-effector. This graph is used to plan a sequence of manipulation primitives so as to bring the object to the desired end pose. This sequence of primitives is translated into motions of the robot to move the object held by the end-effector. We use a dual-arm robot with parallel grippers to test our method on a real system and show successful planning and execution of in-hand manipulation.

Unlocking the Potential of Simulators: Design with RL in Mind

Jun 08, 2017

Abstract: Using Reinforcement Learning (RL) in simulation to construct policies useful in real life is challenging. This is often attributed to the sequential decision-making aspect: inaccuracies in simulation accumulate over multiple steps, hence the simulated trajectories diverge from what would happen in reality. In our work we show the need to consider another important aspect: the mismatch in simulating control. We bring attention to the need for modeling control as well as dynamics, since oversimplifying assumptions about applying actions of RL policies could make the policies fail on real-world systems. We design a simulator for solving a pivoting task (of interest in Robotics) and demonstrate that even a simple simulator designed with RL in mind outperforms high-fidelity simulators when it comes to learning a policy that is to be deployed on a real robotic system. We show that friction, a phenomenon that is hard to model, could be exploited successfully, even when RL is performed using a simulator with a simple dynamics and noise model. Hence, we demonstrate that as long as the main sources of uncertainty are identified, it could be possible to learn policies applicable to real systems even using a simple simulator. RL-compatible simulators could open the possibilities for applying a wide range of RL algorithms in various fields. This is important, since currently data sparsity in fields like healthcare and education frequently forces researchers and engineers to only consider sample-efficient RL approaches. Successful simulator-aided RL could increase the flexibility of experimenting with RL algorithms and help apply RL policies to real-world settings in fields where data is scarce. We believe that lessons learned in Robotics could help other fields design RL-compatible simulators, so we summarize our experience and conclude with suggestions.

Reinforcement Learning for Pivoting Task

Mar 01, 2017

Abstract: In this work we propose an approach to learn a robust policy for solving the pivoting task. Recently, several model-free continuous control algorithms were shown to learn successful policies without prior knowledge of the dynamics of the task. However, obtaining successful policies required thousands to millions of training episodes, limiting the applicability of these approaches to real hardware. We developed a training procedure that allows us to use a simple custom simulator to learn policies robust to the mismatch between simulation and the real robot. In our experiments, we demonstrate that the policy learned in the simulator is able to pivot the object to the desired target angle on the real robot. We also show generalization to an object with different inertia, shape, mass and friction properties than those used during training. This result is a step towards making model-free reinforcement learning available for solving robotics tasks via pre-training in simulators that offer only an imprecise match to the real-world dynamics.
