Shulong Li

Predicting Lung Nodule Malignancies by Combining Deep Convolutional Neural Network and Handcrafted Features

Sep 07, 2018

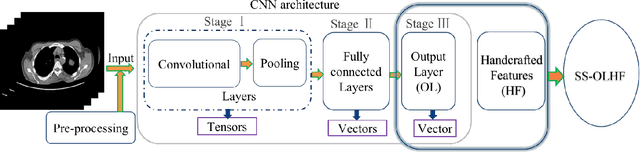

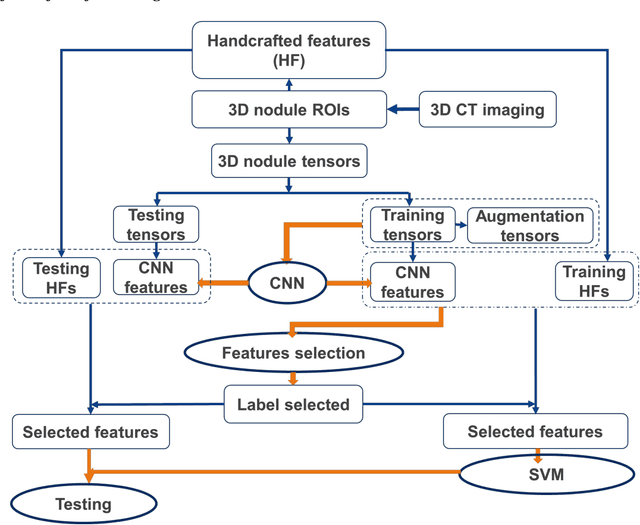

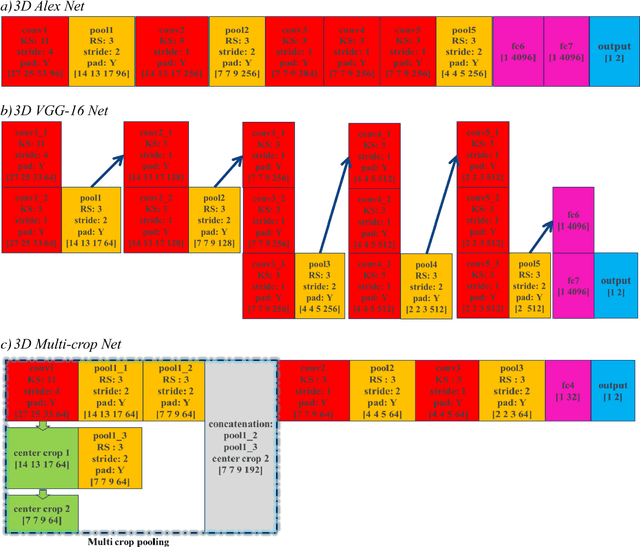

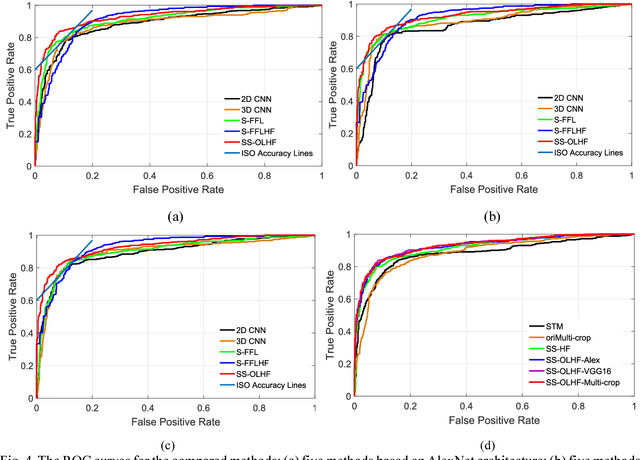

Abstract: To predict lung nodule malignancy with high sensitivity and specificity, we propose a fusion algorithm that combines handcrafted features (HF) with the features learned at the output layer of a 3D deep convolutional neural network (CNN). First, we extracted twenty-nine handcrafted features: nine intensity features, eight geometric features, and twelve texture features based on the grey-level co-occurrence matrix (GLCM) averaged over thirteen directions. We then trained 3D CNNs modified from three state-of-the-art 2D CNN architectures (AlexNet, VGG-16 Net and Multi-crop Net) to extract the CNN features learned at the output layer. For each 3D CNN, the CNN features combined with the 29 handcrafted features were used as the input to a support vector machine (SVM) coupled with the sequential forward feature selection (SFS) method, which selects the optimal feature subset and constructs the classifier. The fusion algorithm takes full advantage of both the handcrafted features and the highest-level CNN features learned at the output layer. By adding the intrinsic CNN features, it overcomes a weakness of handcrafted features, which may not fully reflect the unique characteristics of a particular lesion. At the same time, the complementary handcrafted features reduce the CNNs' need for a large-scale annotated dataset. The patient cohort includes 431 malignant nodules and 795 benign nodules extracted from the LIDC/IDRI database. For each investigated CNN architecture, the proposed fusion algorithm achieved the highest AUC, accuracy, sensitivity, and specificity scores among all competing classification models.
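The fusion and selection step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the toy data, the function names `fuse`, `loo_accuracy`, and `sequential_forward_selection`, and the stopping rule are all assumptions made for illustration, and a nearest-centroid classifier scored by leave-one-out accuracy stands in for the SVM so that the example needs only the standard library.

```python
def fuse(cnn_features, handcrafted_features):
    """Concatenate per-nodule CNN features with handcrafted features."""
    return [list(c) + list(h) for c, h in zip(cnn_features, handcrafted_features)]


def loo_accuracy(X, y, cols):
    """Leave-one-out accuracy of a nearest-centroid classifier on columns `cols`.

    Stand-in for the SVM in the abstract (an assumption, chosen for brevity).
    """
    correct = 0
    for i in range(len(X)):
        centroids = {}
        for label in sorted(set(y)):
            rows = [X[k] for k in range(len(X)) if k != i and y[k] == label]
            centroids[label] = [sum(r[c] for r in rows) / len(rows) for c in cols]

        def dist(label):
            return sum((X[i][c] - m) ** 2 for c, m in zip(cols, centroids[label]))

        # Ties go to the lowest label because min() keeps the first minimum.
        predicted = min(sorted(centroids), key=dist)
        correct += predicted == y[i]
    return correct / len(X)


def sequential_forward_selection(X, y, score, max_features):
    """Greedily add the column that most improves `score`; stop when none does."""
    selected, best = [], float("-inf")
    remaining = list(range(len(X[0])))
    while remaining and len(selected) < max_features:
        # Prefer the higher score; on ties prefer the lower column index.
        top_score, top_col = max(
            ((score(X, y, selected + [j]), j) for j in remaining),
            key=lambda t: (t[0], -t[1]),
        )
        if top_score <= best:
            break
        selected.append(top_col)
        remaining.remove(top_col)
        best = top_score
    return selected, best


# Toy data (hypothetical): one CNN output feature and two handcrafted features.
cnn = [[0], [1], [2], [2], [3], [4]]
handcrafted = [[2, 5], [3, 1], [4, 3], [0, 3], [1, 5], [2, 1]]
labels = [0, 0, 0, 1, 1, 1]  # 0 = benign, 1 = malignant

X_fused = fuse(cnn, handcrafted)
selected, score = sequential_forward_selection(X_fused, labels, loo_accuracy, 3)
print(selected, score)
```

In this toy set neither the CNN column nor the first handcrafted column separates the classes on its own, but the pair does, which is the kind of complementarity the abstract appeals to. The third column is noise and is left out by the selection.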

Automatic multi-objective based feature selection for classification

Jul 24, 2018

Abstract: Accurately classifying the malignancy of lesions detected in a screening scan is critical for reducing false positives. Radiomics holds great potential to differentiate malignant from benign tumors by extracting and analyzing a large number of quantitative image features. Since not all radiomic features contribute to an effective classification model, selecting an optimal feature subset is critical. This work proposes a new multi-objective based feature selection (MO-FS) algorithm that uses sensitivity and specificity simultaneously as the objective functions during feature selection. For MO-FS, we developed a modified entropy based termination criterion (METC) that stops the algorithm automatically rather than relying on a preset number of generations. We also designed a solution selection methodology for multi-objective learning that uses the evidential reasoning approach (SMOLER) to automatically select the optimal solution from the Pareto-optimal set. Furthermore, we developed an adaptive mutation operation that generates the mutation probability in MO-FS automatically. We evaluated MO-FS for classifying lung nodule malignancy in low-dose CT and breast lesion malignancy in digital breast tomosynthesis. The experimental results demonstrated that the feature set selected by MO-FS achieved better classification performance than features selected by other commonly used methods.
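To make the two objectives and the Pareto-optimal set concrete, here is a small sketch. It covers only the generic definitions of sensitivity, specificity, and non-dominance; METC, SMOLER, and the adaptive mutation operation are not reproduced because the abstract does not specify them. The function names and the candidate scores are hypothetical.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, 1 = malignant."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    return tp / (tp + fn), tn / (tn + fp)


def pareto_front(points):
    """Indices of (sensitivity, specificity) pairs that no other pair dominates.

    A pair q dominates p when q is at least as good on both objectives
    and strictly better on at least one.
    """
    front = []
    for i, p in enumerate(points):
        dominated = any(
            q[0] >= p[0] and q[1] >= p[1] and (q[0] > p[0] or q[1] > p[1])
            for j, q in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append(i)
    return front


# Hypothetical objective values for five candidate feature subsets.
candidates = [(0.9, 0.6), (0.8, 0.8), (0.7, 0.7), (0.6, 0.9), (0.9, 0.5)]
front = pareto_front(candidates)
sens, spec = sensitivity_specificity([1, 1, 1, 0, 0], [1, 1, 0, 0, 1])
print(front, sens, spec)
```

Every subset on the front is a defensible trade-off between the two objectives, which is why a separate step is needed to pick a single solution from it.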

Constructing multi-modality and multi-classifier radiomics predictive models through reliable classifier fusion

Oct 05, 2017

Abstract: Radiomics aims to extract and analyze large numbers of quantitative features from medical images and is highly promising in staging, diagnosing, and predicting outcomes of cancer treatments. Nevertheless, several challenges need to be addressed to construct an optimal radiomics predictive model. First, the predictive performance of the model may be reduced when features extracted from individual imaging modalities are blindly combined into a single predictive model. Second, because many different types of classifiers are available to construct a predictive model, selecting an optimal classifier for a particular application is still challenging. In this work, we developed multi-modality and multi-classifier radiomics predictive models that address these issues in currently available models. Specifically, we proposed a new reliable classifier fusion strategy to optimally combine output from different modalities and classifiers. In this strategy, modality-specific classifiers were first trained, and an analytic evidential reasoning (ER) rule was developed to fuse the output score from each modality to construct an optimal predictive model. Model performance was validated on one public data set and in two clinical case studies. The experimental results indicated that the proposed ER rule based radiomics models outperformed the traditional models that rely on a single classifier or simply use combined features from different modalities.
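The difference between fusing scores and concatenating features can be sketched as follows. This is not the analytic ER rule, which the abstract does not spell out: a reliability-weighted average is used here as a plainly simpler stand-in, and the modality names, scores, and reliability weights are hypothetical.

```python
def fuse_scores(scores, reliabilities):
    """Reliability-weighted average of per-modality output scores.

    A simplified stand-in for score-level fusion, not the ER rule itself.
    `scores` and `reliabilities` map modality name -> value.
    """
    total = sum(reliabilities[m] for m in scores)
    if total <= 0:
        raise ValueError("reliabilities must sum to a positive value")
    return sum(scores[m] * reliabilities[m] for m in scores) / total


# Each modality-specific classifier is trained separately and emits its own score.
scores = {"modality_a": 0.8, "modality_b": 0.4}
reliabilities = {"modality_a": 0.75, "modality_b": 0.25}

fused = fuse_scores(scores, reliabilities)
print(round(fused, 3))
```

Because each modality keeps its own classifier, a weak modality can be down-weighted at fusion time instead of diluting a single model trained on the combined feature vector.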
