Shoichiro Takeda

IPCD: Intrinsic Point-Cloud Decomposition

Nov 13, 2025

Abstract: Point clouds are widely used in various fields, including augmented reality (AR) and robotics, where relighting and texture editing are crucial for realistic visualization. Achieving these tasks requires accurately separating albedo from shade. However, performing this separation on point clouds presents two key challenges: (1) the non-grid structure of point clouds makes conventional image-based decomposition models ineffective, and (2) point-cloud models designed for other tasks do not explicitly consider global-light direction, resulting in inaccurate shade. In this paper, we introduce Intrinsic Point-Cloud Decomposition (IPCD), which extends image decomposition to the direct decomposition of colored point clouds into albedo and shade. To overcome challenge (1), we propose IPCD-Net, which extends an image-based model with point-wise feature aggregation for non-grid data processing. For challenge (2), we introduce Projection-based Luminance Distribution (PLD) with hierarchical feature refinement, capturing global-light cues via multi-view projection. For comprehensive evaluation, we create a synthetic outdoor-scene dataset. Experimental results demonstrate that IPCD-Net reduces cast shadows in albedo and enhances color accuracy in shade. Furthermore, we showcase its applications in texture editing, relighting, and point-cloud registration under varying illumination. Finally, we verify the real-world applicability of IPCD-Net.
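
To make the albedo/shade separation concrete, here is a minimal sketch of how a decomposed point cloud could be recombined and relit. It assumes the per-channel multiplicative model (color = albedo × shade) commonly used in intrinsic image decomposition; the abstract does not state the exact model, and the function names and sample values are hypothetical. It does not reproduce IPCD-Net or PLD.

```python
# Assumption: each point's observed color is the per-channel product
# albedo * shade. The names and values below are illustrative only.

def compose(albedo, shade):
    """Recombine per-point albedo and shade into RGB colors."""
    return [tuple(a * s for a, s in zip(alb, shd))
            for alb, shd in zip(albedo, shade)]

def relight(albedo, new_shade):
    """Relighting keeps the albedo fixed and swaps in a different shade."""
    return compose(albedo, new_shade)

# Two hypothetical points with (r, g, b) values in [0, 1].
albedo = [(0.8, 0.2, 0.2), (0.2, 0.6, 0.4)]
shade = [(1.0, 1.0, 1.0), (0.5, 0.5, 0.5)]   # second point lies in shadow
colors = compose(albedo, shade)

# Move the shadow from the second point to the first one.
relit = relight(albedo, [(0.5, 0.5, 0.5), (1.0, 1.0, 1.0)])
```

Under this assumption, texture editing would change `albedo` while leaving `shade` untouched, which is why an accurate separation matters for both applications.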

Difference Vector Equalization for Robust Fine-tuning of Vision-Language Models

Nov 13, 2025

Abstract: Contrastive pre-trained vision-language models, such as CLIP, demonstrate strong generalization abilities in zero-shot classification by leveraging embeddings extracted from image and text encoders. This paper aims to robustly fine-tune these vision-language models on in-distribution (ID) data without compromising their generalization abilities in out-of-distribution (OOD) and zero-shot settings. Current robust fine-tuning methods tackle this challenge by reusing contrastive learning, which was used in pre-training, for fine-tuning. However, we found that these methods distort the geometric structure of the embeddings, which plays a crucial role in the generalization of vision-language models, resulting in limited OOD and zero-shot performance. To address this, we propose Difference Vector Equalization (DiVE), which preserves the geometric structure during fine-tuning. The idea behind DiVE is to constrain difference vectors, each of which is obtained by subtracting the embeddings extracted from the pre-trained and fine-tuning models for the same data sample. By constraining the difference vectors to be equal across various data samples, we effectively preserve the geometric structure. To this end, we introduce two losses: average vector loss (AVL) and pairwise vector loss (PVL). AVL preserves the geometric structure globally by constraining difference vectors to be equal to their weighted average. PVL preserves the geometric structure locally by ensuring a consistent multimodal alignment. Our experiments demonstrate that DiVE effectively preserves the geometric structure, achieving strong results across ID, OOD, and zero-shot metrics.
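
The difference-vector idea can be sketched in a few lines. The example below is an illustration under assumptions, not the paper's formulation: it uses a uniform (unweighted) average, a squared Euclidean penalty, and the subtraction order fine-tuned minus pre-trained, none of which are specified in the abstract. The function names and toy embeddings are hypothetical.

```python
def difference_vectors(pretrained, finetuned):
    """Per-sample difference: fine-tuned embedding minus pre-trained one."""
    return [[f - p for p, f in zip(pre, fin)]
            for pre, fin in zip(pretrained, finetuned)]

def average_vector_loss(diffs):
    """Mean squared distance of each difference vector from their average.

    Assumption: uniform weights; the abstract mentions a weighted average.
    """
    n, dim = len(diffs), len(diffs[0])
    mean = [sum(d[k] for d in diffs) / n for k in range(dim)]
    return sum(sum((d[k] - mean[k]) ** 2 for k in range(dim))
               for d in diffs) / n

pretrained = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Every embedding moved by the same offset: relative geometry is unchanged.
shifted = [[1.5, -0.5], [0.5, 0.5], [1.5, 0.5]]
loss_shifted = average_vector_loss(difference_vectors(pretrained, shifted))

# Embeddings moved by different offsets: relative geometry is distorted.
distorted = [[1.5, -0.5], [0.0, 1.0], [1.0, 2.0]]
loss_distorted = average_vector_loss(difference_vectors(pretrained, distorted))
```

The loss is zero when all samples move by the same vector, which is a translation of the embedding set, and positive once samples move by different amounts.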

Optimal Transport with Cyclic Symmetry

Nov 22, 2023

Abstract: We propose novel fast algorithms for optimal transport (OT) utilizing a cyclic symmetry structure of input data. Such OT with cyclic symmetry appears universally in various real-world examples: image processing, urban planning, and graph processing. Our main idea is to reduce OT to a small optimization problem that has significantly fewer variables by utilizing cyclic symmetry and various optimization techniques. On the basis of this reduction, our algorithms solve the small optimization problem instead of the original OT. As a result, our algorithms obtain the optimal solution and the objective function value of the original OT faster than solving the original OT directly. In this paper, our focus is on two crucial OT formulations: the linear programming OT (LOT) and the strongly convex-regularized OT, which includes the well-known entropy-regularized OT (EROT). Experiments show the effectiveness of our algorithms for LOT and EROT in synthetic/real-world data that has a strict/approximate cyclic symmetry structure. Through theoretical and experimental results, this paper successfully introduces the concept of symmetry into the OT research field for the first time.
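
For readers unfamiliar with EROT, the sketch below shows the standard Sinkhorn iteration on a toy problem whose cost matrix is circulant (distances on a ring of four points). This is the direct baseline, not the paper's reduced algorithm; the circulant cost is only an assumed stand-in for data with a cyclic structure, and `reg`, `iters` and the marginals are hypothetical choices.

```python
import math

def sinkhorn(cost, a, b, reg=0.5, iters=500):
    """Standard Sinkhorn iteration for entropy-regularized OT."""
    n, m = len(a), len(b)
    # Gibbs kernel derived from the cost matrix.
    K = [[math.exp(-cost[i][j] / reg) for j in range(m)] for i in range(n)]
    u, v = [1.0] * n, [1.0] * m
    for _ in range(iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]

# Ring distance between positions i and j on a cycle of length 4.
size = 4
cost = [[min(abs(i - j), size - abs(i - j)) for j in range(size)]
        for i in range(size)]

a = [0.25, 0.25, 0.25, 0.25]   # source marginal
b = [0.4, 0.1, 0.4, 0.1]       # target marginal
plan = sinkhorn(cost, a, b)

row_sums = [sum(row) for row in plan]
col_sums = [sum(plan[i][j] for i in range(size)) for j in range(size)]
```

Each row of `cost` is a cyclic shift of the previous one. Repeated structure of this kind is what a symmetry-aware method could exploit to work with fewer variables, whereas the loop above treats all entries independently.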

Adversarial Finetuning with Latent Representation Constraint to Mitigate Accuracy-Robustness Tradeoff

Aug 31, 2023

Abstract: This paper addresses the tradeoff between standard accuracy on clean examples and robustness against adversarial examples in deep neural networks (DNNs). Although adversarial training (AT) improves robustness, it degrades the standard accuracy, thus yielding the tradeoff. To mitigate this tradeoff, we propose a novel AT method called ARREST, which comprises three components: (i) adversarial finetuning (AFT), (ii) representation-guided knowledge distillation (RGKD), and (iii) noisy replay (NR). AFT trains a DNN on adversarial examples by initializing its parameters with a DNN that is standardly pretrained on clean examples. RGKD and NR respectively entail a regularization term and an algorithm to preserve latent representations of clean examples during AFT. RGKD penalizes the distance between the representations of the standardly pretrained and AFT DNNs. NR switches input adversarial examples to nonadversarial ones when the representation changes significantly during AFT. By combining these components, ARREST achieves both high standard accuracy and robustness. Experimental results demonstrate that ARREST mitigates the tradeoff more effectively than previous AT-based methods do.
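
The roles of RGKD and NR can be illustrated with a small sketch. It assumes a Euclidean distance between latent representations, a fixed drift threshold, and a scalar weight on the penalty; these choices, along with all function and parameter names, are hypothetical and not taken from the paper.

```python
import math

def representation_distance(z_pre, z_aft):
    """Euclidean distance between the pretrained and AFT representations."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(z_pre, z_aft)))

def total_loss(adv_loss, z_pre, z_aft, weight):
    """RGKD-style objective: adversarial loss plus a drift penalty."""
    return adv_loss + weight * representation_distance(z_pre, z_aft)

def choose_input(nonadv_x, adv_x, z_pre, z_aft, threshold):
    """NR-style switch: use the nonadversarial input when drift is large."""
    if representation_distance(z_pre, z_aft) > threshold:
        return nonadv_x
    return adv_x

z_pre, z_aft = [0.0, 0.0], [3.0, 4.0]            # toy latent vectors
loss = total_loss(1.0, z_pre, z_aft, weight=0.1)
picked = choose_input("nonadversarial", "adversarial",
                      z_pre, z_aft, threshold=1.0)
```

In this toy case the drift is 5.0, which exceeds the threshold, so the switch falls back to the nonadversarial input.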

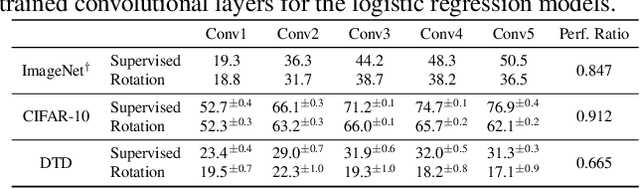

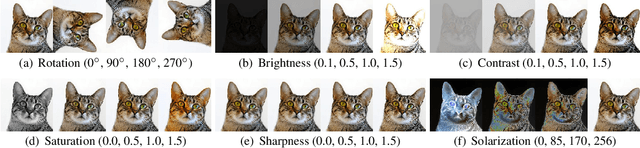

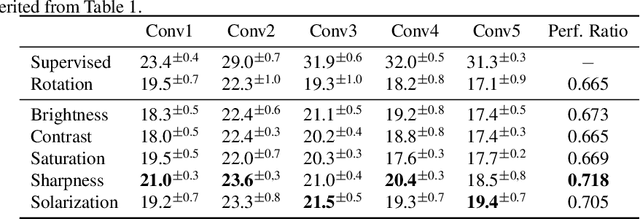

Multiple Pretext-Task for Self-Supervised Learning via Mixing Multiple Image Transformations

Dec 25, 2019

Abstract: Self-supervised learning is one of the most promising approaches to learn representations capturing semantic features in images without any manual annotation cost. To learn useful representations, a self-supervised model solves a pretext-task, which is defined by the data itself. Among a number of pretext-tasks, the rotation prediction task (Rotation) achieves better representations for solving various target tasks despite the simplicity of its implementation. However, we found that Rotation can fail to capture semantic features related to image textures and colors. To tackle this problem, we introduce a learning technique called multiple pretext-task for self-supervised learning (MP-SSL), which solves multiple pretext-tasks simultaneously in addition to Rotation. In order to capture features of textures and colors, we employ the transformations of image enhancements (e.g., sharpening and solarizing) as the additional pretext-tasks. MP-SSL efficiently trains a model by leveraging a Frank-Wolfe based multi-task training algorithm. Our experimental results show MP-SSL models outperform Rotation on multiple standard benchmarks and achieve state-of-the-art performance on Places-205.
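
As a brief illustration of how a pretext-task is defined by the data itself, the sketch below builds the four training samples of a rotation prediction task from a single image, with the rotation index as the label. It covers only the Rotation baseline, not the additional enhancement tasks or the multi-task training of MP-SSL; the image is represented as a plain list of rows, and the function names are hypothetical.

```python
def rot90(image):
    """Rotate a 2D grid (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def rotation_pretext_samples(image):
    """Return (rotated_image, label) pairs for 0, 90, 180 and 270 degrees."""
    samples = []
    current = [list(row) for row in image]
    for label in range(4):
        samples.append((current, label))
        current = rot90(current)
    return samples

image = [[1, 2],
         [3, 4]]
samples = rotation_pretext_samples(image)
```

A model trained on such pairs predicts the label from the rotated image, so no manual annotation is needed.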
