Shobhit Gupta

Eco-Driving Control of Connected and Automated Vehicles using Neural Network based Rollout

Oct 16, 2023

Abstract: Connected and autonomous vehicles have the potential to minimize energy consumption by optimizing the vehicle velocity and powertrain dynamics with Vehicle-to-Everything information en route. Existing deterministic and stochastic methods for solving the eco-driving problem generally suffer from high computational and memory requirements, which make online implementation challenging. This work proposes a hierarchical multi-horizon optimization framework implemented via a neural network. The neural network learns a full-route value function that accounts for the variability in route information, and this value function is then used to approximate the terminal cost in a receding horizon optimization. Simulations over real-world routes demonstrate that the proposed approach achieves performance comparable to a stochastic optimization solution obtained via reinforcement learning, while requiring no sophisticated training paradigm and negligible on-board memory.
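The receding-horizon idea in this abstract can be sketched in a few lines. This is a minimal sketch under stated assumptions, not the authors' implementation: a toy point-mass model (`step`), a squared-acceleration energy proxy (`stage_cost`), and a hand-coded `terminal_cost` that stands in for the trained neural-network value function. All names and constants are hypothetical. At each step the controller enumerates short action sequences, adds the value estimate at the end of the horizon, and applies only the first action.

```python
import itertools
from typing import Tuple

# All constants below are hypothetical values chosen for illustration.
DT = 1.0                   # control period [s]
ACCELS = (-1.0, 0.0, 1.0)  # discretized acceleration commands [m/s^2]
V_MAX = 20.0               # speed limit [m/s]
TIME_WEIGHT = 0.1          # cost per second of travel time


def step(pos: float, vel: float, acc: float) -> Tuple[float, float]:
    """Toy point-mass longitudinal model (not the paper's powertrain model)."""
    new_vel = min(max(vel + acc * DT, 0.0), V_MAX)
    new_pos = pos + 0.5 * (vel + new_vel) * DT
    return new_pos, new_vel


def stage_cost(acc: float) -> float:
    """Hypothetical energy proxy (squared acceleration) plus a travel-time penalty."""
    return acc ** 2 + TIME_WEIGHT * DT


def terminal_cost(pos: float, vel: float, route_length: float) -> float:
    """Hand-coded stand-in for the learned full-route value function.

    A trained network would map (position, speed, route features) to the
    expected cost-to-go; here we only estimate the remaining travel time.
    """
    remaining = max(route_length - pos, 0.0)
    return TIME_WEIGHT * remaining / max(vel, 1.0)


def receding_horizon_action(pos: float, vel: float, horizon: int,
                            route_length: float) -> float:
    """Enumerate all action sequences over a short horizon, score each with
    stage costs plus the terminal value estimate, and return the first action
    of the cheapest sequence."""
    best_cost, best_first = float("inf"), ACCELS[0]
    for seq in itertools.product(ACCELS, repeat=horizon):
        p, v, cost = pos, vel, 0.0
        for a in seq:
            cost += stage_cost(a)
            p, v = step(p, v, a)
        cost += terminal_cost(p, v, route_length)
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first


def simulate(route_length: float = 200.0, horizon: int = 3,
             max_steps: int = 1000) -> Tuple[float, float, int]:
    """Closed loop: re-solve the short-horizon problem at every step."""
    pos, vel, t = 0.0, 10.0, 0
    while pos < route_length and t < max_steps:
        acc = receding_horizon_action(pos, vel, horizon, route_length)
        pos, vel = step(pos, vel, acc)
        t += 1
    return pos, vel, t


if __name__ == "__main__":
    print(simulate())
```

Because the terminal cost summarizes everything beyond the horizon, the explicit horizon can stay short (three steps here), which keeps the online search cheap; replacing the hand-coded `terminal_cost` with a trained network would not change the surrounding loop.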

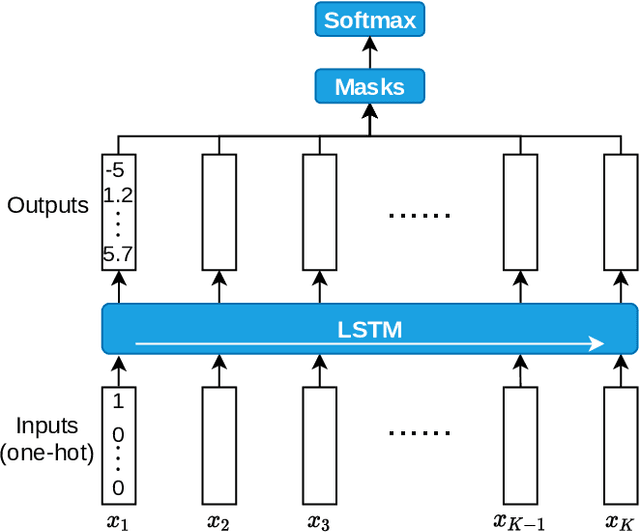

Data-driven Driver Model for Speed Advisory Systems in Partially Automated Vehicles

May 17, 2022

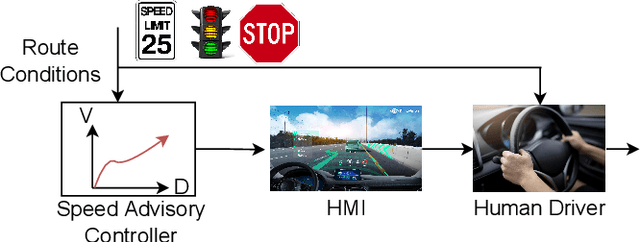

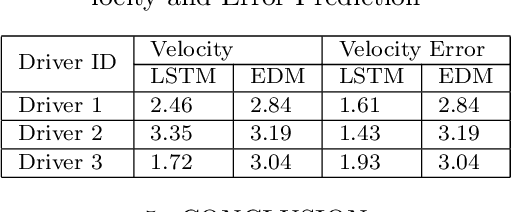

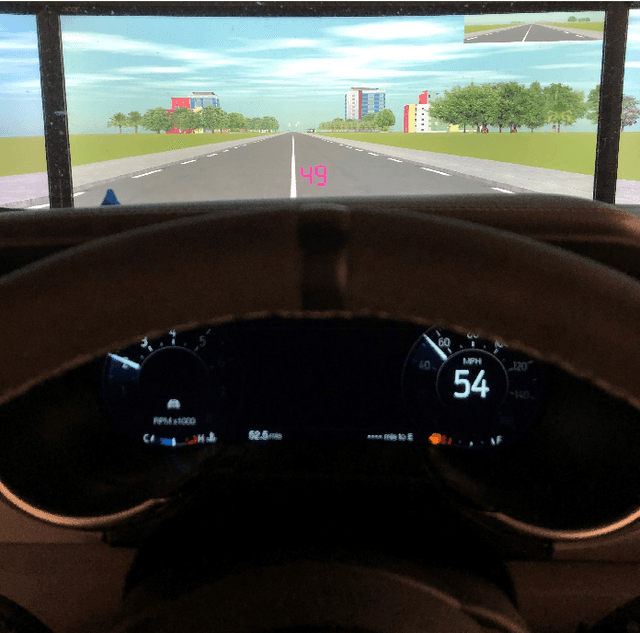

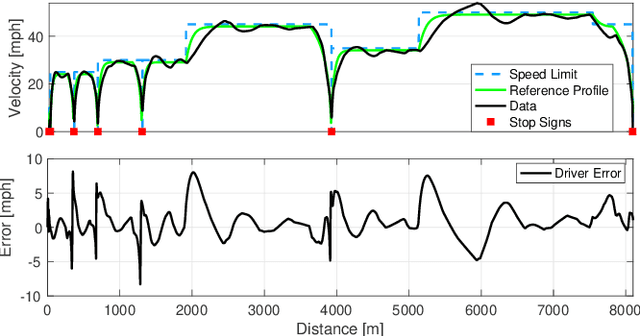

Abstract: Vehicle control algorithms exploiting connectivity and automation, such as those in Connected and Automated Vehicles (CAVs) or Advanced Driver Assistance Systems (ADAS), have the opportunity to improve energy savings. However, lower levels of automation involve a human-machine interaction stage, where the presence of a human driver affects the closed-loop performance of the control algorithm. This occurs, for instance, when Eco-Driving control algorithms are implemented as a velocity advisory system, in which the driver is shown an optimal speed trajectory to follow to reduce energy consumption. Achieving the control objectives relies on the human driver perfectly following the recommended speed. If the driver is unable to follow it, energy savings may decline and vehicle performance may suffer. This warrants methods to model and forecast the response of a human driver operating in the loop with a speed advisory system. This work develops a sequence-to-sequence long short-term memory (LSTM)-based driver behavior model that captures how a human driver responds to a suggested vehicle speed trajectory in real-world conditions. A driving simulator is used to collect data and train the driver model, which is then compared against the driving data and a deterministic model. Results show that the LSTM-based model closely matches the driving data, demonstrating that it can be adopted as a tool to design human-centered speed advisory systems.
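One way to frame the training data for a sequence-to-sequence driver model is sketched below. The feature layout is an assumption, not the paper's exact setup: the encoder sees a window of past (advised speed, actual speed) pairs, the decoder is conditioned on the upcoming advised speeds, and the target is the speed the driver actually drove. The function name `make_seq2seq_samples` and the window lengths `n_in`/`n_out` are hypothetical; the resulting triples could feed an LSTM encoder-decoder in any deep learning framework.

```python
from typing import List, Sequence, Tuple

# (encoder input, decoder input, target)
Sample = Tuple[List[Tuple[float, float]], List[float], List[float]]


def make_seq2seq_samples(advised: Sequence[float], actual: Sequence[float],
                         n_in: int, n_out: int) -> List[Sample]:
    """Slice time-aligned speed traces into sequence-to-sequence training samples.

    encoder input: the past n_in steps of (advised, actual) speed pairs
    decoder input: the next n_out advised speeds shown to the driver
    target:        the next n_out speeds the driver actually drove
    """
    if len(advised) != len(actual):
        raise ValueError("advised and actual traces must have the same length")
    if n_in < 1 or n_out < 1:
        raise ValueError("n_in and n_out must be positive")
    samples: List[Sample] = []
    for t in range(n_in, len(actual) - n_out + 1):
        encoder_in = list(zip(advised[t - n_in:t], actual[t - n_in:t]))
        decoder_in = list(advised[t:t + n_out])
        target = list(actual[t:t + n_out])
        samples.append((encoder_in, decoder_in, target))
    return samples
```

Feeding the advised speeds to the decoder reflects the advisory setting: the upcoming recommendation is known when the prediction is made, so the model only has to learn how the driver deviates from it.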

Video-based assessment of intraoperative surgical skill

May 13, 2022

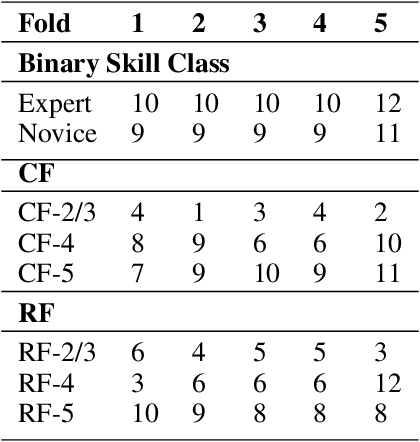

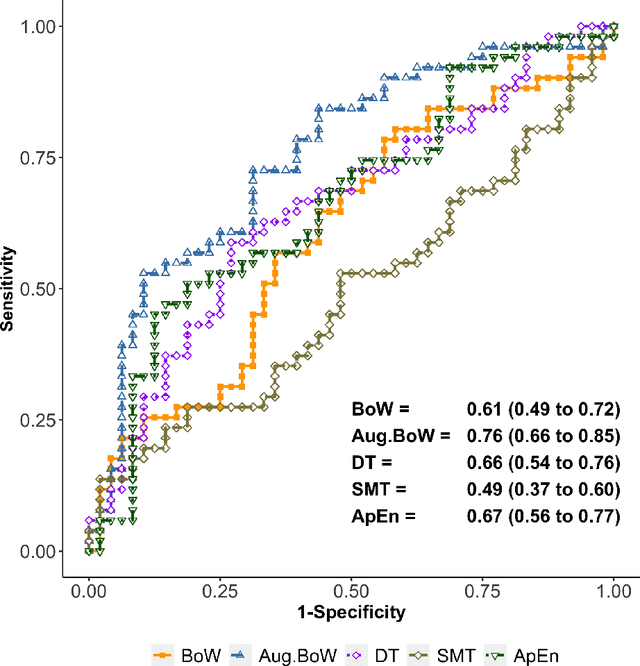

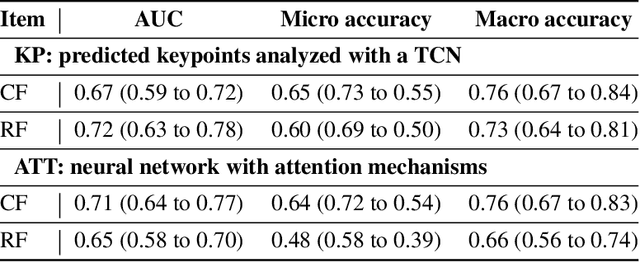

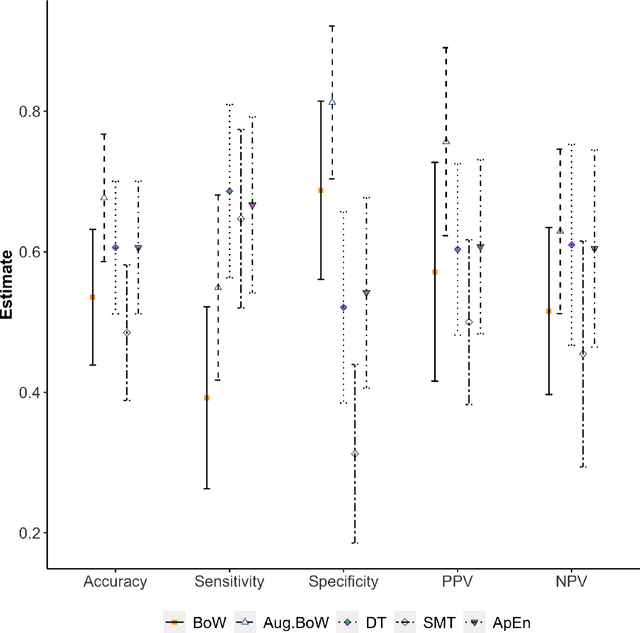

Abstract: Purpose: The objective of this investigation is to provide a comprehensive analysis of state-of-the-art methods for video-based assessment of surgical skill in the operating room. Methods: Using a data set of 99 videos of capsulorhexis, a critical step in cataract surgery, we evaluate feature-based methods previously developed for surgical skill assessment, mostly under benchtop settings. In addition, we present and validate two deep learning methods that directly assess skill using RGB videos. In the first method, we predict instrument tips as keypoints and learn surgical skill using temporal convolutional neural networks. In the second method, we propose a novel architecture for surgical skill assessment that includes a frame-wise encoder (2D convolutional neural network) followed by a temporal model (recurrent neural network), both of which are augmented by visual attention mechanisms. We report the area under the receiver operating characteristic curve (AUC), sensitivity, specificity, and predictive values for each method through 5-fold cross-validation. Results: For the task of binary skill classification (expert vs. novice), deep neural network-based methods exhibit higher AUC than the classical spatiotemporal interest point-based methods. The neural network approach using attention mechanisms also showed high sensitivity and specificity. Conclusion: Deep learning methods are necessary for video-based assessment of surgical skill in the operating room. Our findings of internal validity of a network using attention mechanisms to assess skill directly from RGB videos should be evaluated for external validity on other data sets.
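The evaluation metrics named in this abstract can be computed with the standard library alone. The sketch below assumes labels are encoded as expert = 1 and novice = 0, computes AUC as the probability that a randomly chosen expert video scores higher than a randomly chosen novice video (ties count one half), and uses a plain, unstratified round-robin 5-fold split. The abstract does not say how folds were formed, so `k_fold_indices` and the other helper names are illustrative only.

```python
from typing import Dict, Iterator, List, Sequence, Tuple


def _ratio(num: int, den: int) -> float:
    """Return num/den, or NaN when the denominator is zero."""
    return num / den if den else float("nan")


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Sensitivity, specificity and predictive values for labels in {0, 1}
    (1 = expert, 0 = novice; this encoding is an assumption)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
        "ppv": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn),
    }


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(score of a random positive > score of a random negative), ties = 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def k_fold_indices(n: int, k: int = 5) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, test) index lists for a plain round-robin k-fold split."""
    folds = [list(range(i, n, k)) for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test
```

With 99 videos and k = 5, each video lands in exactly one test fold; metrics would typically be computed per fold or on the pooled out-of-fold predictions.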

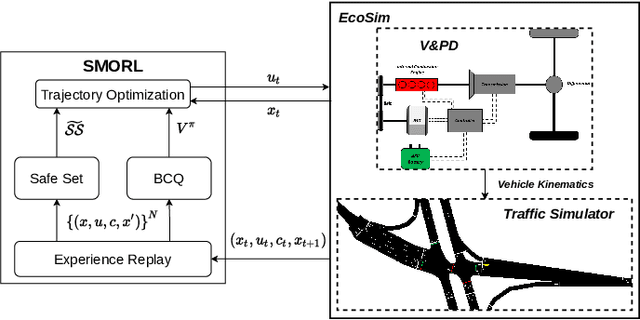

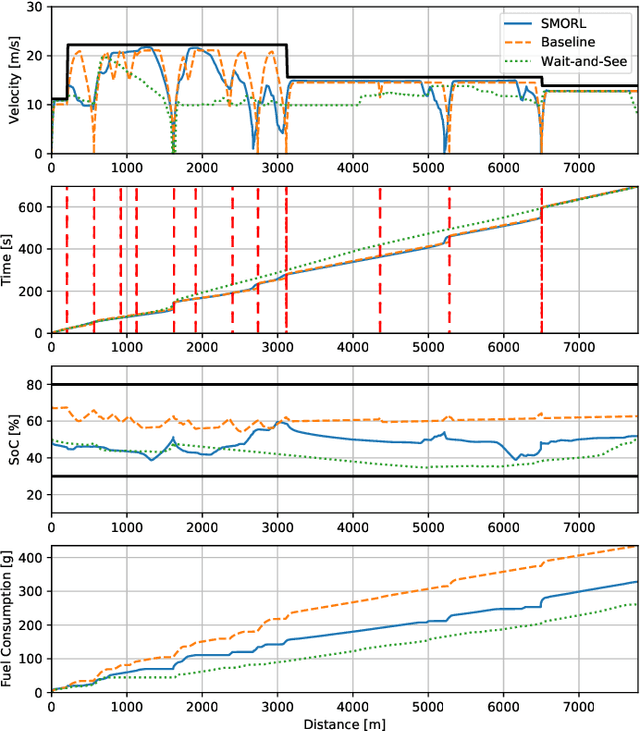

Safe Model-based Off-policy Reinforcement Learning for Eco-Driving in Connected and Automated Hybrid Electric Vehicles

May 25, 2021

Abstract: Connected and Automated Hybrid Electric Vehicles have the potential to reduce fuel consumption and travel time in real-world driving conditions. The eco-driving problem seeks to design optimal speed and power usage profiles based on look-ahead information from connectivity and advanced mapping features. Recently, Deep Reinforcement Learning (DRL) has been applied to the eco-driving problem. While previous studies combine simulators with model-free DRL to reduce online computation, this work proposes a Safe Off-policy Model-Based Reinforcement Learning algorithm for the eco-driving problem. The advantages over the existing literature are threefold. First, the combination of off-policy learning and a physics-based model improves sample efficiency. Second, training does not require any extrinsic reward mechanism for constraint satisfaction. Third, trajectory feasibility is guaranteed by a safe set approximated by deep generative models. The proposed method is benchmarked against a baseline controller representing human drivers, a previously designed model-free DRL strategy, and the wait-and-see optimal solution. In simulation, the proposed algorithm yields a policy with a higher average speed and better fuel economy than the model-free agent. Compared with the baseline controller, the learned strategy reduces fuel consumption by more than 21% while keeping the average speed comparable.
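The safe-set idea in this abstract can be illustrated with a simple action filter: a physics-based model predicts the next state for each candidate action, and only actions whose predicted state lies in the safe set are eligible for greedy selection on the learned Q-function. In this sketch, under stated assumptions, the paper's deep-generative safe set is replaced by a hand-coded stopping-distance rule (`in_safe_set`), the dynamics are toy kinematics, and `q_value` is any callable; all names and constants are hypothetical.

```python
from typing import Callable, Sequence, Tuple

State = Tuple[float, float]  # (distance to next stop line [m], speed [m/s])

# Hypothetical constants for illustration only.
DT = 1.0      # control period [s]
V_MAX = 25.0  # speed limit [m/s]
B_MAX = 3.0   # maximum braking deceleration [m/s^2]


def predict(state: State, accel: float) -> State:
    """Physics-based one-step model (toy kinematics, not the paper's HEV model)."""
    dist, vel = state
    new_vel = min(max(vel + accel * DT, 0.0), V_MAX)
    travelled = 0.5 * (vel + new_vel) * DT
    return dist - travelled, new_vel


def in_safe_set(state: State, must_stop: bool) -> bool:
    """Hand-coded stand-in for the learned safe set.

    When the vehicle must stop (e.g. a red light ahead), a state is safe only if
    it is still short of the stop line and can brake to rest before it at B_MAX.
    """
    dist, vel = state
    if not 0.0 <= vel <= V_MAX:
        return False
    if must_stop:
        return dist >= 0.0 and vel ** 2 / (2.0 * B_MAX) <= dist
    return True


def safe_greedy_action(state: State, actions: Sequence[float],
                       q_value: Callable[[State, float], float],
                       must_stop: bool) -> float:
    """Pick the highest-Q action among those whose predicted next state is safe."""
    safe = [a for a in actions if in_safe_set(predict(state, a), must_stop)]
    if not safe:
        # No candidate is certified safe: fall back to the strongest braking command.
        return min(actions)
    return max(safe, key=lambda a: q_value(state, a))
```

With actions `(-3.0, -2.0, -1.0, 0.0, 1.0, 2.0)`, a Q-function that simply prefers higher acceleration, and the state `(20.0, 10.0)` approaching a red light, the filter returns `-2.0`: the mildest braking that still allows a stop before the line. Because infeasible actions are removed before the argmax, constraint satisfaction does not depend on shaping the reward.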
