Shihui Yin

High-Throughput In-Memory Computing for Binary Deep Neural Networks with Monolithically Integrated RRAM and 90nm CMOS

Sep 16, 2019

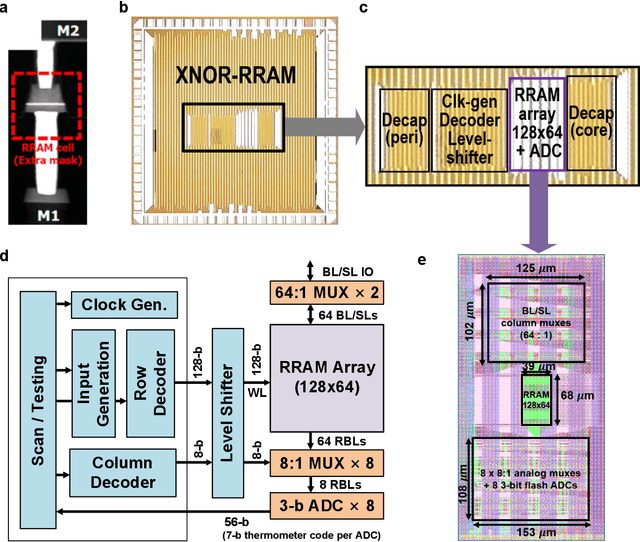

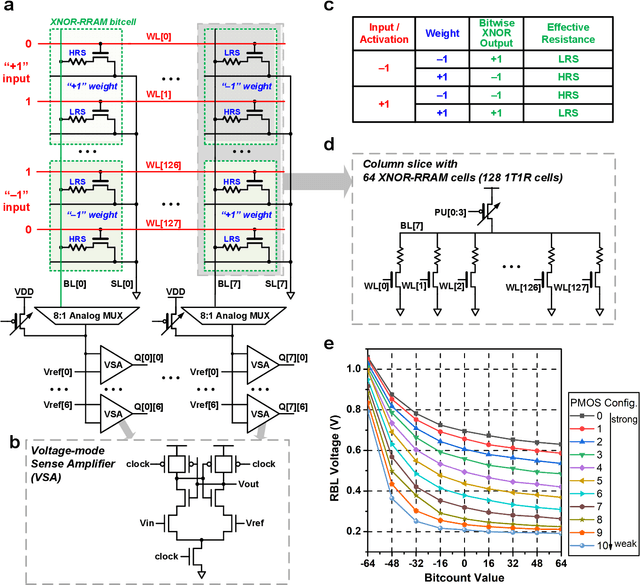

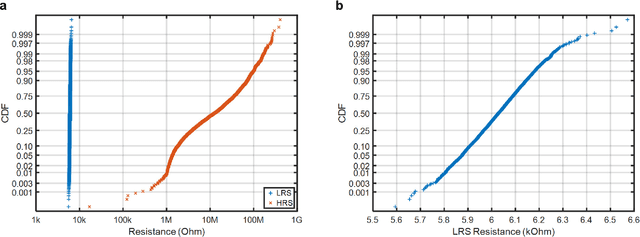

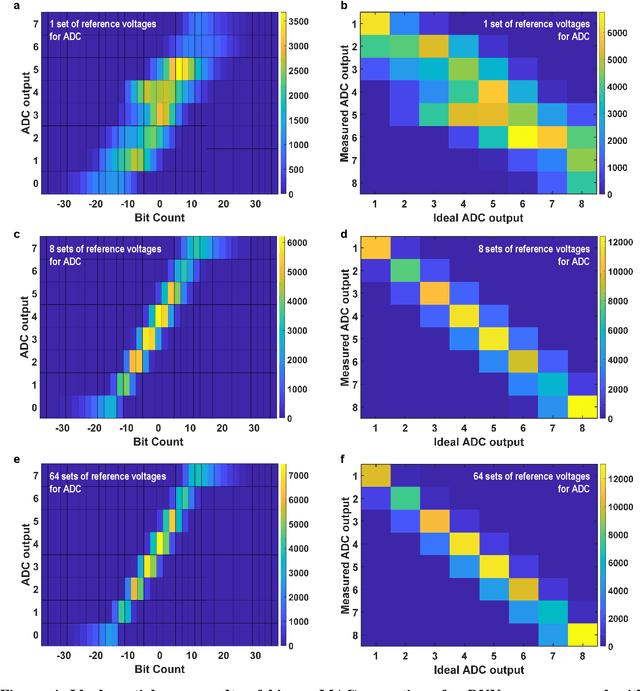

Abstract: Deep learning hardware designs have been bottlenecked by conventional memories such as SRAM due to density, leakage, and parallel computing challenges. Resistive devices can address the density and volatility issues, but have been limited by peripheral circuit integration. In this work, we demonstrate a scalable RRAM-based in-memory computing design, termed XNOR-RRAM, which is fabricated in a 90nm CMOS technology with monolithic integration of RRAM devices between metal 1 and 2. We integrated a 128x64 RRAM array with CMOS peripheral circuits including row/column decoders and flash analog-to-digital converters (ADCs), which collectively become a core component for scalable RRAM-based in-memory computing towards large deep neural networks (DNNs). To maximize the parallelism of in-memory computing, we assert all 128 wordlines of the RRAM array simultaneously, perform analog computing along the bitlines, and digitize the bitline voltages using ADCs. The resistance distribution of low resistance states is tightened by a write-verify scheme, and the ADC offset is calibrated. Prototype chip measurements show that the proposed design achieves high binary DNN accuracy of 98.5% for MNIST and 83.5% for CIFAR-10 datasets, respectively, with energy efficiency of 24 TOPS/W and 158 GOPS throughput. This represents 5.6X, 3.2X, and 14.1X improvements in throughput, energy-delay product (EDP), and energy-delay-squared product (ED2P), respectively, compared to the state-of-the-art literature. The proposed XNOR-RRAM can enable intelligent functionalities for area-/energy-constrained edge computing devices.
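As a rough software analogy for what one bitline computes when all 128 wordlines are asserted, the sketch below checks that a dot product of +1/-1 vectors equals an XNOR-and-popcount over their 0/1 encodings, and then quantizes the sum with a flash-ADC-style threshold comparison. The function names and the seven evenly spaced thresholds are assumptions made for illustration; the abstract does not state the ADC resolution or reference levels, and the real chip works on analog bitline voltages rather than integers.

```python
import random

def binary_dot(x, w):
    """Reference dot product of two vectors with entries in {+1, -1}."""
    return sum(a * b for a, b in zip(x, w))

def xnor_popcount_dot(x_bits, w_bits):
    """Same dot product computed from 0/1 encodings.

    XNOR is 1 where the two bits agree, so the number of agreements
    (popcount of the XNOR result) determines the +1/-1 sum.
    """
    n = len(x_bits)
    matches = sum(1 for a, b in zip(x_bits, w_bits) if a == b)
    return 2 * matches - n

def quantize(value, thresholds):
    """Flash-ADC-style code: how many thresholds the value meets or exceeds."""
    return sum(1 for t in thresholds if value >= t)

ROWS = 128  # all wordlines asserted at once, as in the abstract
THRESHOLDS = [-96, -64, -32, 0, 32, 64, 96]  # hypothetical reference levels

random.seed(0)
x_bits = [random.randint(0, 1) for _ in range(ROWS)]
w_bits = [random.randint(0, 1) for _ in range(ROWS)]
x = [2 * b - 1 for b in x_bits]  # map 0/1 to -1/+1
w = [2 * b - 1 for b in w_bits]

column_sum = xnor_popcount_dot(x_bits, w_bits)
assert column_sum == binary_dot(x, w)
adc_code = quantize(column_sum, THRESHOLDS)
```

Because the quantizer only keeps a coarse code per column, the placement of the thresholds matters for accuracy, which is one reason offset calibration of the ADCs is relevant.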

Automatic Compiler Based FPGA Accelerator for CNN Training

Aug 15, 2019

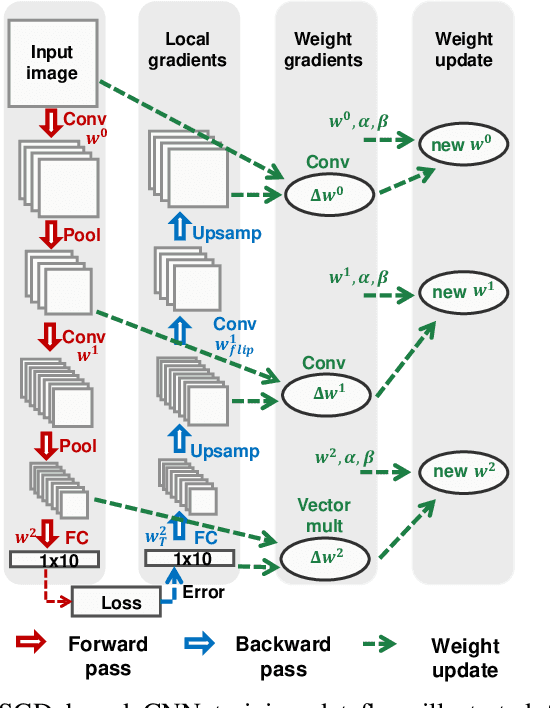

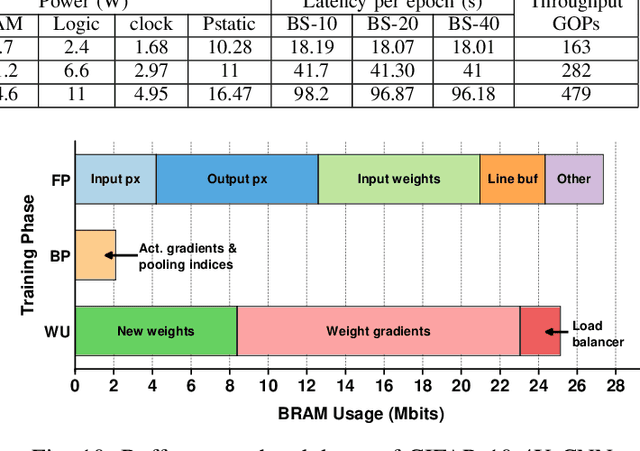

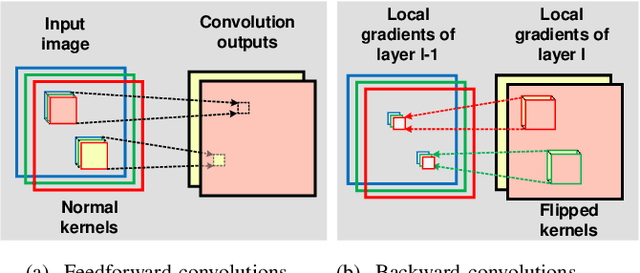

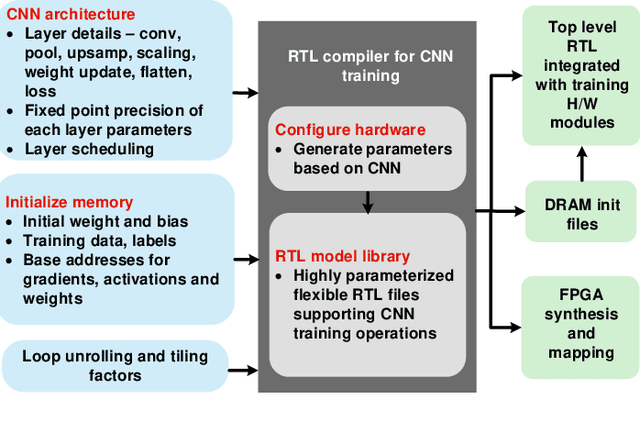

Abstract: Training of convolutional neural networks (CNNs) on embedded platforms to support on-device learning has become increasingly important. Designing flexible training hardware is much more challenging than designing inference hardware, due to design complexity and large computation/memory requirements. In this work, we present an automatic compiler-based FPGA accelerator with 16-bit fixed-point precision for complete CNN training, including Forward Pass (FP), Backward Pass (BP) and Weight Update (WU). We implemented an optimized RTL library to perform training-specific tasks and developed an RTL compiler to automatically generate FPGA-synthesizable RTL based on user-defined constraints. We present a new cyclic weight storage/access scheme for on-chip BRAM and off-chip DRAM to efficiently implement non-transpose and transpose operations during FP and BP phases, respectively. Representative CNNs for the CIFAR-10 dataset are implemented and trained on an Intel Stratix 10-GX FPGA using the proposed hardware architecture, demonstrating up to 479 GOPS performance.
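The abstract does not describe the cyclic storage scheme in detail, so the sketch below is not the authors' design. It illustrates a generic skewed layout, assumed here only to show why a cyclic placement helps: if element (r, c) of a weight matrix is stored in bank (r + c) mod N, then both a full row (non-transpose access) and a full column (transpose access) touch every bank exactly once, so either can be fetched in parallel without bank conflicts. All names are hypothetical.

```python
N = 4  # hypothetical number of memory banks and matrix dimension

# Example weight matrix with distinct entries so reads are easy to verify.
W = [[r * N + c for c in range(N)] for r in range(N)]

# Skewed placement: W[r][c] goes to bank (r + c) % N at address r.
banks = [[None] * N for _ in range(N)]
for r in range(N):
    for c in range(N):
        banks[(r + c) % N][r] = W[r][c]

def read_row(r):
    """Non-transpose access: fetch W[r][0..N-1], one element per bank."""
    return [banks[(r + c) % N][r] for c in range(N)]

def read_col(c):
    """Transpose access: fetch W[0..N-1][c], again one element per bank."""
    return [banks[(r + c) % N][r] for r in range(N)]

def banks_for_row(r):
    return sorted((r + c) % N for c in range(N))

def banks_for_col(c):
    return sorted((r + c) % N for r in range(N))

# Every row and every column maps onto all N banks with no repeats.
assert all(banks_for_row(r) == list(range(N)) for r in range(N))
assert all(banks_for_col(c) == list(range(N)) for c in range(N))
```

With a plain row-major layout, one of the two access patterns would hit the same bank N times in a row, which is the kind of serialization a cyclic scheme avoids.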

Minimizing Area and Energy of Deep Learning Hardware Design Using Collective Low Precision and Structured Compression

Apr 19, 2018

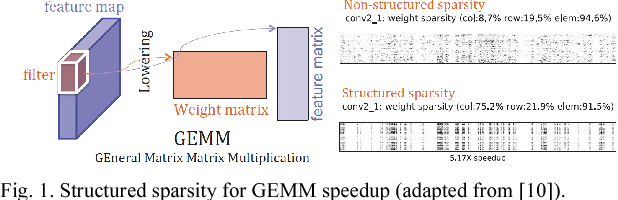

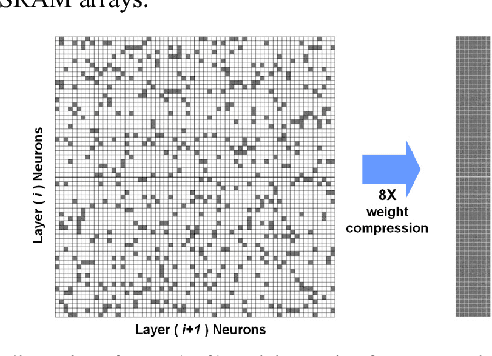

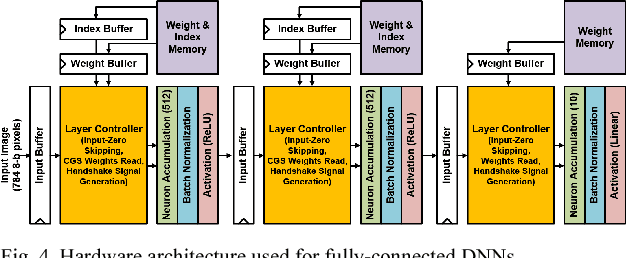

Abstract: Deep learning algorithms have shown tremendous success in many recognition tasks; however, these algorithms typically include a deep neural network (DNN) structure and a large number of parameters, which makes it challenging to implement them on power/area-constrained embedded platforms. To reduce the network size, several studies investigated compression by introducing element-wise or row-/column-/block-wise sparsity via pruning and regularization. In addition, many recent works have focused on reducing the precision of activations and weights, with some reducing down to a single bit. However, combining various sparsity structures with binarized or very-low-precision (2-3 bit) neural networks has not been comprehensively explored. In this work, we present design techniques for minimum-area/-energy DNN hardware with minimal degradation in accuracy. During training, both binarization/low-precision and structured sparsity are applied as constraints to find the smallest memory footprint for a given deep learning algorithm. The DNN model for the CIFAR-10 dataset with weight memory reduction of 50X exhibits accuracy comparable to that of the floating-point counterpart. Area, performance and energy results of DNN hardware in 40nm CMOS are reported for the MNIST dataset. The optimized DNN that combines 8X structured compression and 3-bit weight precision showed 98.4% accuracy at 20nJ per classification.
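To see how low precision and structured compression multiply, the short calculation below estimates weight memory for the 8X compression and 3-bit setting mentioned for MNIST. The 32-bit floating-point baseline and the weight count are assumptions for illustration, and the resulting ratio is plain arithmetic rather than a figure reported in the abstract. It also ignores any index or metadata overhead that a sparse format might need.

```python
def weight_memory_bits(num_weights, bits_per_weight, compression=1.0):
    """Bits needed to store the weights after structured compression."""
    return num_weights * bits_per_weight / compression

NUM_WEIGHTS = 1_000_000  # hypothetical network size

# Assumed baseline: dense 32-bit floating-point weights.
baseline = weight_memory_bits(NUM_WEIGHTS, 32)

# Setting from the abstract: 8X structured compression, 3-bit weights.
optimized = weight_memory_bits(NUM_WEIGHTS, 3, compression=8)

# The two factors multiply: (32 / 3) * 8.
reduction = baseline / optimized
print(f"memory reduction vs. assumed 32-bit baseline: {reduction:.1f}X")
```

The ratio is independent of the weight count, since both the precision and the compression factor scale the footprint linearly.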

Algorithm and Hardware Design of Discrete-Time Spiking Neural Networks Based on Back Propagation with Binary Activations

Sep 19, 2017

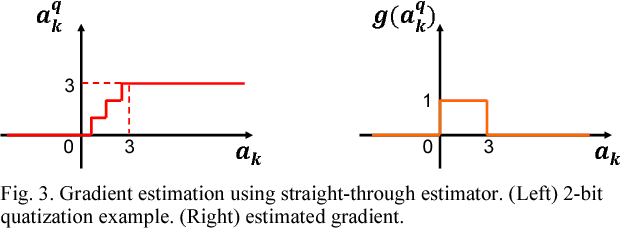

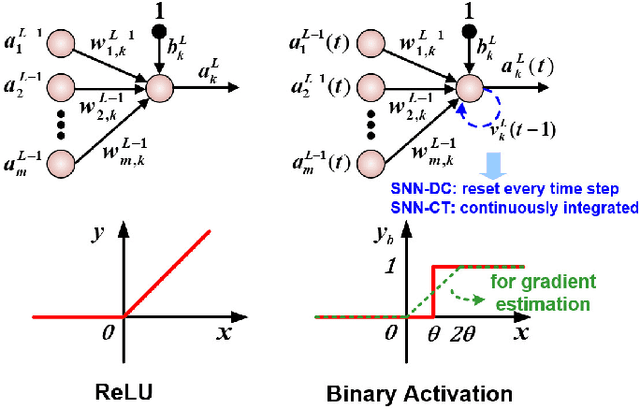

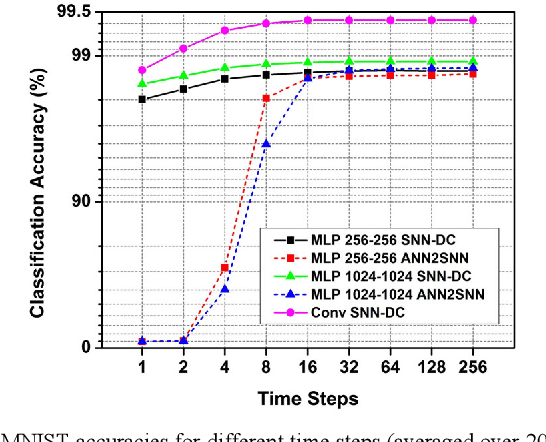

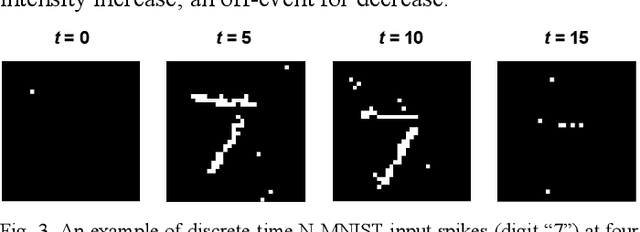

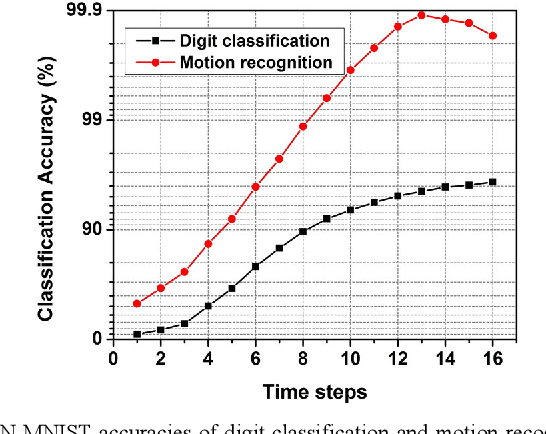

Abstract: We present a new back propagation based training algorithm for discrete-time spiking neural networks (SNN). Inspired by recent deep learning algorithms on binarized neural networks, binary activation with a straight-through gradient estimator is used to model the leaky integrate-fire spiking neuron, overcoming the difficulty in training SNNs using back propagation. Two SNN training algorithms are proposed: (1) SNN with discontinuous integration, which is suitable for rate-coded input spikes, and (2) SNN with continuous integration, which is more general and can handle input spikes with temporal information. Neuromorphic hardware designed in 40nm CMOS exploits the spike sparsity and demonstrates high classification accuracy (>98% on MNIST) and low energy (48.4-773 nJ/image).
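A minimal sketch of the general idea follows: a discrete-time leaky integrate-fire neuron whose spike is a binary threshold function, paired with a straight-through estimator that substitutes a simple surrogate gradient for the non-differentiable threshold during back propagation. The leak factor, the subtractive reset, and the gradient window are assumptions chosen for illustration; the abstract does not specify them, and this does not reproduce either of the two proposed algorithm variants.

```python
def lif_step(v, x, leak=0.9, threshold=1.0):
    """One time step: leak, integrate the input, fire, and reset.

    Returns the updated membrane potential, the binary spike, and the
    pre-reset potential (needed by the surrogate gradient).
    """
    v_pre = leak * v + x
    spike = 1 if v_pre >= threshold else 0
    v_next = v_pre - threshold if spike else v_pre  # assumed subtractive reset
    return v_next, spike, v_pre

def ste_grad(v_pre, threshold=1.0, window=0.5):
    """Straight-through surrogate for d(spike)/d(v_pre).

    The true derivative of a step function is zero almost everywhere,
    so a gradient of 1 is passed through near the threshold instead.
    """
    return 1.0 if abs(v_pre - threshold) <= window else 0.0

def run(inputs):
    """Drive the neuron with an input sequence; collect spikes and gradients."""
    v = 0.0
    spikes, grads = [], []
    for x in inputs:
        v, s, v_pre = lif_step(v, x)
        spikes.append(s)
        grads.append(ste_grad(v_pre))
    return spikes, grads

spikes, grads = run([0.6, 0.6, 0.6, 0.0])
```

Time steps with no spike contribute no output activity, which is the sparsity that event-driven hardware can exploit to save energy.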
