Shiguang Sun

MInCo: Mitigating Information Conflicts in Distracted Visual Model-based Reinforcement Learning

Apr 05, 2025

Abstract: Existing visual model-based reinforcement learning (MBRL) algorithms with observation reconstruction often suffer from information conflicts, which make it difficult to learn compact representations and hence result in less robust policies, especially in the presence of task-irrelevant visual distractions. In this paper, we first reveal, from an information-theoretic perspective, that the information conflicts in current visual MBRL algorithms stem from visual representation learning and latent dynamics modeling. Based on this finding, we present a new algorithm to resolve information conflicts for visual MBRL, named MInCo, which mitigates information conflicts by leveraging negative-free contrastive learning, aiding in learning invariant representations and robust policies despite noisy observations. To prevent the dominance of visual representation learning, we introduce time-varying reweighting to bias the learning towards dynamics modeling as training proceeds. We evaluate our method on several robotic control tasks with dynamic background distractions. Our experiments demonstrate that MInCo learns invariant representations against background noise and consistently outperforms current state-of-the-art visual MBRL methods. Code is available at https://github.com/ShiguangSun/minco.

Grounded Answers for Multi-agent Decision-making Problem through Generative World Model

Oct 03, 2024

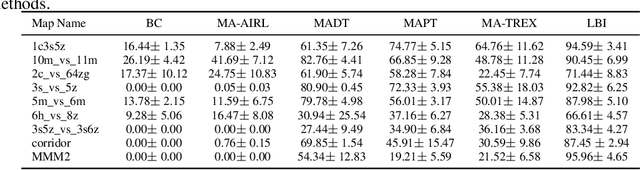

Abstract: Recent progress in generative models has stimulated significant innovations in many fields, such as image generation and chatbots. Despite their success, these models often produce sketchy and misleading solutions for complex multi-agent decision-making problems because they lack the trial-and-error experience and reasoning that humans have. To address this limitation, we explore a paradigm that integrates a language-guided simulator into the multi-agent reinforcement learning pipeline to enhance the generated answer. The simulator is a world model that separately learns dynamics and reward, where the dynamics model comprises an image tokenizer as well as a causal transformer to generate interaction transitions autoregressively, and the reward model is a bidirectional transformer learned by maximizing the likelihood of trajectories in the expert demonstrations under language guidance. Given an image of the current state and the task description, we use the world model to train the joint policy and produce the image sequence as the answer by running the converged policy on the dynamics model. The empirical results demonstrate that this framework can improve the answers for multi-agent decision-making problems by showing superior performance on the training and unseen tasks of the StarCraft Multi-Agent Challenge benchmark. In particular, it can generate consistent interaction sequences and explainable reward functions at interaction states, opening the path for training generative models of the future.

Robotic Grasping from Classical to Modern: A Survey

Feb 08, 2022

Abstract: Robotic grasping has long been an active topic in robotics, since grasping is one of the fundamental but most challenging skills of robots. It demands the coordination of robotic perception, planning, and control for robustness and intelligence. However, current solutions are still far behind humans, especially when confronting unstructured scenarios. In this paper, we survey the advances of robotic grasping, from the classical formulations and solutions to the modern ones. By reviewing the history of robotic grasping, we want to provide a complete view of this community, and perhaps inspire the combination and fusion of different ideas, which we think would be helpful to touch and explore the essence of robotic grasping problems. In detail, we first give an overview of the analytic methods for robotic grasping. After that, we discuss the state-of-the-art data-driven grasping approaches that have emerged in recent years. With the development of computer vision, semantic grasping is being widely investigated and can be the basis of intelligent manipulation and skill learning for autonomous robotic systems in the future. Therefore, in our survey, we also briefly review the recent progress on this topic. Finally, we discuss the open problems and the future research directions that may be important for the human-level robustness, autonomy, and intelligence of robots.
