Shaojun Cai

Differentiable SLAM-net: Learning Particle SLAM for Visual Navigation

May 19, 2021

Abstract: Simultaneous localization and mapping (SLAM) remains challenging for a number of downstream applications, such as visual robot navigation, because of rapid turns, featureless walls, and poor camera quality. We introduce the Differentiable SLAM Network (SLAM-net) along with a navigation architecture to enable planar robot navigation in previously unseen indoor environments. SLAM-net encodes a particle-filter-based SLAM algorithm in a differentiable computation graph, and learns task-oriented neural network components by backpropagating through the SLAM algorithm. Because it can optimize all model components jointly for the end objective, SLAM-net learns to be robust in challenging conditions. We run experiments on the Habitat platform with different real-world RGB and RGB-D datasets. SLAM-net significantly outperforms the widely adopted ORB-SLAM in noisy conditions. Our navigation architecture with SLAM-net improves the state of the art for the Habitat Challenge 2020 PointNav task by a large margin (37% to 64% success). Project website: http://sites.google.com/view/slamnet
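For readers unfamiliar with the particle-filter backbone the abstract refers to, the following is a minimal, non-differentiable sketch of one filter step over planar poses. It is not the SLAM-net implementation: the learned components and the differentiable machinery are not reproduced, the observation model is a hand-written range likelihood to a single landmark at the origin, and all function names and noise parameters are hypothetical.

```python
import math
import random

def normalize(log_weights):
    """Convert log-weights to probabilities (subtract the max for stability)."""
    m = max(log_weights)
    exps = [math.exp(lw - m) for lw in log_weights]
    total = sum(exps)
    return [e / total for e in exps]

def motion_update(particles, odom, noise_std, rng):
    """Apply a relative motion (dx, dy, dtheta), expressed in each particle's
    own frame, with Gaussian noise added to every component."""
    dx, dy, dth = odom
    moved = []
    for x, y, th in particles:
        ndx = dx + rng.gauss(0.0, noise_std)
        ndy = dy + rng.gauss(0.0, noise_std)
        nth = th + dth + rng.gauss(0.0, noise_std)
        moved.append((x + ndx * math.cos(th) - ndy * math.sin(th),
                      y + ndx * math.sin(th) + ndy * math.cos(th),
                      nth))
    return moved

def range_log_likelihood(particle, measured_range, sigma=0.5):
    """Hypothetical observation model: Gaussian log-likelihood (up to a
    constant) of a range reading to a landmark at the origin."""
    x, y, _ = particle
    err = math.hypot(x, y) - measured_range
    return -0.5 * (err / sigma) ** 2

def resample(particles, weights, rng):
    """Multinomial resampling; returns new particles with uniform weights."""
    n = len(particles)
    return rng.choices(particles, weights=weights, k=n), [1.0 / n] * n

def pf_step(particles, log_weights, odom, measured_range, rng, noise_std=0.05):
    """One predict / update / resample cycle."""
    particles = motion_update(particles, odom, noise_std, rng)
    log_weights = [lw + range_log_likelihood(p, measured_range)
                   for lw, p in zip(log_weights, particles)]
    return resample(particles, normalize(log_weights), rng)
```

A call such as `pf_step(particles, [0.0] * len(particles), (0.1, 0.0, 0.0), 2.0, random.Random(0))` advances the particle set by one step. In a learned variant, the hand-written likelihood would be replaced by a network output, and the resampling step would need a differentiable substitute so that gradients can flow through the filter.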

Localizing Discriminative Visual Landmarks for Place Recognition

Apr 14, 2019

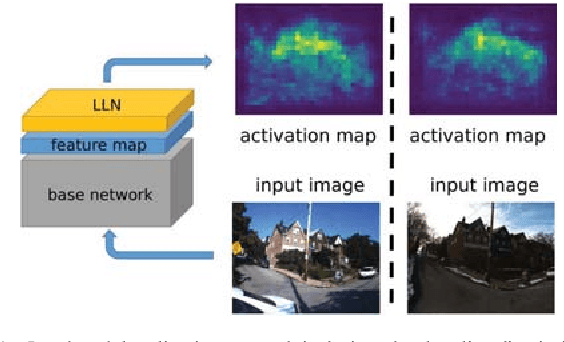

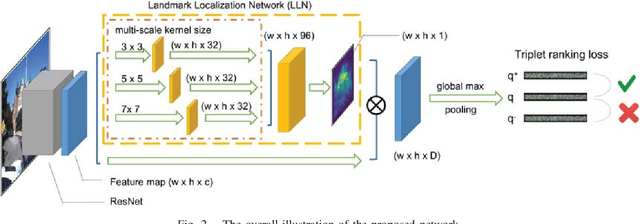

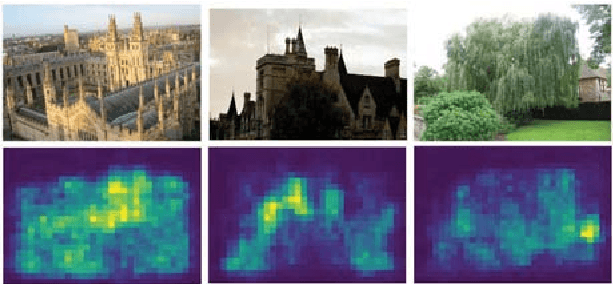

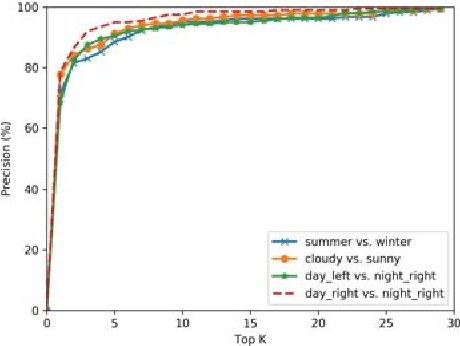

Abstract: We address the problem of visual place recognition under perceptual changes. The fundamental challenge in visual place recognition is generating robust image representations that are not only insensitive to environmental changes but also discriminative across different places. Taking advantage of the feature extraction ability of Convolutional Neural Networks (CNNs), we further investigate how to localize discriminative visual landmarks that positively contribute to the similarity measurement, such as buildings and vegetation. In particular, a Landmark Localization Network (LLN) is designed to indicate which regions of an image are used for discrimination. Detailed experiments are conducted on open-source datasets with varied appearance and viewpoint changes. The proposed approach achieves superior performance compared with state-of-the-art methods.

CubemapSLAM: A Piecewise-Pinhole Monocular Fisheye SLAM System

Feb 27, 2019

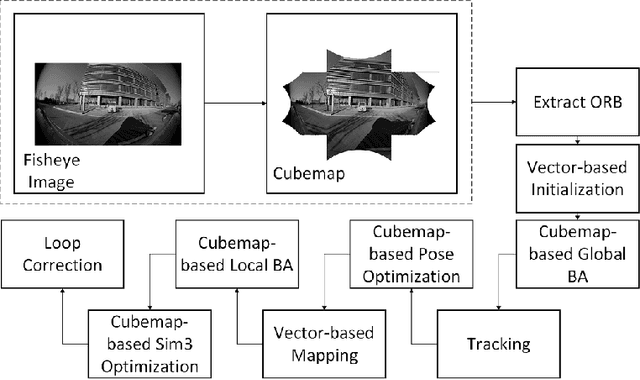

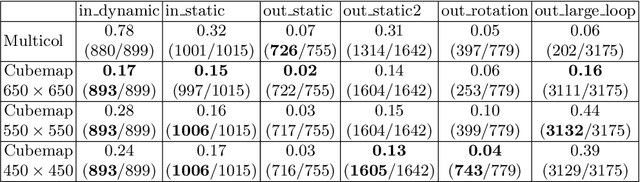

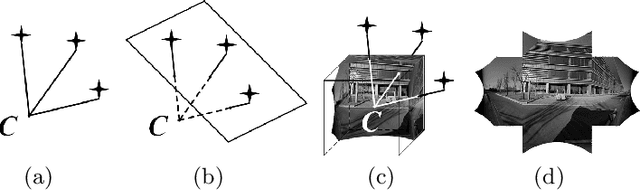

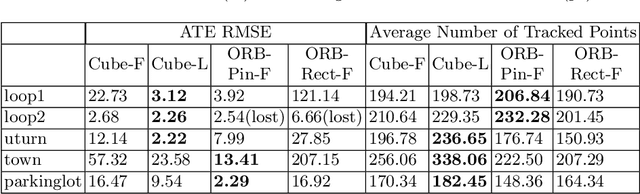

Abstract: We present a real-time feature-based SLAM (Simultaneous Localization and Mapping) system for fisheye cameras with a large field of view (FoV). Large-FoV cameras are beneficial for large-scale outdoor SLAM applications because they increase visual overlap between consecutive frames and capture more pixels belonging to the static parts of the environment. However, current feature-based SLAM systems such as PTAM and ORB-SLAM limit their camera model to pinhole only. To fill this gap, we propose a novel SLAM system with the cubemap model that utilizes the full FoV without introducing distortion from the fisheye lens, which greatly benefits the feature matching pipeline. In the initialization and point triangulation stages, we adopt a unified vector-based representation to efficiently handle matches across multiple faces, and based on this representation we propose and analyze a novel inlier checking metric. In the optimization stage, we design and test a novel multi-pinhole reprojection error metric that outperforms other metrics by a large margin. We evaluate our system comprehensively on a public dataset as well as a self-collected dataset that contains challenging real-world sequences. The results suggest that our system is more robust and accurate than other feature-based fisheye SLAM approaches. The CubemapSLAM system has been released into the public domain.
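The piecewise-pinhole idea can be illustrated with a small sketch that maps a bearing vector to a cube face and a pixel on that face. This is an illustration under stated assumptions, not the CubemapSLAM code: each face is treated as a virtual pinhole camera with a 90° field of view, the camera frame is taken as x right, y down, z forward, and the face names and per-face axis conventions are hypothetical.

```python
def project_to_cubemap(v, face_size):
    """Map a 3D bearing vector v = (x, y, z) to (face, u, v_pix).

    Assumed conventions: camera frame with x right, y down, z forward;
    every face is a pinhole with focal length face_size / 2 and its
    principal point at the face center.
    """
    x, y, z = v
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax == 0 and ay == 0 and az == 0:
        raise ValueError("bearing vector must be non-zero")

    # Pick the face whose axis dominates, then express the vector as
    # (depth, right, down) in that face's own pinhole frame.
    if az >= ax and az >= ay:
        face, depth, right, down = ("front", z, x, y) if z > 0 else ("back", -z, -x, y)
    elif ax >= ay:
        face, depth, right, down = ("right", x, -z, y) if x > 0 else ("left", -x, z, y)
    else:
        face, depth, right, down = ("down", y, x, -z) if y > 0 else ("up", -y, x, z)

    f = face_size / 2.0  # 90-degree FoV: focal length is half the face width
    c = face_size / 2.0  # principal point at the face center
    return face, f * right / depth + c, f * down / depth + c
```

Because the dominant component is used as the depth, the projected pixel always falls within `[0, face_size]` on the selected face, so points that leave one face simply land on a neighboring one rather than being distorted or discarded.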
