Shantanu Chandra

A Multimodal Framework for the Detection of Hateful Memes

Dec 24, 2020

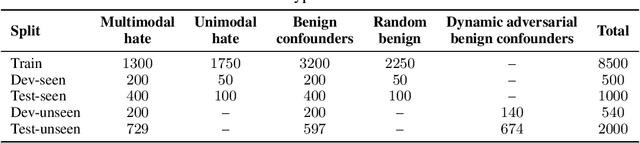

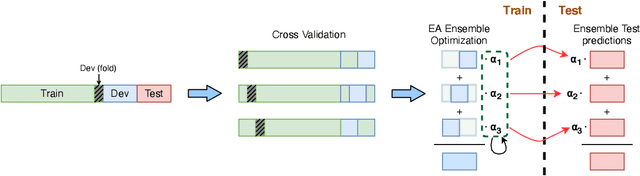

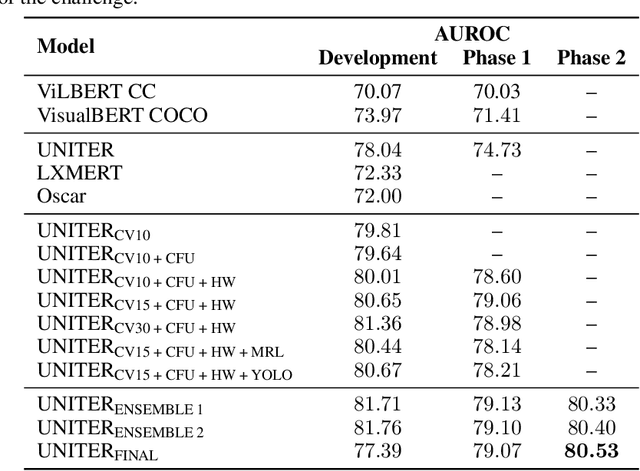

Abstract: An increasingly common expression of online hate speech is multimodal in nature and comes in the form of memes. Designing systems to automatically detect hateful content is of paramount importance if we are to mitigate its undesirable effects on society at large. The detection of multimodal hate speech is an intrinsically difficult and open problem: memes convey a message using both images and text and, hence, require multimodal reasoning and joint visual and language understanding. In this work, we seek to advance this line of research and develop a multimodal framework for the detection of hateful memes. We improve the performance of existing multimodal approaches beyond simple fine-tuning and, among other techniques, show the effectiveness of upsampling contrastive examples to encourage multimodality and of ensemble learning based on cross-validation to improve robustness. We furthermore analyze model misclassifications and discuss a number of hypothesis-driven augmentations and their effects on performance, presenting important implications for future research in the field. Our best approach comprises an ensemble of UNITER-based models and achieves an AUROC score of 80.53, placing us 4th in phase 2 of the 2020 Hateful Memes Challenge organized by Facebook.
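The abstract names three generic ingredients: upsampling contrastive examples, an ensemble built from cross-validation folds, and evaluation by AUROC. The sketch below illustrates those ingredients under stated assumptions and is not the authors' implementation. The helper names, the boolean `is_contrastive` flag, and the choice of averaging fold probabilities (soft voting) are all hypothetical; AUROC is computed as the probability that a randomly chosen positive example is scored above a randomly chosen negative one, with ties counted as one half.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def upsample(examples: Sequence[T], is_contrastive: Sequence[bool], factor: int) -> List[T]:
    """Hypothetical helper: repeat examples flagged as contrastive `factor` times."""
    out: List[T] = []
    for ex, flag in zip(examples, is_contrastive):
        out.extend([ex] * (factor if flag else 1))
    return out


def average_fold_predictions(fold_probs: Sequence[Sequence[float]]) -> List[float]:
    """Soft-voting ensemble: average per-example probabilities from K fold models."""
    k = len(fold_probs)
    n = len(fold_probs[0])
    if any(len(p) != n for p in fold_probs):
        raise ValueError("all fold models must score the same examples")
    return [sum(p[i] for p in fold_probs) / k for i in range(n)]


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUROC as P(score of a random positive > score of a random negative), ties = 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

On toy data with `labels = [1, 0, 1, 0]` and three fold models scoring `[0.9, 0.2, 0.6, 0.4]`, `[0.7, 0.3, 0.5, 0.6]` and `[0.8, 0.1, 0.7, 0.5]`, the second fold alone reaches an AUROC of 0.75, while the averaged ensemble ranks every positive above every negative (AUROC 1.0). The quadratic pairwise loop is chosen for readability; a rank-based formulation scales better on large evaluation sets.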

Graph-based Modeling of Online Communities for Fake News Detection

Sep 14, 2020

Abstract: Over the past few years, there has been a substantial effort towards the automated detection of fake news on social media platforms. Existing research has modeled the structure, style, content, and patterns in the dissemination of online posts, as well as the demographic traits of users who interact with them. However, no attention has been directed towards modeling the properties of the online communities that interact with the posts. In this work, we propose a novel social context-aware fake news detection framework, SAFER, based on graph neural networks (GNNs). The proposed framework aggregates information with respect to: 1) the nature of the content disseminated, 2) the content-sharing behavior of users, and 3) the social network of those users. We furthermore perform a systematic comparison of several GNN models for this task and introduce novel methods based on relational and hyperbolic GNNs, which have not previously been used for user or community modeling within NLP. We empirically demonstrate that our framework yields significant improvements over existing text-based techniques and achieves state-of-the-art results on fake news datasets from two different domains.
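The three information sources listed in the abstract (content, content-sharing behavior, and the users' social network) can be combined in many ways. One plausible minimal sketch, assuming hypothetical names and a deliberately simplified aggregation, is shown below: a single parameter-free message-passing step averages each user's features with those of their neighbors, and an article is then represented by its text embedding concatenated with the mean embedding of the users who shared it. This is not SAFER's actual architecture; a real GNN layer (for example the relational or hyperbolic variants the abstract mentions) would use learned weights and nonlinearities.

```python
from typing import Dict, List, Sequence

Vector = List[float]


def mean_vectors(vectors: Sequence[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    if not vectors:
        raise ValueError("need at least one vector")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def aggregate_neighbors(
    features: Dict[str, Vector], adjacency: Dict[str, List[str]]
) -> Dict[str, Vector]:
    """One parameter-free message-passing step over the (directed) user graph.

    Each user's new vector is the mean of its own features and those of the
    neighbors listed for it in `adjacency`. A trained GNN layer would also
    apply a learned weight matrix and a nonlinearity; both are omitted here.
    """
    return {
        user: mean_vectors([x] + [features[v] for v in adjacency.get(user, [])])
        for user, x in features.items()
    }


def article_representation(
    text_emb: Vector, sharers: List[str], user_emb: Dict[str, Vector]
) -> Vector:
    """Hypothetical fusion: text embedding concatenated with the sharers' mean embedding."""
    community = mean_vectors([user_emb[u] for u in sharers])
    return list(text_emb) + community
```

For example, with user features `{"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}` and adjacency `{"a": ["b"], "b": ["a", "c"], "c": []}`, user `a` becomes `[0.5, 0.5]`, and an article with text embedding `[0.2]` shared by `a` and `c` is represented as `[0.2, 0.75, 0.75]`. Concatenation keeps the text and community signals separate, so a downstream classifier can weigh them independently.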
