Shangqi Lai

Robust Anomaly Detection in O-RAN: Leveraging LLMs against Data Manipulation Attacks

Aug 11, 2025

Abstract:The introduction of 5G and the Open Radio Access Network (O-RAN) architecture has enabled more flexible and intelligent network deployments. However, the increased complexity and openness of these architectures also introduce novel security challenges, such as data manipulation attacks on the semi-standardised Shared Data Layer (SDL) within the O-RAN platform through malicious xApps. In particular, malicious xApps can exploit this vulnerability by introducing subtle Unicode-wise alterations (hypoglyphs) into the data that are being used by traditional machine learning (ML)-based anomaly detection methods. These Unicode-wise manipulations can potentially bypass detection and cause failures in anomaly detection systems based on traditional ML, such as AutoEncoders, which are unable to process hypoglyphed data without crashing. We investigate the use of Large Language Models (LLMs) for anomaly detection within the O-RAN architecture to address this challenge. We demonstrate that LLM-based xApps maintain robust operational performance and are capable of processing manipulated messages without crashing. While initial detection accuracy requires further improvements, our results highlight the robustness of LLMs to adversarial attacks such as hypoglyphs in input data. There is potential to use their adaptability through prompt engineering to further improve the accuracy, although this requires further research. Additionally, we show that LLMs achieve low detection latency (under 0.07 seconds), making them suitable for Near-Real-Time (Near-RT) RIC deployments.

Self-Adaptive and Robust Federated Spectrum Sensing without Benign Majority for Cellular Networks

Jul 16, 2025

Abstract:Advancements in wireless and mobile technologies, including 5G advanced and the envisioned 6G, are driving exponential growth in wireless devices. However, this rapid expansion exacerbates spectrum scarcity, posing a critical challenge. Dynamic spectrum allocation (DSA)--which relies on sensing and dynamically sharing spectrum--has emerged as an essential solution to address this issue. While machine learning (ML) models hold significant potential for improving spectrum sensing, their adoption in centralized ML-based DSA systems is limited by privacy concerns, bandwidth constraints, and regulatory challenges. To overcome these limitations, distributed ML-based approaches such as Federated Learning (FL) offer promising alternatives. This work addresses two key challenges in FL-based spectrum sensing (FLSS). First, the scarcity of labeled data for training FL models in practical spectrum sensing scenarios is tackled with a semi-supervised FL approach, combined with energy detection, enabling model training on unlabeled datasets. Second, we examine the security vulnerabilities of FLSS, focusing on the impact of data poisoning attacks. Our analysis highlights the shortcomings of existing majority-based defenses in countering such attacks. To address these vulnerabilities, we propose a novel defense mechanism inspired by vaccination, which effectively mitigates data poisoning attacks without relying on majority-based assumptions. Extensive experiments on both synthetic and real-world datasets validate our solutions, demonstrating that FLSS can achieve near-perfect accuracy on unlabeled datasets and maintain Byzantine robustness against both targeted and untargeted data poisoning attacks, even when a significant proportion of participants are malicious.
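
The abstract above mentions combining semi-supervised FL with energy detection so that models can train on unlabeled data. As a rough illustration only, the sketch below shows how a basic energy detector could assign pseudo-labels to unlabeled sample windows. The function names, the `margin` parameter, and the synthetic signal are assumptions made for this example and are not taken from the paper.

```python
import random


def energy_detect(samples, threshold):
    """Return 1 (channel occupied) if the mean sample energy exceeds threshold, else 0."""
    energy = sum(abs(s) ** 2 for s in samples) / len(samples)
    return 1 if energy > threshold else 0


def pseudo_label(windows, noise_power, margin=2.0):
    """Label each window by comparing its energy to margin * noise_power (hypothetical rule)."""
    threshold = margin * noise_power
    return [energy_detect(w, threshold) for w in windows]


# Synthetic data: complex Gaussian noise with unit variance per component,
# so the expected noise power is about 2.0.
rng = random.Random(0)
noise_only = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(256)]
with_signal = [n + 3.0 for n in noise_only]  # constant-amplitude "signal" added to the noise

labels = pseudo_label([noise_only, with_signal], noise_power=2.0)
print(labels)
```

Labels produced this way could then stand in for ground truth during local training; how the paper actually integrates energy detection into the FL pipeline is not specified in the abstract.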

Security and Privacy of 6G Federated Learning-enabled Dynamic Spectrum Sharing

Jun 18, 2024

Abstract:Spectrum sharing is increasingly vital in 6G wireless communication, facilitating dynamic access to unused spectrum holes. Recently, there has been a significant shift towards employing machine learning (ML) techniques for sensing spectrum holes. In this context, federated learning (FL)-enabled spectrum sensing technology has garnered wide attention, allowing for the construction of an aggregated ML model without disclosing the private spectrum sensing information of wireless user devices. However, the integrity of collaborative training and the privacy of spectrum information from local users have remained largely unexplored. This article first examines the latest developments in FL-enabled spectrum sharing for prospective 6G scenarios. It then identifies practical attack vectors in 6G to illustrate potential AI-powered security and privacy threats in these contexts. Finally, the study outlines future directions, including practical defense challenges and guidelines.

Aggregation Service for Federated Learning: An Efficient, Secure, and More Resilient Realization

Feb 04, 2022

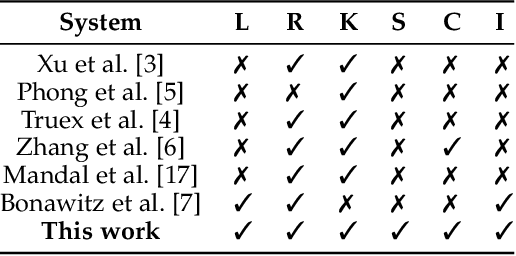

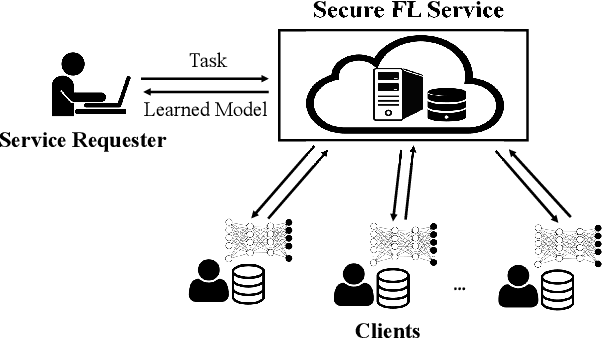

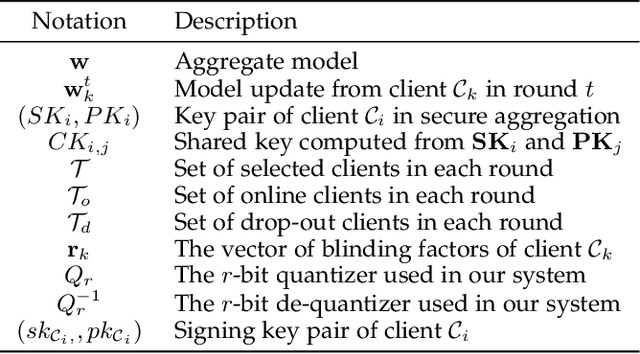

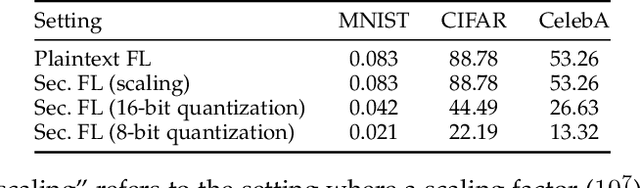

Abstract:Federated learning has recently emerged as a paradigm promising the benefits of harnessing rich data from diverse sources to train high quality models, with the salient features that training datasets never leave local devices. Only model updates are locally computed and shared for aggregation to produce a global model. While federated learning greatly alleviates the privacy concerns as opposed to learning with centralized data, sharing model updates still poses privacy risks. In this paper, we present a system design which offers efficient protection of individual model updates throughout the learning procedure, allowing clients to only provide obscured model updates while a cloud server can still perform the aggregation. Our federated learning system first departs from prior works by supporting lightweight encryption and aggregation, and resilience against drop-out clients with no impact on their participation in future rounds. Meanwhile, prior work largely overlooks bandwidth efficiency optimization in the ciphertext domain and the support of security against an actively adversarial cloud server, which we also fully explore in this paper and provide effective and efficient mechanisms. Extensive experiments over several benchmark datasets (MNIST, CIFAR-10, and CelebA) show our system achieves accuracy comparable to the plaintext baseline, with practical performance.
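
To make the idea of aggregating obscured model updates concrete, here is a minimal sketch of generic pairwise additive masking, in which each pair of clients shares a random mask that one adds and the other subtracts, so the masks cancel in the sum. This is a textbook-style illustration and not the paper's scheme: the `MODULUS` value, the function names, and the centrally seeded mask generation are all assumptions, and the sketch does not handle drop-out clients or an actively adversarial server.

```python
import random

MODULUS = 2 ** 32  # assumed working modulus for integer-encoded updates


def pairwise_masks(num_clients, dim, seed=0):
    """Build one mask per client so that all masks sum to zero modulo MODULUS.

    A real deployment would derive each pair's mask from a shared secret;
    a single seeded generator is used here only to keep the sketch short.
    """
    rng = random.Random(seed)
    masks = [[0] * dim for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] = (masks[i][k] + shared[k]) % MODULUS
                masks[j][k] = (masks[j][k] - shared[k]) % MODULUS
    return masks


def obscure(update, mask):
    """Client side: hide an integer-encoded update behind its mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(obscured_updates):
    """Server side: sum the obscured updates; the pairwise masks cancel out."""
    return [sum(col) % MODULUS for col in zip(*obscured_updates)]


updates = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
masks = pairwise_masks(len(updates), dim=3)
obscured = [obscure(u, m) for u, m in zip(updates, masks)]
total = aggregate(obscured)
print(total)
```

The server only ever sees the obscured vectors, yet the aggregate equals the element-wise sum of the plaintext updates.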

Enabling Efficient Privacy-Assured Outlier Detection over Encrypted Incremental Datasets

Nov 14, 2019

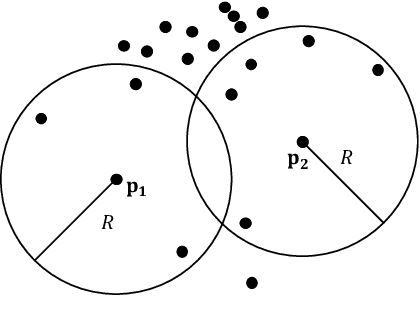

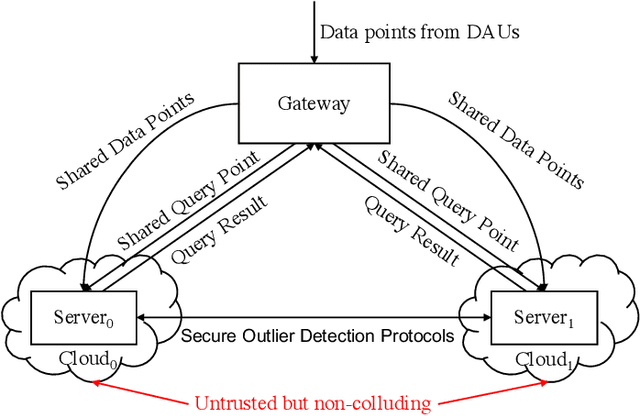

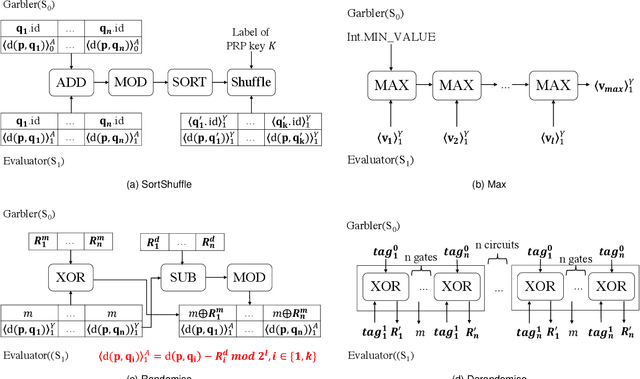

Abstract:Outlier detection is widely used in practice to track anomalies in incremental datasets such as network traffic and system logs. However, these datasets often involve sensitive information, and sharing the data with third parties for anomaly detection raises privacy concerns. In this paper, we present a privacy-preserving outlier detection protocol (PPOD) for incremental datasets. The protocol decomposes the outlier detection algorithm into several phases and identifies the necessary cryptographic operations in each phase. It realises several cryptographic modules via efficient and interchangeable protocols to support these cryptographic operations and composes them in the overall protocol to enable outlier detection over encrypted datasets. To support efficient updates, it integrates the sliding window model to periodically evict expired data in order to maintain a constant update time. We build a prototype of PPOD and systematically evaluate the cryptographic modules and the overall protocol under various parameter settings. Our results show that PPOD can handle encrypted incremental datasets with a moderate computation and communication cost.
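
The sliding window model mentioned above can be illustrated in plaintext, without any of the cryptographic machinery. The sketch below keeps running sums so that each update costs constant time, and evicts the oldest point once the window is full. The z-score rule, the class name, and the thresholds are assumptions chosen for illustration; the abstract does not state which outlier detection algorithm PPOD decomposes.

```python
import math
from collections import deque


class SlidingWindowDetector:
    """Plaintext z-score detector over a fixed-size sliding window (illustrative only)."""

    def __init__(self, window_size, z_threshold=3.0):
        self.window = deque()
        self.window_size = window_size
        self.z_threshold = z_threshold
        self.total = 0.0     # running sum of values in the window
        self.total_sq = 0.0  # running sum of squared values in the window

    def update(self, value):
        """Score value against the current window, then insert it and evict expired data."""
        is_outlier = False
        n = len(self.window)
        if n >= 2:
            mean = self.total / n
            variance = max(self.total_sq / n - mean * mean, 0.0)
            std = math.sqrt(variance)
            if std > 0 and abs(value - mean) / std > self.z_threshold:
                is_outlier = True
        # Insert the new point and update the running sums in O(1).
        self.window.append(value)
        self.total += value
        self.total_sq += value * value
        # Evict the oldest point once the window exceeds its size.
        if len(self.window) > self.window_size:
            old = self.window.popleft()
            self.total -= old
            self.total_sq -= old * old
        return is_outlier


detector = SlidingWindowDetector(window_size=5)
stream = [10, 11, 10, 9, 10, 50, 10]
flags = [detector.update(x) for x in stream]
print(flags)
```

In this sketch a flagged point still enters the window, which inflates the variance until it is evicted; a different design could exclude flagged points instead.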
