Seth Nabarro

Learning in Deep Factor Graphs with Gaussian Belief Propagation

Nov 24, 2023

Abstract: We propose an approach to do learning in Gaussian factor graphs. We treat all relevant quantities (inputs, outputs, parameters, latents) as random variables in a graphical model, and view both training and prediction as inference problems with different observed nodes. Our experiments show that these problems can be efficiently solved with belief propagation (BP), whose updates are inherently local, presenting exciting opportunities for distributed and asynchronous training. Our approach can be scaled to deep networks and provides a natural means to do continual learning: use the BP-estimated parameter marginals of the current task as parameter priors for the next. On a video denoising task we demonstrate the benefit of learnable parameters over a classical factor graph approach and we show encouraging performance of deep factor graphs for continual image classification on MNIST.
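The continual-learning recipe in this abstract (the parameter marginals from one task become the priors for the next) can be illustrated in the simplest Gaussian setting. The sketch below is not the paper's factor-graph method: it assumes a single scalar parameter, a Gaussian prior, and Gaussian observation noise with known variance. The function name `gaussian_update` and all numbers are hypothetical.

```python
def gaussian_update(prior_mean, prior_var, observations, noise_var):
    """Conjugate update for a scalar Gaussian mean with known noise variance."""
    # Work in information form: precisions and precision-weighted means add.
    precision = 1.0 / prior_var + len(observations) / noise_var
    info = prior_mean / prior_var + sum(observations) / noise_var
    post_var = 1.0 / precision
    return info * post_var, post_var


# Hypothetical observations from two tasks that share one parameter.
task_a = [1.2, 0.8, 1.1]
task_b = [0.9, 1.3]

# Continual learning: the posterior after task A is the prior for task B.
mean_a, var_a = gaussian_update(0.0, 10.0, task_a, noise_var=0.5)
mean_seq, var_seq = gaussian_update(mean_a, var_a, task_b, noise_var=0.5)

# Reference: condition on both tasks at once.
mean_joint, var_joint = gaussian_update(0.0, 10.0, task_a + task_b, noise_var=0.5)

print(mean_seq, var_seq)
print(mean_joint, var_joint)
```

In this exactly conjugate toy case the sequential and joint posteriors coincide up to floating-point error. In a deep factor graph the marginals are only approximate, so the equivalence should not be expected to hold exactly.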

Data augmentation in Bayesian neural networks and the cold posterior effect

Jun 10, 2021

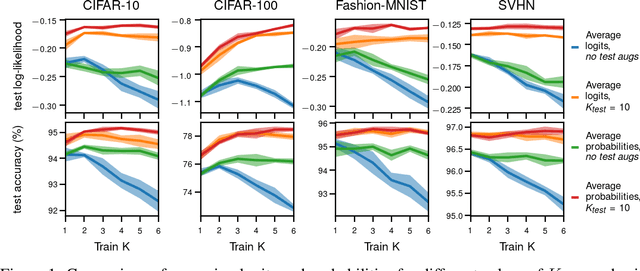

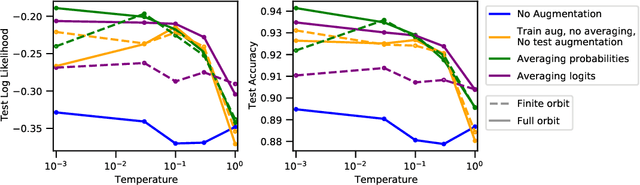

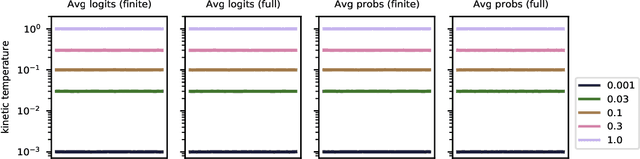

Abstract: Data augmentation is a highly effective approach for improving performance in deep neural networks. The standard view is that it creates an enlarged dataset by adding synthetic data, which raises a problem when combining it with Bayesian inference: how much data are we really conditioning on? This question is particularly relevant to recent observations linking data augmentation to the cold posterior effect. We investigate various principled ways of finding a log-likelihood for augmented datasets. Our approach prescribes augmenting the same underlying image multiple times, both at test and train-time, and averaging either the logits or the predictive probabilities. Empirically, we observe the best performance with averaging probabilities. While there are interactions with the cold posterior effect, neither averaging logits nor averaging probabilities eliminates it.
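The two averaging schemes named in this abstract can give different predictions for the same set of augmentations. The following is a minimal sketch, assuming a two-class problem and made-up logits for three augmentations of one image; the helper names `softmax` and `average` are illustrative and not taken from the paper.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def average(vectors):
    """Element-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


# Hypothetical logits for three augmentations of one image, two classes.
aug_logits = [[3.0, 0.0], [-1.0, 0.0], [-1.0, 0.0]]

# Scheme 1: average the logits, then apply softmax once.
probs_from_avg_logits = softmax(average(aug_logits))

# Scheme 2: apply softmax per augmentation, then average the probabilities.
avg_probs = average([softmax(z) for z in aug_logits])

print(probs_from_avg_logits)  # class 0 is favoured
print(avg_probs)              # class 1 is narrowly favoured
```

With these particular numbers one confident augmentation dominates the averaged logits, while the averaged probabilities follow the two less confident augmentations, so the predicted class differs between the schemes.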

Hardware-accelerated Simulation-based Inference of Stochastic Epidemiology Models for COVID-19

Dec 23, 2020

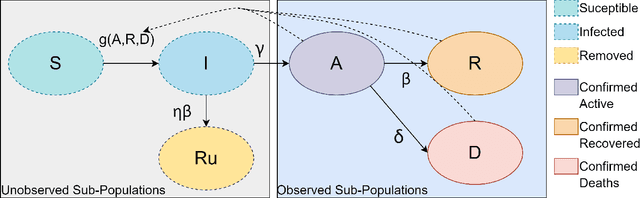

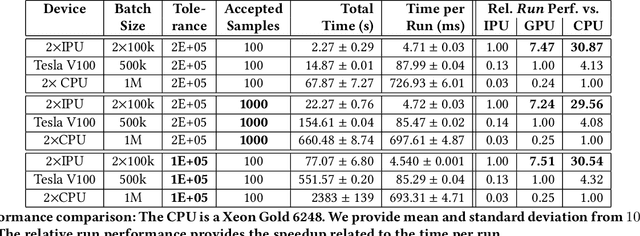

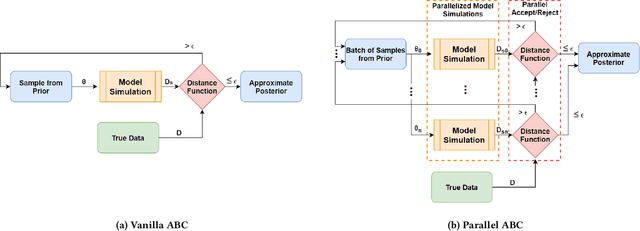

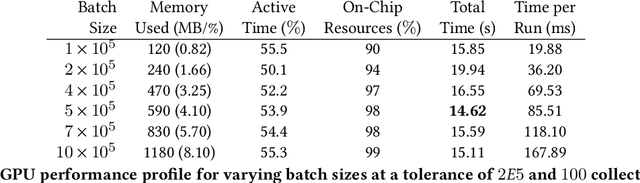

Abstract: Epidemiology models are central in understanding and controlling large-scale pandemics. Several epidemiology models require simulation-based inference such as Approximate Bayesian Computation (ABC) to fit their parameters to observations. ABC inference is highly amenable to efficient hardware acceleration. In this work, we develop parallel ABC inference of a stochastic epidemiology model for COVID-19. The statistical inference framework is implemented and compared on Intel Xeon CPU, NVIDIA Tesla V100 GPU and the Graphcore Mk1 IPU, and the results are discussed in the context of their computational architectures. Results show that GPUs are 4x and IPUs are 30x faster than Xeon CPUs. Extensive performance analysis indicates that the difference between IPU and GPU can be attributed to higher communication bandwidth, closeness of memory to compute, and higher compute power in the IPU. The proposed framework scales across 16 IPUs, with scaling overhead not exceeding 8% for the experiments performed. We present an example of our framework in practice, performing inference on the epidemiology model across three countries, and giving a brief overview of the results.
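For readers unfamiliar with ABC, the basic rejection variant can be sketched in a few lines. This is a toy illustration only, not the paper's epidemiology model or its accelerated implementation: it assumes a hypothetical simulator in which each of `n_contacts` contacts is infected independently with probability `p`, a uniform prior on `p`, and an absolute-difference acceptance rule.

```python
import random


def simulate_infections(p, n_contacts, rng):
    """Toy stochastic simulator: each contact is infected with probability p."""
    return sum(1 for _ in range(n_contacts) if rng.random() < p)


def abc_rejection(observed, n_contacts, n_samples, tolerance, rng):
    """Keep prior draws whose simulated outcome lands close to the observation."""
    accepted = []
    for _ in range(n_samples):
        p = rng.random()  # draw a candidate from the Uniform(0, 1) prior
        simulated = simulate_infections(p, n_contacts, rng)
        if abs(simulated - observed) <= tolerance:
            accepted.append(p)
    return accepted


rng = random.Random(0)  # fixed seed so the run is repeatable
posterior_samples = abc_rejection(
    observed=30, n_contacts=100, n_samples=5000, tolerance=2, rng=rng
)
posterior_mean = sum(posterior_samples) / len(posterior_samples)
print(len(posterior_samples), posterior_mean)
```

Each candidate is drawn, simulated, and tested independently of the others, which is one reason this style of inference maps well onto parallel hardware.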

Spatiotemporal Prediction of Ambulance Demand using Gaussian Process Regression

Jun 28, 2018

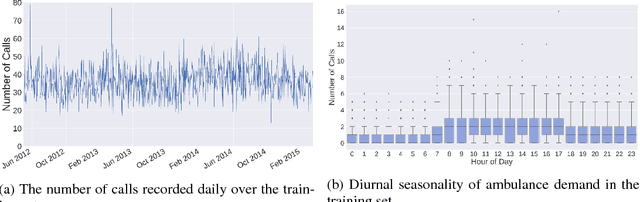

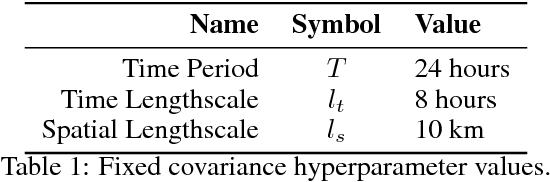

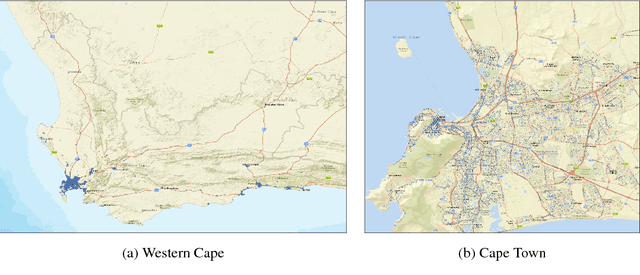

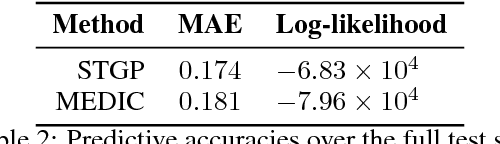

Abstract: Accurately predicting when and where ambulance call-outs occur can reduce response times and ensure the patient receives urgent care sooner. Here we present a novel method for ambulance demand prediction using Gaussian process regression (GPR) in time and geographic space. The method exhibits superior accuracy to MEDIC, a method which has been used in industry. The use of GPR has additional benefits such as the quantification of uncertainty with each prediction, the choice of kernel functions to encode prior knowledge and the ability to capture spatial correlation. Measures to increase the utility of GPR in the current context, with large training sets and a Poisson-distributed output, are outlined.
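The uncertainty quantification mentioned in this abstract can be seen in a very small GPR example. The sketch below makes several assumptions that are not from the paper: a one-dimensional input, a zero-mean prior, an RBF kernel with unit length scale, Gaussian (not Poisson) observation noise, and made-up call counts. The helper names `rbf`, `solve`, and `gp_predict` are hypothetical.

```python
import math


def rbf(x1, x2, length_scale=1.0, variance=1.0):
    """Squared-exponential (RBF) kernel."""
    return variance * math.exp(-0.5 * ((x1 - x2) / length_scale) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def gp_predict(x_train, y_train, x_test, noise_var=0.1):
    """Posterior mean and latent variance of a zero-mean GP at one test input."""
    K = [[rbf(a, b) + (noise_var if i == j else 0.0)
          for j, b in enumerate(x_train)] for i, a in enumerate(x_train)]
    k_star = [rbf(x_test, a) for a in x_train]
    alpha = solve(K, y_train)  # (K + noise * I)^-1 y
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = solve(K, k_star)       # (K + noise * I)^-1 k_star
    var = rbf(x_test, x_test) - sum(k * w for k, w in zip(k_star, v))
    return mean, var


# Hypothetical data: hour of day versus number of call-outs.
hours = [0.0, 1.0, 2.0, 3.0]
calls = [2.0, 3.0, 5.0, 4.0]

mean_near, var_near = gp_predict(hours, calls, 1.5)   # inside the data range
mean_far, var_far = gp_predict(hours, calls, 10.0)    # far from any data
print(mean_near, var_near)
print(mean_far, var_far)
```

Far from the training inputs the predictive variance returns to the prior variance and the mean returns to the prior mean of zero, whereas between observations the variance shrinks. A practical model for count data would need a likelihood suited to Poisson-distributed outputs, as the abstract notes.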
