Seiji Okajima

Inter-domain Multi-relational Link Prediction

Jul 09, 2021

Abstract: Multi-relational graphs are a ubiquitous and important data structure, allowing flexible representation of multiple types of interactions and relations between entities. As with other graph-structured data, link prediction is one of the most important tasks on multi-relational graphs and is often used for knowledge completion. When related graphs coexist, it is of great benefit to build a larger graph by integrating the smaller ones. The integration requires predicting hidden relational connections between entities belonging to different graphs (inter-domain link prediction). However, this poses a real challenge to existing methods, which are designed exclusively for link prediction between entities of the same graph (intra-domain link prediction). In this study, we propose a new approach to tackle the inter-domain link prediction problem by softly aligning the entity distributions between different domains with optimal transport and maximum mean discrepancy regularizers. Experiments on real-world datasets show that the optimal transport regularizer is beneficial and considerably improves the performance of baseline methods.

Crowdsourcing Evaluation of Saliency-based XAI Methods

Jun 27, 2021

Abstract: Understanding the reasons behind the predictions made by deep neural networks is critical for gaining human trust in many important applications, which is reflected in the increasing demand for explainability in AI (XAI) in recent years. Saliency-based feature attribution methods, which highlight the parts of an image that contribute to a classifier's decision, are often used as XAI methods, especially in the field of computer vision. To compare various saliency-based XAI methods quantitatively, several automated evaluation schemes have been proposed; however, there is no guarantee that such automated evaluation metrics correctly evaluate explainability, and a high rating by an automated evaluation scheme does not necessarily mean high explainability for humans. In this study, instead of automated evaluation, we propose a new human-based evaluation scheme that uses crowdsourcing to evaluate XAI methods. Our method is inspired by a human computation game, "Peek-a-boom", and can efficiently compare different XAI methods by exploiting the power of crowds. We evaluate the saliency maps of various XAI methods on two datasets with automated and crowd-based evaluation schemes. Our experiments show that the results of our crowd-based evaluation scheme differ from those of automated evaluation schemes. In addition, we regard the crowd-based evaluation results as ground truth and provide a quantitative performance measure to compare different automated evaluation schemes. We also discuss the impact of crowd workers on the results and show that the varying ability of crowd workers does not significantly impact the results.

Report on the First Knowledge Graph Reasoning Challenge 2018 -- Toward the eXplainable AI System

Aug 22, 2019

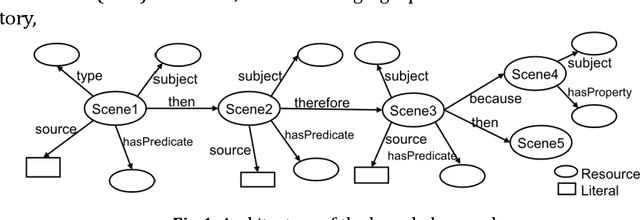

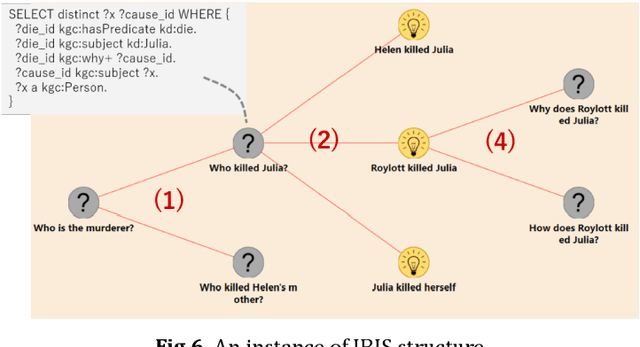

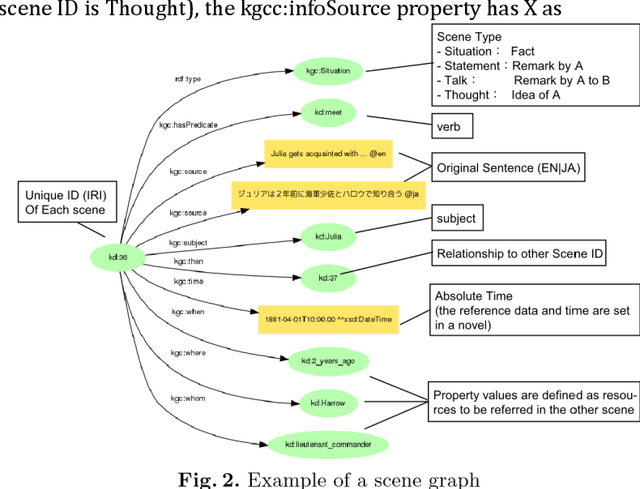

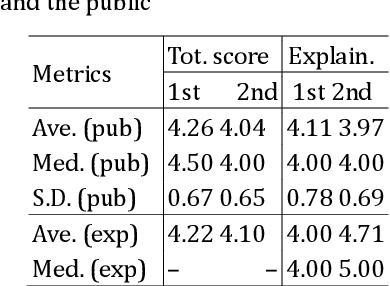

Abstract: A new challenge for knowledge graph reasoning started in 2018. Deep learning has promoted the application of artificial intelligence (AI) techniques to a wide variety of social problems. Accordingly, being able to explain the reason for an AI decision is becoming important to ensure the secure and safe use of AI techniques. Thus, we, the Special Interest Group on Semantic Web and Ontology of the Japanese Society for AI, organized a challenge calling for techniques that reason about and/or estimate which characters are criminals while providing a reasonable explanation, based on an open knowledge graph of a well-known Sherlock Holmes mystery story. This paper presents a summary report of the first challenge held in 2018, including the knowledge graph construction, the techniques proposed for reasoning and/or estimation, the evaluation metrics, and the results. The first prize went to an approach that formalized the problem as a constraint satisfaction problem and solved it using a lightweight formal method; the second prize went to an approach that used SPARQL and rules; the best resource prize went to a submission that constructed word embeddings of characters from all sentences of the Sherlock Holmes novels; and the best idea prize went to a multi-agent discussion model. We conclude this paper with the plans and issues for the next challenge in 2019.
