Satoshi Funabashi

TaSA: Two-Phased Deep Predictive Learning of Tactile Sensory Attenuation for Improving In-Grasp Manipulation

Feb 05, 2026

Abstract:Humans can achieve diverse in-hand manipulations, such as object pinching and tool use, which often involve simultaneous contact between the object and multiple fingers. This is still an open issue for robotic hands because such dexterous manipulation requires distinguishing between tactile sensations generated by their self-contact and those arising from external contact. Otherwise, object/robot breakage happens due to contacts/collisions. Indeed, most approaches ignore self-contact altogether, by constraining motion to avoid/ignore self-tactile information during contact. While this reduces complexity, it also limits generalization to real-world scenarios where self-contact is inevitable. Humans overcome this challenge through self-touch perception, using predictive mechanisms that anticipate the tactile consequences of their own motion, through a principle called sensory attenuation, where the nervous system differentiates predictable self-touch signals, allowing novel object stimuli to stand out as relevant. Deriving from this, we introduce TaSA, a two-phased deep predictive learning framework. In the first phase, TaSA explicitly learns self-touch dynamics, modeling how a robot's own actions generate tactile feedback. In the second phase, this learned model is incorporated into the motion learning phase, to emphasize object contact signals during manipulation. We evaluate TaSA on a set of insertion tasks, which demand fine tactile discrimination: inserting a pencil lead into a mechanical pencil, inserting coins into a slot, and fixing a paper clip onto a sheet of paper, with various orientations, positions, and sizes. Across all tasks, policies trained with TaSA achieve significantly higher success rates than baseline methods, demonstrating that structured tactile perception with self-touch based on sensory attenuation is critical for dexterous robotic manipulation.
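The sensory-attenuation idea in the abstract above can be illustrated with a minimal sketch. It assumes the simplest possible mechanism: a prediction of the self-touch signal is subtracted from the measured tactile signal, so that only unpredicted (object) contact remains. The abstract does not state how TaSA incorporates its learned self-touch model, so the function `attenuate_self_touch`, the `gain` parameter, and the example values are hypothetical and are not the authors' implementation.

```python
from typing import List


def attenuate_self_touch(
    measured: List[float],
    predicted_self: List[float],
    gain: float = 1.0,
) -> List[float]:
    """Return the tactile residual after removing predicted self-touch.

    Hypothetical illustration only: the subtraction and the `gain`
    parameter are assumptions, not the mechanism used in TaSA.
    """
    if len(measured) != len(predicted_self):
        raise ValueError("signals must have the same length")
    return [m - gain * p for m, p in zip(measured, predicted_self)]


# Four taxel readings; the first two are fully explained by self-contact,
# the third carries extra force that the self-touch prediction does not cover.
measured = [0.5, 0.25, 1.0, 0.0]
predicted_self = [0.5, 0.25, 0.25, 0.0]

residual = attenuate_self_touch(measured, predicted_self)
print(residual)  # [0.0, 0.0, 0.75, 0.0]
```

In this toy case only the third taxel keeps a non-zero value, which is the sense in which predictable self-touch is suppressed and unexpected contact stands out.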

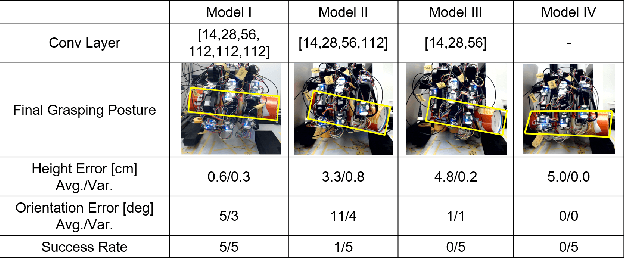

Focused Blind Switching Manipulation Based on Constrained and Regional Touch States of Multi-Fingered Hand Using Deep Learning

Mar 10, 2025

Abstract:To achieve a desired grasping posture (including object position and orientation), multi-finger motions need to be conducted according to the current touch state. Specifically, when subtle changes happen during correcting the object state, not only proprioception but also tactile information from the entire hand can be beneficial. However, switching motions with high-DOFs of multiple fingers and abundant tactile information is still challenging. In this study, we propose a loss function with constraints of touch states and an attention mechanism for focusing on important modalities depending on the touch states. The policy model is AE-LSTM which consists of Autoencoder (AE) which compresses abundant tactile information and Long Short-Term Memory (LSTM) which switches the motion depending on the touch states. Motion for cap-opening was chosen as a target task which consists of subtasks of sliding an object and opening its cap. As a result, the proposed method achieved the best success rates with a variety of objects for real time cap-opening manipulation. Furthermore, we could confirm that the proposed model acquired the features of each subtask and attention on specific modalities.

FingerTac -- An Interchangeable and Wearable Tactile Sensor for the Fingertips of Human and Robot Hands

Oct 13, 2023

Abstract:Skill transfer from humans to robots is challenging. Presently, many researchers focus on capturing only position or joint angle data from humans to teach the robots. Even though this approach has yielded impressive results for grasping applications, reconstructing motion for object handling or fine manipulation from a human hand to a robot hand has been sparsely explored. Humans use tactile feedback to adjust their motion to various objects, but capturing and reproducing the applied forces is an open research question. In this paper we introduce a wearable fingertip tactile sensor, which captures the distributed 3-axis force vectors on the fingertip. The fingertip tactile sensor is interchangeable between the human hand and the robot hand, meaning that it can also be assembled to fit on a robot hand such as the Allegro hand. This paper presents the structural aspects of the sensor as well as the methodology and approach used to design, manufacture, and calibrate the sensor. The sensor is able to measure forces accurately with a mean absolute error of 0.21, 0.16, and 0.44 Newtons in X, Y, and Z directions, respectively.
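For readers unfamiliar with the metric quoted above, the following sketch shows how a per-axis mean absolute error can be computed from paired 3-axis force readings. The function name `per_axis_mae` and the sample values are made up for illustration; they are not the paper's calibration data or procedure.

```python
from typing import List, Tuple

Force = Tuple[float, float, float]  # (X, Y, Z) force in Newtons


def per_axis_mae(measured: List[Force], reference: List[Force]) -> Force:
    """Mean absolute error per axis between sensor and reference forces."""
    if not measured or len(measured) != len(reference):
        raise ValueError("need two non-empty lists of equal length")
    n = len(measured)
    x, y, z = (
        sum(abs(m[axis] - r[axis]) for m, r in zip(measured, reference)) / n
        for axis in range(3)
    )
    return (x, y, z)


# Hypothetical readings: sensor output vs. a reference force gauge.
measured = [(1.0, 2.0, 3.0), (2.0, 2.0, 5.0)]
reference = [(0.5, 2.0, 4.0), (2.5, 1.0, 4.0)]

mae = per_axis_mae(measured, reference)
print(mae)  # (0.5, 0.5, 1.0)
```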

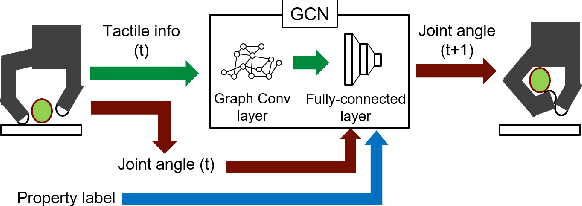

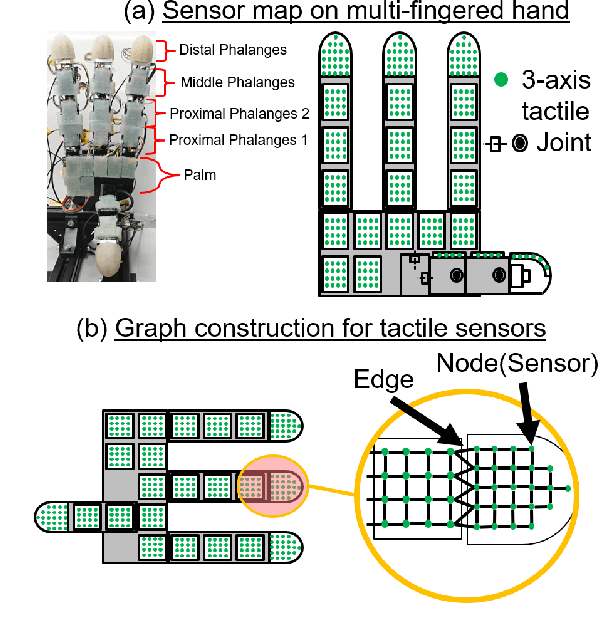

Multi-Fingered In-Hand Manipulation with Various Object Properties Using Graph Convolutional Networks and Distributed Tactile Sensors

May 09, 2022

Abstract:Multi-fingered hands could be used to achieve many dexterous manipulation tasks, similarly to humans, and tactile sensing could enhance the manipulation stability for a variety of objects. However, tactile sensors on multi-fingered hands have a variety of sizes and shapes. Convolutional neural networks (CNN) can be useful for processing tactile information, but the information from multi-fingered hands needs an arbitrary pre-processing, as CNNs require a rectangularly shaped input, which may lead to unstable results. Therefore, how to process such complex shaped tactile information and utilize it for achieving manipulation skills is still an open issue. This paper presents a control method based on a graph convolutional network (GCN) which extracts geodesical features from the tactile data with complicated sensor alignments. Moreover, object property labels are provided to the GCN to adjust in-hand manipulation motions. Distributed tri-axial tactile sensors are mounted on the fingertips, finger phalanges and palm of an Allegro hand, resulting in 1152 tactile measurements. Training data is collected with a data-glove to transfer human dexterous manipulation directly to the robot hand. The GCN achieved high success rates for in-hand manipulation. We also confirmed that fragile objects were deformed less when correct object labels were provided to the GCN. When visualizing the activation of the GCN with a PCA, we verified that the network acquired geodesical features. Our method achieved stable manipulation even when an experimenter pulled a grasped object and for untrained objects.
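To make the contrast with CNNs concrete, here is a minimal sketch of one graph-convolution step over tactile sensors. It assumes each taxel is a node carrying a 3-axis force vector, edges connect physically adjacent taxels, and the layer averages features over a node and its neighbors before a linear map and ReLU. The layer form, the function `graph_conv`, and the three-taxel chain are illustrative assumptions; the abstract does not specify the GCN architecture used in the paper.

```python
from typing import List


def graph_conv(
    features: List[List[float]],
    neighbors: List[List[int]],
    weight: List[List[float]],
) -> List[List[float]]:
    """One mean-aggregation graph convolution with ReLU.

    features:  one feature vector per node (here, a 3-axis force per taxel)
    neighbors: adjacency list, neighbors[i] holds the nodes adjacent to i
    weight:    in_dim x out_dim matrix (hypothetical, normally learned)
    """
    out = []
    for i, feat in enumerate(features):
        group = [i] + neighbors[i]  # include the node itself (self-loop)
        mean = [
            sum(features[j][k] for j in group) / len(group)
            for k in range(len(feat))
        ]
        row = [
            max(0.0, sum(mean[k] * weight[k][o] for k in range(len(mean))))
            for o in range(len(weight[0]))
        ]
        out.append(row)
    return out


# Three taxels in a chain 0-1-2; only taxel 0 senses a normal force.
features = [[0.0, 0.0, 3.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
neighbors = [[1], [0, 2], [1]]
weight = [[1.0], [1.0], [1.0]]  # 3 inputs -> 1 output

out = graph_conv(features, neighbors, weight)
print(out)  # [[1.5], [1.0], [0.0]]
```

Because the layer only needs an adjacency list, sensors of different sizes and shapes can share one network without being forced into a rectangular grid. After a single step the force at taxel 0 has reached its direct neighbor but not taxel 2; stacking layers would spread information further along the sensor surface.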

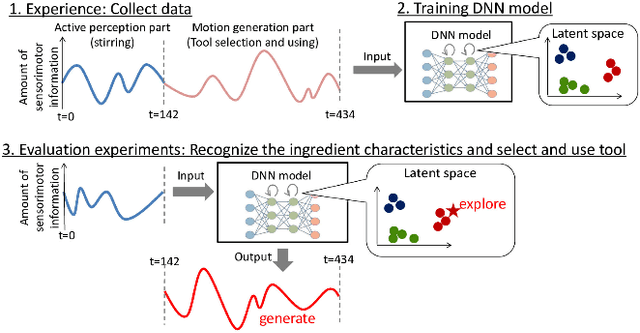

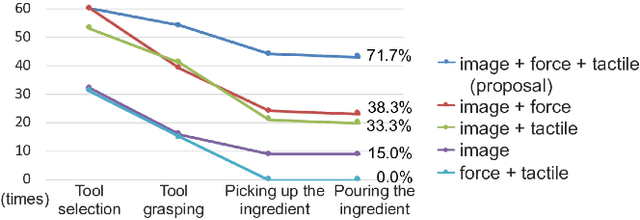

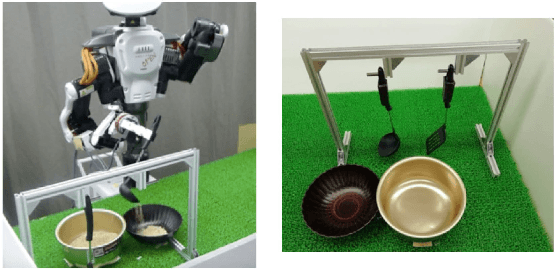

How to select and use tools? : Active Perception of Target Objects Using Multimodal Deep Learning

Jun 04, 2021

Abstract:Selection of appropriate tools and use of them when performing daily tasks is a critical function for introducing robots for domestic applications. In previous studies, however, adaptability to target objects was limited, making it difficult to accordingly change tools and adjust actions. To manipulate various objects with tools, robots must both understand tool functions and recognize object characteristics to discern a tool-object-action relation. We focus on active perception using multimodal sensorimotor data while a robot interacts with objects, and allow the robot to recognize their extrinsic and intrinsic characteristics. We construct a deep neural network (DNN) model that learns to recognize object characteristics, acquires tool-object-action relations, and generates motions for tool selection and handling. As an example tool-use situation, the robot performs an ingredients transfer task, using a turner or ladle to transfer an ingredient from a pot to a bowl. The results confirm that the robot recognizes object characteristics and servings even when the target ingredients are unknown. We also examine the contributions of images, force, and tactile data and show that learning a variety of multimodal information results in rich perception for tool use.

* Best Paper Award of Cognitive Robotics in ICRA2021; IEEE Robotics and Automation Letters 2021; Proceedings of the 2021 International Conference on Robotics and Automation (ICRA 2021), 2021
