Fei Hongyi

Multi-Fingered In-Hand Manipulation with Various Object Properties Using Graph Convolutional Networks and Distributed Tactile Sensors

May 09, 2022

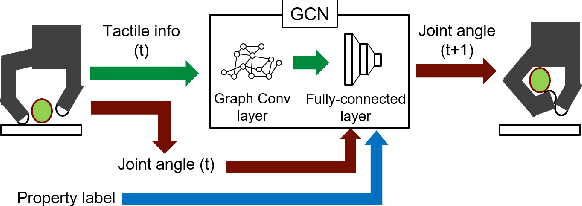

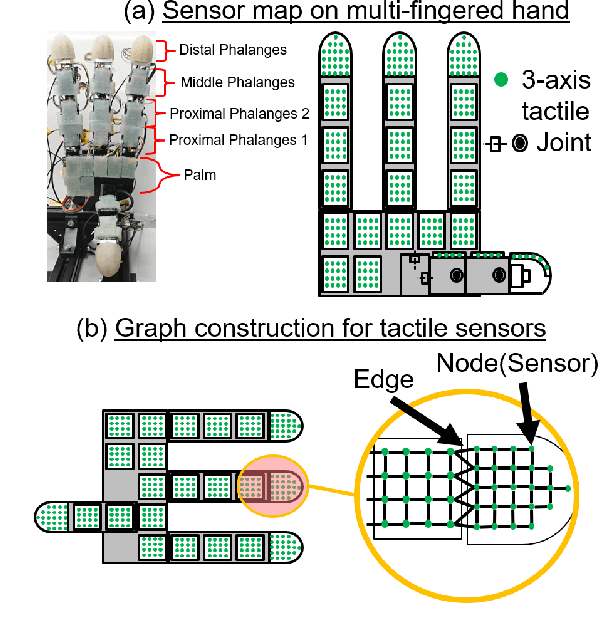

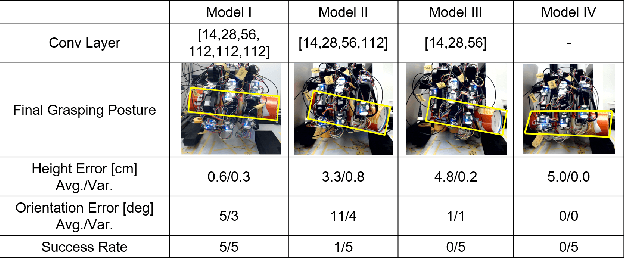

Abstract: Multi-fingered hands could be used to achieve many dexterous manipulation tasks, similarly to humans, and tactile sensing could enhance manipulation stability for a variety of objects. However, tactile sensors on multi-fingered hands come in a variety of sizes and shapes. Convolutional neural networks (CNNs) can be useful for processing tactile information, but because CNNs require a rectangular input, the information from multi-fingered hands must first undergo arbitrary pre-processing, which may lead to unstable results. How to process such irregularly shaped tactile information and use it to achieve manipulation skills is therefore still an open issue. This paper presents a control method based on a graph convolutional network (GCN) that extracts geodesical features from tactile data with complicated sensor alignments. Moreover, object property labels are provided to the GCN to adjust in-hand manipulation motions. Distributed tri-axial tactile sensors are mounted on the fingertips, finger phalanges and palm of an Allegro hand, resulting in 1152 tactile measurements. Training data is collected with a data glove to transfer human dexterous manipulation directly to the robot hand. The GCN achieved high success rates for in-hand manipulation. We also confirmed that fragile objects were deformed less when correct object labels were provided to the GCN. By visualizing the activations of the GCN with PCA, we verified that the network acquired geodesical features. Our method achieved stable manipulation even when an experimenter pulled on a grasped object, and also for untrained objects.
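To illustrate why a graph formulation avoids the rectangular-input constraint, the following is a minimal sketch of one graph convolution step over tactile sensing points. It is not the network described in the paper: the propagation rule is the widely used symmetric-normalized form, and the chain-shaped adjacency, the 16-channel output width, the random inputs, and the function names `normalized_adjacency` and `gcn_layer` are all assumptions made for illustration. The node count of 384 assumes that each of the 1152 measurements is one axis of a tri-axial sensing point.

```python
import numpy as np


def normalized_adjacency(edges, num_nodes):
    """Build D^{-1/2} (A + I) D^{-1/2} from an undirected edge list."""
    adj = np.zeros((num_nodes, num_nodes))
    for i, j in edges:
        adj[i, j] = 1.0
        adj[j, i] = 1.0
    adj += np.eye(num_nodes)  # self-loops keep each node's own signal
    deg_inv_sqrt = 1.0 / np.sqrt(adj.sum(axis=1))
    return adj * deg_inv_sqrt[:, None] * deg_inv_sqrt[None, :]


def gcn_layer(a_hat, features, weights):
    """One graph convolution: aggregate neighbors, project, apply ReLU."""
    return np.maximum(a_hat @ features @ weights, 0.0)


# Assumption: 1152 measurements / 3 axes = 384 sensing points (graph nodes).
num_nodes = 1152 // 3

# Hypothetical adjacency: a simple chain. A real layout would connect
# sensing points that are physically adjacent on the hand surface.
edges = [(i, i + 1) for i in range(num_nodes - 1)]
a_hat = normalized_adjacency(edges, num_nodes)

rng = np.random.default_rng(0)
features = rng.normal(size=(num_nodes, 3))  # placeholder tri-axial readings
weights = rng.normal(size=(3, 16))          # placeholder weights, 16 channels

hidden = gcn_layer(a_hat, features, weights)
```

Because the adjacency is an arbitrary edge list, sensor patches of different sizes and shapes can be joined into one graph without padding or reshaping them into a grid.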
