Tito Pradhono Tomo

FingerTac -- An Interchangeable and Wearable Tactile Sensor for the Fingertips of Human and Robot Hands

Oct 13, 2023

Abstract: Skill transfer from humans to robots is challenging. Presently, many researchers focus on capturing only position or joint-angle data from humans to teach robots. Even though this approach has yielded impressive results for grasping applications, reconstructing motion for object handling or fine manipulation from a human hand to a robot hand has been sparsely explored. Humans use tactile feedback to adjust their motion to various objects, but capturing and reproducing the applied forces remains an open research question. In this paper, we introduce a wearable fingertip tactile sensor that captures the distributed 3-axis force vectors on the fingertip. The sensor is interchangeable between the human hand and the robot hand, meaning that it can also be assembled to fit on a robot hand such as the Allegro hand. This paper presents the structural aspects of the sensor as well as the methodology used to design, manufacture, and calibrate it. The sensor measures forces with a mean absolute error of 0.21, 0.16, and 0.44 N in the X, Y, and Z directions, respectively.
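The accuracy figures above are per-axis mean absolute errors (MAE) between the sensor's force estimates and a reference force measurement. The sketch below shows how such a metric is computed. It assumes paired (Fx, Fy, Fz) samples in newtons. The function name `per_axis_mae` and the sample values are hypothetical illustrations, not the authors' calibration code or data.

```python
def per_axis_mae(predicted, reference):
    """Per-axis mean absolute error for paired (Fx, Fy, Fz) samples in newtons.

    Hypothetical helper for illustration; not the paper's implementation.
    """
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("need equal-length, non-empty sample lists")
    n = len(predicted)
    sums = [0.0, 0.0, 0.0]
    for p, r in zip(predicted, reference):
        for axis in range(3):
            # Accumulate the absolute error separately for X, Y and Z.
            sums[axis] += abs(p[axis] - r[axis])
    return tuple(s / n for s in sums)


# Hypothetical samples for illustration only (not data from the paper).
sensor = [(0.5, -0.2, 2.0), (1.1, 0.3, 3.5)]
reference = [(0.3, -0.1, 2.4), (1.0, 0.1, 3.0)]
print(per_axis_mae(sensor, reference))
```

Reporting the error per axis, rather than as one combined number, shows that the normal (Z) direction can behave differently from the shear (X, Y) directions. This matches how the abstract lists three separate values.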

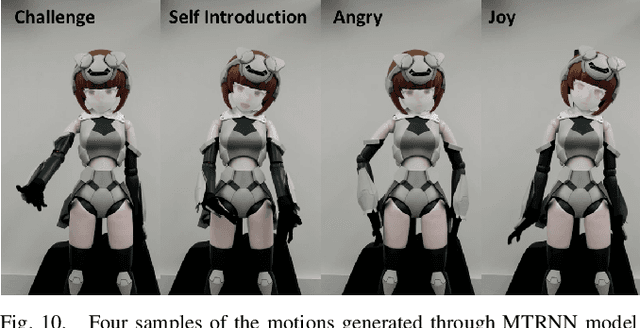

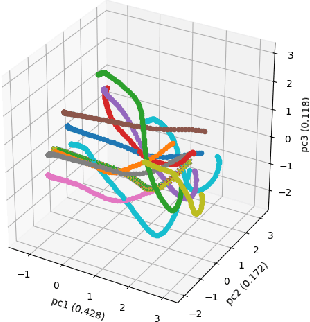

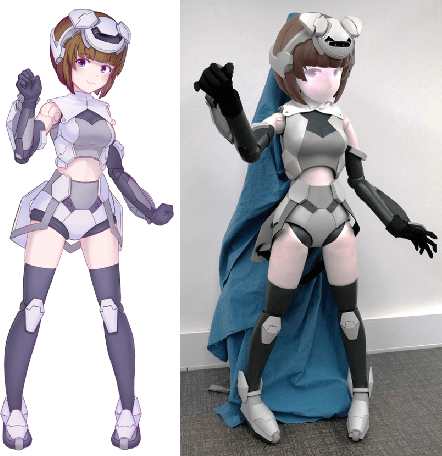

HATSUKI : An anime character like robot figure platform with anime-style expressions and imitation learning based action generation

Mar 31, 2020

Abstract: Japanese character figurines are popular and hold a pivotal position in otaku culture. Although numerous robots have been developed, few have focused on otaku culture or on embodying anime character figurines. We therefore take the first steps toward bridging this gap by developing Hatsuki, a humanoid robot platform with an anime-based design. Hatsuki's novelty lies in its aesthetic design, 2D facial expressions, and anime-style behaviors, which allow it to deliver rich interaction experiences resembling those of anime characters. We explain the design and implementation process of Hatsuki, followed by our evaluations. To explore user impressions of and opinions about Hatsuki, we conducted a questionnaire at the world's largest anime figurine event. The results indicate that participants were generally very satisfied with Hatsuki's design, and they proposed various use-case scenarios and deployment contexts for Hatsuki. The second evaluation focused on imitation learning, as such a method can provide better interaction ability in the real world and generate rich, context-adaptive behavior in different situations. We had Hatsuki learn 11 actions, combining voice, facial expressions, and motions, through a neural-network-based policy model with our proposed interface. The results show that our approach successfully generated these actions through self-organized contexts, which indicates the potential for generalizing the approach to further actions under different contexts. Lastly, we present our future research directions for Hatsuki and provide our conclusions.