Saraju P. Mohanty

Easydiagnos: a framework for accurate feature selection for automatic diagnosis in smart healthcare

Oct 01, 2024

Abstract: The rapid advancements in artificial intelligence (AI) have revolutionized smart healthcare, driving innovations in wearable technologies, continuous monitoring devices, and intelligent diagnostic systems. However, security, explainability, robustness, and performance optimization challenges remain critical barriers to widespread adoption in clinical environments. This research presents an algorithmic method, the Adaptive Feature Evaluator (AFE) algorithm, to improve feature selection in healthcare datasets and overcome these problems. By integrating Genetic Algorithms (GA), Explainable Artificial Intelligence (XAI), and Permutation Combination Techniques (PCT), the algorithm optimizes Clinical Decision Support Systems (CDSS), thereby enhancing predictive accuracy and interpretability. The proposed method is validated across three diverse healthcare datasets using six distinct machine learning algorithms, demonstrating its robustness and superiority over conventional feature selection techniques. The results underscore the transformative potential of AFE in smart healthcare, enabling personalized and transparent patient care. Notably, the AFE algorithm, when combined with a Multi-layer Perceptron (MLP), achieved an accuracy of up to 98.5%, highlighting its capability to improve clinical decision-making processes in real-world healthcare applications.
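The abstract does not describe how AFE works internally, so the following is only a minimal sketch of generic genetic-algorithm feature selection, not the authors' method. The `relevance` scores, the `fitness` function, and all parameter names are hypothetical. In practice the fitness would more likely be the validation accuracy of a classifier trained on the selected features.

```python
import random

def fitness(mask, relevance, penalty=0.05):
    """Toy fitness (assumption): summed relevance of the selected
    features minus a small penalty per selected feature."""
    selected = sum(r for bit, r in zip(mask, relevance) if bit)
    return selected - penalty * sum(mask)

def ga_select(relevance, pop_size=20, generations=30,
              mutation_rate=0.1, seed=0):
    """Evolve a bit mask over features; 1 means the feature is kept.
    Requires at least two features for single-point crossover."""
    rng = random.Random(seed)
    n = len(relevance)
    pop = [[rng.randint(0, 1) for _ in range(n)] for _ in range(pop_size)]
    for _ in range(generations):
        # Rank the population and keep the best half unchanged (elitism).
        pop.sort(key=lambda m: fitness(m, relevance), reverse=True)
        elite = pop[: pop_size // 2]
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            cut = rng.randrange(1, n)          # single-point crossover
            child = a[:cut] + b[cut:]
            # Flip each bit independently with probability mutation_rate.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            children.append(child)
        pop = elite + children
    return max(pop, key=lambda m: fitness(m, relevance))

# Hypothetical per-feature relevance scores for six features.
relevance = [0.9, 0.01, 0.8, 0.02, 0.7, 0.0]
best = ga_select(relevance)
print(best, fitness(best, relevance))
```

Because the best half of each generation is carried over unchanged, the best fitness in the population never decreases from one generation to the next.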

The World of Generative AI: Deepfakes and Large Language Models

Feb 06, 2024

Abstract: We live in the era of Generative Artificial Intelligence (GenAI). Deepfakes and Large Language Models (LLMs) are two examples of GenAI. Deepfakes, in particular, pose an alarming threat to society as they are capable of spreading misinformation and distorting the truth. LLMs are powerful language models that generate general-purpose language. However, due to their generative nature, they can also pose a risk to people if used with ill intent. The ethical use of these technologies is a major concern. This short article explores the interrelationship between them.

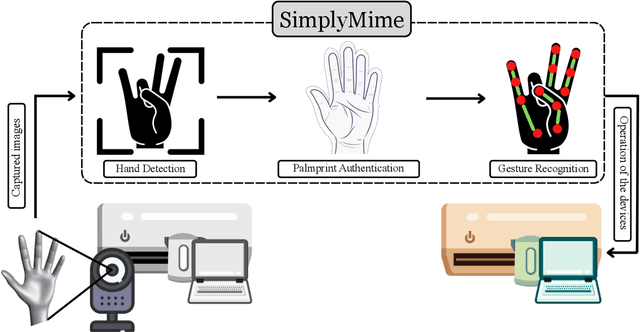

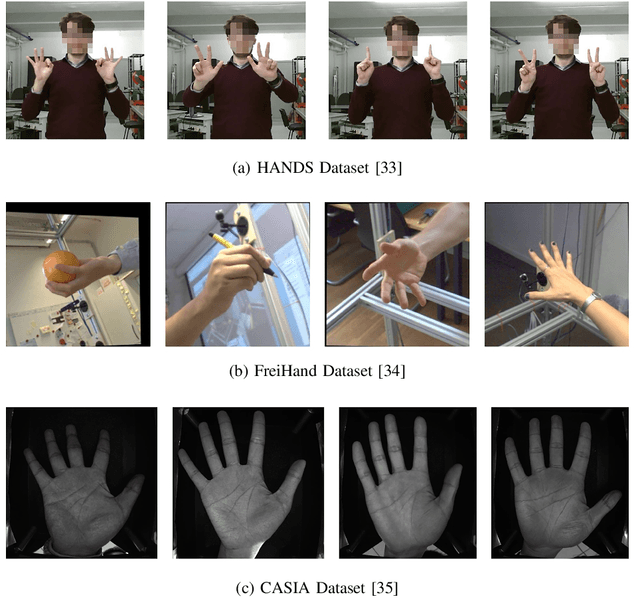

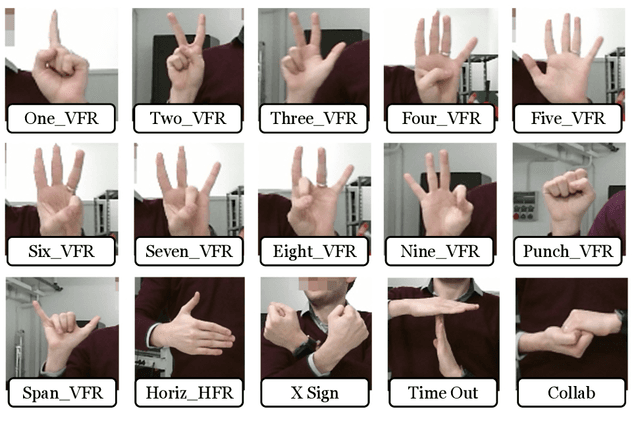

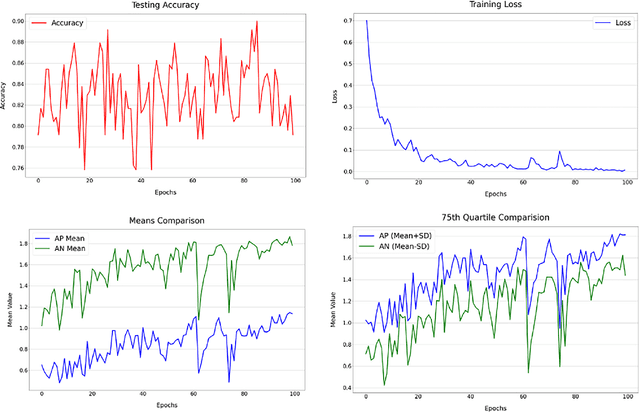

SimplyMime: A Control at Our Fingertips

Apr 22, 2023

Abstract: The use of consumer electronics, such as televisions, set-top boxes, home theaters, and air conditioners, has become increasingly prevalent in modern society as technology continues to evolve. As new devices enter our homes each year, the accumulation of multiple infrared remote controls to operate them not only wastes energy and resources, but also creates a cumbersome and cluttered environment for the user. This paper presents a novel system, named SimplyMime, which aims to eliminate the need for multiple remote controls for consumer electronics and provide the user with intuitive control without the need for additional devices. SimplyMime leverages a dynamic hand gesture recognition architecture, incorporating Artificial Intelligence and Human-Computer Interaction, to enable users to interact with the vast majority of consumer electronics with ease. Additionally, SimplyMime has a security aspect: it can authenticate the user by palmprint, which ensures that only authorized users can control the devices. The performance of the proposed method for detecting and recognizing gestures in a stream of motion was tested and validated using multiple benchmark datasets, resulting in commendable accuracy levels. One distinct advantage of the proposed method is its minimal computational power requirement, making it highly adaptable and reliable in a wide range of circumstances. The paper proposes incorporating this technology into all consumer electronic devices that currently require a secondary remote for operation, thus promoting a more efficient and sustainable living environment.

MagicEye: An Intelligent Wearable Towards Independent Living of Visually Impaired

Mar 24, 2023

Abstract: Individuals with visual impairments often face a multitude of challenging obstacles in their daily lives. Vision impairment can severely limit a person's ability to work, navigate, and retain independence. This can result in educational limitations, a higher risk of accidents, and a plethora of other issues. To address these challenges, we present MagicEye, a state-of-the-art intelligent wearable device designed to assist visually impaired individuals. MagicEye employs a custom-trained CNN-based object detection model, capable of recognizing a wide range of indoor and outdoor objects frequently encountered in daily life. With a total of 35 classes, the neural network employed by MagicEye has been specifically designed to achieve high levels of efficiency and precision in object detection. The device is also equipped with facial recognition and currency identification modules, providing invaluable assistance to the visually impaired. In addition, MagicEye features a GPS sensor for navigation, allowing users to move about with ease, as well as a proximity sensor for detecting nearby objects without physical contact. In summary, MagicEye is an innovative wearable device designed to address the many challenges faced by individuals with visual impairments. It is equipped with state-of-the-art object detection and navigation capabilities that are tailored to the needs of the visually impaired, making it a promising solution for assisting people with visual impairments.

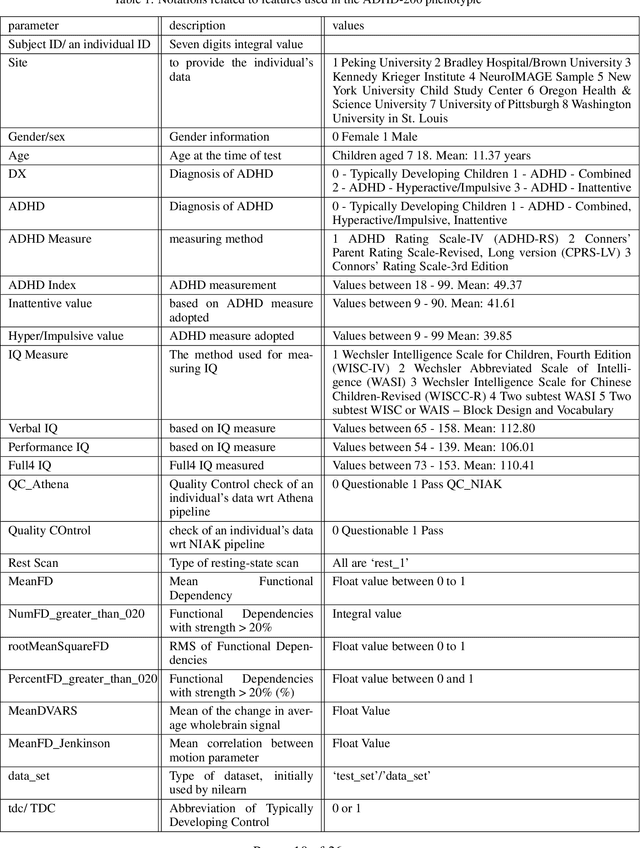

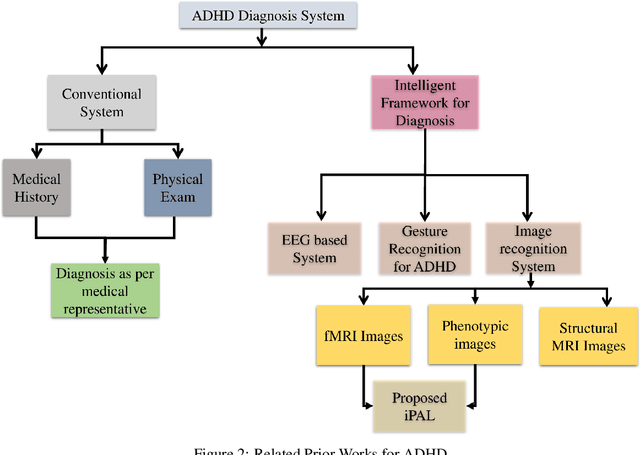

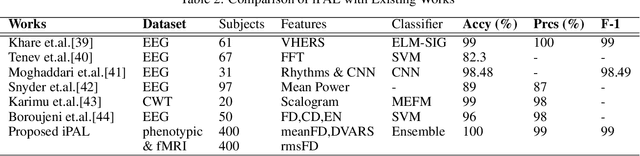

iPAL: A Machine Learning Based Smart Healthcare Framework For Automatic Diagnosis Of Attention Deficit/Hyperactivity Disorder (ADHD)

Feb 01, 2023

Abstract: ADHD is a prevalent disorder among the younger population. Standard evaluation techniques currently use evaluation forms, interviews with the patient, and more. However, its symptoms are similar to those of many other disorders, such as depression, conduct disorder, and oppositional defiant disorder, and these current diagnosis techniques are not very effective. Thus, a sophisticated computing model holds the potential to provide a promising diagnosis solution to this problem. This work explores methods to diagnose ADHD using combinations of multiple established machine learning techniques, such as neural networks and SVM models, on the ADHD200 dataset, and explores the field of neuroscience. In this work, multiclass classification is performed on phenotypic data using an SVM model. On the phenotypic data, the SVM gives better results than other supervised learning techniques such as logistic regression, KNN, and AdaBoost. In addition, neural networks have been applied to functional connectivity derived from the MRI data of a sample of 40 subjects, to achieve high accuracy without prior knowledge of neuroscience. This model is combined with the phenotypic classifier using an ensemble technique to obtain a binary classifier. It is further trained and tested on 400 out of 824 subjects from the ADHD200 dataset and achieved an accuracy of 92.5% for binary classification. Training and testing accuracy of up to 99% has been achieved using the ensemble classifier.
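The abstract does not say which ensemble technique combines the two classifiers. As an illustration only, the sketch below assumes weighted soft voting over the two models' predicted ADHD probabilities. The function name, the `weight` and `threshold` parameters, and the example probabilities are all hypothetical.

```python
def soft_vote(p_phenotypic, p_connectivity, weight=0.5, threshold=0.5):
    """Combine two classifiers' probabilities for the positive class.

    weight is the share given to the phenotypic classifier (assumption);
    the rest goes to the functional-connectivity network.
    Returns the combined probability and the binary label (1 = positive).
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    combined = weight * p_phenotypic + (1.0 - weight) * p_connectivity
    return combined, int(combined >= threshold)

# Hypothetical probabilities for one subject from the two models.
probability, label = soft_vote(0.8, 0.4)
print(probability, label)
```

Soft voting lets a confident model outweigh an uncertain one, which hard majority voting over two classifiers cannot do.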

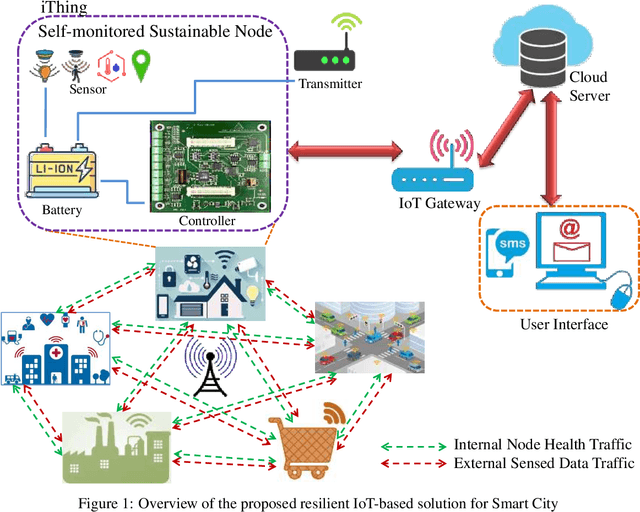

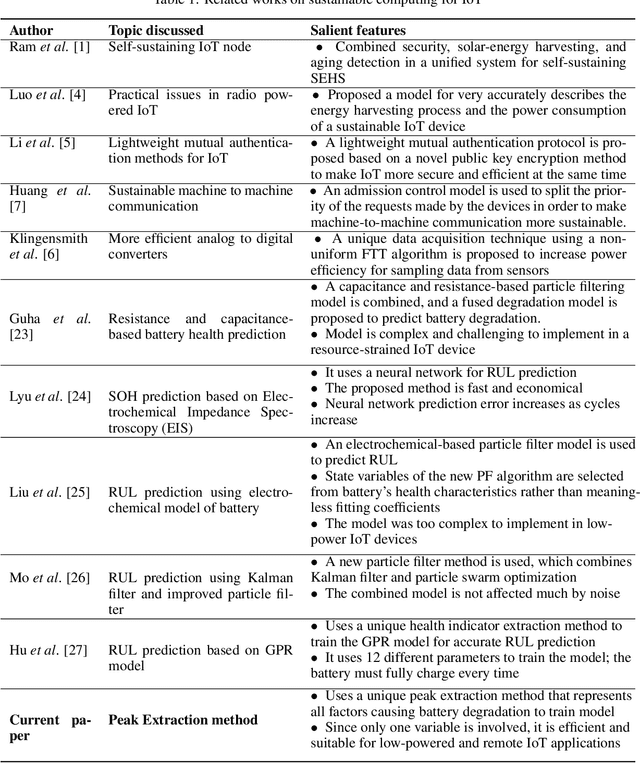

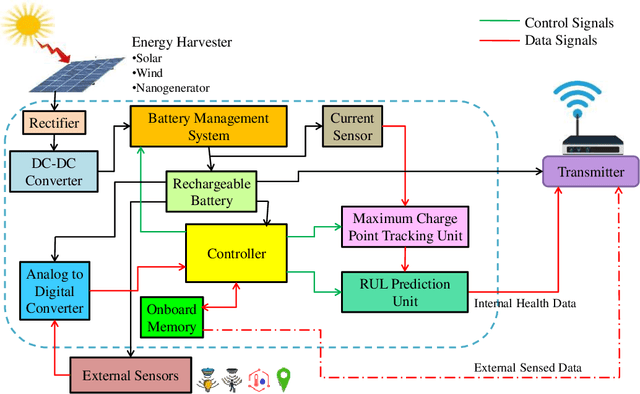

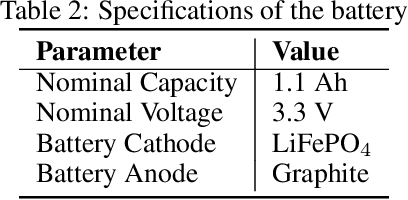

iThing: Designing Next-Generation Things with Battery Health Self-Monitoring Capabilities for Sustainable IoT in Smart Cities

Jun 12, 2021

Abstract: An accurate and reliable technique for predicting the Remaining Useful Life (RUL) of battery cells is helpful in battery-operated IoT devices, especially in remotely operated sensor nodes. Data-driven methods have proved to be the most effective methods so far. These IoT devices have low computational capabilities to save costs, but data-driven battery health techniques often require a comparatively large amount of computational power to predict State of Health (SOH) and RUL because most methods are feature-heavy. This issue calls for ways to predict RUL with the least amount of calculation and memory. This paper proposes an effective and novel peak extraction method that reduces computation and memory needs and provides accurate predictions using the smallest number of features while performing all calculations on-board. The model can self-sustain, requires minimal external interference, and hence can operate remotely much longer. Experimental results demonstrate the accuracy and reliability of this method. The Absolute Error (AE), Relative Error (RE), and Root Mean Square Error (RMSE) are calculated to compare effectiveness. The training of the GPR model takes less than 2 seconds, and the correlation between SOH from peak extraction and RUL is 0.97.
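The three error measures named in the abstract can be sketched as follows. The abstract does not give the exact formulas used in the paper, so these are the standard textbook definitions and should be read as an assumption. The function names are illustrative.

```python
import math

def absolute_error(actual, predicted):
    """AE: magnitude of the gap between actual and predicted RUL."""
    return abs(actual - predicted)

def relative_error(actual, predicted):
    """RE: absolute error as a fraction of the actual value."""
    if actual == 0:
        raise ValueError("relative error is undefined for actual == 0")
    return abs(actual - predicted) / abs(actual)

def rmse(actuals, predictions):
    """RMSE over paired sequences of actual and predicted values."""
    if len(actuals) != len(predictions) or not actuals:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actuals, predictions)]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical example: actual RUL of 100 cycles, predicted 90 cycles.
print(absolute_error(100, 90), relative_error(100, 90))
```

AE and RE describe a single prediction, while RMSE summarizes a whole series and penalizes large misses more heavily than small ones.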

CoviLearn: A Machine Learning Integrated Smart X-Ray Device in Healthcare Cyber-Physical System for Automatic Initial Screening of COVID-19

Jun 09, 2021

Abstract: The pandemic of novel Coronavirus Disease 2019 (COVID-19) has spread all over the world, causing serious health problems as well as a serious impact on the global economy. Reliable and fast testing for COVID-19 has been a challenge for researchers and healthcare practitioners. In this work, we present a novel machine learning (ML) integrated X-ray device in a Healthcare Cyber-Physical System (H-CPS), or smart healthcare framework, called CoviLearn, to allow healthcare practitioners to perform automatic initial screening of COVID-19 patients. We propose convolutional neural network (CNN) models of X-ray images integrated into an X-ray device for automatic COVID-19 detection. The proposed CoviLearn device will be useful in detecting whether a person is COVID-19 positive or negative from a chest X-ray image. CoviLearn will be a useful tool for doctors to detect potential COVID-19 infections instantaneously without taking more intrusive healthcare data samples, such as saliva and blood. Because COVID-19 attacks the endothelial tissues that support the respiratory tract, X-ray images can be used to analyze the health of a patient's lungs. As all healthcare centers have X-ray machines, it could be possible to use the proposed CoviLearn X-rays to test for COVID-19 without special test kits. Our proposed automated analysis system CoviLearn, which has 99% accuracy, will save medical professionals valuable time, since X-ray machines otherwise have the drawback of requiring a radiology expert.

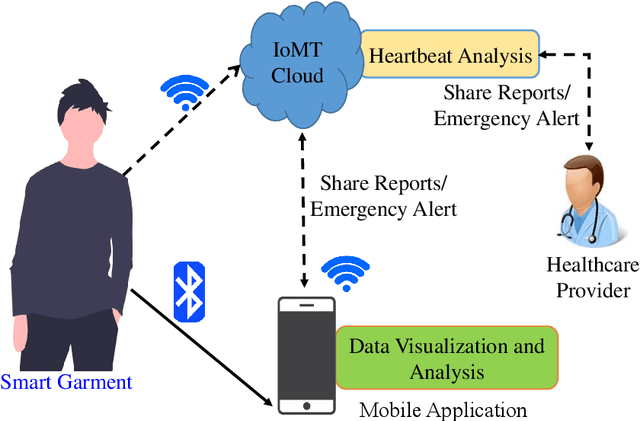

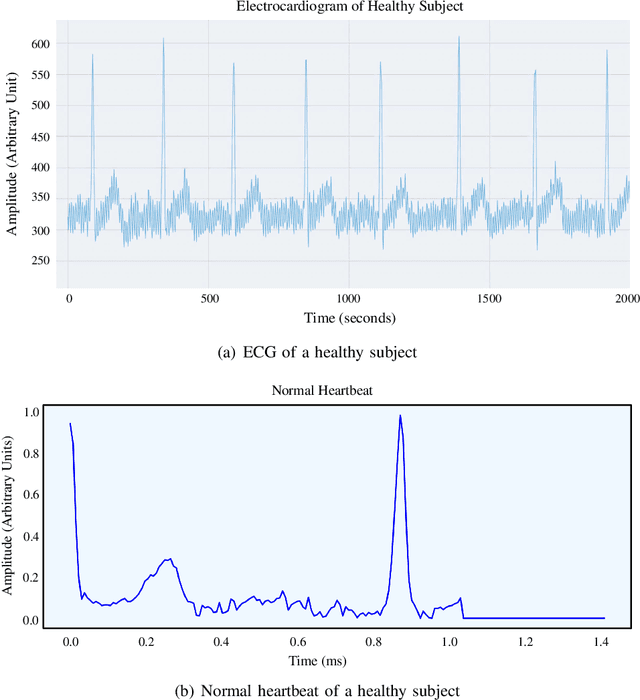

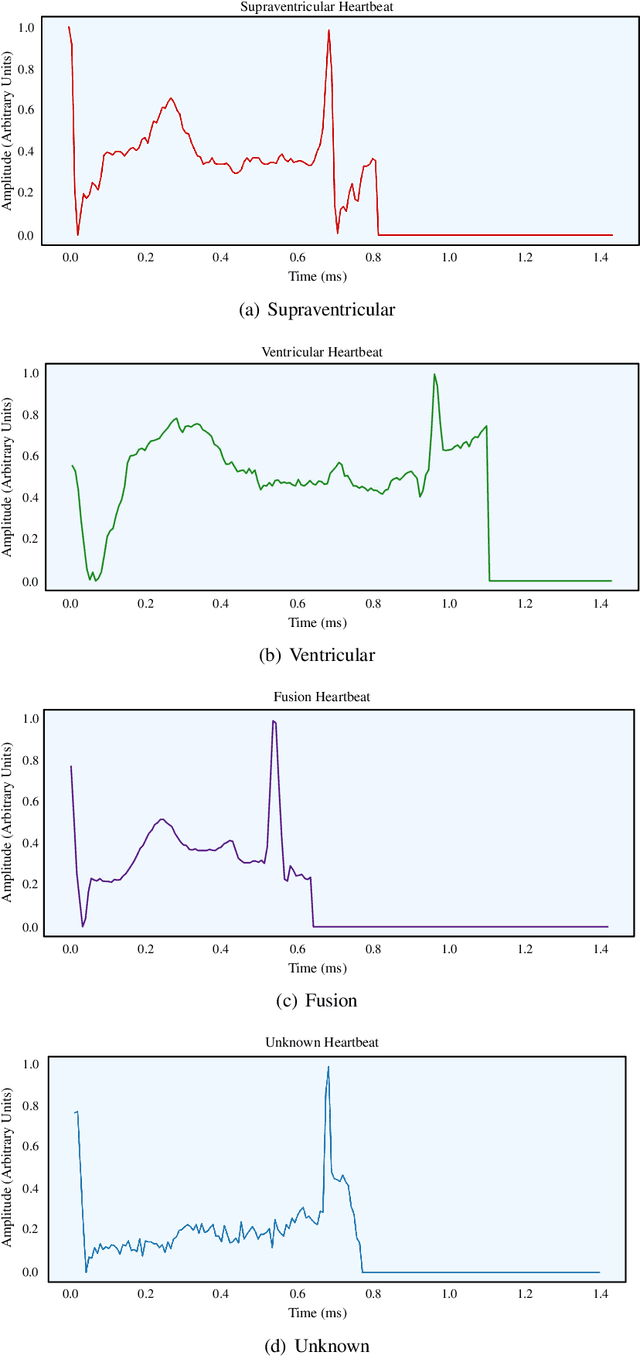

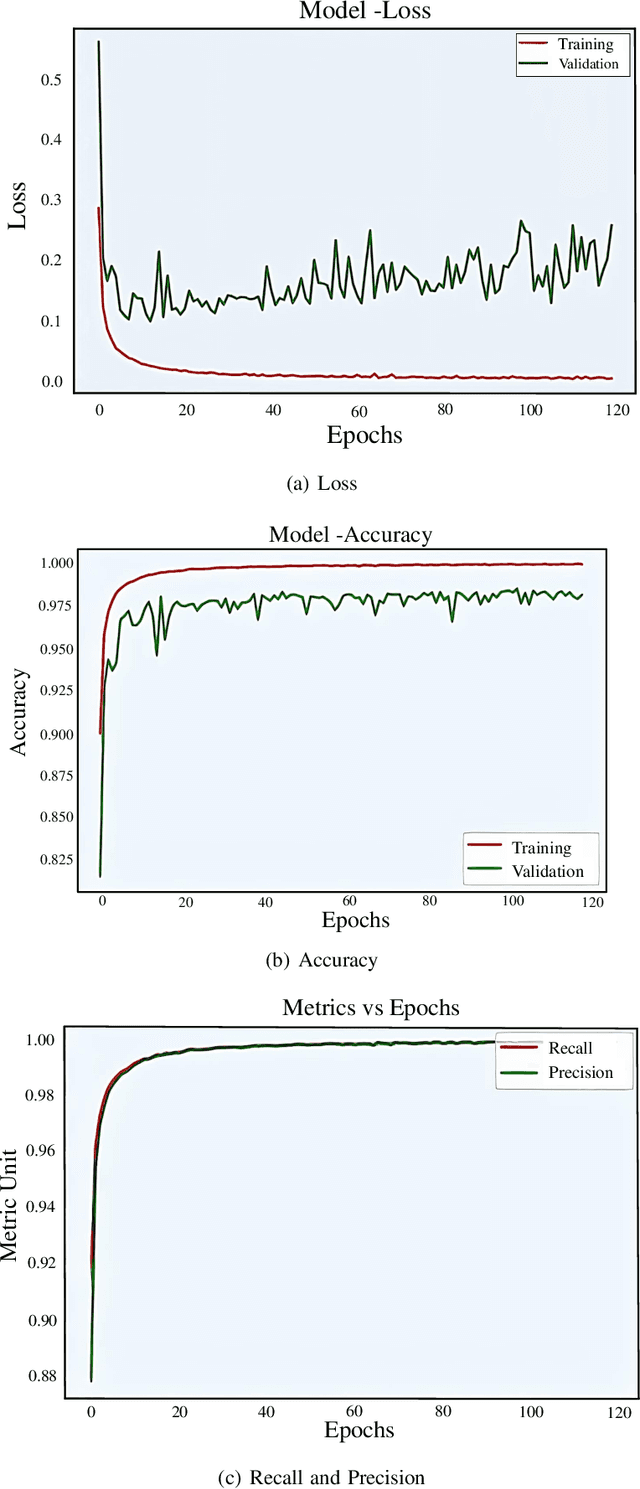

MyWear: A Smart Wear for Continuous Body Vital Monitoring and Emergency Alert

Oct 17, 2020

Abstract: Smart healthcare, which is built as a healthcare Cyber-Physical System (H-CPS) from the Internet-of-Medical-Things (IoMT), is becoming more important than ever. Medical devices and their Internet connectivity, along with electronic health records (EHR) and AI analytics, make H-CPS possible. IoMT end devices such as wearables and implantables are key for H-CPS-based smart healthcare. A smart garment is a specific wearable that can be used for smart healthcare. There are various smart garments that help users monitor their body vitals in real time. Many commercially available garments collect vital data and transmit it to a mobile application for visualization. However, these do not perform real-time analysis that lets users understand their health conditions. Also, such garments do not include an alert system to notify users and contacts in case of emergency. In MyWear, we propose a wearable body vital monitoring garment that captures physiological data and automatically analyzes data such as heart rate, stress level, and muscle activity to detect abnormalities. A copy of the physiological data is transmitted to the cloud for detecting any abnormalities in heartbeats and predicting any potential future heart failure. We also propose a deep neural network (DNN) model that automatically classifies abnormal heartbeats and potential heart failure. For immediate assistance in such a situation, we propose an alert system that sends an alert message to nearby medical officials. The proposed MyWear has an average accuracy of 96.9% and a precision of 97.3% for the detection of abnormalities.
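For readers less familiar with the two reported metrics, the sketch below computes accuracy and precision from confusion-matrix counts using the standard definitions. The counts in the example are hypothetical and are not taken from the paper.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all predictions that are correct."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("no predictions to score")
    return (tp + tn) / total

def precision(tp, fp):
    """Share of predicted abnormalities that are truly abnormal."""
    if tp + fp == 0:
        raise ValueError("no positive predictions to score")
    return tp / (tp + fp)

# Hypothetical counts: tp = true positives, tn = true negatives,
# fp = false positives, fn = false negatives.
print(accuracy(tp=90, tn=5, fp=3, fn=2), precision(tp=90, fp=3))
```

Precision matters for an alert system because every false positive becomes an unnecessary alert sent to medical officials.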
