Debanjan Das

Advancing Smart Malnutrition Monitoring: A Multi-Modal Learning Approach for Vital Health Parameter Estimation

Jul 31, 2023

Abstract: Malnutrition poses a significant threat to global health, resulting from an inadequate intake of essential nutrients that adversely impacts vital organs and overall bodily functioning. Periodic examinations and mass screenings, incorporating both conventional and non-invasive techniques, have been employed to combat this challenge. However, these approaches suffer from critical limitations, such as the need for additional equipment, lack of comprehensive feature representation, absence of suitable health indicators, and the unavailability of smartphone implementations for precise estimations of Body Fat Percentage (BFP), Basal Metabolic Rate (BMR), and Body Mass Index (BMI) to enable efficient smart-malnutrition monitoring. To address these constraints, this study presents a groundbreaking, scalable, and robust smart malnutrition-monitoring system that leverages a single full-body image of an individual to estimate height, weight, and other crucial health parameters within a multi-modal learning framework. Our proposed methodology involves the reconstruction of a highly precise 3D point cloud, from which 512-dimensional feature embeddings are extracted using a headless 3D classification network. Concurrently, facial and body embeddings are extracted, and these features are combined through learnable parameters to estimate weight accurately. Furthermore, essential health metrics, including BMR, BFP, and BMI, are computed to conduct a comprehensive analysis of the subject's health, subsequently facilitating the provision of personalized nutrition plans. While being robust to a wide range of lighting conditions across multiple devices, our model achieves a low Mean Absolute Error (MAE) of $\pm$ 4.7 cm and $\pm$ 5.3 kg in estimating height and weight, respectively.
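The abstract says BMI is computed from the estimated height and weight and that accuracy is reported as MAE. A minimal Python sketch of those two calculations follows, using the standard BMI definition (weight in kilograms divided by the square of height in metres). The function names and sample values are hypothetical and are not taken from the paper; the formulas used for BMR and BFP are not stated in the abstract, so they are left out.

```python
from typing import Sequence


def bmi(weight_kg: float, height_cm: float) -> float:
    """Standard Body Mass Index: weight (kg) / height (m) squared."""
    height_m = height_cm / 100.0
    return weight_kg / (height_m ** 2)


def mean_absolute_error(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Average of the absolute differences between predictions and ground truth."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


if __name__ == "__main__":
    # Hypothetical estimates for one subject (illustrative only).
    estimated_height_cm = 170.0
    estimated_weight_kg = 65.0
    print(f"BMI: {bmi(estimated_weight_kg, estimated_height_cm):.2f}")

    # Hypothetical predicted vs. measured heights in cm (illustrative only).
    print(f"Height MAE: {mean_absolute_error([170.0, 160.0], [165.0, 164.0]):.2f} cm")
```

Because BMI divides by height squared, errors in the height estimate carry through to the derived metric more strongly than errors in weight of similar relative size.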

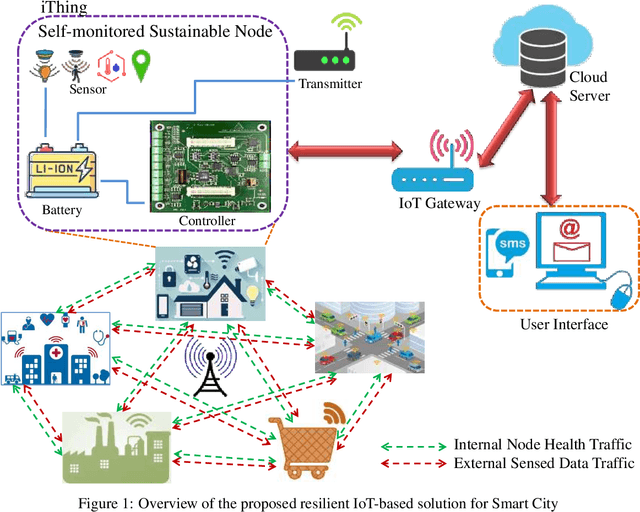

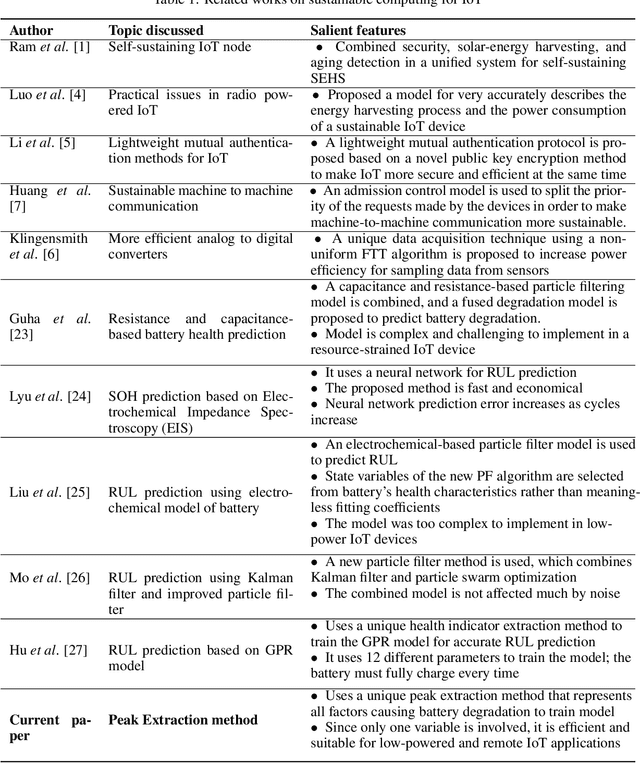

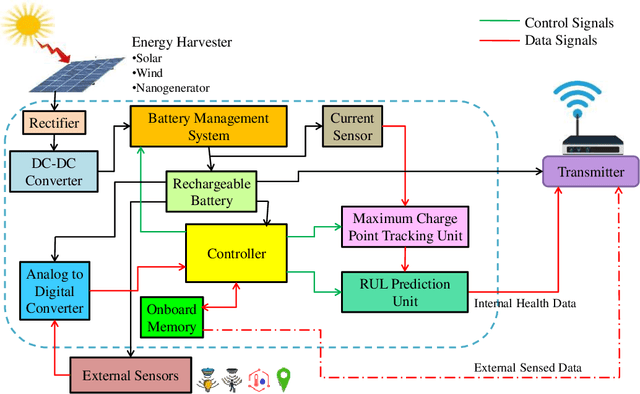

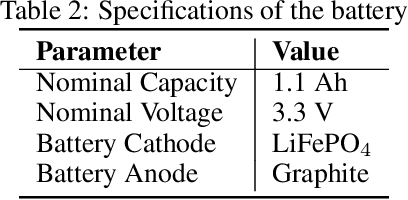

iThing: Designing Next-Generation Things with Battery Health Self-Monitoring Capabilities for Sustainable IoT in Smart Cities

Jun 12, 2021

Abstract: An accurate and reliable technique for predicting the Remaining Useful Life (RUL) of battery cells is helpful in battery-operated IoT devices, especially in remotely operated sensor nodes. Data-driven methods have proved to be the most effective so far. These IoT devices have low computational capabilities to save costs, but data-driven battery health techniques often require a comparatively large amount of computational power to predict State of Health (SOH) and RUL, because most methods are feature-heavy. This issue calls for ways to predict RUL with the least amount of computation and memory. This paper proposes an effective and novel peak extraction method that reduces computation and memory needs and provides accurate predictions using the least number of features while performing all calculations on-board. The model is self-sustaining, requires minimal external intervention, and can therefore operate remotely for much longer. Experimental results demonstrate the accuracy and reliability of this method. The Absolute Error (AE), Relative Error (RE), and Root Mean Square Error (RMSE) are calculated to compare effectiveness. Training the GPR model takes less than 2 seconds, and the correlation between SOH from peak extraction and RUL is 0.97.
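The three error metrics named in the abstract can be sketched as below. This uses their common textbook definitions, which is an assumption: the abstract does not give the paper's exact formulas, for example whether RE is normalized by the true RUL. The function names and sample numbers are hypothetical.

```python
import math
from typing import Sequence


def absolute_error(predicted: float, actual: float) -> float:
    """AE: magnitude of the difference between prediction and ground truth."""
    return abs(predicted - actual)


def relative_error(predicted: float, actual: float) -> float:
    """RE: absolute error normalized by the true value (assumed definition)."""
    if actual == 0:
        raise ValueError("relative error is undefined for a true value of 0")
    return abs(predicted - actual) / abs(actual)


def rmse(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """RMSE: square root of the mean squared prediction error."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    # Hypothetical RUL values in charge cycles (illustrative only).
    print(absolute_error(90.0, 100.0))
    print(relative_error(90.0, 100.0))
    print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```

All three functions rely only on basic arithmetic and a single square root, which fits the abstract's emphasis on keeping on-board computation small.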

CoviLearn: A Machine Learning Integrated Smart X-Ray Device in Healthcare Cyber-Physical System for Automatic Initial Screening of COVID-19

Jun 09, 2021

Abstract: The novel Coronavirus Disease 2019 (COVID-19) pandemic has spread all over the world, causing serious health problems as well as a serious impact on the global economy. Reliable and fast testing for COVID-19 has been a challenge for researchers and healthcare practitioners. In this work, we present a novel machine learning (ML) integrated X-ray device in a Healthcare Cyber-Physical System (H-CPS), or smart healthcare framework, called CoviLearn, that allows healthcare practitioners to perform automatic initial screening of COVID-19 patients. We propose convolutional neural network (CNN) models of X-ray images integrated into an X-ray device for automatic COVID-19 detection. The proposed CoviLearn device will be useful in detecting whether a person is COVID-19 positive or negative from the individual's chest X-ray image. CoviLearn will be a useful tool for doctors to detect potential COVID-19 infections instantaneously without taking more intrusive healthcare data samples, such as saliva and blood. Because COVID-19 attacks the endothelium tissues that support the respiratory tract, X-ray images can be used to analyze the health of a patient's lungs. As all healthcare centers have X-ray machines, it could be possible to use the proposed CoviLearn X-rays to test for COVID-19 without special test kits. Our proposed automated analysis system, CoviLearn, which has 99% accuracy, will be able to save valuable time for medical professionals, since conventional X-ray machines have the drawback of requiring a radiology expert.

JU_KS_Group@FIRE 2016: Consumer Health Information Search

Dec 24, 2016

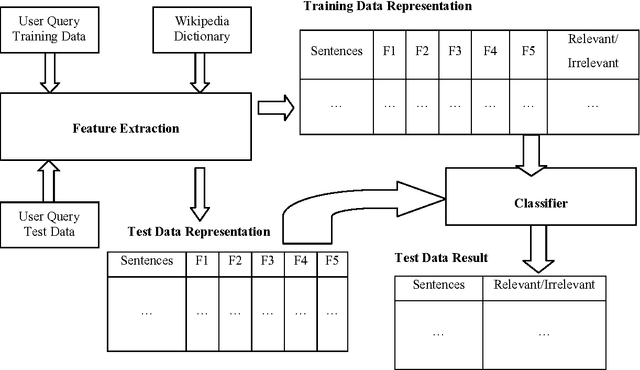

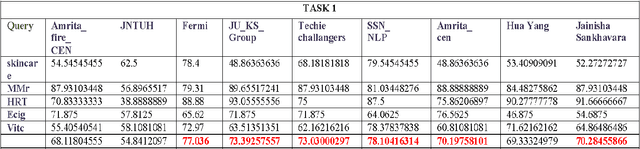

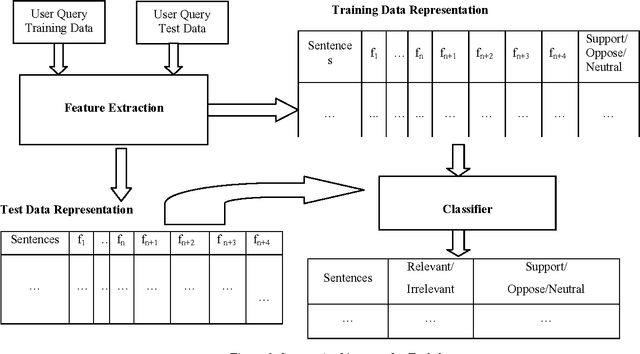

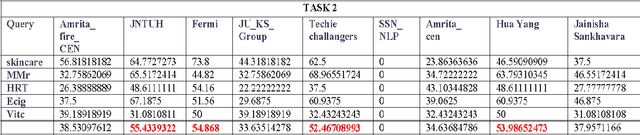

Abstract: In this paper, we describe the methodology we used and the results we obtained for the shared task on Consumer Health Information Search (CHIS), co-located with the Forum for Information Retrieval Evaluation (FIRE) 2016, ISI Kolkata. The shared task consists of two sub-tasks: (1) task 1: given a query and a document or set of documents associated with that query, classify the sentences in the document as relevant to the query or not; and (2) task 2: further classify the relevant sentences as supporting or opposing the claim made in the query. We participated in both sub-tasks. The accuracy obtained by our system for task 1 was 73.39%, the third highest among the 9 teams that participated in the shared task.
