Mamta Kumari

FACTS About Building Retrieval Augmented Generation-based Chatbots

Jul 10, 2024

Abstract: Enterprise chatbots, powered by generative AI, are emerging as key applications to enhance employee productivity. Retrieval Augmented Generation (RAG), Large Language Models (LLMs), and orchestration frameworks like Langchain and Llamaindex are crucial for building these chatbots. However, creating effective enterprise chatbots is challenging and requires meticulous RAG pipeline engineering. This includes fine-tuning embeddings and LLMs, extracting documents from vector databases, rephrasing queries, reranking results, designing prompts, honoring document access controls, providing concise responses, including references, safeguarding personal information, and building orchestration agents. We present a framework for building RAG-based chatbots based on our experience with three NVIDIA chatbots: for IT/HR benefits, financial earnings, and general content. Our contributions are three-fold: introducing the FACTS framework (Freshness, Architectures, Cost, Testing, Security), presenting fifteen RAG pipeline control points, and providing empirical results on accuracy-latency tradeoffs between large and small LLMs. To the best of our knowledge, this is the first paper of its kind that provides a holistic view of the factors as well as solutions for building secure enterprise-grade chatbots.

JU_KS_Group@FIRE 2016: Consumer Health Information Search

Dec 24, 2016

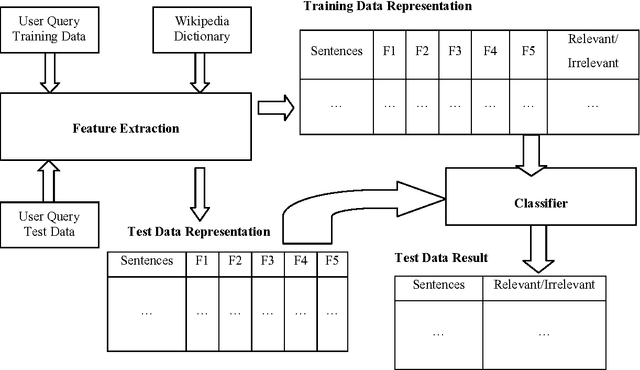

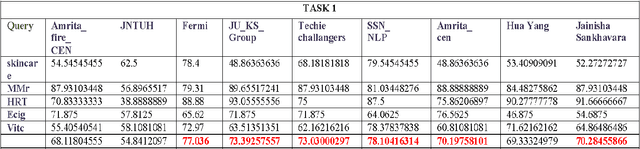

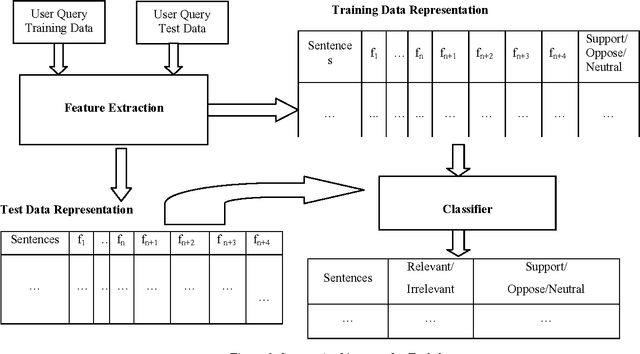

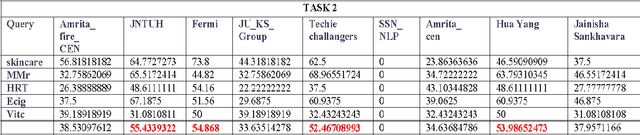

Abstract: In this paper, we describe the methodology we used and the results we obtained for the shared task on Consumer Health Information Search (CHIS), co-located with the Forum for Information Retrieval Evaluation (FIRE) 2016, ISI Kolkata. The shared task consists of two sub-tasks: (1) task 1: given a query and a document or set of documents associated with that query, classify each sentence in the document as relevant to the query or not; and (2) task 2: further classify the relevant sentences as supporting or opposing the claim made in the query. We participated in both sub-tasks. Our system obtained an accuracy of 73.39% on task 1, the third highest among the 9 teams that participated in the shared task.
