Venkanna Udutalapally

Advancing Smart Malnutrition Monitoring: A Multi-Modal Learning Approach for Vital Health Parameter Estimation

Jul 31, 2023

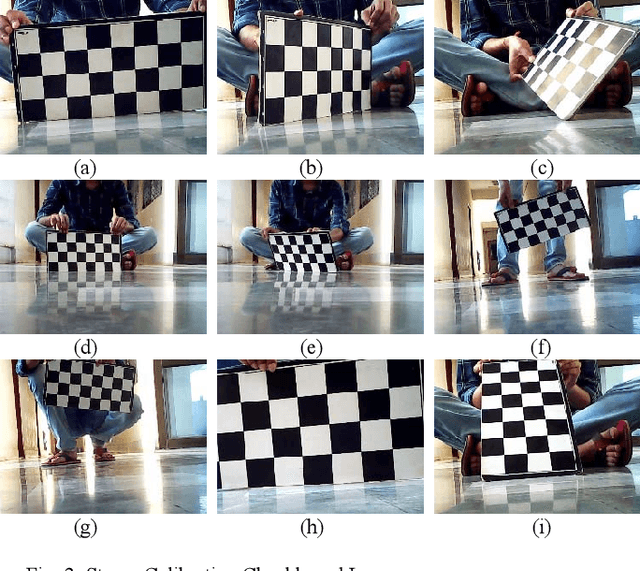

Abstract: Malnutrition poses a significant threat to global health, resulting from an inadequate intake of essential nutrients that adversely impacts vital organs and overall bodily functioning. Periodic examinations and mass screenings, incorporating both conventional and non-invasive techniques, have been employed to combat this challenge. However, these approaches suffer from critical limitations: they need additional equipment, lack comprehensive feature representation and suitable health indicators, and offer no smartphone implementation for precise estimation of Body Fat Percentage (BFP), Basal Metabolic Rate (BMR), and Body Mass Index (BMI) to enable efficient smart malnutrition monitoring. To address these constraints, this study presents a scalable and robust smart malnutrition-monitoring system that uses a single full-body image of an individual to estimate height, weight, and other crucial health parameters within a multi-modal learning framework. The proposed methodology reconstructs a highly precise 3D point cloud, from which 512-dimensional feature embeddings are extracted using a headless 3D classification network. Facial and body embeddings are extracted concurrently, and the features are combined through learnable parameters to estimate weight. Essential health metrics, including BMR, BFP, and BMI, are then computed to analyze the subject's health comprehensively and to support personalized nutrition plans. While being robust to a wide range of lighting conditions across multiple devices, the model achieves a low Mean Absolute Error (MAE) of $\pm$ 4.7 cm and $\pm$ 5.3 kg in estimating height and weight, respectively.
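As a rough illustration of how BMI, BMR, and BFP might be derived once height and weight have been estimated, the following Python sketch may help. The abstract does not state which formulas the authors use: BMI follows its standard definition, while the Mifflin-St Jeor equation for BMR and the Deurenberg equation for BFP are assumptions chosen here for illustration, as are the function names and the age and sex inputs.

```python
def bmi(weight_kg: float, height_cm: float) -> float:
    """Body Mass Index: weight in kg divided by height in metres squared."""
    height_m = height_cm / 100.0
    return weight_kg / (height_m ** 2)


def bmr_mifflin_st_jeor(weight_kg: float, height_cm: float,
                        age_years: float, is_male: bool) -> float:
    """Basal Metabolic Rate in kcal/day (Mifflin-St Jeor; assumed, not from the paper)."""
    base = 10.0 * weight_kg + 6.25 * height_cm - 5.0 * age_years
    return base + 5.0 if is_male else base - 161.0


def bfp_deurenberg(bmi_value: float, age_years: float, is_male: bool) -> float:
    """Body Fat Percentage for adults (Deurenberg; assumed, not from the paper)."""
    sex = 1.0 if is_male else 0.0
    return 1.20 * bmi_value + 0.23 * age_years - 10.8 * sex - 5.4


if __name__ == "__main__":
    # Hypothetical subject: 70 kg, 175 cm, 30 years old, male.
    b = bmi(70.0, 175.0)
    print(f"BMI: {b:.2f}")
    print(f"BMR: {bmr_mifflin_st_jeor(70.0, 175.0, 30.0, True):.2f} kcal/day")
    print(f"BFP: {bfp_deurenberg(b, 30.0, True):.2f} %")
```

Note that height and weight errors propagate into all three metrics, and BMI is especially sensitive to height because it enters squared.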

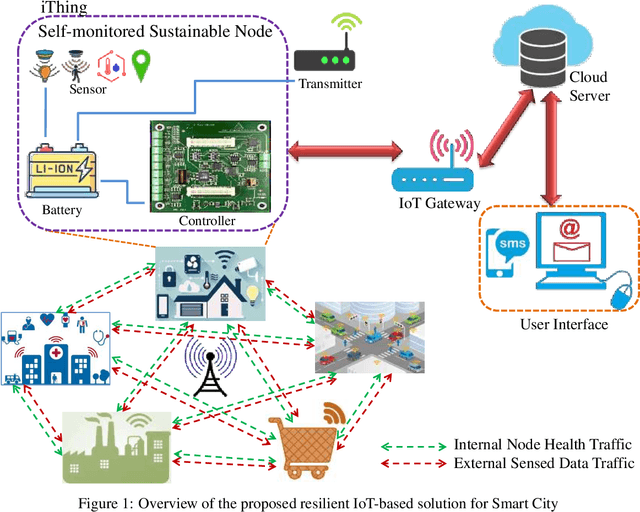

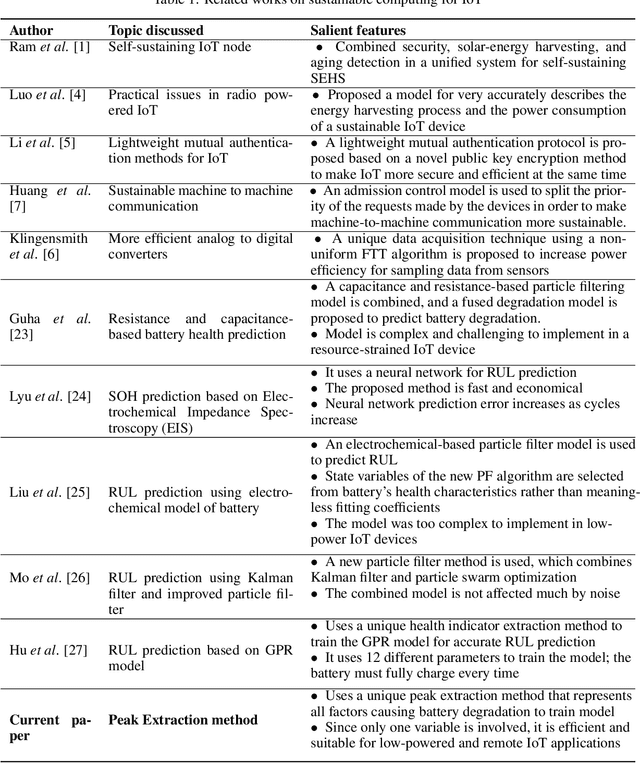

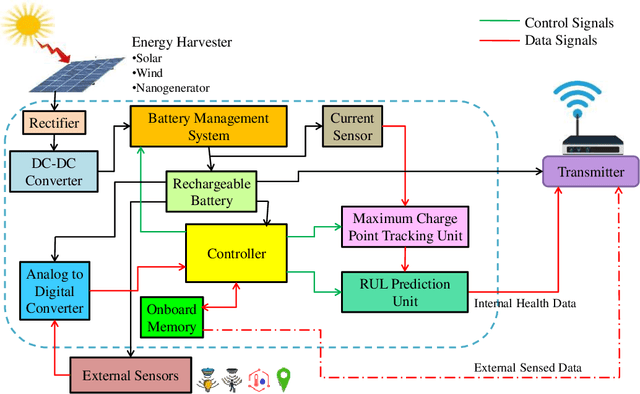

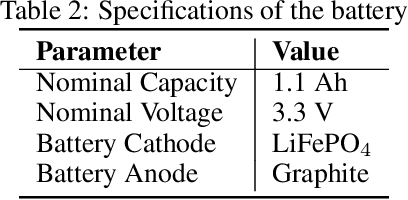

iThing: Designing Next-Generation Things with Battery Health Self-Monitoring Capabilities for Sustainable IoT in Smart Cities

Jun 12, 2021

Abstract: An accurate and reliable technique for predicting the Remaining Useful Life (RUL) of battery cells is helpful in battery-operated IoT devices, especially in remotely operated sensor nodes. Data-driven methods have so far proved the most effective. To save costs, these IoT devices have low computational capabilities, but data-driven battery health techniques often require comparatively large computational power to predict State of Health (SOH) and RUL because most methods are feature-heavy. This calls for ways to predict RUL with the least computation and memory. This paper proposes an effective and novel peak extraction method that reduces computation and memory needs and provides accurate predictions using the fewest features while performing all calculations on-board. The model is self-sustaining, requires minimal external interference, and can hence operate remotely for much longer. Experimental results demonstrate the accuracy and reliability of this method. The Absolute Error (AE), Relative Error (RE), and Root Mean Square Error (RMSE) are calculated to compare effectiveness. Training the GPR model takes less than 2 seconds, and the correlation between SOH from peak extraction and RUL is 0.97.
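The three error measures named above can be sketched in Python as follows. These are the standard textbook definitions; the abstract does not say exactly how the authors apply them (for example, whether AE and RE are taken on RUL in cycles), so the function names and the sample values are illustrative assumptions only.

```python
import math
from typing import Sequence


def absolute_error(actual: float, predicted: float) -> float:
    """Absolute Error: magnitude of the difference between actual and predicted."""
    return abs(actual - predicted)


def relative_error(actual: float, predicted: float) -> float:
    """Relative Error: absolute error as a fraction of the actual value."""
    if actual == 0:
        raise ValueError("relative error is undefined when the actual value is 0")
    return abs(actual - predicted) / abs(actual)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root Mean Square Error over paired sequences of equal, non-zero length."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    # Hypothetical values: actual RUL of 100 cycles, predicted RUL of 90 cycles.
    print(absolute_error(100.0, 90.0))
    print(relative_error(100.0, 90.0))
    # Hypothetical SOH trajectory versus a model's predictions.
    print(rmse([1.0, 0.95, 0.90], [0.99, 0.96, 0.88]))
```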

HAUAR: Home Automation Using Action Recognition

Apr 26, 2019

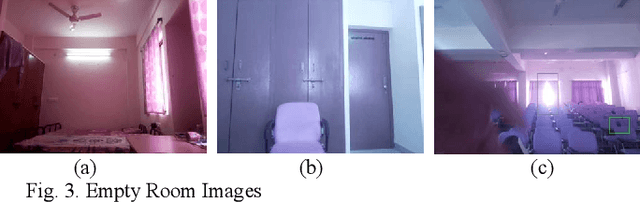

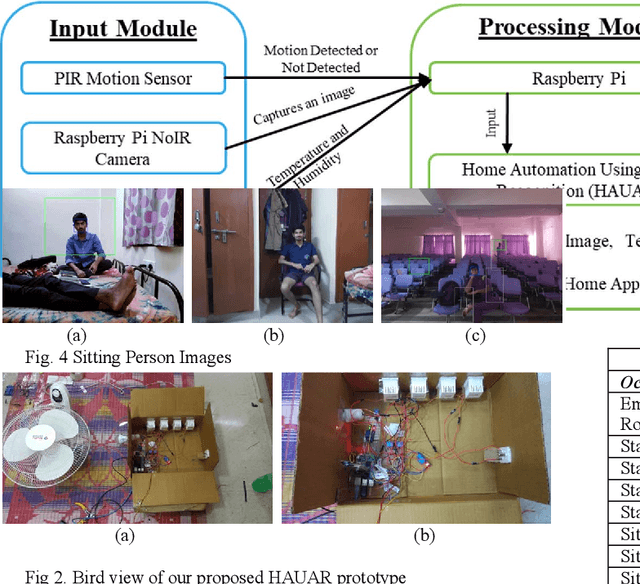

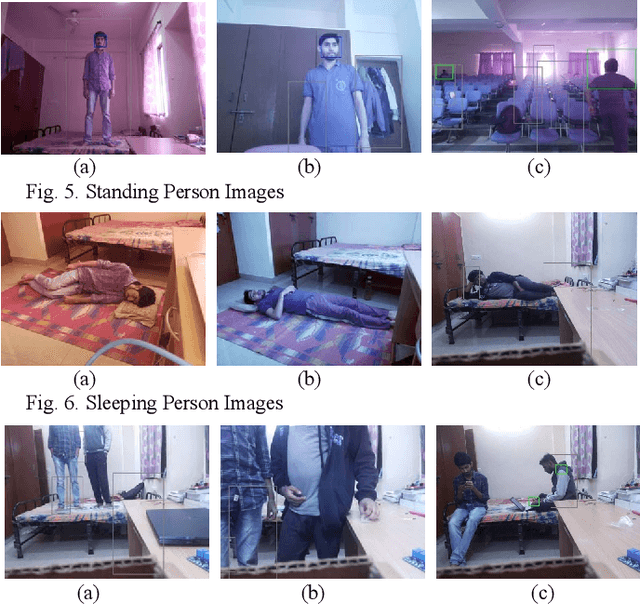

Abstract: Today, most deployed home automation systems are controlled by humans, which limits how far home appliances can be automated. Most of these systems also use Internet of Things technology to control the appliances. In this paper, we propose a system that uses action recognition to fully automate home appliances. We recognize three actions of a person (sitting, standing, and lying) as well as an empty room. The system achieved an accuracy of 90% in real-life test experiments. With this system, we remove human intervention from the control of home appliances while ensuring data privacy and reducing energy consumption through efficient and optimal appliance use.

Drishtikon: An advanced navigational aid system for visually impaired people

Apr 23, 2019

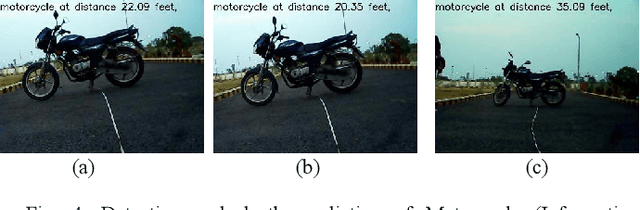

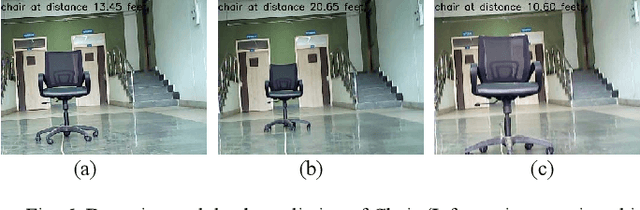

Abstract: Today, most aid systems deployed for visually impaired people serve a single purpose, be it navigation, object detection, or distance perception. Most of them also rely on indoor navigation, which requires prior knowledge of the environment, so they often fail to help visually impaired people in unfamiliar scenarios. In this paper, we propose an aid system that uses object detection and depth perception to navigate a person without colliding with objects. The prototype detects 90 different types of objects and computes their distances from the user. We also implemented a navigation feature that takes the target destination as input from the user and navigates the person to that destination using the Google Directions API. With this system, we built a multi-feature, high-accuracy navigational aid that can be deployed in the wild and help visually impaired people in their daily lives by navigating them effortlessly to their desired destination.
