Samarjit Chakraborty

Resource-Conscious RL Algorithms for Deep Brain Stimulation

Jan 19, 2026

Abstract:Deep Brain Stimulation (DBS) has proven to be a promising treatment for Parkinson's Disease (PD). DBS involves stimulating specific regions of the brain's Basal Ganglia (BG) using electric impulses to alleviate symptoms of PD such as tremors, rigidity, and bradykinesia. Although most clinical DBS approaches today use a fixed frequency and amplitude, they suffer from side effects (such as slurring of speech) and shortened battery life of the implant. Reinforcement learning (RL) approaches have been used in recent research to perform DBS in a more adaptive manner to improve overall patient outcome. These RL algorithms are, however, too complex to be trained in vivo due to their long convergence time and high computational requirements. We propose a new Time & Threshold-Triggered Multi-Armed Bandit (T3P MAB) RL approach for DBS that is more effective than existing algorithms. Further, our T3P agent is lightweight enough to be deployed in the implant, unlike current deep-RL strategies, and even forgoes the need for an offline training phase. Additionally, most existing RL approaches have focused on modulating only frequency or amplitude, and the possibility of tuning them together remains largely unexplored in the literature. Our RL agent can tune both frequency and amplitude of DBS signals to the brain with better sample efficiency and requires minimal time to converge. We implement an MAB agent for DBS for the first time on hardware to report energy measurements and prove its suitability for resource-constrained platforms. Our T3P MAB algorithm is deployed on a variety of microcontroller unit (MCU) setups to show its efficiency in terms of power consumption as opposed to other existing RL approaches used in recent work.
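
To make the idea of a bandit that tunes frequency and amplitude together more concrete, the following is a minimal sketch of a generic UCB1 multi-armed bandit whose arms are (frequency level, amplitude level) pairs. It is not the T3P MAB algorithm from the abstract, whose time and threshold triggers are not described here. The level indices, the `toy_reward` function, and the `run_toy_session` helper are hypothetical placeholders chosen only for illustration.

```python
import itertools
import math
import random

# Hypothetical discrete settings; these are abstract level indices, not clinical values.
FREQ_LEVELS = (0, 1, 2)
AMP_LEVELS = (0, 1, 2)


class UCB1Bandit:
    """Generic UCB1 bandit; each arm is one (frequency level, amplitude level) pair."""

    def __init__(self, arms):
        self.arms = list(arms)
        self.counts = [0] * len(self.arms)    # number of pulls per arm
        self.values = [0.0] * len(self.arms)  # running mean reward per arm

    def select(self):
        # Try every arm once before applying the confidence-bound rule.
        for i, n in enumerate(self.counts):
            if n == 0:
                return i
        total = sum(self.counts)
        scores = [
            v + math.sqrt(2.0 * math.log(total) / n)
            for v, n in zip(self.values, self.counts)
        ]
        return max(range(len(self.arms)), key=scores.__getitem__)

    def update(self, i, reward):
        # Incremental mean: constant memory per arm, no stored history.
        self.counts[i] += 1
        self.values[i] += (reward - self.values[i]) / self.counts[i]


def toy_reward(arm, rng):
    """Hypothetical noisy reward that favours the arm (1, 2); a stand-in for a
    real symptom/energy trade-off signal, which this sketch does not model."""
    base = 1.0 if arm == (1, 2) else 0.2
    return base + rng.gauss(0.0, 0.05)


def run_toy_session(steps=2000, seed=0):
    rng = random.Random(seed)
    bandit = UCB1Bandit(itertools.product(FREQ_LEVELS, AMP_LEVELS))
    for _ in range(steps):
        i = bandit.select()
        bandit.update(i, toy_reward(bandit.arms[i], rng))
    return bandit
```

The per-arm state is two numbers (a count and a running mean), which is one reason bandit-style agents are plausible candidates for memory-constrained microcontrollers; the sketch makes no claim about actual energy use.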

Enhancing Split Computing and Early Exit Applications through Predefined Sparsity

Jul 16, 2024

Abstract:In the past decade, Deep Neural Networks (DNNs) achieved state-of-the-art performance in a broad range of problems, spanning from object classification and action recognition to smart building and healthcare. The flexibility that makes DNNs such a pervasive technology comes at a price: the computational requirements preclude their deployment on most of the resource-constrained edge devices available today to solve real-time and real-world tasks. This paper introduces a novel approach to address this challenge by combining the concept of predefined sparsity with Split Computing (SC) and Early Exit (EE). In particular, SC aims at splitting a DNN with a part of it deployed on an edge device and the rest on a remote server. EE, in contrast, allows the system to stop using the remote server and rely solely on the edge device's computation if the answer is already good enough. How to apply predefined sparsity to an SC and EE paradigm has never been studied. This paper studies this problem and shows how predefined sparsity significantly reduces the computational, storage, and energy burdens during the training and inference phases, regardless of the hardware platform. This makes it a valuable approach for enhancing the performance of SC and EE applications. Experimental results showcase reductions exceeding 4x in storage and computational complexity without compromising performance. The source code is available at https://github.com/intelligolabs/sparsity_sc_ee.
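
The early-exit decision described above can be sketched as a confidence check on the edge device's output. This is a minimal illustration and not the code from the linked repository: the function names, the softmax-confidence criterion, and the 0.9 threshold are assumptions, and the predefined-sparsity part of the paper is not modelled.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_decision(edge_logits, threshold=0.9):
    """Return (label, confidence, exit_early) for the edge-side classifier head.

    The threshold is a hypothetical tuning knob: exit locally when the top
    class probability is at least this large.
    """
    probs = softmax(edge_logits)
    confidence = max(probs)
    label = probs.index(confidence)
    return label, confidence, confidence >= threshold


def infer(edge_logits, remote_fn, threshold=0.9):
    """Use the edge prediction if it is confident enough; otherwise call
    `remote_fn`, a stand-in for sending intermediate features to the server."""
    label, _, exit_early = early_exit_decision(edge_logits, threshold)
    return label if exit_early else remote_fn()
```

For example, `infer([5.0, 0.0, 0.0], remote_fn=lambda: 2)` keeps the edge prediction because the top class is confident, while `infer([1.0, 0.9, 0.8], remote_fn=lambda: 2)` falls back to the remote result.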

MTL-Split: Multi-Task Learning for Edge Devices using Split Computing

Jul 08, 2024

Abstract:Split Computing (SC), where a Deep Neural Network (DNN) is intelligently split with a part of it deployed on an edge device and the rest on a remote server, is emerging as a promising approach. It allows the power of DNNs to be leveraged for latency-sensitive applications that do not allow the entire DNN to be deployed remotely, while not having sufficient computation bandwidth available locally. In many such embedded systems scenarios, such as those in the automotive domain, computational resource constraints also necessitate Multi-Task Learning (MTL), where the same DNN is used for multiple inference tasks instead of having dedicated DNNs for each task, which would need more computing bandwidth. However, how to partition such a multi-tasking DNN to be deployed within an SC framework has not been sufficiently studied. This paper studies this problem, and MTL-Split, our novel proposed architecture, shows encouraging results on both synthetic and real-world data. The source code is available at https://github.com/intelligolabs/MTL-Split.

WiFiEye -- Seeing over WiFi Made Accessible

Apr 06, 2022

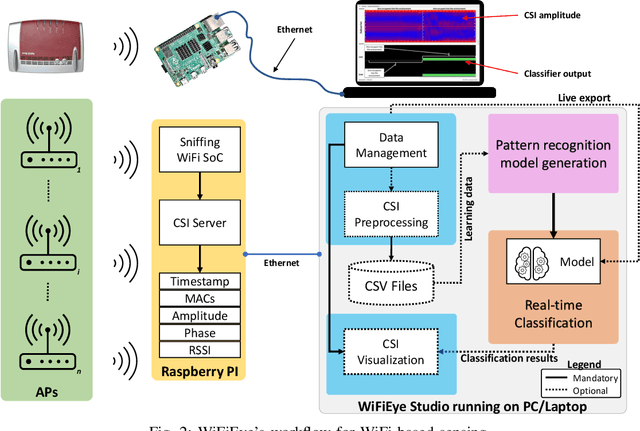

Abstract:While commonly used for communication purposes, an increasing number of recent studies consider WiFi for sensing. In particular, wireless signals are altered (e.g., reflected and attenuated) by the human body and objects in the environment. This can be perceived by an observer to infer information on human activities or changes in the environment and, hence, to "see" over WiFi. Until now, works on WiFi-based sensing have resulted in a set of custom software tools - each designed for a specific purpose. Moreover, given how scattered the literature is, it is difficult to even identify all steps/functions necessary to build a basic system for WiFi-based sensing. This has led to a high entry barrier, hindering further research in this area. There has been no effort to integrate these tools or to build a general software framework that can serve as the basis for further research, e.g., on using machine learning to interpret the altered WiFi signals. To address this issue, in this paper, we propose WiFiEye - a generic software framework that makes all necessary steps/functions available "out of the box". This way, WiFiEye allows researchers to easily bootstrap new WiFi-based sensing applications, thereby focusing on research rather than on implementation aspects. To illustrate WiFiEye's workflow, we present a case study on WiFi-based human activity recognition.

Vehicle Position Estimation with Aerial Imagery from Unmanned Aerial Vehicles

May 13, 2020

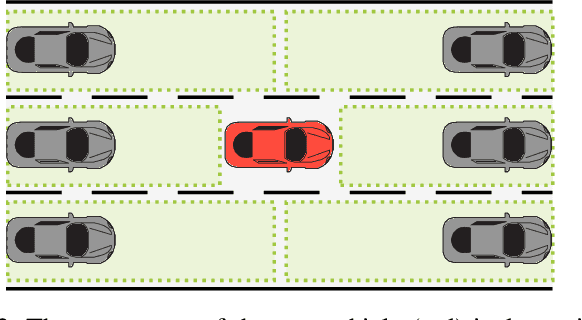

Abstract:The availability of real-world data is a key element for novel developments in the fields of automotive and traffic research. Aerial imagery has the major advantage of recording multiple objects simultaneously and overcomes limitations such as occlusions. However, there are only a few data sets available. This work describes a process to estimate a precise vehicle position from aerial imagery. A robust object detection is crucial for reliable results, hence the state-of-the-art deep neural network Mask-RCNN is applied for that purpose. Two training data sets are employed: The first one is optimized for detecting the test vehicle, while the second one consists of randomly selected images recorded on public roads. To reduce errors, several aspects are accounted for, such as the drone movement and the perspective projection from a photograph. The estimated position is compared with a reference system installed in the test vehicle. It is shown that a mean accuracy of 20 cm can be achieved with flight altitudes up to 100 m, Full-HD resolution and a frame-by-frame detection. A reliable position estimation is the basis for further data processing, such as obtaining additional vehicle state variables. The source code, training weights, labeled data and example videos are made publicly available. This helps researchers create new traffic data sets with specific local conditions.

* Copyright 20xx IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works

Unsupervised and Supervised Learning with the Random Forest Algorithm for Traffic Scenario Clustering and Classification

Apr 05, 2020

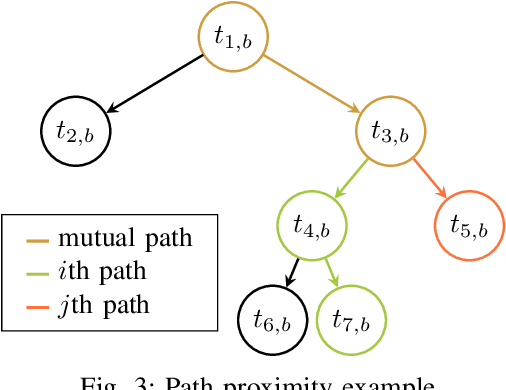

Abstract:The goal of this paper is to provide a method that automatically finds categories of traffic scenarios. The architecture consists of three main components: A microscopic traffic simulation, a clustering technique and a classification technique for the operational phase. The developed simulation tool models each vehicle separately, while maintaining the dependencies between the vehicles. The clustering approach consists of a modified unsupervised Random Forest algorithm to find a data-adaptive similarity measure between all scenarios. As part of this, the path proximity, a novel technique to determine a similarity based on the Random Forest algorithm, is presented. In the second part of the clustering, the similarities are used to define a set of clusters. In the third part, a Random Forest classifier is trained using the defined clusters for the operational phase. A thresholding technique is described to ensure a certain confidence level for the class assignment. The method is applied to highway scenarios. The results show that the proposed method is an excellent approach to automatically categorize traffic scenarios, which is particularly relevant for testing autonomous vehicle functionality.

* Copyright 20xx IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works
