Eduardo Sánchez Morales

High precision indoor positioning by means of LiDAR

May 14, 2020

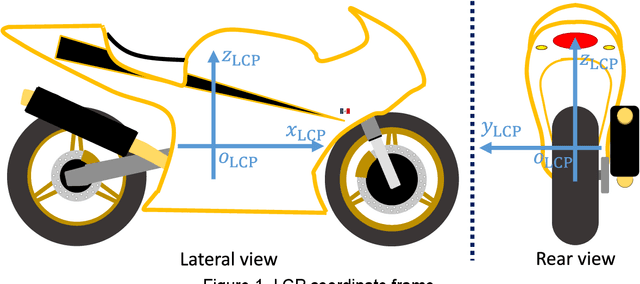

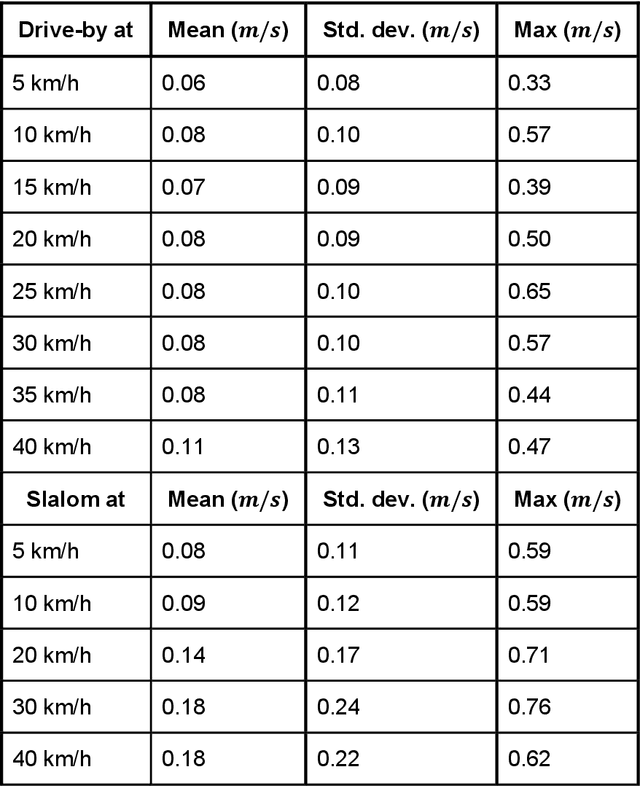

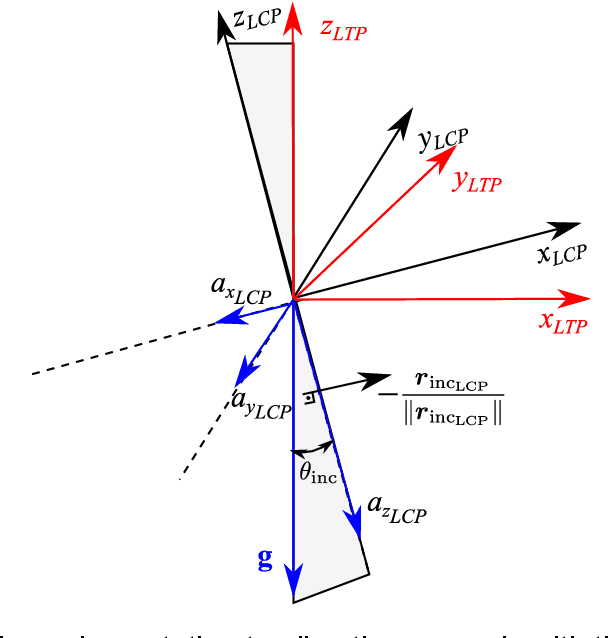

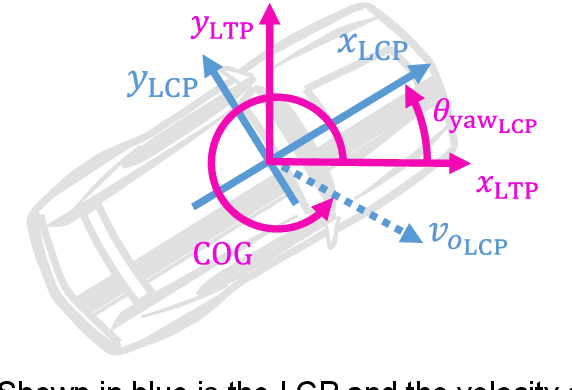

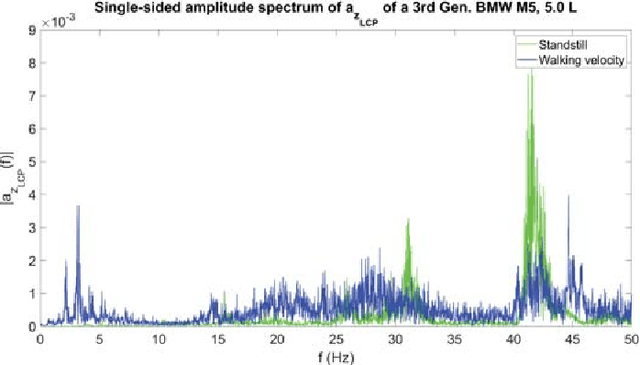

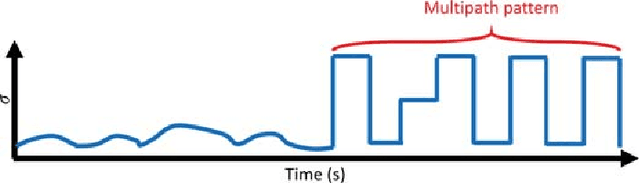

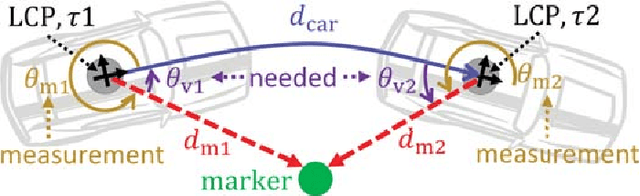

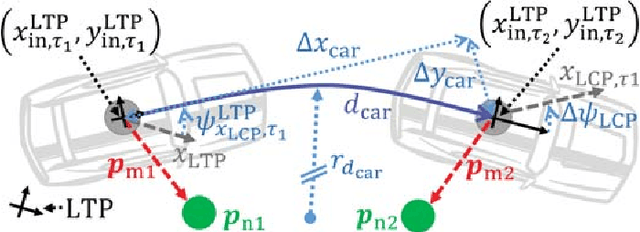

Abstract: The trend towards autonomous driving and the continuous research in the automotive area, such as Advanced Driver Assistance Systems (ADAS), require accurate localization under all circumstances. An accurate estimation of the vehicle state is a basic requirement for any trajectory-planning algorithm. Even though the introduction of the GPS L5 band promises lane-level accuracy, coverage limitations in roofed areas still have to be addressed. In this work, a method for high precision indoor positioning using a LiDAR is presented. The method is based on the combination of motion models with LiDAR measurements, and uses infrastructural elements as positioning references. This allows the orientation, velocity over ground and position of a vehicle to be estimated in a Local Tangent Plane (LTP) reference frame. When the outputs of the proposed method are compared to those of an Automotive Dynamic Motion Analyzer (ADMA), mean errors of 1 degree, 0.1 m/s and 4.7 cm, respectively, are obtained. The method can be implemented by using a LiDAR sensor as a stand-alone unit. A median runtime of 40.77 µs on an Intel i7-6820HQ CPU signals the possibility of real-time processing.

High Precision Indoor Navigation for Autonomous Vehicles

May 14, 2020

Abstract: Autonomous driving is an important trend in the automotive industry. The continuous research towards this goal requires a precise reference vehicle state estimation under all circumstances in order to develop and test autonomous vehicle functions. However, even though lane-accurate positioning is expected from upcoming technologies, such as the L5 GPS band, the question of accurate positioning in roofed areas, e.g., tunnels or parking garages, still has to be addressed. In this paper, a novel procedure for a reference vehicle state estimation is presented. The procedure includes three main components. First, a robust standstill detection based purely on signals from an Inertial Measurement Unit. Second, a vehicle state estimation by means of statistical filtering. Third, a high accuracy LiDAR-based positioning method that delivers velocity, position and orientation correction data with mean errors of 0.1 m/s, 4.7 cm and 1°, respectively. Runtime tests on a CPU indicate the possibility of real-time implementation.

Parallel Multi-Hypothesis Algorithm for Criticality Estimation in Traffic and Collision Avoidance

May 14, 2020

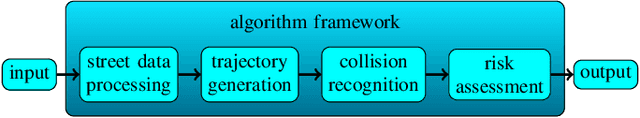

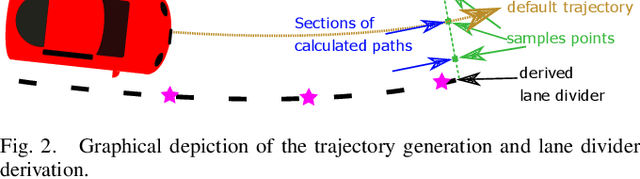

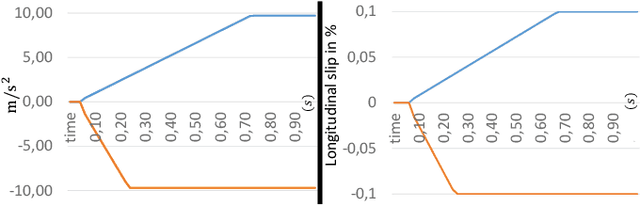

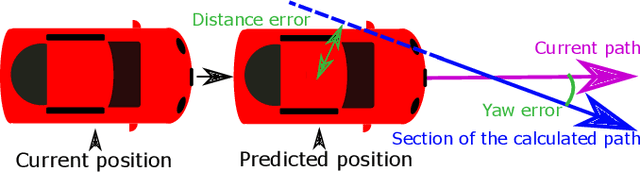

Abstract: Due to the current developments towards autonomous driving and vehicle active safety, there is an increasing need for algorithms that are able to perform complex criticality predictions in real time. Being able to process multi-object traffic scenarios aids the implementation of a variety of automotive applications, such as driver assistance systems for collision prevention and mitigation as well as fall-back systems for autonomous vehicles. We present a fully model-based algorithm with a parallelizable architecture. The proposed algorithm can evaluate the criticality of complex, multi-modal (vehicles and pedestrians) traffic scenarios by simulating millions of trajectory combinations and detecting collisions between objects. The algorithm is able to estimate upcoming criticality at very early stages, demonstrating its potential for vehicle safety systems and autonomous driving applications. A prototypical implementation on an embedded system in a test vehicle demonstrates the compatibility of the algorithm with the hardware capabilities of modern cars. For a complex traffic scenario with 11 dynamic objects, more than 86 million pose combinations are evaluated in 21 ms on the GPU of a Drive PX 2.

Vehicle Position Estimation with Aerial Imagery from Unmanned Aerial Vehicles

May 13, 2020

Abstract: The availability of real-world data is a key element for novel developments in the fields of automotive and traffic research. Aerial imagery has the major advantage of recording multiple objects simultaneously and overcomes limitations such as occlusions. However, there are only a few data sets available. This work describes a process to estimate a precise vehicle position from aerial imagery. Robust object detection is crucial for reliable results; hence, the state-of-the-art deep neural network Mask-RCNN is applied for that purpose. Two training data sets are employed: the first one is optimized for detecting the test vehicle, while the second one consists of randomly selected images recorded on public roads. To reduce errors, several aspects are accounted for, such as the drone movement and the perspective projection from a photograph. The estimated position is compared with a reference system installed in the test vehicle. It is shown that a mean accuracy of 20 cm can be achieved with flight altitudes up to 100 m, Full-HD resolution and a frame-by-frame detection. A reliable position estimation is the basis for further data processing, such as obtaining additional vehicle state variables. The source code, training weights, labeled data and example videos are made publicly available. This supports researchers in creating new traffic data sets with specific local conditions.

* Copyright 20xx IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works

Unsupervised and Supervised Learning with the Random Forest Algorithm for Traffic Scenario Clustering and Classification

Apr 05, 2020

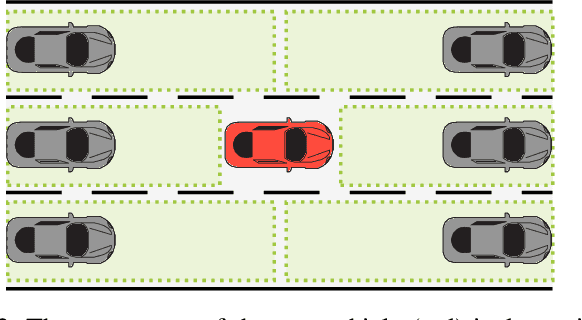

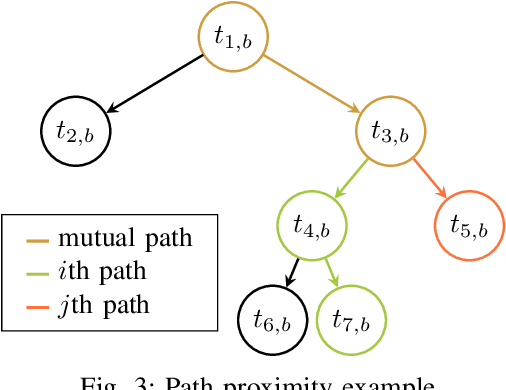

Abstract: The goal of this paper is to provide a method that is able to find categories of traffic scenarios automatically. The architecture consists of three main components: a microscopic traffic simulation, a clustering technique and a classification technique for the operational phase. The developed simulation tool models each vehicle separately, while maintaining the dependencies between the vehicles. The clustering approach consists of a modified unsupervised Random Forest algorithm to find a data-adaptive similarity measure between all scenarios. As part of this, the path proximity, a novel technique to determine a similarity based on the Random Forest algorithm, is presented. In the second part of the clustering, the similarities are used to define a set of clusters. In the third part, a Random Forest classifier is trained using the defined clusters for the operational phase. A thresholding technique is described to ensure a certain confidence level for the class assignment. The method is applied to highway scenarios. The results show that the proposed method is an excellent approach to automatically categorize traffic scenarios, which is particularly relevant for testing autonomous vehicle functionality.
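
The thresholding idea mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not specify how the confidence is computed, so this example assumes (hypothetically) that confidence is the share of trees voting for the winning class, and that scenarios below the threshold are rejected. The function name `classify_with_threshold`, the default threshold of 0.8 and the `"unknown"` label are illustrative choices, not taken from the paper.

```python
from collections import Counter


def classify_with_threshold(tree_votes, threshold=0.8, reject_label="unknown"):
    """Assign a class only if the winning vote share reaches the threshold.

    tree_votes: list of class labels, one per tree (assumed input format).
    Returns a (label, confidence) tuple; label is reject_label when the
    vote share of the most common class is below the threshold.
    """
    if not tree_votes:
        return reject_label, 0.0
    # Most frequent class among the per-tree votes and its count.
    label, count = Counter(tree_votes).most_common(1)[0]
    confidence = count / len(tree_votes)
    if confidence >= threshold:
        return label, confidence
    return reject_label, confidence


# Usage: 9 of 10 trees agree, so the class is accepted.
print(classify_with_threshold(["lane_change"] * 9 + ["cut_in"]))
# Usage: only 6 of 10 trees agree, so the scenario is rejected.
print(classify_with_threshold(["lane_change"] * 6 + ["cut_in"] * 4))
```

Rejecting low-confidence assignments in this way trades coverage for reliability: fewer scenarios receive a class, but those that do meet the chosen confidence level.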

