Andrés García Higuera

High precision indoor positioning by means of LiDAR

May 14, 2020

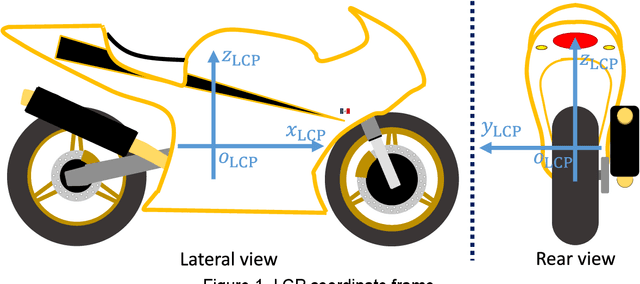

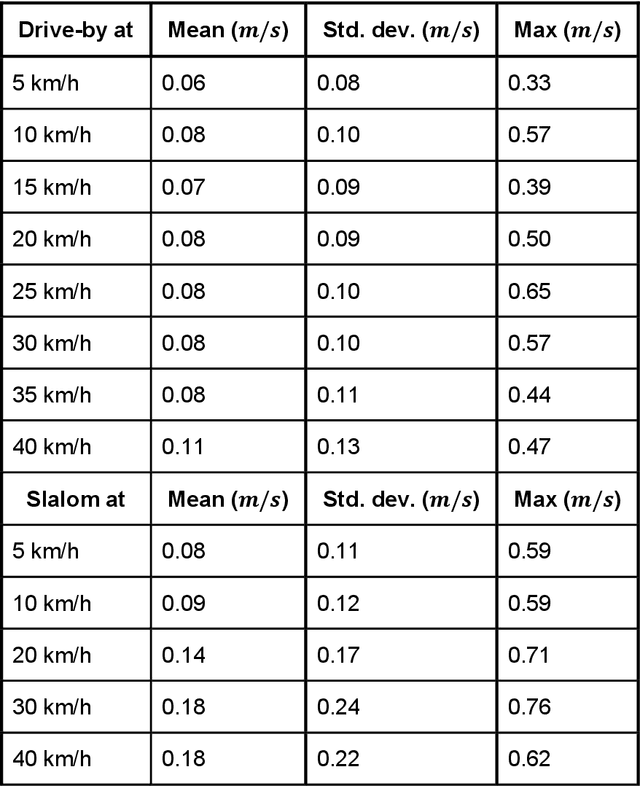

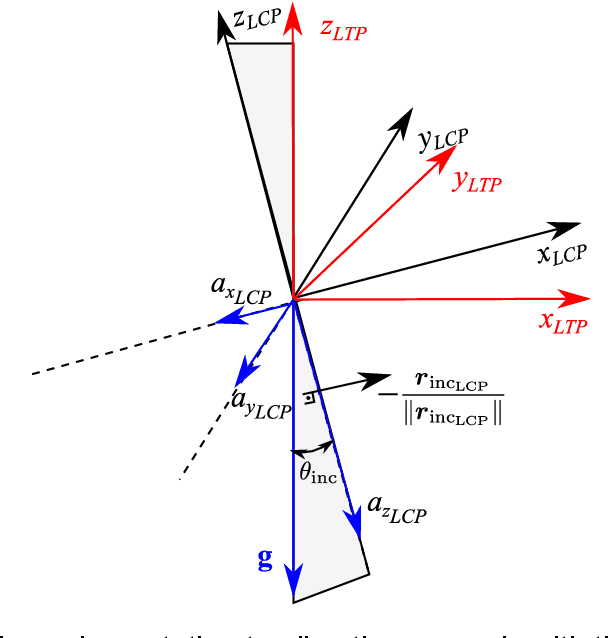

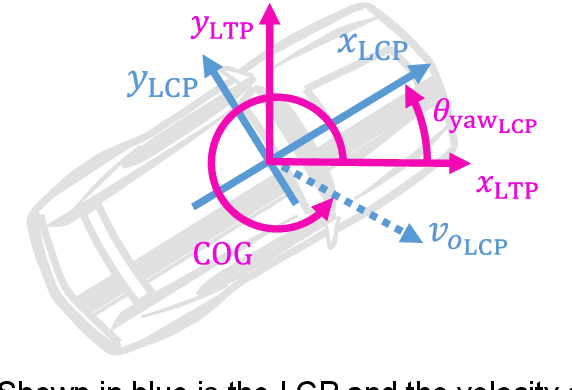

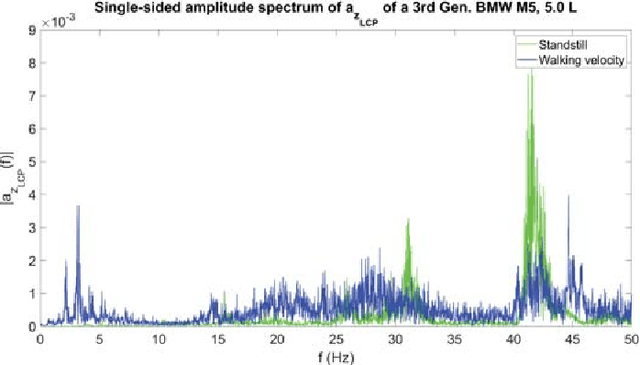

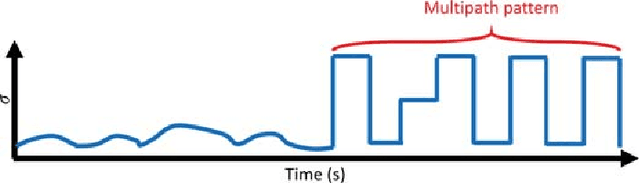

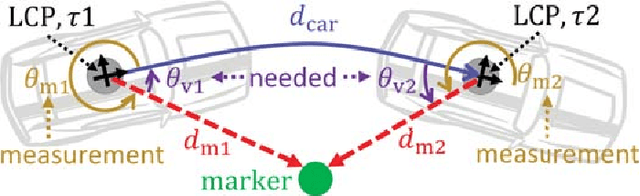

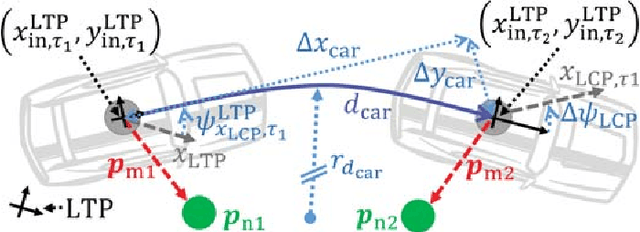

Abstract: The trend towards autonomous driving and ongoing research in the automotive field, such as Advanced Driver Assistance Systems (ADAS), require accurate localization under all circumstances. An accurate estimate of the vehicle state is a basic requirement for any trajectory-planning algorithm. Even though the introduction of the GPS L5 band promises lane-level accuracy, coverage limitations in roofed areas still have to be addressed. In this work, a method for high-precision indoor positioning using a LiDAR is presented. The method combines motion models with LiDAR measurements and uses infrastructural elements as positioning references. This makes it possible to estimate the orientation, velocity over ground and position of a vehicle in a Local Tangent Plane (LTP) reference frame. Compared with the outputs of an Automotive Dynamic Motion Analyzer (ADMA), the method yields mean errors of 1° in orientation, 0.1 m/s in velocity and 4.7 cm in position. The method can be implemented with a LiDAR sensor as a stand-alone unit. A median runtime of 40.77 µs on an Intel i7-6820HQ CPU suggests that real-time processing is possible.
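The abstract names the fusion of a motion model with LiDAR measurements but not the estimator itself. As a minimal sketch, assuming a linear Kalman filter with a constant-velocity motion model applied independently to one LTP axis, the predict/update cycle could look like the following. The class `AxisKF`, the helper `simulate`, the noise values and the simulated 5 cm LiDAR noise are all hypothetical; orientation estimation and the matching of infrastructural elements are not modeled.

```python
import random
from dataclasses import dataclass, field


@dataclass
class AxisKF:
    """Constant-velocity Kalman filter for a single LTP axis (hypothetical sketch).

    State x = [position (m), velocity (m/s)]. The motion model and the noise
    values are illustrative assumptions, not taken from the source.
    """
    q: float = 0.05        # white-acceleration noise density [m^2/s^3] (assumed)
    r: float = 0.05 ** 2   # LiDAR position variance [m^2] (assumed 5 cm std)
    x: list = field(default_factory=lambda: [0.0, 0.0])
    P: list = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])

    def predict(self, dt: float) -> None:
        """Propagate with F = [[1, dt], [0, 1]]: P <- F P F^T + Q."""
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        q = self.q
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt**3 / 3, b + dt * d + q * dt**2 / 2],
            [c + dt * d + q * dt**2 / 2, d + q * dt],
        ]

    def update(self, z: float) -> None:
        """Correct with a LiDAR position fix z (measurement matrix H = [1, 0])."""
        (a, b), (c, d) = self.P
        s = a + self.r                 # innovation variance
        k0, k1 = a / s, c / s          # Kalman gain
        y = z - self.x[0]              # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


def simulate(v_true: float = 2.0, dt: float = 0.1, steps: int = 200, seed: int = 0):
    """Drive at constant speed and fuse simulated noisy LiDAR position fixes."""
    rng = random.Random(seed)
    kf = AxisKF()
    pos = 0.0
    for _ in range(steps):
        pos += v_true * dt
        kf.predict(dt)
        kf.update(pos + rng.gauss(0.0, 0.05))
    return kf, pos
```

Calling `simulate()` returns the filter and the true final position; after 20 s of simulated driving at 2 m/s, `kf.x[1]` should be close to 2.0. A constant-velocity model is chosen here only because it is the simplest motion model whose velocity state is observable from position fixes alone.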

High Precision Indoor Navigation for Autonomous Vehicles

May 14, 2020

Abstract: Autonomous driving is an important trend in the automotive industry. Continuous research towards this goal requires a precise reference estimate of the vehicle state under all circumstances in order to develop and test autonomous vehicle functions. However, even though lane-accurate positioning is expected from upcoming technologies such as the GPS L5 band, accurate positioning in roofed areas, e.g., tunnels or parking garages, still has to be addressed. In this paper, a novel procedure for reference vehicle state estimation is presented. The procedure has three main components: first, a robust standstill detection based purely on signals from an Inertial Measurement Unit (IMU); second, a vehicle state estimation by means of statistical filtering; and third, a high-accuracy LiDAR-based positioning method that delivers velocity, position and orientation correction data with mean errors of 0.1 m/s, 4.7 cm and 1°, respectively. Runtime tests on a CPU indicate that a real-time implementation is possible.
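The abstract does not say how the IMU-only standstill detection works. One common approach, shown here as a hypothetical sketch, declares standstill when, over a sliding window, the variance of the accelerometer magnitude and the mean gyroscope magnitude both stay below thresholds. The class `StandstillDetector`, the window length and both thresholds are assumptions for illustration and would need tuning on real data.

```python
import math
from collections import deque
from statistics import pvariance


class StandstillDetector:
    """Flags standstill when IMU signals are quiet over a sliding window.

    Hypothetical sketch: window length and thresholds are illustrative
    and are not specified by the source.
    """

    def __init__(self, window: int = 50, acc_var_max: float = 0.01, gyro_max: float = 0.01):
        self.acc_norms = deque(maxlen=window)   # |a| samples [m/s^2]
        self.gyro_norms = deque(maxlen=window)  # |omega| samples [rad/s]
        self.acc_var_max = acc_var_max          # max variance of |a| [(m/s^2)^2]
        self.gyro_max = gyro_max                # max mean |omega| [rad/s]

    def push(self, acc, gyro) -> bool:
        """Add one 3-axis accelerometer and gyro sample; return True at standstill."""
        self.acc_norms.append(math.sqrt(sum(a * a for a in acc)))
        self.gyro_norms.append(math.sqrt(sum(w * w for w in gyro)))
        if len(self.acc_norms) < self.acc_norms.maxlen:
            return False  # not enough history yet
        acc_quiet = pvariance(list(self.acc_norms)) < self.acc_var_max
        gyro_quiet = sum(self.gyro_norms) / len(self.gyro_norms) < self.gyro_max
        return acc_quiet and gyro_quiet
```

Using vector magnitudes makes the test insensitive to how the IMU is mounted in the vehicle; engine vibration at idle raises the accelerometer variance, so the thresholds have to be chosen with that in mind.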
