Samar Fares

SPDMark: Selective Parameter Displacement for Robust Video Watermarking

Dec 12, 2025

Abstract: The advent of high-quality video generation models has amplified the need for robust watermarking schemes that can be used to reliably detect and track the provenance of generated videos. Existing video watermarking methods based on both post-hoc and in-generation approaches fail to simultaneously achieve imperceptibility, robustness, and computational efficiency. This work introduces a novel framework for in-generation video watermarking called SPDMark (pronounced 'SpeedMark') based on selective parameter displacement of a video diffusion model. Watermarks are embedded into the generated videos by modifying a subset of parameters in the generative model. To make the problem tractable, the displacement is modeled as an additive composition of layer-wise basis shifts, where the final composition is indexed by the watermarking key. For parameter efficiency, this work specifically leverages low-rank adaptation (LoRA) to implement the basis shifts. During the training phase, the basis shifts and the watermark extractor are jointly learned by minimizing a combination of message recovery, perceptual similarity, and temporal consistency losses. To detect and localize temporal modifications in the watermarked videos, we use a cryptographic hashing function to derive frame-specific watermark messages from the given base watermarking key. During watermark extraction, maximum bipartite matching is applied to recover the correct frame order, even from temporally tampered videos. Evaluations on both text-to-video and image-to-video generation models demonstrate the ability of SPDMark to generate imperceptible watermarks that can be recovered with high accuracy and also establish its robustness against a variety of common video modifications.
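The frame-ordering idea in the SPDMark abstract (hash-derived per-frame messages, then maximum bipartite matching at extraction time) can be illustrated with a minimal sketch. This is not the authors' implementation: the use of SHA-256, the 64-bit message length, the Hamming-distance threshold `max_errors`, and the function names `frame_message` and `recover_order` are all assumptions made for illustration, and the extractor network is replaced by bit lists supplied directly.

```python
import hashlib

def frame_message(base_key: bytes, frame_index: int, n_bits: int = 64) -> list:
    """Derive a per-frame bit message from the base key (assumed: SHA-256, up to 256 bits)."""
    digest = hashlib.sha256(base_key + frame_index.to_bytes(4, "big")).digest()
    bits = [(byte >> shift) & 1 for byte in digest for shift in range(8)]
    return bits[:n_bits]

def hamming(a, b) -> int:
    """Number of positions at which two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))

def recover_order(extracted, base_key: bytes, n_frames: int, max_errors: int = 2):
    """Map each extracted message to an original frame index (None if unmatched).

    An edge joins extracted position p and frame index i when their messages
    differ in at most max_errors bits; augmenting paths (Kuhn's algorithm)
    then yield a maximum bipartite matching over those edges.
    """
    n_bits = len(extracted[0]) if extracted else 0
    expected = [frame_message(base_key, i, n_bits) for i in range(n_frames)]
    candidates = [
        [i for i, e in enumerate(expected) if hamming(m, e) <= max_errors]
        for m in extracted
    ]
    owner = {}  # original frame index -> position in the extracted sequence

    def try_assign(pos, seen):
        for idx in candidates[pos]:
            if idx in seen:
                continue
            seen.add(idx)
            # Take a free index, or re-route the current owner elsewhere.
            if idx not in owner or try_assign(owner[idx], seen):
                owner[idx] = pos
                return True
        return False

    for pos in range(len(extracted)):
        try_assign(pos, set())

    result = [None] * len(extracted)
    for idx, pos in owner.items():
        result[pos] = idx
    return result
```

In this sketch, a shuffled sequence of extracted messages maps back to the original indices, and a frame whose message matches no expected message within the threshold is reported as `None`, which is one way a temporal edit could be localized.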

MirrorCheck: Efficient Adversarial Defense for Vision-Language Models

Jun 13, 2024

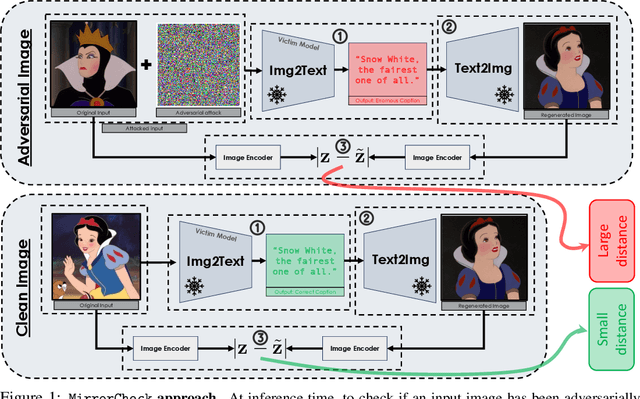

Abstract: Vision-Language Models (VLMs) are becoming increasingly vulnerable to adversarial attacks as various novel attack strategies are being proposed against these models. While existing defenses excel in unimodal contexts, they currently fall short in safeguarding VLMs against adversarial threats. To mitigate this vulnerability, we propose a novel, yet elegantly simple approach for detecting adversarial samples in VLMs. Our method leverages Text-to-Image (T2I) models to generate images based on captions produced by target VLMs. Subsequently, we calculate the similarities of the embeddings of both input and generated images in the feature space to identify adversarial samples. Empirical evaluations conducted on different datasets validate the efficacy of our approach, outperforming baseline methods adapted from image classification domains. Furthermore, we extend our methodology to classification tasks, showcasing its adaptability and model-agnostic nature. Theoretical analyses and empirical findings also show the resilience of our approach against adaptive attacks, positioning it as an excellent defense mechanism for real-world deployment against adversarial threats.

Redefining Contributions: Shapley-Driven Federated Learning

Jun 01, 2024

Abstract: Federated learning (FL) has emerged as a pivotal approach in machine learning, enabling multiple participants to collaboratively train a global model without sharing raw data. While FL finds applications in various domains such as healthcare and finance, it is challenging to ensure global model convergence when participants do not contribute equally and/or honestly. To overcome this challenge, principled mechanisms are required to evaluate the contributions made by individual participants in the FL setting. Existing solutions for contribution assessment rely on general accuracy evaluation, often failing to capture nuanced dynamics and class-specific influences. This paper proposes a novel contribution assessment method called ShapFed for fine-grained evaluation of participant contributions in FL. Our approach uses Shapley values from cooperative game theory to provide a granular understanding of class-specific influences. Based on ShapFed, we introduce a weighted aggregation method called ShapFed-WA, which outperforms conventional federated averaging, especially in class-imbalanced scenarios. Personalizing participant updates based on their contributions further enhances collaborative fairness by delivering differentiated models commensurate with the participant contributions. Experiments on CIFAR-10, Chest X-Ray, and Fed-ISIC2019 datasets demonstrate the effectiveness of our approach in improving utility, efficiency, and fairness in FL systems. The code can be found at https://github.com/tnurbek/shapfed.
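As a rough illustration of the two ingredients named in the ShapFed abstract, the sketch below computes exact Shapley values for a toy cooperative game and then uses them as weights when averaging participant updates. It is not the ShapFed or ShapFed-WA algorithm from the linked repository: the utility function, the clipping of negative scores to zero, the uniform fallback, and the names `shapley_values` and `weighted_aggregate` are assumptions for illustration only, and the abstract's class-specific evaluation is not modeled.

```python
from itertools import permutations

def shapley_values(n_players: int, utility) -> list:
    """Exact Shapley values: average marginal contribution over all orderings.

    `utility` maps a frozenset of player indices to a number. The cost grows
    factorially with n_players, so this is only practical for a few players.
    """
    totals = [0.0] * n_players
    orderings = list(permutations(range(n_players)))
    for order in orderings:
        coalition = frozenset()
        for player in order:
            joined = coalition | {player}
            totals[player] += utility(joined) - utility(coalition)
            coalition = joined
    return [t / len(orderings) for t in totals]

def weighted_aggregate(updates, contributions) -> list:
    """Average parameter vectors with weights proportional to contribution scores.

    Assumption: negative scores are clipped to zero, and uniform weights are
    used when every score is zero.
    """
    clipped = [max(c, 0.0) for c in contributions]
    total = sum(clipped)
    if total == 0:
        weights = [1.0 / len(updates)] * len(updates)
    else:
        weights = [c / total for c in clipped]
    n_params = len(updates[0])
    return [
        sum(w * u[j] for w, u in zip(weights, updates))
        for j in range(n_params)
    ]
```

With an additive toy utility, each participant's Shapley value equals its individual score, so a participant scored 3.0 receives three times the aggregation weight of one scored 1.0. Plain federated averaging corresponds to the special case of equal weights.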
