Saloni Dash

Rethinking Counterfactual Data Augmentation Under Confounding

May 29, 2023

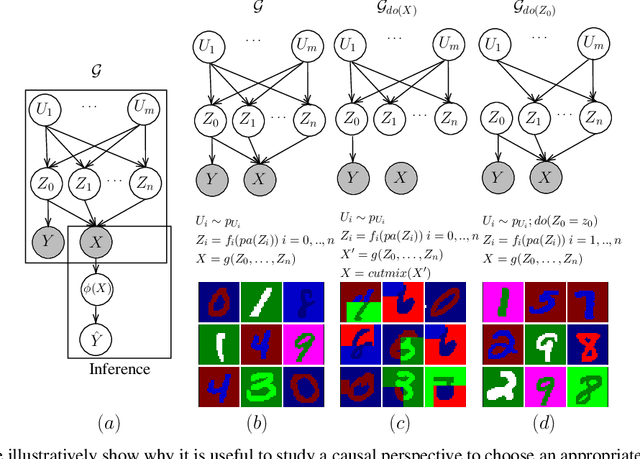

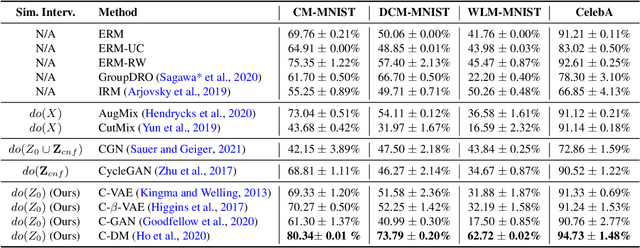

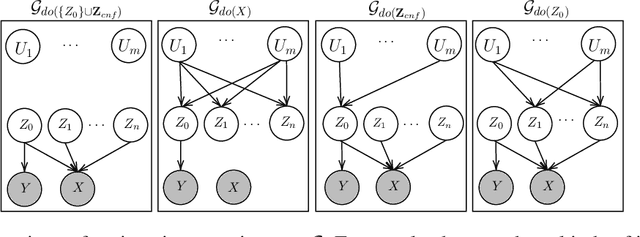

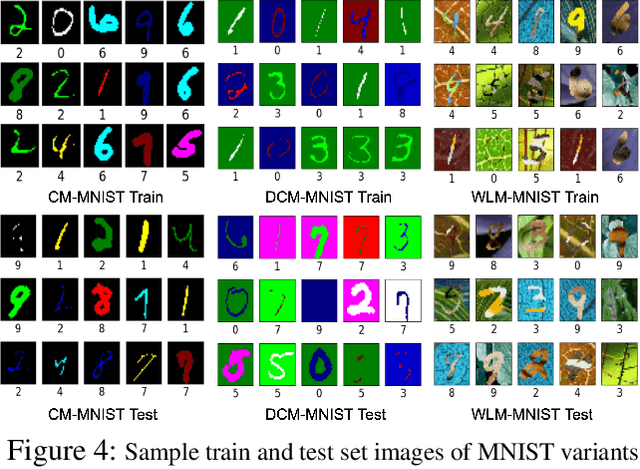

Abstract: Counterfactual data augmentation has recently emerged as a method to mitigate confounding biases in the training data for a machine learning model. These biases, such as spurious correlations, arise due to various observed and unobserved confounding variables in the data generation process. In this paper, we formally analyze how confounding biases impact downstream classifiers and present a causal viewpoint on the solutions based on counterfactual data augmentation. We explore how removing confounding biases serves as a means to learn invariant features, ultimately aiding in generalization beyond the observed data distribution. Additionally, we present a straightforward yet powerful algorithm for generating counterfactual images, which effectively mitigates the influence of confounding effects on downstream classifiers. Through experiments on MNIST variants and the CelebA dataset, we demonstrate the effectiveness and practicality of our approach.
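To make the augmentation idea concrete, here is a toy Python sketch under strong simplifying assumptions: the confounder is a single observable binary attribute `c` (think of the colour in a coloured-MNIST variant) that can be flipped directly, so the counterfactual of each sample is known exactly. In the paper the counterfactuals are generated images; the data, function names, and parameters below are hypothetical and only illustrate the general principle.

```python
import random

def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def make_confounded_data(n, p_align=0.9, seed=0):
    """Hypothetical toy samples (shape, c, y): y is the label, c is a binary
    spurious attribute that equals y with probability p_align, and shape is a
    noisy label-dependent feature standing in for the causal (invariant) signal."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        y = rng.randint(0, 1)
        c = y if rng.random() < p_align else 1 - y
        shape = y + rng.gauss(0.0, 0.5)
        data.append((shape, c, y))
    return data

def counterfactual_augment(data):
    """Append, for every sample, a counterfactual copy in which only the
    spurious attribute c is flipped; the label and causal feature are kept."""
    return data + [(shape, 1 - c, y) for shape, c, y in data]

train = make_confounded_data(10_000)
aug = counterfactual_augment(train)
corr_before = pearson([c for _, c, _ in train], [y for _, _, y in train])
corr_after = pearson([c for _, c, _ in aug], [y for _, _, y in aug])
print(f"corr(c, y) before: {corr_before:.3f}, after: {corr_after:.3f}")
```

Because every sample appears once with each value of `c`, the spurious correlation between `c` and the label vanishes in the augmented set, while the label-dependent `shape` feature is left untouched, so a classifier trained on it can no longer profit from the confounder.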

Divided We Rule: Influencer Polarization on Twitter During Political Crises in India

May 18, 2021

Abstract: Influencers are key to the nature and networks of information propagation on social media. Influencers are particularly important in political discourse through their engagement with issues, and may derive their legitimacy either solely or partly through online operation, or from an offline sphere of expertise, as entertainers, journalists, etc. do. To quantify influencers' political engagement and polarity, we use Google's Universal Sentence Encoder (USE) to encode the tweets of 6k influencers and 26k Indian politicians during political crises in India. We then obtain aggregate vector representations of the influencers based on their tweet embeddings, which, alongside retweet graphs, help compute the stance and polarity of these influencers with respect to the political issues. We find that while on COVID-19 there is a confluence of influencers on the side of the government, on three other contentious issues around citizenship, Kashmir's statehood, and farmers' protests, it is mainly government-aligned fan accounts that amplify the incumbent's positions. We propose that this method not only offers insight into the political schisms in present-day India, but also offers a means to study influencers and polarization in other contexts.
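The embedding-aggregation step can be illustrated with a minimal Python sketch. The abstract does not say how tweet embeddings are aggregated or how stance is scored, so both choices here are assumptions: mean pooling of each influencer's tweet vectors, and a stance score defined as the difference of cosine similarities to two group centroids. The tiny 3-dimensional vectors are made-up stand-ins for USE embeddings, the function names are hypothetical, and the retweet-graph component is not modelled.

```python
import math

def mean_vector(vectors):
    """Average a list of equal-length embedding vectors component-wise."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def stance_score(influencer_tweets, side_a_tweets, side_b_tweets):
    """Hypothetical stance score: positive if the influencer's mean embedding
    is closer (by cosine) to side A's centroid than to side B's, else negative."""
    profile = mean_vector(influencer_tweets)
    return (cosine(profile, mean_vector(side_a_tweets))
            - cosine(profile, mean_vector(side_b_tweets)))

# Made-up toy "embeddings"; real USE vectors are much higher-dimensional.
gov = [[1.0, 0.1, 0.0], [0.9, 0.0, 0.1]]
opp = [[0.0, 0.1, 1.0], [0.1, 0.0, 0.9]]
influencer = [[0.8, 0.2, 0.1], [0.7, 0.1, 0.2]]
print(round(stance_score(influencer, gov, opp), 3))
```

Swapping the two reference groups flips the sign of the score, which is what makes a single signed number usable as a polarity measure in this toy setup.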

Counterfactual Generation and Fairness Evaluation Using Adversarially Learned Inference

Sep 17, 2020

Abstract: Recent studies have reported biases in machine learning image classifiers, especially against particular demographic groups. Counterfactual examples for an input (perturbations that change specific features but not others) have been shown to be useful for evaluating explainability and fairness of machine learning models. However, generating counterfactual examples for images is non-trivial due to the underlying causal structure governing the various features of an image. To be meaningful, generated perturbations need to satisfy constraints implied by the causal model. We present a method for generating counterfactuals by incorporating a known causal graph structure in a conditional variant of Adversarially Learned Inference (ALI). The proposed approach learns causal relationships between the specified attributes of an image and generates counterfactuals in accordance with these relationships. On the Morpho-MNIST and CelebA datasets, the method generates counterfactuals that can change specified attributes and their causal descendants while keeping other attributes constant. As an application, we use the counterfactuals generated from CelebA images to evaluate fairness biases in a classifier that predicts the attractiveness of a face.
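What it means for a counterfactual to "change specified attributes and their causal descendants while keeping other attributes constant" can be shown with Pearl's three-step procedure (abduction, action, prediction) on a hand-written structural causal model. This is a swapped-in illustration, not the conditional ALI model: the Morpho-MNIST-style attribute names, the graph `thickness -> intensity`, and the linear coefficient are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical structural equations for three image attributes.
# Causal graph: thickness -> intensity; slant has no parents or children.
#   thickness = u_t
#   intensity = 0.5 * thickness + u_i
#   slant     = u_s

@dataclass
class Attributes:
    thickness: float
    intensity: float
    slant: float

def abduct(obs):
    """Step 1 (abduction): recover the exogenous noise consistent with obs."""
    return {"u_t": obs.thickness,
            "u_i": obs.intensity - 0.5 * obs.thickness,
            "u_s": obs.slant}

def counterfactual(obs, do_thickness):
    """Steps 2 and 3 (action, prediction): fix thickness by intervention, then
    recompute its descendants from the structural equations and abducted noise."""
    u = abduct(obs)
    thickness = do_thickness                # intervention replaces thickness's equation
    intensity = 0.5 * thickness + u["u_i"]  # causal descendant: recomputed
    slant = u["u_s"]                        # non-descendant: kept as observed
    return Attributes(thickness, intensity, slant)

x = Attributes(thickness=2.0, intensity=1.5, slant=0.3)
x_cf = counterfactual(x, do_thickness=4.0)
print(x_cf)
```

Intervening on thickness also shifts intensity (its descendant) but leaves slant fixed, and intervening with the already-observed thickness reproduces the original attributes, a basic consistency check for any counterfactual generator.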

Synthetic Event Time Series Health Data Generation

Nov 27, 2019

Abstract: Synthetic medical data that preserves privacy while maintaining utility can be used as an alternative to real medical data, which has privacy costs and resource constraints associated with it. At present, most models focus on generating cross-sectional health data, which is not necessarily representative of real data. In reality, medical data is longitudinal in nature, with a single patient having multiple health events, non-uniformly distributed throughout their lifetime. These events are influenced by patient covariates such as comorbidities, age group, gender, etc., as well as by external temporal effects (e.g., flu season). While seminal methods exist for modeling time series data, extending these methods to medical event time series data is considerably more challenging. Due to the complexity of the real data, in which each patient visit is an event, we transform the data by using summary statistics to characterize the events for a fixed set of time intervals, to facilitate analysis and interpretability. We then train a generative adversarial network to generate synthetic data. We demonstrate this approach by generating human sleep patterns from a publicly available dataset. We empirically evaluate the generated data and show close univariate resemblance between synthetic and real data. However, we also demonstrate how stratification by covariates is required to gain a deeper understanding of synthetic data quality.
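The preprocessing step, summarizing irregular events over a fixed set of time intervals, can be sketched in Python as below. The abstract does not specify which summary statistics are used, so the choice of per-window event count and mean recorded value, the window length, and the `(patient_id, time, value)` event format are assumptions; the function name and example data are hypothetical. The GAN training that follows is not shown.

```python
from collections import defaultdict

def summarize_events(events, window, n_windows):
    """Turn irregular per-patient events into a fixed-length representation.

    events: iterable of (patient_id, time, value); events with time outside
    [0, window * n_windows) are ignored.
    Returns {patient_id: [(count, mean_value), ...]} with one tuple per window;
    windows without events get (0, 0.0).
    """
    # Per patient, one [count, running_sum] accumulator per time window.
    sums = defaultdict(lambda: [[0, 0.0] for _ in range(n_windows)])
    for pid, t, value in events:
        k = int(t // window)  # index of the fixed interval containing t
        if 0 <= k < n_windows:
            sums[pid][k][0] += 1
            sums[pid][k][1] += value
    return {pid: [(c, s / c if c else 0.0) for c, s in bins]
            for pid, bins in sums.items()}

# Made-up example: hours slept, recorded on irregular days for two patients.
events = [("p1", 1, 7.0), ("p1", 3, 6.0), ("p1", 40, 8.0), ("p2", 65, 5.5)]
print(summarize_events(events, window=30, n_windows=3))
```

Every patient ends up with the same number of windows regardless of how many events they had, which gives a fixed-shape input that a standard generative model can consume.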
