Charchit Sharma

On Evaluation of Vision Datasets and Models using Human Competency Frameworks

Sep 06, 2024

Abstract:Evaluating models and datasets in computer vision remains a challenging task, with most leaderboards relying solely on accuracy. While accuracy is a popular metric for model evaluation, it provides only a coarse assessment by considering a single model's score on all dataset items. This paper explores Item Response Theory (IRT), a framework that infers interpretable latent parameters for an ensemble of models and each dataset item, enabling richer evaluation and analysis beyond the single accuracy number. Leveraging IRT, we assess model calibration, select informative data subsets, and demonstrate the usefulness of its latent parameters for analyzing and comparing models and datasets in computer vision.

Rethinking Counterfactual Data Augmentation Under Confounding

May 29, 2023

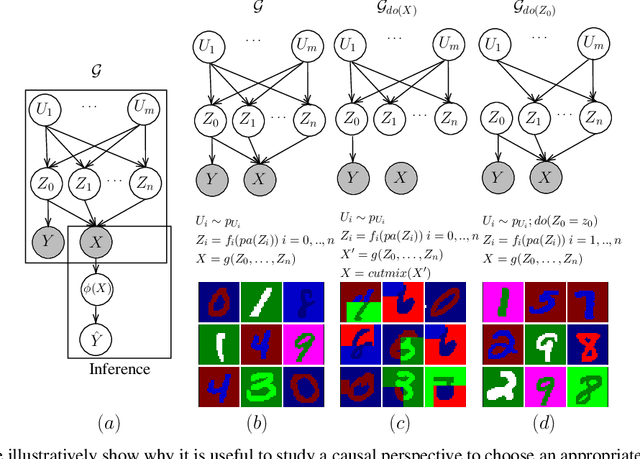

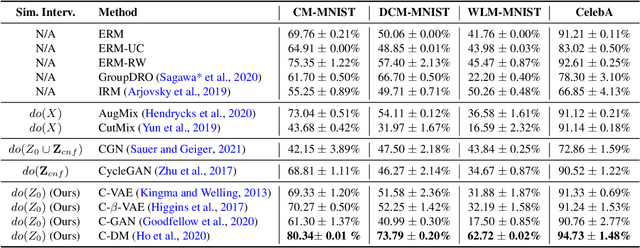

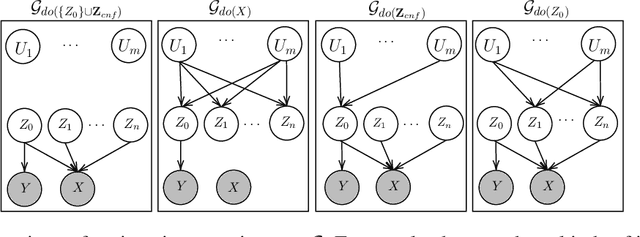

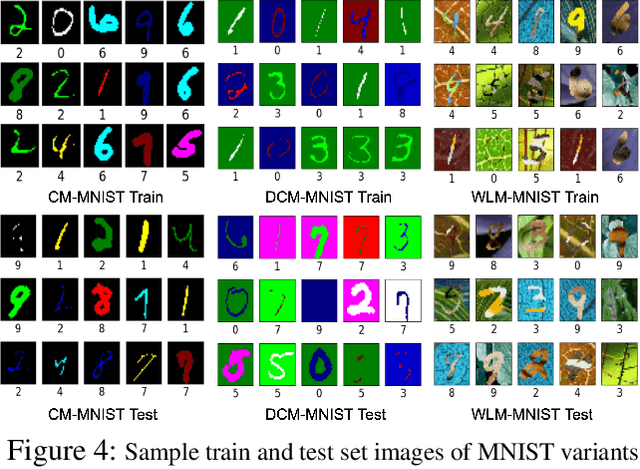

Abstract:Counterfactual data augmentation has recently emerged as a method to mitigate confounding biases in the training data for a machine learning model. These biases, such as spurious correlations, arise due to various observed and unobserved confounding variables in the data generation process. In this paper, we formally analyze how confounding biases impact downstream classifiers and present a causal viewpoint to the solutions based on counterfactual data augmentation. We explore how removing confounding biases serves as a means to learn invariant features, ultimately aiding in generalization beyond the observed data distribution. Additionally, we present a straightforward yet powerful algorithm for generating counterfactual images, which effectively mitigates the influence of confounding effects on downstream classifiers. Through experiments on MNIST variants and the CelebA datasets, we demonstrate the effectiveness and practicality of our approach.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge