Salman Ahmed

Biomedical image analysis competitions: The state of current participation practice

Dec 16, 2022Abstract:The number of international benchmarking competitions is steadily increasing in various fields of machine learning (ML) research and practice. So far, however, little is known about the common practice as well as bottlenecks faced by the community in tackling the research questions posed. To shed light on the status quo of algorithm development in the specific field of biomedical imaging analysis, we designed an international survey that was issued to all participants of challenges conducted in conjunction with the IEEE ISBI 2021 and MICCAI 2021 conferences (80 competitions in total). The survey covered participants' expertise and working environments, their chosen strategies, as well as algorithm characteristics. A median of 72% challenge participants took part in the survey. According to our results, knowledge exchange was the primary incentive (70%) for participation, while the reception of prize money played only a minor role (16%). While a median of 80 working hours was spent on method development, a large portion of participants stated that they did not have enough time for method development (32%). 25% perceived the infrastructure to be a bottleneck. Overall, 94% of all solutions were deep learning-based. Of these, 84% were based on standard architectures. 43% of the respondents reported that the data samples (e.g., images) were too large to be processed at once. This was most commonly addressed by patch-based training (69%), downsampling (37%), and solving 3D analysis tasks as a series of 2D tasks. K-fold cross-validation on the training set was performed by only 37% of the participants and only 50% of the participants performed ensembling based on multiple identical models (61%) or heterogeneous models (39%). 48% of the respondents applied postprocessing steps.

OpTorch: Optimized deep learning architectures for resource limited environments

May 04, 2021

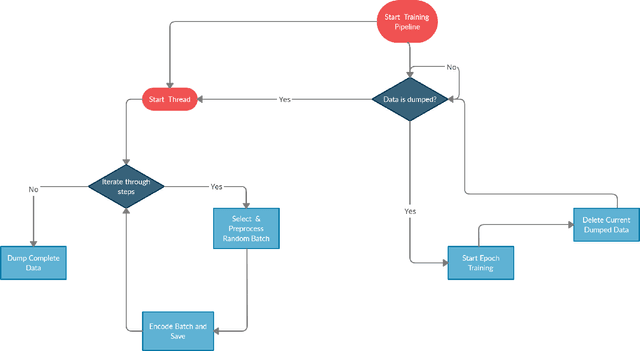

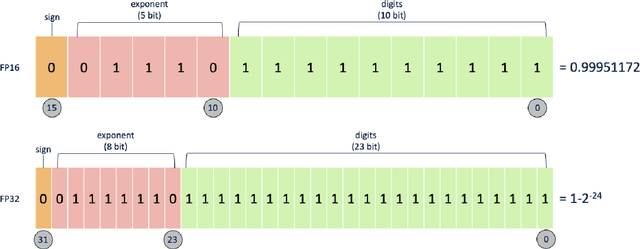

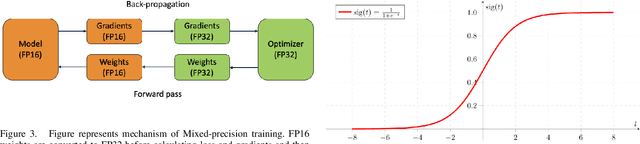

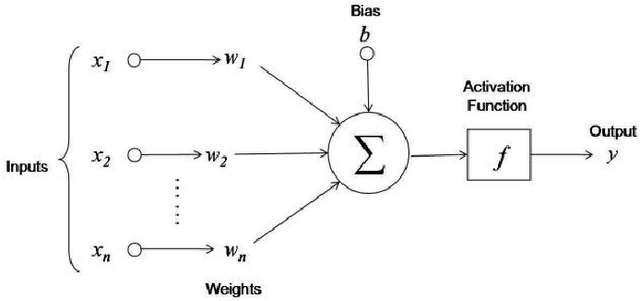

Abstract:Deep learning algorithms have made many breakthroughs and have various applications in real life. Computational resources become a bottleneck as the data and complexity of the deep learning pipeline increases. In this paper, we propose optimized deep learning pipelines in multiple aspects of training including time and memory. OpTorch is a machine learning library designed to overcome weaknesses in existing implementations of neural network training. OpTorch provides features to train complex neural networks with limited computational resources. OpTorch achieved the same accuracy as existing libraries on Cifar-10 and Cifar-100 datasets while reducing memory usage to approximately 50%. We also explore the effect of weights on total memory usage in deep learning pipelines. In our experiments, parallel encoding-decoding along with sequential checkpoints results in much improved memory and time usage while keeping the accuracy similar to existing pipelines. OpTorch python package is available at available at https://github.com/cbrl-nuces/optorch

Embedding Code Contexts for Cryptographic API Suggestion:New Methodologies and Comparisons

Mar 18, 2021

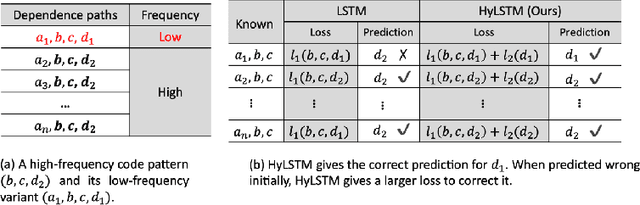

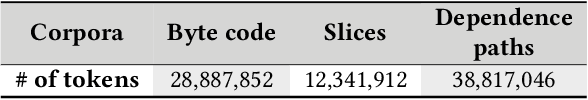

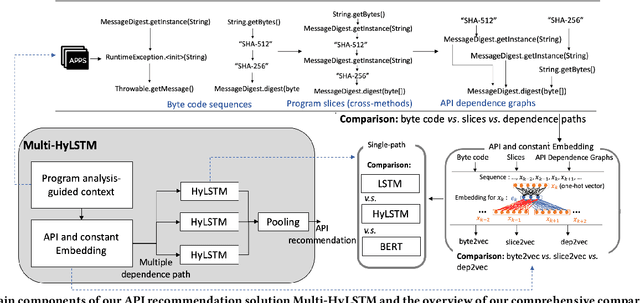

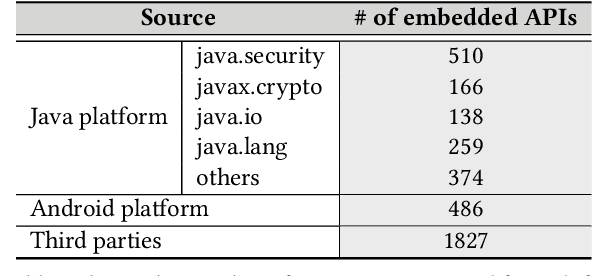

Abstract:Despite recent research efforts, the vision of automatic code generation through API recommendation has not been realized. Accuracy and expressiveness challenges of API recommendation needs to be systematically addressed. We present a new neural network-based approach, Multi-HyLSTM for API recommendation --targeting cryptography-related code. Multi-HyLSTM leverages program analysis to guide the API embedding and recommendation. By analyzing the data dependence paths of API methods, we train embedding and specialize a multi-path neural network architecture for API recommendation tasks that accurately predict the next API method call. We address two previously unreported programming language-specific challenges, differentiating functionally similar APIs and capturing low-frequency long-range influences. Our results confirm the effectiveness of our design choices, including program-analysis-guided embedding, multi-path code suggestion architecture, and low-frequency long-range-enhanced sequence learning, with high accuracy on top-1 recommendations. We achieve a top-1 accuracy of 91.41% compared with 77.44% from the state-of-the-art tool SLANG. In an analysis of 245 test cases, compared with the commercial tool Codota, we achieve a top-1 recommendation accuracy of 88.98%, which is significantly better than Codota's accuracy of 64.90%. We publish our data and code as a large Java cryptographic code dataset.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge