Sajjad Ghiasvand

MI-to-Mid Distilled Compression (M2M-DC): An Hybrid-Information-Guided-Block Pruning with Progressive Inner Slicing Approach to Model Compression

Nov 18, 2025

Abstract: We introduce MI-to-Mid Distilled Compression (M2M-DC), a two-scale, shape-safe compression framework that interleaves information-guided block pruning with progressive inner slicing and staged knowledge distillation (KD). First, M2M-DC ranks residual (or inverted-residual) blocks by a label-aware mutual information (MI) signal and removes the least informative units (structured prune-after-training). It then alternates short KD phases with stage-coherent, residual-safe channel slicing: (i) stage "planes" (co-slicing conv2 out-channels with the downsample path and next-stage inputs), and (ii) an optional mid-channel trim (conv1 out / bn1 / conv2 in). This targets complementary redundancy (whole computational motifs and within-stage width) while preserving residual shape invariants. On CIFAR-100, M2M-DC yields a clean accuracy-compute frontier. For ResNet-18, we obtain 85.46% Top-1 with 3.09M parameters and 0.0139 GMacs (72% params, 63% GMacs vs. teacher; mean final 85.29% over three seeds). For ResNet-34, we reach 85.02% Top-1 with 5.46M params and 0.0195 GMacs (74% / 74% vs. teacher; mean final 84.62%). Extending to inverted residuals, MobileNetV2 achieves a mean final 68.54% Top-1 at 1.71M params (27%) and 0.0186 conv GMacs (24%), improving on the teacher's 66.03% by 2.5 points across three seeds. Because M2M-DC exposes only a thin, architecture-aware interface (blocks, stages, and downsample/skip wiring), it generalizes across residual CNNs and extends to inverted-residual families with minor legalization rules. The result is a compact, practical recipe for deployment-ready models that match or surpass teacher accuracy at a fraction of the compute.

Few-Shot Adversarial Low-Rank Fine-Tuning of Vision-Language Models

May 21, 2025

Abstract: Vision-Language Models (VLMs) such as CLIP have shown remarkable performance in cross-modal tasks through large-scale contrastive pre-training. To adapt these large transformer-based models efficiently for downstream tasks, Parameter-Efficient Fine-Tuning (PEFT) techniques like LoRA have emerged as scalable alternatives to full fine-tuning, especially in few-shot scenarios. However, like traditional deep neural networks, VLMs are highly vulnerable to adversarial attacks, where imperceptible perturbations can significantly degrade model performance. Adversarial training remains the most effective strategy for improving model robustness in PEFT. In this work, we propose AdvCLIP-LoRA, the first algorithm designed to enhance the adversarial robustness of CLIP models fine-tuned with LoRA in few-shot settings. Our method formulates adversarial fine-tuning as a minimax optimization problem and provides theoretical guarantees for convergence under smoothness and nonconvex-strong-concavity assumptions. Empirical results across eight datasets using ViT-B/16 and ViT-B/32 models show that AdvCLIP-LoRA significantly improves robustness against common adversarial attacks (e.g., FGSM, PGD), without sacrificing much clean accuracy. These findings highlight AdvCLIP-LoRA as a practical and theoretically grounded approach for robust adaptation of VLMs in resource-constrained settings.
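
As a rough illustration of the minimax formulation mentioned in this abstract, a generic adversarial fine-tuning objective over LoRA factors can be written as below. This is a textbook-style sketch, not the paper's exact objective: the symbols $W_0$ (frozen pre-trained weights), $A, B$ (low-rank factors), $\delta_i$ (per-sample perturbation), $\epsilon$ (perturbation budget), and $\ell$ (loss) are all assumed notation.

```latex
\min_{A,\,B} \; \frac{1}{N} \sum_{i=1}^{N} \;
\max_{\|\delta_i\|_\infty \le \epsilon} \;
\ell\bigl( f_{W_0 + BA}(x_i + \delta_i),\; y_i \bigr)
```

Here only $A$ and $B$ are trained, so the outer minimization runs over far fewer parameters than full fine-tuning, while the inner maximization searches for a worst-case perturbation of each input.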

Decentralized Low-Rank Fine-Tuning of Large Language Models

Jan 26, 2025

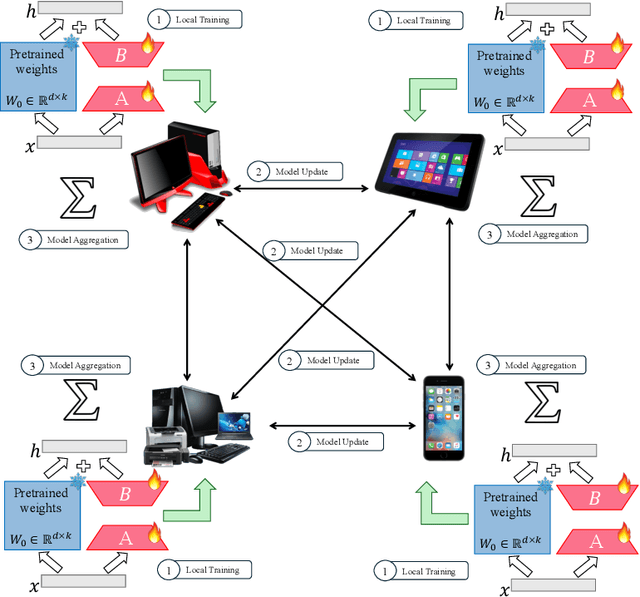

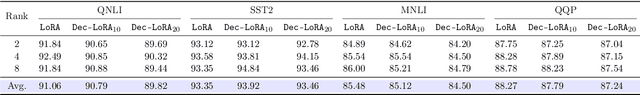

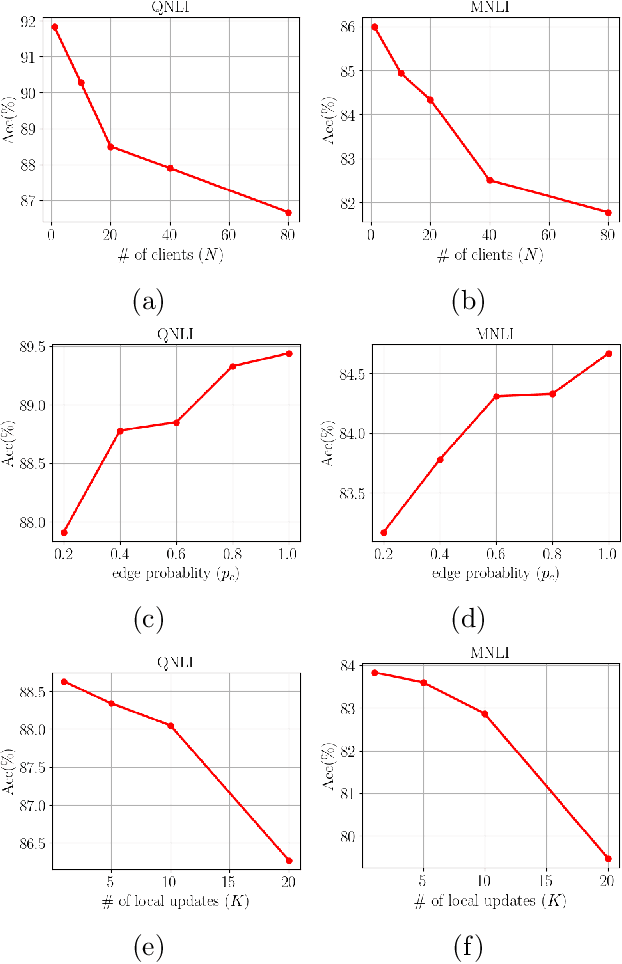

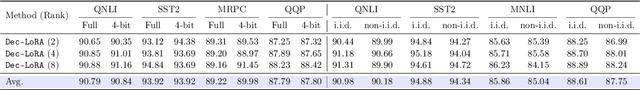

Abstract: The emergence of Large Language Models (LLMs) such as GPT-4, LLaMA, and BERT has transformed artificial intelligence, enabling advanced capabilities across diverse applications. While parameter-efficient fine-tuning (PEFT) techniques like LoRA offer computationally efficient adaptations of these models, their practical deployment often assumes centralized data and training environments. However, real-world scenarios frequently involve distributed, privacy-sensitive datasets that require decentralized solutions. Federated learning (FL) addresses data privacy by coordinating model updates across clients, but it is typically based on centralized aggregation through a parameter server, which can introduce bottlenecks and communication constraints. Decentralized learning, in contrast, eliminates this dependency by enabling direct collaboration between clients, improving scalability and efficiency in distributed environments. Despite its advantages, decentralized LLM fine-tuning remains underexplored. In this work, we propose Dec-LoRA, an algorithm for decentralized fine-tuning of LLMs based on low-rank adaptation (LoRA). Through extensive experiments on BERT and LLaMA-2 models, we evaluate Dec-LoRA's performance in handling data heterogeneity and quantization constraints, enabling scalable, privacy-preserving LLM fine-tuning in decentralized settings.
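
To make the idea of server-free collaboration concrete, the following is a minimal sketch of one neighbor-averaging (gossip) round applied to per-client LoRA factors. It is an illustration under assumptions, not the Dec-LoRA algorithm itself: the ring topology, the uniform 1/3 mixing weights, the shapes, and the helper names `ring_weights` and `gossip_round` are all hypothetical, and local training and quantization are omitted.

```python
import numpy as np

def ring_weights(n):
    """Hypothetical doubly stochastic mixing matrix for a ring of n >= 3 clients:
    each client averages itself with its two neighbours using weight 1/3."""
    W = np.zeros((n, n))
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i, j % n] += 1.0 / 3.0
    return W

def gossip_round(factors, W):
    """Mix per-client tensors along the client axis (axis 0) using W.
    factors has shape (n_clients, ...); the output has the same shape."""
    return np.tensordot(W, factors, axes=(1, 0))

rng = np.random.default_rng(0)
n_clients, d, r = 4, 8, 2  # assumed sizes: 4 clients, width 8, rank 2

# Stand-ins for LoRA factors after some local training on each client.
A = rng.normal(size=(n_clients, r, d))
B = rng.normal(size=(n_clients, d, r))

W = ring_weights(n_clients)
A_mixed = gossip_round(A, W)
B_mixed = gossip_round(B, W)

# Disagreement between clients before and after one gossip round.
before = np.linalg.norm(A - A.mean(axis=0))
after = np.linalg.norm(A_mixed - A_mixed.mean(axis=0))
print(f"disagreement before: {before:.4f}, after: {after:.4f}")
```

Because the assumed mixing matrix is doubly stochastic, the network-wide average of each factor is unchanged by a round while the spread between clients shrinks, which is the basic mechanism that lets clients agree without a parameter server.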

Communication-Efficient and Tensorized Federated Fine-Tuning of Large Language Models

Oct 16, 2024

Abstract: Parameter-efficient fine-tuning (PEFT) methods typically assume that Large Language Models (LLMs) are trained on data from a single device or client. However, real-world scenarios often require fine-tuning these models on private data distributed across multiple devices. Federated Learning (FL) offers an appealing solution by preserving user privacy, as sensitive data remains on local devices during training. Nonetheless, integrating PEFT methods into FL introduces two main challenges: communication overhead and data heterogeneity. In this paper, we introduce FedTT and FedTT+, methods for adapting LLMs by integrating tensorized adapters into client-side models' encoder/decoder blocks. FedTT is versatile and can be applied to both cross-silo FL and large-scale cross-device FL. FedTT+, an extension of FedTT tailored for cross-silo FL, enhances robustness against data heterogeneity by adaptively freezing portions of tensor factors, further reducing the number of trainable parameters. Experiments on BERT and LLaMA models demonstrate that our proposed methods successfully address data heterogeneity challenges and perform on par with or even better than existing federated PEFT approaches while achieving up to a 10× reduction in communication cost.

Robust Decentralized Learning with Local Updates and Gradient Tracking

May 02, 2024

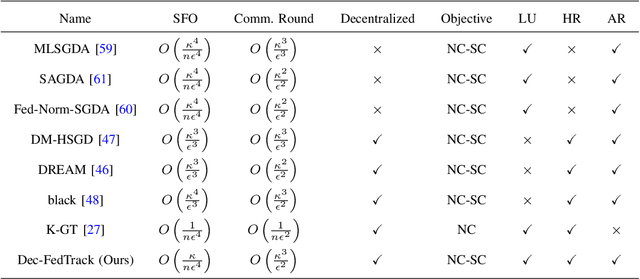

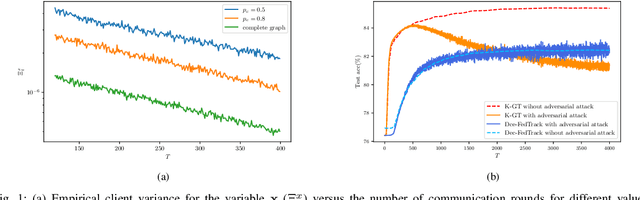

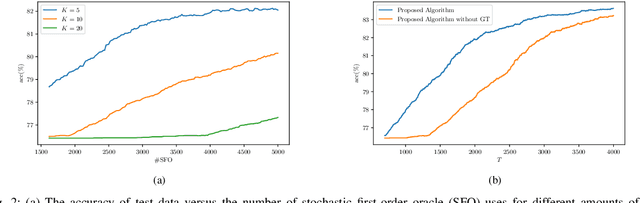

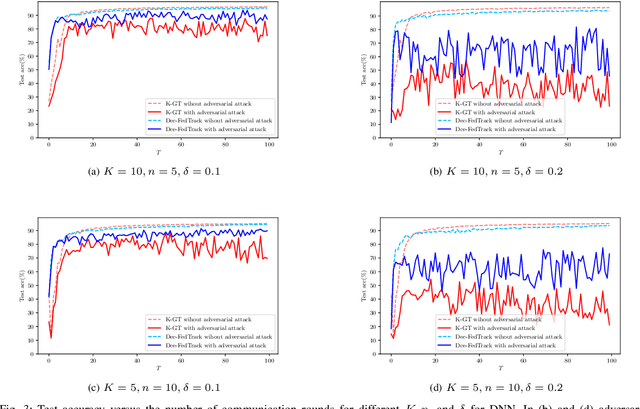

Abstract: As distributed learning applications such as Federated Learning, the Internet of Things (IoT), and Edge Computing grow, it is critical to address the shortcomings of such technologies from a theoretical perspective. As an abstraction, we consider decentralized learning over a network of communicating clients or nodes and tackle two major challenges: data heterogeneity and adversarial robustness. We propose a decentralized minimax optimization method that employs two important modules: local updates and gradient tracking. Minimax optimization is the key tool to enable adversarial training for ensuring robustness. Having local updates is essential in Federated Learning (FL) applications to mitigate the communication bottleneck, and utilizing gradient tracking is essential to proving convergence in the case of data heterogeneity. We analyze the performance of the proposed algorithm, Dec-FedTrack, in the case of nonconvex-strongly-concave minimax optimization, and prove that it converges to a stationary point. We also conduct numerical experiments to support our theoretical findings.
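
The role of gradient tracking under data heterogeneity can be sketched with the standard decentralized gradient-tracking recursion on a toy problem. This is not Dec-FedTrack: it is a plain minimization analogue with no minimax structure and no local updates, and the quadratic local losses, ring topology, step size, and function names are all assumptions chosen for illustration.

```python
import numpy as np

def ring_mixing_matrix(n):
    """Hypothetical doubly stochastic mixing matrix for a ring of n >= 3 nodes."""
    W = np.zeros((n, n))
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i, j % n] += 1.0 / 3.0
    return W

def gradient_tracking(targets, W, step=0.1, iters=500):
    """Standard gradient tracking on heterogeneous local losses
    f_i(x) = 0.5 * ||x - targets[i]||^2 (a toy stand-in for client data).

    x[i] is node i's model; y[i] tracks the network-average gradient.
    """
    x = np.zeros_like(targets)
    grad = x - targets            # local gradients at the current iterates
    y = grad.copy()               # trackers start at the local gradients
    for _ in range(iters):
        x_new = W @ x - step * y              # mix with neighbours, step along tracker
        grad_new = x_new - targets            # local gradients at the new iterates
        y = W @ y + grad_new - grad           # update the tracked average gradient
        x, grad = x_new, grad_new
    return x

rng = np.random.default_rng(0)
n_nodes, dim = 5, 3
targets = rng.normal(size=(n_nodes, dim))     # each node has a different minimizer

x_final = gradient_tracking(targets, ring_mixing_matrix(n_nodes))
global_minimizer = targets.mean(axis=0)       # minimizer of the average loss
print(np.abs(x_final - global_minimizer).max())
```

In this toy setting every node ends up at the minimizer of the average loss even though each local loss has a different minimizer, which is the property that motivates using tracking when client data is heterogeneous.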

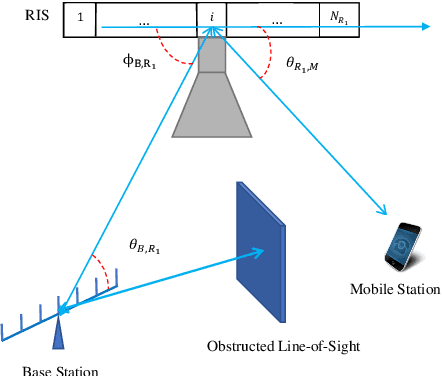

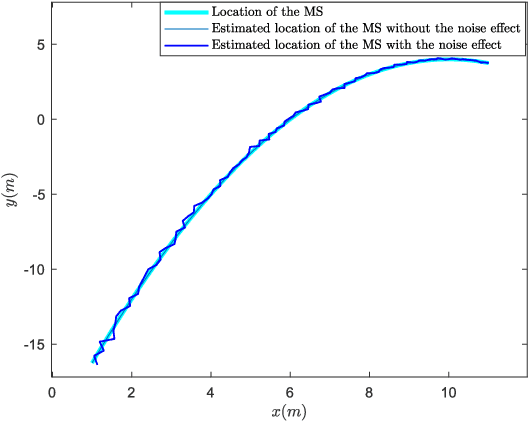

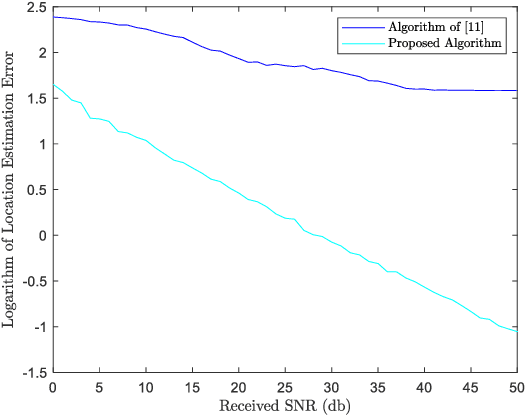

MISO Wireless Localization in The Presence of Reconfigurable Intelligent Surface

Aug 19, 2022

Abstract: Reconfigurable Intelligent Surface (RIS) plays a pivotal role in sixth-generation networks to enhance communication rate and localization accuracy. In this letter, we propose a positioning algorithm for a RIS-assisted environment, where the Base Station (BS) is multi-antenna and the Mobile Station (MS) is single-antenna. We show that our method can achieve high-precision positioning if the line-of-sight (LOS) is obstructed and three RISs are available. We send several known signals to the receiver in different time slots while simultaneously changing the phase shifters of the RISs in a suitable way, and we propose a technique to eliminate the destructive effect of the angle-of-departure (AoD) in order to determine the distance between each RIS and the MS. The accuracy of the proposed algorithm is better than that of algorithms that do not estimate the AoD, as shown in the numerical results section.
