Haniyeh Ehsani Oskouie

Exploring a Datasets Statistical Effect Size Impact on Model Performance, and Data Sample-Size Sufficiency

Jan 05, 2025Abstract:Having a sufficient quantity of quality data is a critical enabler of training effective machine learning models. Being able to effectively determine the adequacy of a dataset prior to training and evaluating a model's performance would be an essential tool for anyone engaged in experimental design or data collection. However, despite the need for it, the ability to prospectively assess data sufficiency remains an elusive capability. We report here on two experiments undertaken in an attempt to better ascertain whether or not basic descriptive statistical measures can be indicative of how effective a dataset will be at training a resulting model. Leveraging the effect size of our features, this work first explores whether or not a correlation exists between effect size, and resulting model performance (theorizing that the magnitude of the distinction between classes could correlate to a classifier's resulting success). We then explore whether or not the magnitude of the effect size will impact the rate of convergence of our learning rate, (theorizing again that a greater effect size may indicate that the model will converge more rapidly, and with a smaller sample size needed). Our results appear to indicate that this is not an effective heuristic for determining adequate sample size or projecting model performance, and therefore that additional work is still needed to better prospectively assess adequacy of data.

Leveraging Large Language Models and Topic Modeling for Toxicity Classification

Nov 26, 2024

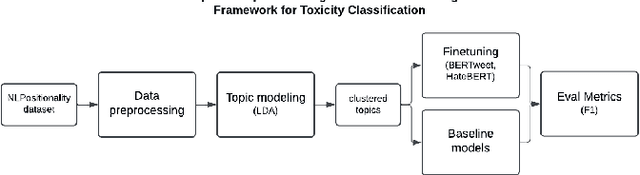

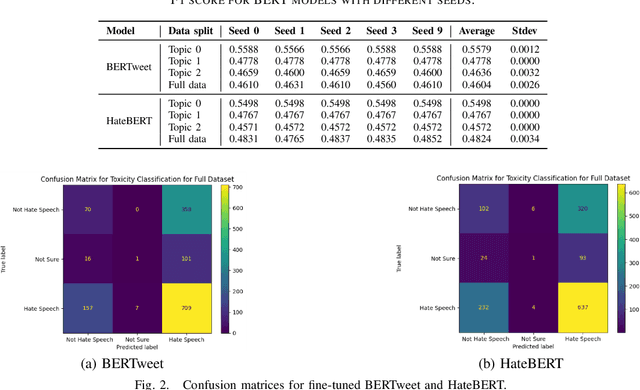

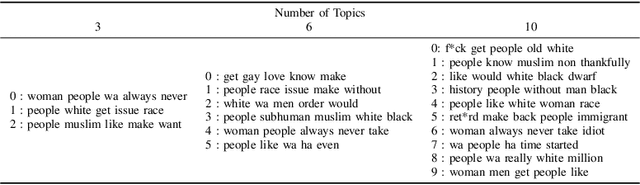

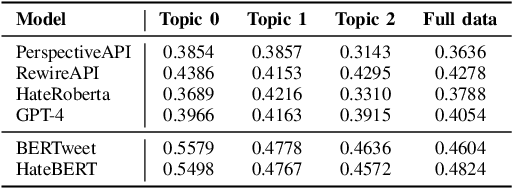

Abstract:Content moderation and toxicity classification represent critical tasks with significant social implications. However, studies have shown that major classification models exhibit tendencies to magnify or reduce biases and potentially overlook or disadvantage certain marginalized groups within their classification processes. Researchers suggest that the positionality of annotators influences the gold standard labels in which the models learned from propagate annotators' bias. To further investigate the impact of annotator positionality, we delve into fine-tuning BERTweet and HateBERT on the dataset while using topic-modeling strategies for content moderation. The results indicate that fine-tuning the models on specific topics results in a notable improvement in the F1 score of the models when compared to the predictions generated by other prominent classification models such as GPT-4, PerspectiveAPI, and RewireAPI. These findings further reveal that the state-of-the-art large language models exhibit significant limitations in accurately detecting and interpreting text toxicity contrasted with earlier methodologies. Code is available at https://github.com/aheldis/Toxicity-Classification.git.

Exploring Cross-model Neuronal Correlations in the Context of Predicting Model Performance and Generalizability

Aug 15, 2024

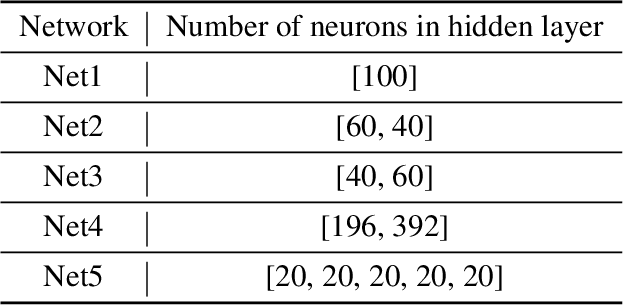

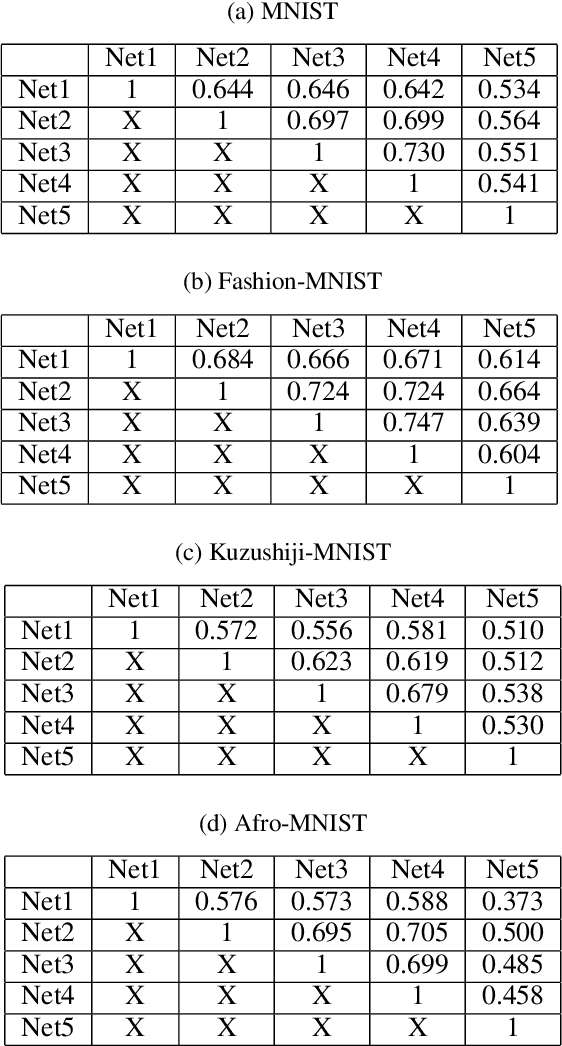

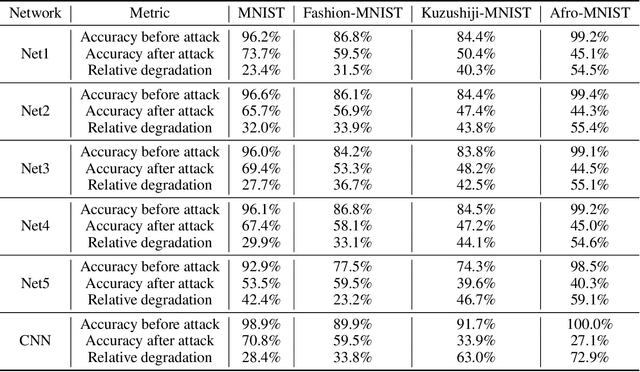

Abstract:As Artificial Intelligence (AI) models are increasingly integrated into critical systems, the need for a robust framework to establish the trustworthiness of AI is increasingly paramount. While collaborative efforts have established conceptual foundations for such a framework, there remains a significant gap in developing concrete, technically robust methods for assessing AI model quality and performance. A critical drawback in the traditional methods for assessing the validity and generalizability of models is their dependence on internal developer datasets, rendering it challenging to independently assess and verify their performance claims. This paper introduces a novel approach for assessing a newly trained model's performance based on another known model by calculating correlation between neural networks. The proposed method evaluates correlations by determining if, for each neuron in one network, there exists a neuron in the other network that produces similar output. This approach has implications for memory efficiency, allowing for the use of smaller networks when high correlation exists between networks of different sizes. Additionally, the method provides insights into robustness, suggesting that if two highly correlated networks are compared and one demonstrates robustness when operating in production environments, the other is likely to exhibit similar robustness. This contribution advances the technical toolkit for responsible AI, supporting more comprehensive and nuanced evaluations of AI models to ensure their safe and effective deployment.

Attack on Scene Flow using Point Clouds

Apr 28, 2024

Abstract:Deep neural networks have made significant advancements in accurately estimating scene flow using point clouds, which is vital for many applications like video analysis, action recognition, and navigation. Robustness of these techniques, however, remains a concern, particularly in the face of adversarial attacks that have been proven to deceive state-of-the-art deep neural networks in many domains. Surprisingly, the robustness of scene flow networks against such attacks has not been thoroughly investigated. To address this problem, the proposed approach aims to bridge this gap by introducing adversarial white-box attacks specifically tailored for scene flow networks. Experimental results show that the generated adversarial examples obtain up to 33.7 relative degradation in average end-point error on the KITTI and FlyingThings3D datasets. The study also reveals the significant impact that attacks targeting point clouds in only one dimension or color channel have on average end-point error. Analyzing the success and failure of these attacks on the scene flow networks and their 2D optical flow network variants show a higher vulnerability for the optical flow networks.

Interpretation of Neural Networks is Susceptible to Universal Adversarial Perturbations

Nov 30, 2022

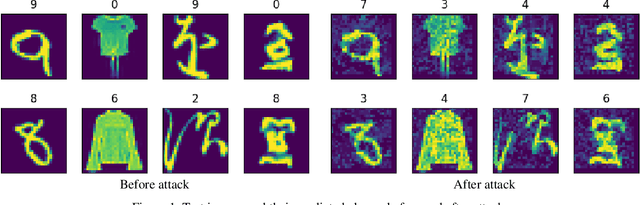

Abstract:Interpreting neural network classifiers using gradient-based saliency maps has been extensively studied in the deep learning literature. While the existing algorithms manage to achieve satisfactory performance in application to standard image recognition datasets, recent works demonstrate the vulnerability of widely-used gradient-based interpretation schemes to norm-bounded perturbations adversarially designed for every individual input sample. However, such adversarial perturbations are commonly designed using the knowledge of an input sample, and hence perform sub-optimally in application to an unknown or constantly changing data point. In this paper, we show the existence of a Universal Perturbation for Interpretation (UPI) for standard image datasets, which can alter a gradient-based feature map of neural networks over a significant fraction of test samples. To design such a UPI, we propose a gradient-based optimization method as well as a principal component analysis (PCA)-based approach to compute a UPI which can effectively alter a neural network's gradient-based interpretation on different samples. We support the proposed UPI approaches by presenting several numerical results of their successful applications to standard image datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge