Saiful Islam

Green-NAS: A Global-Scale Multi-Objective Neural Architecture Search for Robust and Efficient Edge-Native Weather Forecasting

Jan 30, 2026

Abstract: We introduce Green-NAS, a multi-objective neural architecture search (NAS) framework designed for low-resource environments, using weather forecasting as a case study. Following 'Green AI' principles, the framework explicitly minimizes computational energy cost and carbon footprint, prioritizing sustainable deployment over raw computational scale. The search jointly optimizes accuracy and efficiency as simultaneous objectives, yielding lightweight models that retain high accuracy with very few parameters. Our best-performing model, Green-NAS-A, achieved an RMSE of 0.0988 (i.e., within 1.4% of our manually tuned baseline) using only 153k model parameters, which is 239 times fewer than globally applied weather forecasting models such as GraphCast. We also show that, when only limited historical weather data is available for a city, transfer learning improves forecasting accuracy by approximately 5.2% compared with naively training a new model for that city.
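To make the accuracy-versus-size trade-off concrete, here is a minimal sketch of Pareto filtering over two objectives (RMSE and parameter count, both minimized). It is not the search procedure used by Green-NAS, which the abstract does not detail. Only the Green-NAS-A figures (RMSE 0.0988, 153k parameters) and the 239x ratio come from the abstract; the other candidates and all function names are hypothetical.

```python
from typing import List, Tuple

# (name, rmse, parameter_count); both objectives are minimized
Candidate = Tuple[str, float, int]


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on both objectives and strictly better on one."""
    no_worse = a[1] <= b[1] and a[2] <= b[2]
    strictly_better = a[1] < b[1] or a[2] < b[2]
    return no_worse and strictly_better


def pareto_front(cands: List[Candidate]) -> List[Candidate]:
    """Keep every candidate that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]


# Only the first entry uses numbers from the abstract; the rest are made up.
candidates = [
    ("green-nas-a", 0.0988, 153_000),
    ("hypothetical-large", 0.0975, 2_000_000),
    ("hypothetical-dominated", 0.1100, 400_000),
    ("hypothetical-tiny", 0.1300, 40_000),
]

front_names = [name for name, _, _ in pareto_front(candidates)]

# Reference model size implied by "239 times fewer" than 153k parameters.
implied_reference_params = 153_000 * 239

print(front_names)
print(implied_reference_params)
```

The dominated candidate is dropped because Green-NAS-A is better on both objectives; the remaining three each win on at least one axis, so choosing among them depends on the deployment budget.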

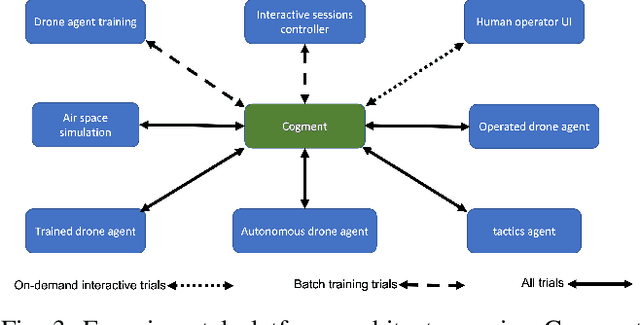

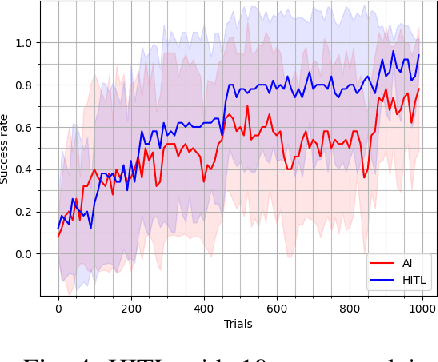

A Systematic Approach to Design Real-World Human-in-the-Loop Deep Reinforcement Learning: Salient Features, Challenges and Trade-offs

Apr 23, 2025

Abstract: With the growing popularity of deep reinforcement learning (DRL), the human-in-the-loop (HITL) approach has the potential to revolutionize the way we approach decision-making problems and to create new opportunities for human-AI collaboration. In this article, we introduce a novel multi-layered hierarchical HITL DRL algorithm that comprises three types of learning: self learning, imitation learning and transfer learning. In addition, we consider three forms of human input: reward, action and demonstration. Furthermore, we discuss the main challenges, trade-offs and advantages of HITL in solving complex problems, and how human information can be integrated into the AI solution systematically. To verify our technical results, we present a real-world unmanned aerial vehicle (UAV) problem wherein a number of enemy drones attack a restricted area. The objective is to design a scalable HITL DRL algorithm for ally drones to neutralize the enemy drones before they reach the area. To this end, we first implement our solution using an award-winning open-source HITL software called Cogment. We then demonstrate several interesting results: (a) HITL leads to faster training and higher performance, (b) advice acts as a guiding direction for gradient methods and lowers variance, and (c) the amount of advice should be neither too large nor too small, to avoid over-training and under-training. Finally, we illustrate the role of human-AI cooperation in solving two complex real-world scenarios, i.e., overloaded and decoy attacks.

A Structural Feature-Based Approach for Comprehensive Graph Classification

Aug 10, 2024

Abstract: The increasing prevalence of graph-structured data across various domains has intensified interest in graph classification tasks. While numerous sophisticated graph learning methods have emerged, their complexity often hinders practical implementation. In this article, we address this challenge by proposing a method that constructs feature vectors based on fundamental graph structural properties. We demonstrate that these features, despite their simplicity, are powerful enough to capture the intrinsic characteristics of graphs within the same class. We explore the efficacy of our approach using three distinct machine learning methods, highlighting how our feature-based classification leverages the inherent structural similarities of graphs within the same class to achieve accurate classification. A key advantage of our approach is its simplicity, which makes it accessible and adaptable to a broad range of applications, including social network analysis, bioinformatics, and cybersecurity. Furthermore, we conduct extensive experiments to validate the performance of our method, showing that it not only achieves competitive performance but in some cases surpasses the accuracy of more complex, state-of-the-art techniques. Our findings suggest that a focus on fundamental graph features can provide a robust and efficient alternative for graph classification, offering significant potential for both research and practical applications.
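As a rough illustration of what a structural feature vector might look like, the sketch below computes six basic properties from an undirected edge list. The abstract does not say which properties the authors use, so this particular feature set (node count, edge count, density, mean degree, maximum degree, triangle count) and the function names are assumptions chosen for illustration. Isolated nodes are not represented, since the graph is given only by its edges.

```python
from itertools import combinations
from typing import Dict, Hashable, Iterable, List, Set, Tuple

Edge = Tuple[Hashable, Hashable]


def build_adjacency(edges: Iterable[Edge]) -> Dict[Hashable, Set[Hashable]]:
    """Undirected adjacency sets; self-loops are ignored."""
    adj: Dict[Hashable, Set[Hashable]] = {}
    for u, v in edges:
        if u == v:
            continue
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def structural_features(edges: Iterable[Edge]) -> List[float]:
    """Return [nodes, edges, density, mean degree, max degree, triangles]."""
    adj = build_adjacency(edges)
    n = len(adj)
    degrees = [len(nbrs) for nbrs in adj.values()]
    m = sum(degrees) // 2  # each edge contributes to two degrees
    density = 2 * m / (n * (n - 1)) if n > 1 else 0.0
    mean_degree = sum(degrees) / n if n else 0.0
    # A triangle is found once from each of its three corners.
    corner_hits = sum(
        1
        for nbrs in adj.values()
        for a, b in combinations(nbrs, 2)
        if b in adj[a]
    )
    triangles = corner_hits // 3
    return [float(n), float(m), density, mean_degree,
            float(max(degrees, default=0)), float(triangles)]


# Toy graphs: a triangle with one pendant node, and a 4-node path.
triangle_with_tail = structural_features([(0, 1), (1, 2), (2, 0), (2, 3)])
path_graph = structural_features([(0, 1), (1, 2), (2, 3)])
print(triangle_with_tail)
print(path_graph)
```

Vectors like these have a fixed length regardless of graph size, so they can be passed directly to any standard classifier.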

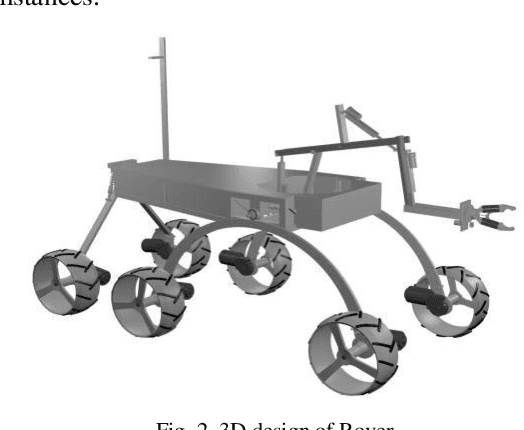

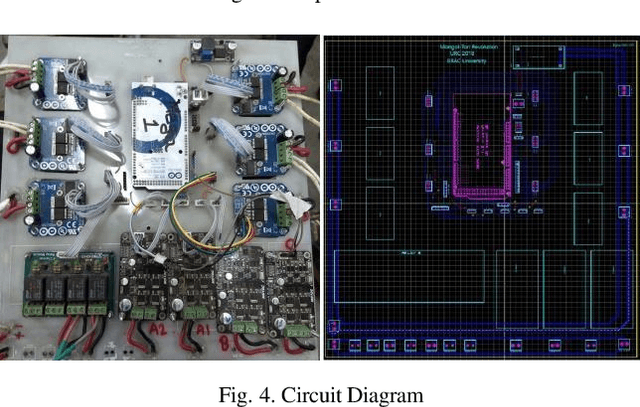

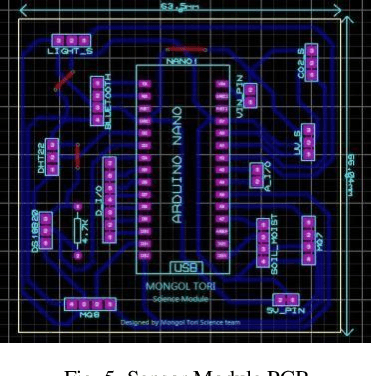

BRACU Mongol Tori: Next Generation Mars Exploration Rover

Nov 03, 2021

Abstract: BRAC University (BRACU) has participated in the University Rover Challenge (URC), a robotics competition for university-level students organized by the Mars Society, in which teams design and build a rover that would be of use to early explorers on Mars. BRACU has designed and developed a fully functional next-generation Mars rover, Mongol Tori, which can be operated in the extreme, hostile conditions expected on Mars. Mongol Tori not only offers both autonomous and manually controlled operation, it is also capable of conducting scientific tasks to identify the characteristics of soils and weathering in the Martian environment.

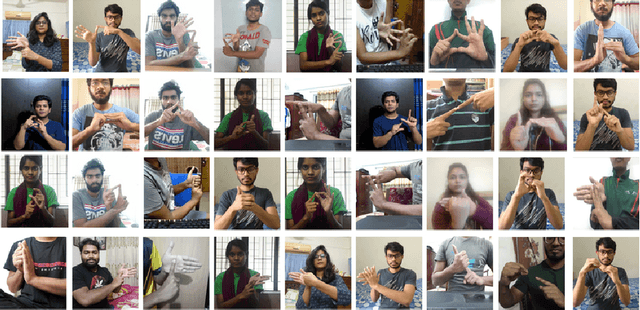

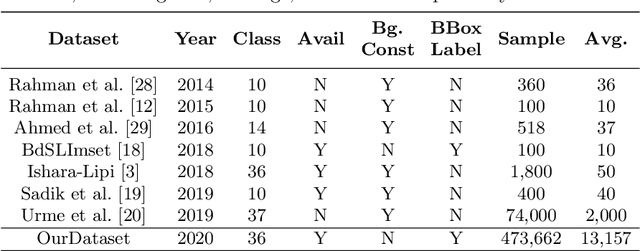

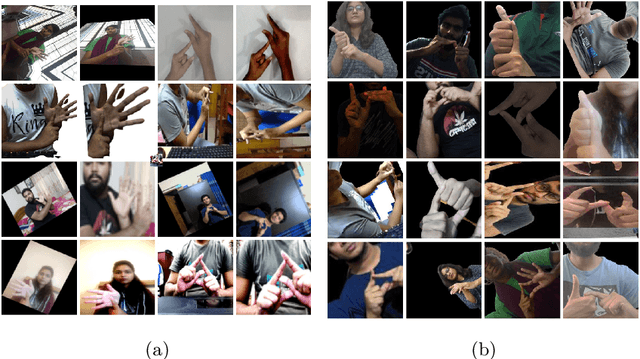

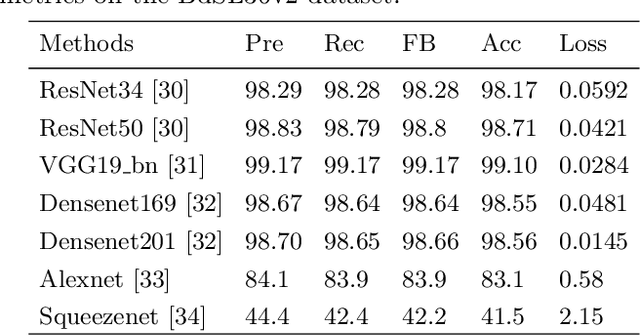

BdSL36: A Dataset for Bangladeshi Sign Letters Recognition

Oct 02, 2021

Abstract: Bangladeshi Sign Language (BdSL) is a commonly used medium of communication for hearing-impaired people in Bangladesh. A real-time BdSL interpreter that works outside a controlled lab environment would have broad social impact and is an interesting avenue of research. It is also a challenging task due to variation across subjects (age, gender, color, etc.), complex features, similarities between signs, and cluttered backgrounds. However, the existing datasets for the BdSL classification task are mainly built in lab-friendly setups, which limits the application of powerful deep learning technology. In this paper, we introduce a dataset named BdSL36, which incorporates background augmentation to make the dataset versatile and contains over four million images belonging to 36 categories. In addition, we annotate about 40,000 images with bounding boxes to exploit the potential of object detection algorithms. Furthermore, several intensive experiments are performed to establish the baseline performance of BdSL36. Moreover, we conduct beta testing of our classifiers at the user level to demonstrate the feasibility of real-world application with this dataset. We believe BdSL36 will expedite future research on practical sign letter classification. We make the datasets and all the pre-trained models available for further research.

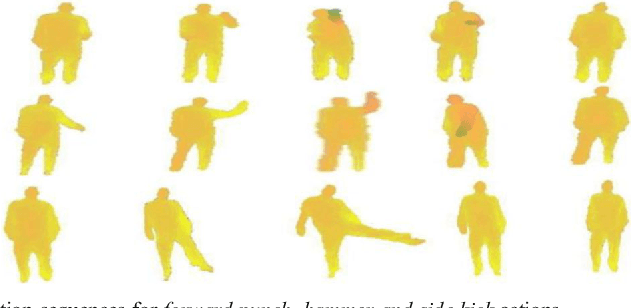

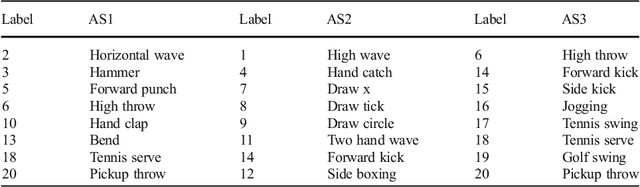

3D human action analysis and recognition through GLAC descriptor on 2D motion and static posture images

Mar 19, 2019

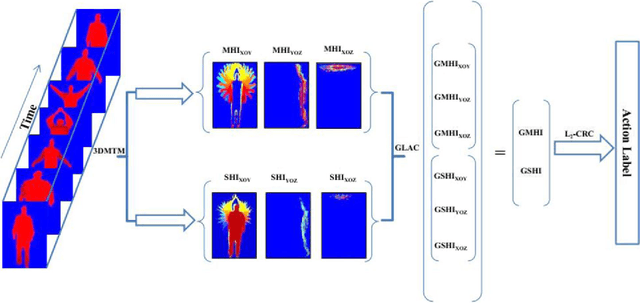

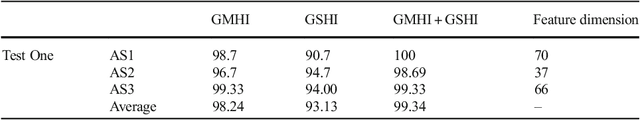

Abstract: In this paper, we present an approach for identifying actions in depth action videos. First, we process each video to obtain motion history images (MHIs) and static history images (SHIs) using the 3D Motion Trail Model (3DMTM). We then characterize the action video by extracting Gradient Local Auto-Correlations (GLAC) features from the SHIs and the MHIs. The two sets of features, i.e., GLAC features from MHIs and GLAC features from SHIs, are concatenated to obtain a representation vector for the action. Finally, we classify all the action samples using the l2-regularized Collaborative Representation Classifier (l2-CRC) to recognize different human actions effectively. We evaluate the proposed method on three action datasets: MSR-Action3D, DHA and UTD-MHAD. The experimental results show that the proposed method outperforms other approaches.
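The last two stages of this pipeline (feature concatenation, then l2-CRC) can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the GLAC extraction itself is omitted and replaced by made-up toy vectors, the regularization weight `lam` is an arbitrary choice, and the class is chosen by the plain class-wise reconstruction residual (some CRC variants additionally normalize by the coefficient norm). The coding step solves the ridge system (X^T X + lam I) alpha = X^T y with a small hand-written solver so the example needs no external libraries.

```python
from typing import Dict, List, Sequence

Vector = List[float]


def solve_linear(a: List[Vector], b: Vector) -> Vector:
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def dot(u: Vector, v: Vector) -> float:
    return sum(p * q for p, q in zip(u, v))


def crc_predict(train: Sequence[Vector], labels: Sequence[str],
                query: Vector, lam: float = 1e-3) -> str:
    """Code the query over all training samples, then pick the class
    whose samples alone reconstruct it with the smallest residual."""
    n = len(train)
    gram = [[dot(train[i], train[j]) + (lam if i == j else 0.0)
             for j in range(n)] for i in range(n)]
    alpha = solve_linear(gram, [dot(x, query) for x in train])
    residuals: Dict[str, float] = {}
    for cls in set(labels):
        recon = [0.0] * len(query)
        for coef, x, label in zip(alpha, train, labels):
            if label == cls:
                recon = [r + coef * v for r, v in zip(recon, x)]
        residuals[cls] = sum((q - r) ** 2 for q, r in zip(query, recon)) ** 0.5
    return min(residuals, key=residuals.get)


def concat(mhi_feat: Vector, shi_feat: Vector) -> Vector:
    """Representation vector: MHI features followed by SHI features."""
    return mhi_feat + shi_feat


# Toy stand-ins for GLAC features; the values and labels are invented.
train = [concat([1.0, 0.0], [0.9, 0.1]), concat([0.9, 0.1], [1.0, 0.0]),
         concat([0.0, 1.0], [0.1, 0.9]), concat([0.2, 0.8], [0.0, 1.0])]
labels = ["wave", "wave", "kick", "kick"]

pred_wave = crc_predict(train, labels, concat([0.95, 0.05], [0.95, 0.05]))
pred_kick = crc_predict(train, labels, concat([0.1, 0.9], [0.05, 0.95]))
print(pred_wave, pred_kick)
```

Because the coding step has a closed-form solution, no iterative training is needed; in practice a linear algebra library would replace the hand-written solver.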
