Sahba Aghajani Pedram

Temporal Segmentation of Surgical Sub-tasks through Deep Learning with Multiple Data Sources

Feb 07, 2020

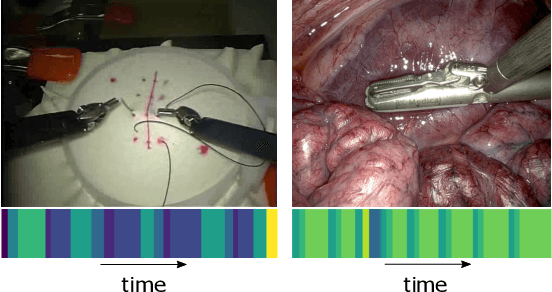

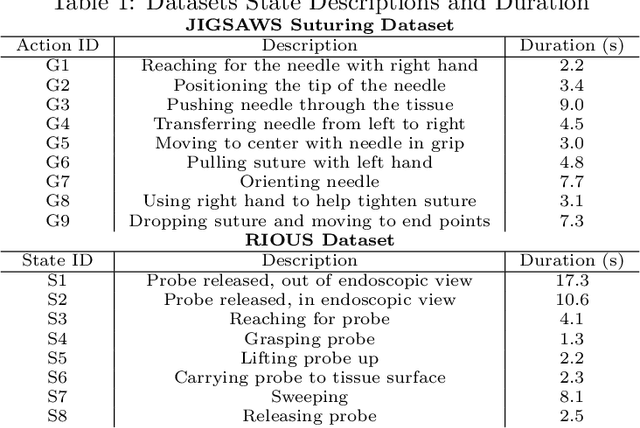

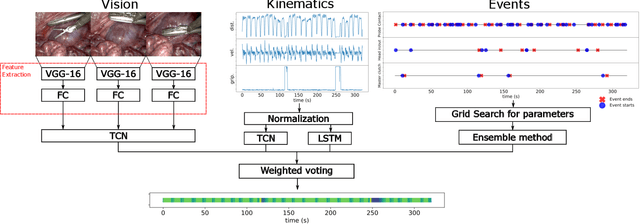

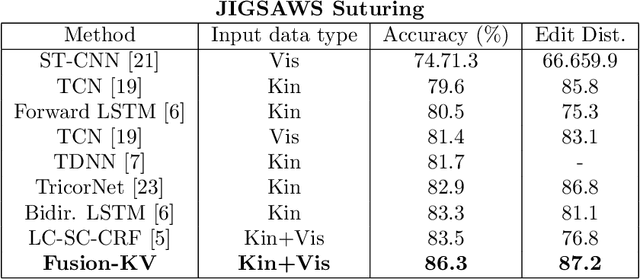

Abstract: Many tasks in robot-assisted surgeries (RAS) can be represented by finite-state machines (FSMs), where each state represents either an action (such as picking up a needle) or an observation (such as bleeding). A crucial step towards the automation of such surgical tasks is the temporal perception of the current surgical scene, which requires real-time estimation of the states in the FSMs. The objective of this work is to estimate the current state of the surgical task based on the actions performed or events that have occurred as the task progresses. We propose Fusion-KVE, a unified surgical state estimation model that incorporates multiple data sources, including kinematics, vision, and system events. Additionally, we examine the strengths and weaknesses of different state estimation models in segmenting states with different representative features or levels of granularity. We evaluate our model on the JHU-ISI Gesture and Skill Assessment Working Set (JIGSAWS), as well as on a more complex dataset involving robotic intra-operative ultrasound (RIOUS) imaging, created using the da Vinci Xi surgical system. Our model achieves a frame-wise state estimation accuracy of up to 89.4%, improving on state-of-the-art surgical state estimation models on both the JIGSAWS suturing dataset and our RIOUS dataset.
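The frame-wise accuracy reported above can be illustrated with a minimal sketch. It assumes the metric is the fraction of video frames whose predicted state label matches the ground-truth label; the abstract does not spell out the exact definition, and the function name and state labels below are hypothetical, not taken from the paper.

```python
from typing import Sequence


def frame_wise_accuracy(predicted: Sequence[str], truth: Sequence[str]) -> float:
    """Fraction of frames whose predicted state equals the ground-truth state.

    Assumption: one state label per frame, with both sequences aligned in time.
    """
    if len(predicted) != len(truth):
        raise ValueError("predicted and truth must have the same number of frames")
    if len(truth) == 0:
        raise ValueError("at least one frame is required")
    # Count the frames where the two label sequences agree.
    correct = sum(p == t for p, t in zip(predicted, truth))
    return correct / len(truth)


# Hypothetical state labels, for illustration only.
truth = ["reach", "grasp", "grasp", "pull"]
predicted = ["reach", "grasp", "pull", "pull"]
accuracy = frame_wise_accuracy(predicted, truth)
```

Here three of the four frames agree, so `accuracy` is 0.75.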

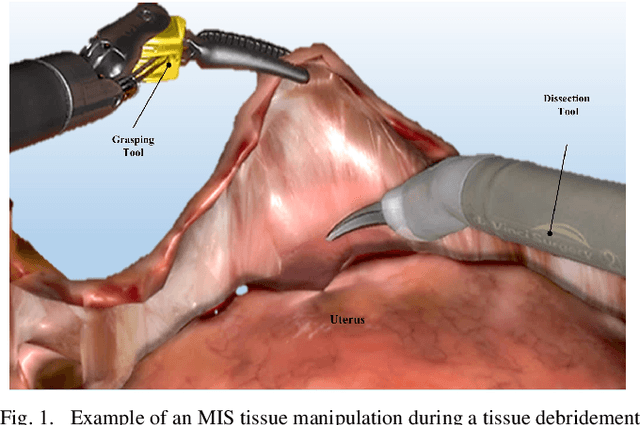

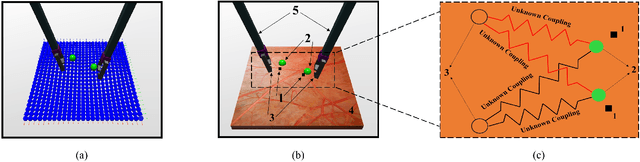

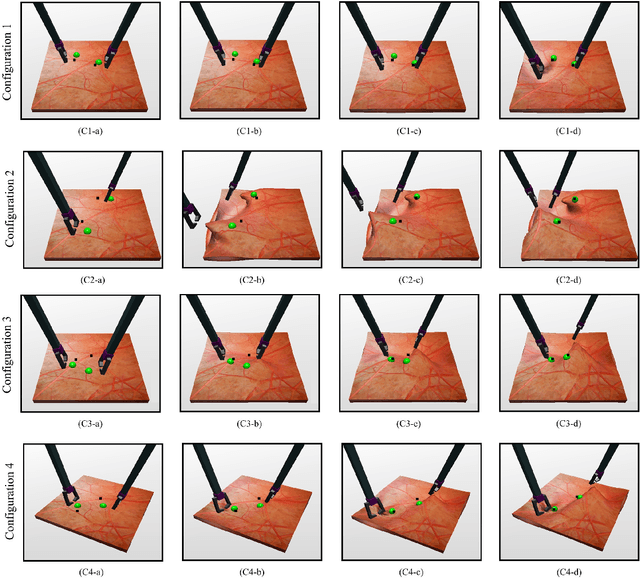

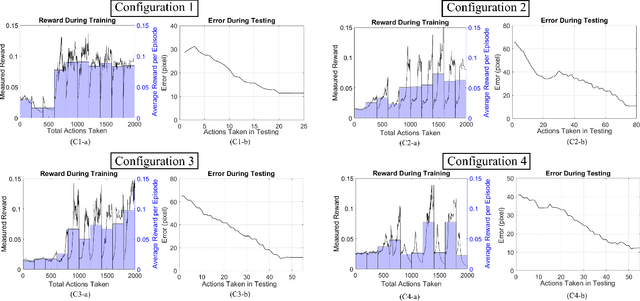

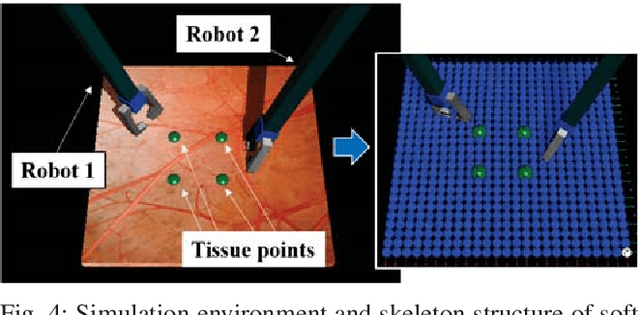

Toward Synergic Learning for Autonomous Manipulation of Deformable Tissues via Surgical Robots: An Approximate Q-Learning Approach

Oct 11, 2019

Abstract: In this paper, we present a synergic learning algorithm to address the task of indirect manipulation of an unknown deformable tissue. Tissue manipulation is a common yet challenging task in various surgical interventions, which makes it a good candidate for robotic automation. We propose using a linear approximate Q-learning method in which human knowledge contributes to selecting useful yet simple features of tissue manipulation, while the algorithm learns to take optimal actions and accomplish the task. The algorithm is implemented and evaluated in a simulation built with the OpenCV and CHAI3D libraries. Successful simulation results are presented for four different configurations based on realistic tissue manipulation scenarios. Results indicate that, with a careful selection of relatively simple and intuitive features, the developed Q-learning algorithm can successfully learn an optimal policy without any prior knowledge of tissue dynamics or camera intrinsic/extrinsic calibration parameters.
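For readers unfamiliar with linear approximate Q-learning, the following is a minimal sketch of the standard textbook update, in which Q(s, a) is a weighted sum of hand-picked features. It is not the authors' implementation: the feature values, learning rate `alpha`, discount `gamma`, and function names are all assumptions made for illustration.

```python
from typing import List, Sequence


def q_value(weights: Sequence[float], features: Sequence[float]) -> float:
    """Linear approximation: Q(s, a) = sum_i w_i * f_i(s, a)."""
    return sum(w * f for w, f in zip(weights, features))


def q_update(
    weights: Sequence[float],
    features_sa: Sequence[float],
    reward: float,
    next_features_per_action: Sequence[Sequence[float]],
    alpha: float = 0.1,  # learning rate (assumed value)
    gamma: float = 0.9,  # discount factor (assumed value)
) -> List[float]:
    """One temporal-difference update of the weight vector.

    next_features_per_action holds f(s', a') for every action a' available
    in the next state; an empty sequence marks a terminal state.
    """
    q_sa = q_value(weights, features_sa)
    # Greedy estimate of the next state's value; zero if the state is terminal.
    best_next = max(
        (q_value(weights, f) for f in next_features_per_action), default=0.0
    )
    td_error = reward + gamma * best_next - q_sa
    # Each weight moves in proportion to its feature's activation.
    return [w + alpha * td_error * f for w, f in zip(weights, features_sa)]


# Hypothetical two-feature example with a terminal next state.
new_weights = q_update([0.0, 0.0], [1.0, 2.0], reward=1.0, next_features_per_action=[])
```

Because only the weights are learned, the quality of the policy depends heavily on the chosen features, which is where the abstract says human knowledge enters.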

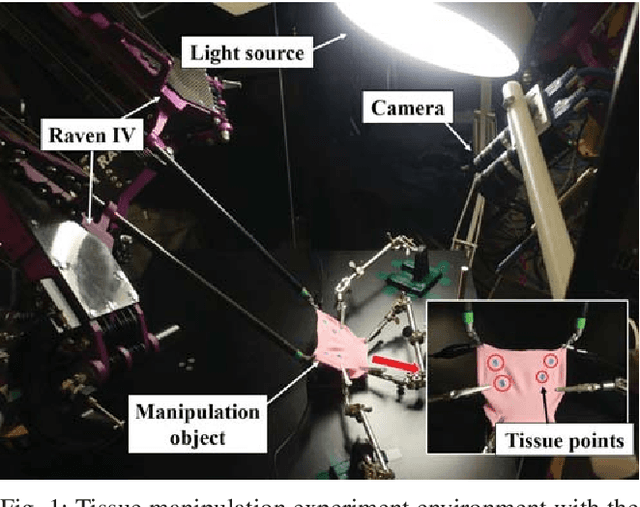

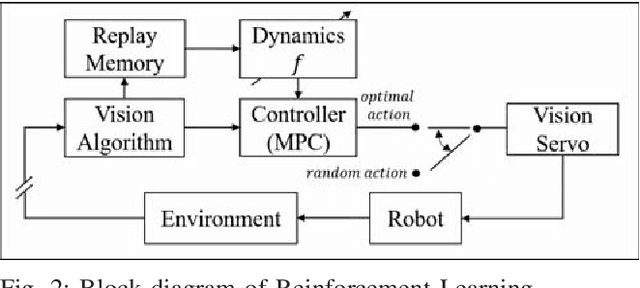

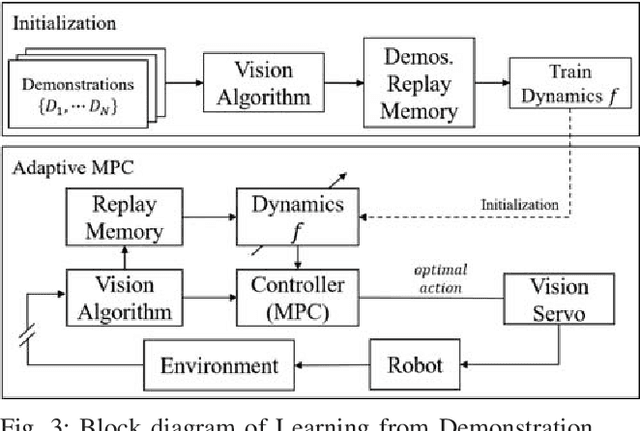

Autonomous Tissue Manipulation via Surgical Robot Using Learning Based Model Predictive Control

Mar 03, 2019

Abstract: Tissue manipulation is a frequently used fundamental subtask of any surgical procedure, and in some cases it may require the involvement of a surgeon's assistant. The complex dynamics of soft tissue as an unstructured environment is one of the main challenges in any attempt to automate its manipulation via a surgical robotic system. Two AI learning-based model predictive control algorithms using vision strategies are proposed and studied: (1) reinforcement learning and (2) learning from demonstration. A comparison of these algorithms in a simulation setting indicated that the learning from demonstration algorithm can boost the learning policy by initializing the predicted dynamics with given demonstrations. Furthermore, the learning from demonstration algorithm was implemented on a Raven IV surgical robotic system, and experiments demonstrated the feasibility of the proposed algorithm. This study is part of a broader vision in which the role of a surgeon will be redefined as a pure decision maker, whereas the vast majority of the manipulation will be conducted autonomously by a surgical robotic system. A supplementary video can be found at: http://bionics.seas.ucla.edu/research/surgeryproject17.html
