Sagar Gubbi

GeniL: A Multilingual Dataset on Generalizing Language

Apr 08, 2024

Abstract: LLMs are increasingly transforming our digital ecosystem, but they often inherit societal biases learned from their training data, for instance stereotypes associating certain attributes with specific identity groups. While whether and how these biases are mitigated may depend on the specific use case, being able to effectively detect instances of stereotype perpetuation is a crucial first step. Current methods to assess the presence of stereotypes in generated language rely on simple template- or co-occurrence-based measures, without accounting for the variety of sentential contexts they manifest in. We argue that understanding the sentential context is crucial for detecting instances of generalization. We distinguish two types of generalizations, (1) language that merely mentions the presence of a generalization ("people think the French are very rude") and (2) language that reinforces such a generalization ("as French they must be rude"), from non-generalizing context ("My French friends think I am rude"). For meaningful stereotype evaluations, we need to reliably distinguish such instances of generalizations. We introduce the new task of detecting generalization in language, and build GeniL, a multilingual dataset of over 50K sentences from 9 languages (English, Arabic, Bengali, Spanish, French, Hindi, Indonesian, Malay, and Portuguese) annotated for instances of generalizations. We demonstrate that the likelihood of a co-occurrence being an instance of generalization is usually low, and varies across different languages, identity groups, and attributes. We build classifiers to detect generalization in language with an overall PR-AUC of 58.7, with varying degrees of performance across languages. Our research provides data and tools to enable a nuanced understanding of stereotype perpetuation, a crucial step towards more inclusive and responsible language technologies.

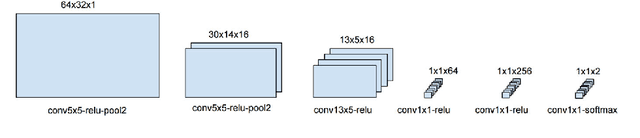

Scene Text Detection for Augmented Reality -- Character Bigram Approach to reduce False Positive Rate

Dec 26, 2020

Abstract: Natural scene text detection is an important aspect of scene understanding and could be a useful tool in building engaging augmented reality applications. In this work, we address the problem of false positives in text spotting. We propose improving the performance of sliding window text spotters by looking for character pairs (bigrams) rather than single characters. An efficient convolutional neural network is designed and trained to detect bigrams. The proposed detector reduces the false positive rate by 28.16% on the ICDAR 2015 dataset. We demonstrate that detecting bigrams is a computationally inexpensive way to improve sliding window text spotters.

Imitation Learning for High Precision Peg-in-Hole Tasks

Dec 26, 2020

Abstract: Even today, industrial robot manipulators cannot match the precision and speed with which humans execute contact-rich tasks. As a means to overcome this gap, we demonstrate generative methods for imitating a peg-in-hole insertion task on a 6-DOF robot manipulator. In particular, generative adversarial imitation learning (GAIL) is used to successfully achieve this task with a 10 μm and a 6 μm peg-hole clearance on the Yaskawa GP8 industrial robot. Experimental results show that the policy successfully learns within 20 episodes from a handful of human expert demonstrations on the robot (i.e., < 10 tele-operated robot demonstrations). The insertion time improves from > 20 seconds (which also includes failed insertions) to < 15 seconds, thereby validating the effectiveness of this approach.

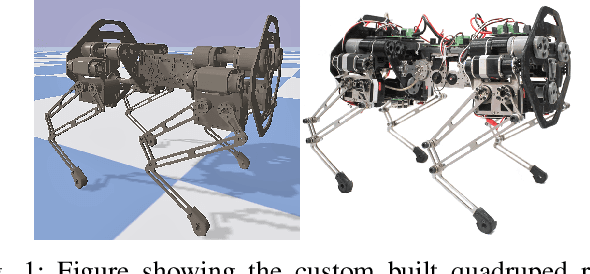

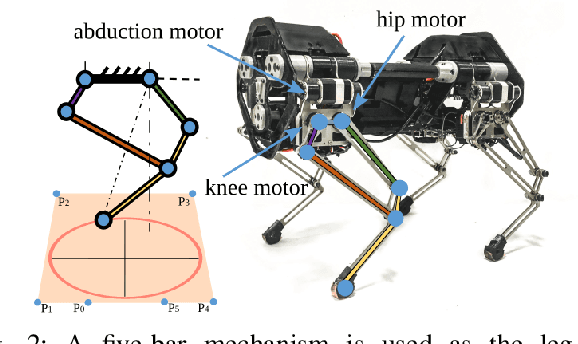

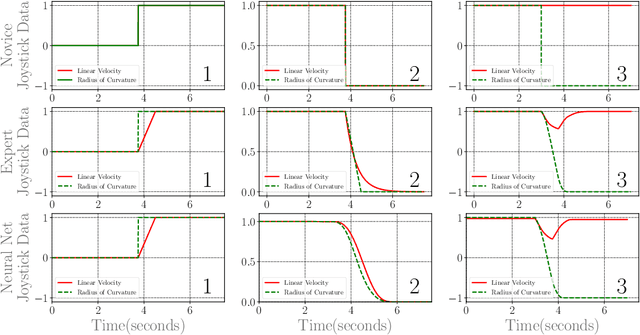

Learning Stable Manoeuvres in Quadruped Robots from Expert Demonstrations

Jul 28, 2020

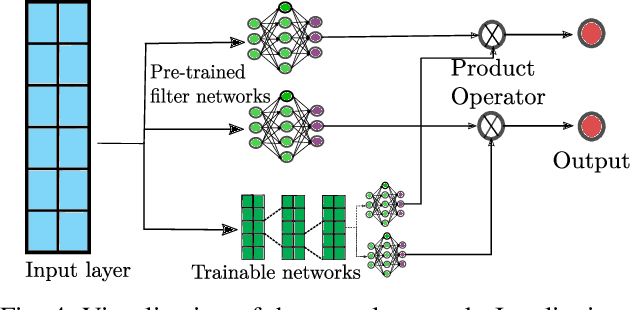

Abstract: With research into the development of quadruped robots picking up pace, learning-based techniques are being explored for developing locomotion controllers for such robots. A key problem is to generate leg trajectories for continuously varying target linear and angular velocities in a stable manner. In this paper, we propose a two-pronged approach to address this problem. First, multiple simpler policies are trained to generate trajectories for a discrete set of target velocities and turning radii. These policies are then augmented using a higher-level neural network for handling the transition between the learned trajectories. Specifically, we develop a neural network-based filter that takes in the target velocity and radius and transforms them into new commands that enable smooth transitions to the new trajectory. This transformation is achieved by learning from expert demonstrations. An application of this is the transformation of a novice user's input into an expert user's input, thereby ensuring stable manoeuvres regardless of the user's experience. Training our proposed architecture requires far fewer expert demonstrations than standard neural network architectures. Finally, we demonstrate these results experimentally on the in-house quadruped Stoch 2.
