S. Ali A. Moosavian

Learning-based Control for Tendon-Driven Continuum Robotic Arms

Dec 06, 2024

Abstract: This paper presents a learning-based approach for centralized position control of Tendon-Driven Continuum Robots (TDCRs) using Deep Reinforcement Learning (DRL), with a particular focus on the Sim-to-Real transfer of control policies. The proposed control method employs the Modified Transpose Jacobian (MTJ) control strategy, with its parameters optimally tuned using the Deep Deterministic Policy Gradient (DDPG) algorithm. Classical model-based controllers encounter significant challenges due to the inherent uncertainties and nonlinear dynamics of continuum robots. In contrast, model-free control strategies require efficient gain-tuning to handle diverse operational scenarios. This research aims to develop a model-free controller with performance comparable to model-based strategies by integrating an optimal adaptive gain-tuning system. Both simulations and real-world implementations demonstrate that the proposed method significantly enhances the trajectory-tracking performance of continuum robots independent of initial conditions and paths within the operational task-space, effectively establishing a task-free controller.

Robust walking based on MPC with viability-based feasibility guarantees

Oct 09, 2020

Abstract: Model predictive control (MPC) has shown great success for controlling complex systems such as legged robots. However, when closing the loop, the performance and feasibility of the finite-horizon optimal control problem solved at each control cycle are no longer guaranteed. This is due to model discrepancies, the effect of low-level controllers, uncertainties and sensor noise. To address these issues, we propose a modified version of a standard MPC approach used in legged locomotion with viability (weak forward invariance) guarantees that ensure the feasibility of the optimal control problem. Moreover, we use past experimental data to find the best cost weights, which measure a combination of performance, constraint satisfaction robustness, or stability (invariance). These interpretable costs measure the trade-off between robustness and performance. For this purpose, we use Bayesian optimization (BO) to systematically design experiments that help efficiently collect data to learn a cost function leading to robust performance. Our simulation results with different realistic disturbances (i.e., external pushes, unmodeled actuator dynamics and computational delay) show the effectiveness of our approach to create robust controllers for humanoid robots.

Robust Humanoid Locomotion Using Trajectory Optimization and Sample-Efficient Learning

Jul 10, 2019

Abstract: Trajectory optimization (TO) is one of the most powerful tools for generating feasible motions for humanoid robots. However, including uncertainties and stochasticity in the TO problem to generate robust motions can easily lead to intractable problems. Furthermore, since the models used in TO always have some level of abstraction, it can be hard to find a realistic set of uncertainties in the model space. In this paper we leverage a sample-efficient learning technique (Bayesian optimization) to robustify TO for humanoid locomotion. The main idea is to use data from full-body simulations to make the TO stage robust by tuning the cost weights. To this end, we split the TO problem into two stages. The first stage solves a convex optimization problem for generating center of mass (CoM) trajectories based on simplified linear dynamics. The second stage employs iterative Linear-Quadratic Gaussian (iLQG) as a whole-body controller to generate full-body control inputs. Then we use Bayesian optimization to find the cost weights to use in the first stage that yield robust performance in the simulation/experiment, in the presence of different disturbances/uncertainties. The results show that the proposed approach is able to generate robust motions for different sets of disturbances and uncertainties.

RoboWalk: Explicit Augmented Human-Robot Dynamics Modeling for Design Optimization

Jul 09, 2019

Abstract: Utilizing orthoses and exoskeleton technology in various applications and medical industries, particularly to help elderly and ordinary people in their daily activities, is a new and growing field for research institutes. In this paper, after introducing an assistive lower limb exoskeleton (RoboWalk), the dynamics models of both the human and the robot, each a multi-body kinematic tree structure, are derived separately using Newton's method. The obtained models are then verified by comparing the results with those of the Recursive Newton-Euler Algorithm (RNEA). These models are then augmented to investigate the RoboWalk joint torques, those of the human body, and the floor reaction force of the complete system. Since RoboWalk is an under-actuated robot, an undesirable disturbing force is exerted on the human in addition to the assistive force. Optimization strategies are therefore proposed to find an optimal design that maximizes the assistive behavior of RoboWalk and, as a result, reduces the joint torques of the human body. To this end, a human-in-the-loop optimization algorithm is used, and the optimization problem is solved by the Particle Swarm Optimization (PSO) method. The design analysis and the optimization results demonstrate the effectiveness of the proposed approaches, leading to the elimination of disturbing forces, lower torque demand for RoboWalk motors and lower weight.

Trajectory Optimization for Robust Humanoid Locomotion with Sample-Efficient Learning

Jun 09, 2019

Abstract: Trajectory optimization (TO) is one of the most powerful tools for generating feasible motions for humanoid robots. However, including uncertainties and stochasticity in the TO problem to generate robust motions can easily lead to an intractable problem. Furthermore, since the models used in TO always have some level of abstraction, it is hard to find a realistic set of uncertainties in the space of the abstract model. In this paper we aim at leveraging a sample-efficient learning technique (Bayesian optimization) to robustify trajectory optimization for humanoid locomotion. The main idea is to use Bayesian optimization to find the optimal set of cost weights, which trades off performance against robustness, using only a few realistic simulations/experiments. The results show that the proposed approach is able to generate robust motions for different sets of disturbances and uncertainties.

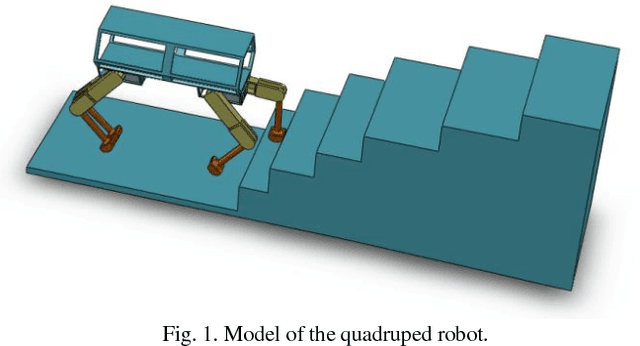

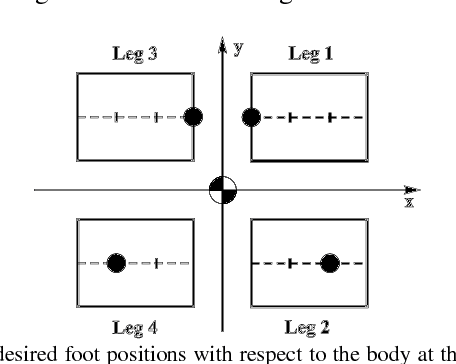

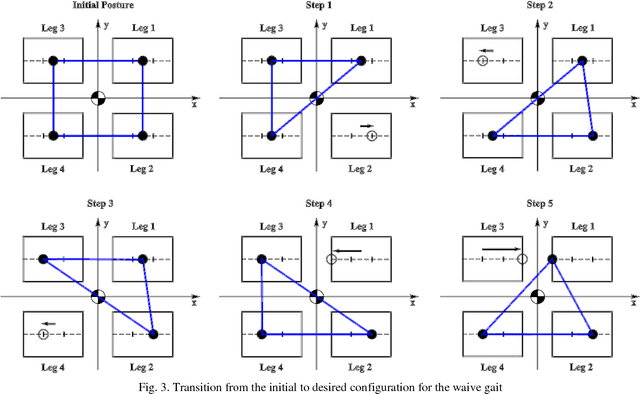

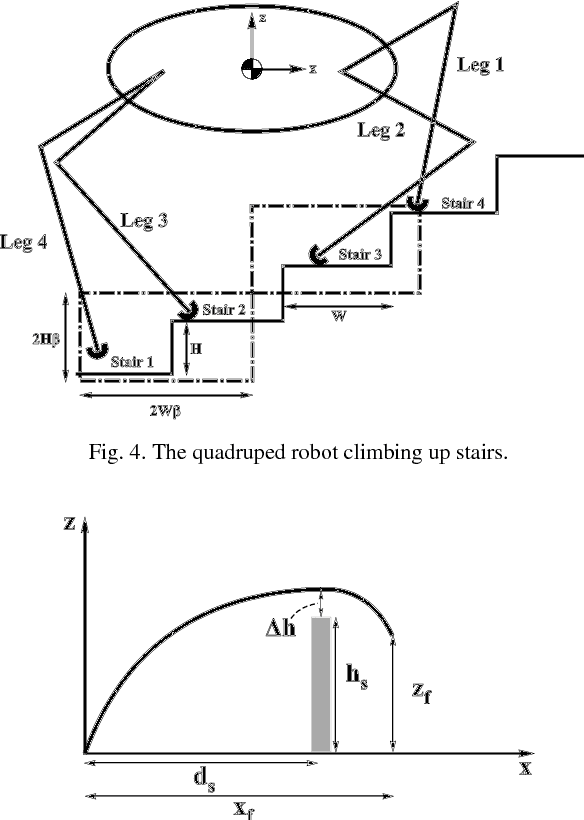

Stable Stair-Climbing of a Quadruped Robot

Sep 08, 2018

Abstract: Synthesizing a stable gait that enables a quadruped robot to climb stairs is the focus of this paper. To this end, first a stable transition from the initial to the desired configuration is made based on the minimum number of steps and maximum use of the leg workspace to prepare the robot for the movement. Next, swing leg and body trajectories are planned for a successful stair-climbing gait. Afterwards, a stable spinning gait is proposed to change the orientation of the body. We simulate our gait planning algorithms on a model of a quadruped robot. The results show that the robot is able to climb up stairs, rotate about its yaw axis, and climb down stairs while its stability is guaranteed.

Walking Control Based on Step Timing Adaptation

Jul 23, 2018

Abstract: Step adjustment for biped robots has been shown to improve gait robustness; however, the adaptation of step timing is often neglected in control strategies because it gives rise to non-convex problems when optimized over several steps. In this paper, we argue that it is not necessary to optimize walking over several steps to guarantee stability and that it is sufficient to merely select the next step timing and location. From this insight, we propose a novel walking pattern generator with linear constraints that optimally selects step location and timing at every control cycle. The resulting controller is computationally simple, yet guarantees that any viable state will remain viable in the future. We propose a swing foot adaptation strategy and show how the approach can be used with an inverse dynamics controller without any explicit control of the center of mass or the foot center of pressure. This is particularly useful for biped robots with limited control authority on their foot center of pressure, such as robots with point feet and robots with passive ankles. Extensive simulations on a humanoid robot with passive ankles subject to external pushes and foot slippage demonstrate the capabilities of the approach in cases where the foot center of pressure cannot be controlled and emphasize the importance of step timing adaptation to stabilize walking.

Pattern Generation for Walking on Slippery Terrains

Oct 07, 2017

Abstract: In this paper, we extend state-of-the-art Model Predictive Control (MPC) approaches to generate safe bipedal walking on slippery surfaces. In this setting, we formulate walking as a trade-off between realizing a desired walking velocity and preserving robust foot-ground contact. Exploiting this formulation inside MPC, we show that safe walking on various flat terrains can be achieved by compromising three main attributes, i.e., walking velocity tracking, the Zero Moment Point (ZMP) modulation, and the Required Coefficient of Friction (RCoF) regulation. Simulation results show that increasing the walking velocity increases the possibility of slippage, while reducing the slippage possibility conflicts with reducing the tip-over possibility of the contact and vice versa.
