Ryma Boumazouza

CRIL

Robust Vision-Based Runway Detection through Conformal Prediction and Conformal mAP

May 22, 2025

Abstract: We explore the use of conformal prediction to provide statistical uncertainty guarantees for runway detection in vision-based landing systems (VLS). Using fine-tuned YOLOv5 and YOLOv6 models on aerial imagery, we apply conformal prediction to quantify localization reliability under user-defined risk levels. We also introduce Conformal mean Average Precision (C-mAP), a novel metric aligning object detection performance with conformal guarantees. Our results show that conformal prediction can improve the reliability of runway detection by quantifying uncertainty in a statistically sound way, increasing on-board safety and paving the way for certification of ML systems in the aerospace domain.
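As a rough illustration of how conformal prediction can attach a risk level to box localization, the sketch below uses generic split conformal prediction with an additive margin. It is not taken from the paper: the score definition, the function names `conformal_margin` and `inflate`, and the `(x_min, y_min, x_max, y_max)` box layout are all assumptions made for this example.

```python
import math

def conformal_margin(pred_boxes, true_boxes, alpha):
    """Calibrate an additive margin on held-out (prediction, ground truth) pairs.

    Boxes are (x_min, y_min, x_max, y_max). The nonconformity score (an
    assumption of this sketch) is the largest amount by which the ground-truth
    box sticks out of the predicted box on any side.
    """
    scores = []
    for (px0, py0, px1, py1), (tx0, ty0, tx1, ty1) in zip(pred_boxes, true_boxes):
        scores.append(max(px0 - tx0, py0 - ty0, tx1 - px1, ty1 - py1))
    scores.sort()
    n = len(scores)
    # Finite-sample corrected rank used by split conformal prediction.
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        raise ValueError("calibration set too small for this alpha")
    return scores[k - 1]

def inflate(box, margin):
    """Grow a predicted box by the calibrated margin on every side."""
    x0, y0, x1, y1 = box
    return (x0 - margin, y0 - margin, x1 + margin, y1 + margin)
```

Under the usual exchangeability assumption between calibration and test images, a box inflated this way covers the true box with probability at least `1 - alpha`; a smaller `alpha` yields a larger margin.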

Trustworthy and Explainable Decision-Making for Workforce allocation

Dec 13, 2024

Abstract: In industrial contexts, effective workforce allocation is crucial for operational efficiency. This paper presents an ongoing project focused on developing a decision-making tool for workforce allocation, with an emphasis on explainability to enhance its trustworthiness. Our objective is to create a system that not only optimises the allocation of teams to scheduled tasks but also provides clear, understandable explanations for its decisions, particularly in cases where the problem is infeasible. By incorporating human-in-the-loop mechanisms, the tool aims to enhance user trust and facilitate interactive conflict resolution. We implemented our approach in a prototype tool (a digital demonstrator) intended to be evaluated on a real industrial scenario in terms of both performance and user acceptability.

Surrogate Neural Networks Local Stability for Aircraft Predictive Maintenance

Jan 11, 2024

Abstract: Surrogate Neural Networks (NN) now routinely serve as substitutes for computationally demanding simulations (e.g., finite element). They enable faster analyses in industrial applications, e.g., manufacturing processes and performance assessment. The verification of surrogate models is a critical step to assess their robustness under different scenarios. We explore the combination of empirical and formal methods in one NN verification pipeline. We showcase its efficiency on an industrial use case of aircraft predictive maintenance. We assess the local stability of surrogate NNs designed to predict the stress sustained by an aircraft part from external loads. Our contribution lies in the complete verification of surrogate models that possess high-dimensional input and output spaces, thus accommodating multi-objective constraints. We also demonstrate the pipeline's effectiveness in substantially decreasing the runtime needed to assess the targeted property.

Robustness Assessment of a Runway Object Classifier for Safe Aircraft Taxiing

Jan 08, 2024

Abstract: As deep neural networks (DNNs) are becoming the prominent solution for many computational problems, the aviation industry seeks to explore their potential in alleviating pilot workload and in improving operational safety. However, the use of DNNs in this type of safety-critical application requires a thorough certification process. This need can be addressed through formal verification, which provides rigorous assurances, e.g., by proving the absence of certain mispredictions. In this case-study paper, we demonstrate this process using an image-classifier DNN currently under development at Airbus and intended for use during the aircraft taxiing phase. We use formal methods to assess this DNN's robustness to three common image perturbation types: noise, brightness and contrast, and some of their combinations. This process entails multiple invocations of the underlying verifier, which might be computationally expensive; we therefore propose a method that leverages the monotonicity of these robustness properties, as well as the results of past verification queries, in order to reduce the overall number of verification queries required by nearly 60%. Our results provide an indication of the level of robustness achieved by the DNN classifier under study, and indicate that it is considerably more vulnerable to noise than to brightness or contrast perturbations.
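The abstract does not spell out how monotonicity is exploited, so the following is only a minimal sketch of the general idea, not the authors' method. It assumes that robustness at a perturbation level implies robustness at every smaller level, which lets a binary search skip most verifier calls. The name `largest_robust_level` and the `verify` callback are hypothetical stand-ins for an expensive verification query.

```python
def largest_robust_level(levels, verify):
    """Find the largest perturbation level that the verifier certifies.

    levels: perturbation magnitudes sorted in ascending order.
    verify: callable returning True if the network is proven robust at that
            level (hypothetical wrapper around a real verifier).
    Assumes monotonicity: robust at eps implies robust at every smaller eps.
    Returns (largest certified level or None, number of verifier calls).
    """
    lo, hi = 0, len(levels) - 1
    best = None
    queries = 0
    while lo <= hi:
        mid = (lo + hi) // 2
        queries += 1
        if verify(levels[mid]):
            # Everything below mid is robust too; search only above it.
            best = levels[mid]
            lo = mid + 1
        else:
            # Everything above mid fails too; search only below it.
            hi = mid - 1
    return best, queries
```

With `n` candidate levels this needs on the order of `log2(n)` verifier calls instead of `n`. The saving reported in the abstract comes from the authors' own scheme, which also reuses past query results across perturbation types.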

ASTERYX : A model-Agnostic SaT-basEd appRoach for sYmbolic and score-based eXplanations

Jun 23, 2022

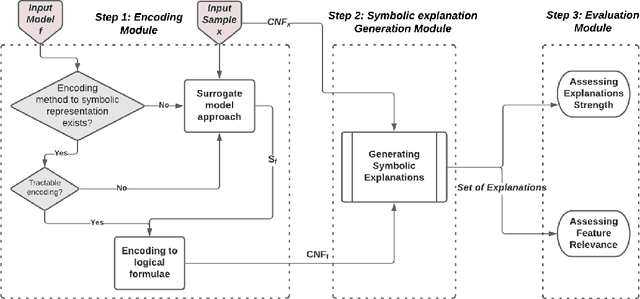

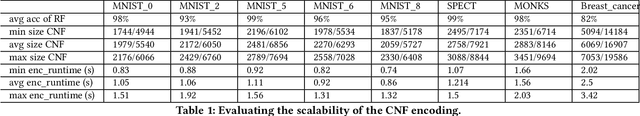

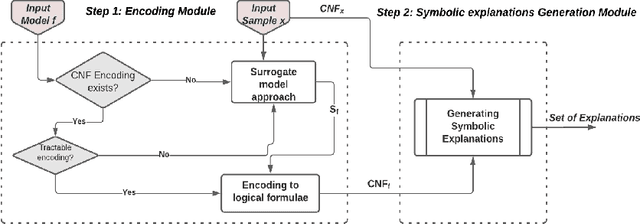

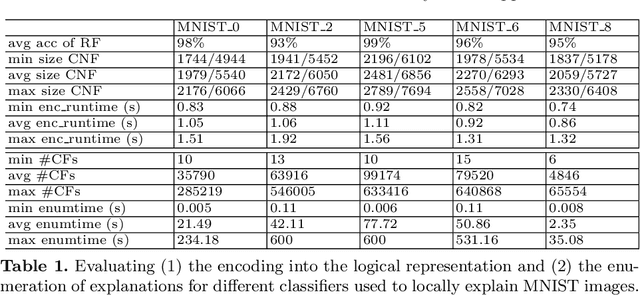

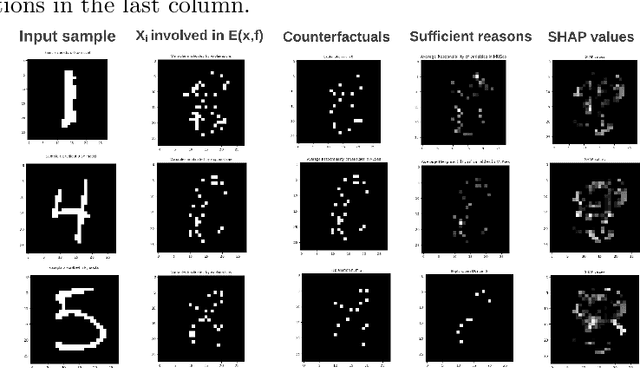

Abstract: The ever-increasing complexity of machine learning techniques, which are used more and more in practice, gives rise to the need to explain the predictions and decisions of these models, often used as black boxes. Explainable AI approaches are either numerical and feature-based, aiming to quantify the contribution of each feature to a prediction, or symbolic, providing certain forms of symbolic explanations such as counterfactuals. This paper proposes a generic, model-agnostic approach named ASTERYX that generates both symbolic and score-based explanations. Our approach is declarative and is based on encoding the model to be explained in an equivalent symbolic representation. This representation serves to generate, in particular, two types of symbolic explanations: sufficient reasons and counterfactuals. We then associate scores reflecting the relevance of the explanations and the features w.r.t. some properties. Our experimental results show the feasibility of the proposed approach and its effectiveness in providing symbolic and score-based explanations.

* arXiv admin note: text overlap with arXiv:2206.11539

A Model-Agnostic SAT-based Approach for Symbolic Explanation Enumeration

Jun 23, 2022

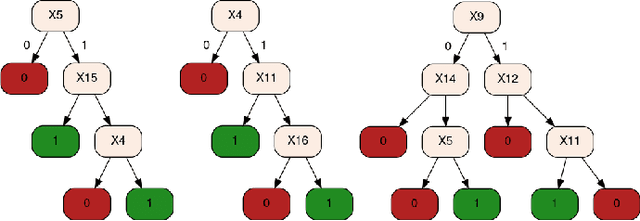

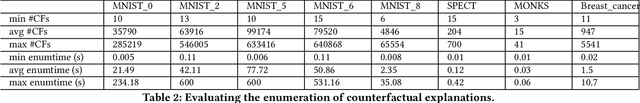

Abstract: In this paper, titled "A Model-Agnostic SAT-based Approach for Symbolic Explanation Enumeration", we propose a generic, model-agnostic approach that generates different and complementary types of symbolic explanations. More precisely, we generate explanations that locally explain a single prediction by analyzing the relationship between the features and the output. Our approach uses a propositional encoding of the predictive model and a SAT-based setting to generate two types of symbolic explanations: Sufficient Reasons and Counterfactuals. The experimental results on an image classification task show the feasibility of the proposed approach and its effectiveness in providing Sufficient Reason and Counterfactual explanations.

A Symbolic Approach for Counterfactual Explanations

Jun 20, 2022

Abstract: In this paper, titled "A Symbolic Approach for Counterfactual Explanations", we propose a novel symbolic approach to provide counterfactual explanations for a classifier's predictions. Contrary to most explanation approaches, where the goal is to understand which parts of the data helped to give a prediction and to what extent, counterfactual explanations indicate which features must be changed in the data in order to change the classifier's prediction. Our approach is symbolic in the sense that it is based on encoding the decision function of a classifier in an equivalent CNF formula. In this approach, counterfactual explanations are seen as Minimal Correction Subsets (MCS), a well-known concept in knowledge base reparation. Hence, this approach takes advantage of the strengths of existing and proven solutions for the generation of MCS. Our preliminary experimental studies on Bayesian classifiers show the potential of this approach on several datasets.
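To make the link between counterfactuals and Minimal Correction Subsets concrete, here is a small brute-force sketch. It is an illustration under stated assumptions, not the paper's pipeline: the toy classifier "positive iff x1 AND (x2 OR x3)", the helper names, and the exhaustive satisfiability check are all invented for this example, whereas a real system would rely on dedicated SAT/MCS tools. Clauses are lists of signed integers, DIMACS-style.

```python
from itertools import combinations, product

def satisfiable(clauses, n_vars):
    """Exhaustive satisfiability check; only viable for a handful of variables."""
    for bits in product([False, True], repeat=n_vars):
        if all(any(bits[abs(lit) - 1] == (lit > 0) for lit in clause)
               for clause in clauses):
            return True
    return False

def minimal_correction_subsets(hard, soft, n_vars):
    """Enumerate the subset-minimal sets of soft-clause indices whose removal
    makes hard + remaining soft clauses satisfiable."""
    found = []
    for size in range(len(soft) + 1):
        for subset in combinations(range(len(soft)), size):
            # Supersets of an already found MCS cannot be minimal.
            if any(set(m) <= set(subset) for m in found):
                continue
            kept = [c for i, c in enumerate(soft) if i not in subset]
            if satisfiable(hard + kept, n_vars):
                found.append(subset)
    return found

# Hard clauses: CNF of "the prediction is negative", i.e. NOT(x1 AND (x2 OR x3)).
hard = [[-1, -2], [-1, -3]]
# Soft clauses: the instance x1=1, x2=1, x3=0, currently classified positive.
soft = [[1], [2], [-3]]
counterfactuals = minimal_correction_subsets(hard, soft, 3)  # [(0,), (1,)]
```

Each returned tuple lists the features to flip: here, changing `x1` alone or `x2` alone is enough to turn the prediction negative, while changing `x3` alone is not.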
