Yizhak Elboher

Robustness Assessment of a Runway Object Classifier for Safe Aircraft Taxiing

Jan 08, 2024

Abstract:As deep neural networks (DNNs) are becoming the prominent solution for many computational problems, the aviation industry seeks to explore their potential in alleviating pilot workload and in improving operational safety. However, the use of DNNs in this type of safety-critical applications requires a thorough certification process. This need can be addressed through formal verification, which provides rigorous assurances -- e.g.,~by proving the absence of certain mispredictions. In this case-study paper, we demonstrate this process using an image-classifier DNN currently under development at Airbus and intended for use during the aircraft taxiing phase. We use formal methods to assess this DNN's robustness to three common image perturbation types: noise, brightness and contrast, and some of their combinations. This process entails multiple invocations of the underlying verifier, which might be computationally expensive; and we therefore propose a method that leverages the monotonicity of these robustness properties, as well as the results of past verification queries, in order to reduce the overall number of verification queries required by nearly 60%. Our results provide an indication of the level of robustness achieved by the DNN classifier under study, and indicate that it is considerably more vulnerable to noise than to brightness or contrast perturbations.

Efficiently Finding Adversarial Examples with DNN Preprocessing

Nov 16, 2022

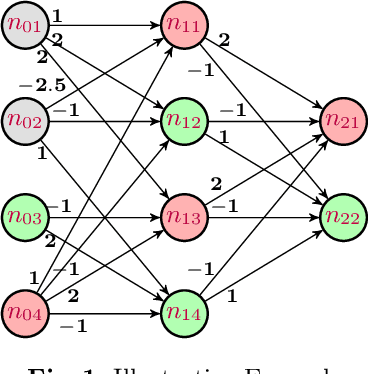

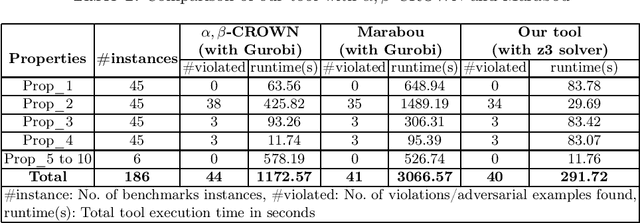

Abstract:Deep Neural Networks (DNNs) are everywhere, frequently performing a fairly complex task that used to be unimaginable for machines to carry out. In doing so, they do a lot of decision making which, depending on the application, may be disastrous if gone wrong. This necessitates a formal argument that the underlying neural networks satisfy certain desirable properties. Robustness is one such key property for DNNs, particularly if they are being deployed in safety or business critical applications. Informally speaking, a DNN is not robust if very small changes to its input may affect the output in a considerable way (e.g. changes the classification for that input). The task of finding an adversarial example is to demonstrate this lack of robustness, whenever applicable. While this is doable with the help of constrained optimization techniques, scalability becomes a challenge due to large-sized networks. This paper proposes the use of information gathered by preprocessing the DNN to heavily simplify the optimization problem. Our experiments substantiate that this is effective, and does significantly better than the state-of-the-art.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge