Ruth Cobos

A Multimodal Dataset of Student Oral Presentations with Sensors and Evaluation Data

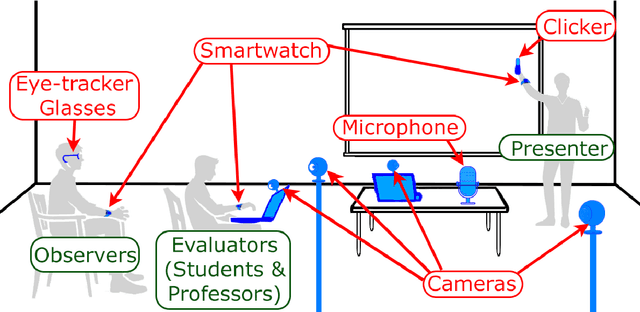

Jan 12, 2026

Abstract:Oral presentation skills are a critical component of higher education, yet comprehensive datasets capturing real-world student performance across multiple modalities remain scarce. To address this gap, we present SOPHIAS (Student Oral Presentation monitoring for Holistic Insights & Analytics using Sensors), a 12-hour multimodal dataset containing recordings of 50 oral presentations (10-15-minute presentation followed by 5-15-minute Q&A) delivered by 65 undergraduate and master's students at the Universidad Autonoma de Madrid. SOPHIAS integrates eight synchronized sensor streams from high-definition webcams, ambient and webcam audio, eye-tracking glasses, smartwatch physiological sensors, and clicker, keyboard, and mouse interactions. In addition, the dataset includes slides and rubric-based evaluations from teachers, peers, and self-assessments, along with timestamped contextual annotations. The dataset captures presentations conducted in real classroom settings, preserving authentic student behaviors, interactions, and physiological responses. SOPHIAS enables the exploration of relationships between multimodal behavioral and physiological signals and presentation performance, supports the study of peer assessment, and provides a benchmark for developing automated feedback and Multimodal Learning Analytics tools. The dataset is publicly available for research through GitHub and Science Data Bank.

Enhancing Online Learning by Integrating Biosensors and Multimodal Learning Analytics for Detecting and Predicting Student Behavior: A Review

Sep 09, 2025

Abstract:In modern online learning, understanding and predicting student behavior is crucial for enhancing engagement and optimizing educational outcomes. This systematic review explores the integration of biosensors and Multimodal Learning Analytics (MmLA) to analyze and predict student behavior during computer-based learning sessions. We examine key challenges, including emotion and attention detection, behavioral analysis, experimental design, and demographic considerations in data collection. Our study highlights the growing role of physiological signals, such as heart rate, brain activity, and eye-tracking, combined with traditional interaction data and self-reports to gain deeper insights into cognitive states and engagement levels. We synthesize findings from 54 key studies, analyzing commonly used methodologies such as advanced machine learning algorithms and multimodal data pre-processing techniques. The review identifies current research trends, limitations, and emerging directions in the field, emphasizing the transformative potential of biosensor-driven adaptive learning systems. Our findings suggest that integrating multimodal data can facilitate personalized learning experiences, real-time feedback, and intelligent educational interventions, ultimately advancing toward a more customized and adaptive online learning experience.

MOSAIC-F: A Framework for Enhancing Students' Oral Presentation Skills through Personalized Feedback

Jun 10, 2025

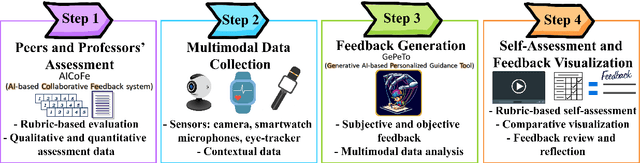

Abstract:In this article, we present a novel multimodal feedback framework called MOSAIC-F, an acronym for a data-driven Framework that integrates Multimodal Learning Analytics (MMLA), Observations, Sensors, Artificial Intelligence (AI), and Collaborative assessments for generating personalized feedback on student learning activities. This framework consists of four key steps. First, peers' and professors' assessments are conducted through standardized rubrics that include both quantitative and qualitative evaluations. Second, multimodal data are collected during learning activities, including video recordings, audio capture, gaze tracking, physiological signals (heart rate, motion data), and behavioral interactions. Third, personalized feedback is generated using AI, synthesizing human-based evaluations and data-based multimodal insights such as posture, speech patterns, stress levels, and cognitive load, among others. Finally, students review their own performance through video recordings and engage in self-assessment and feedback visualization, comparing their own evaluations with peers' and professors' assessments, class averages, and AI-generated recommendations. By combining human-based and data-based evaluation techniques, this framework enables more accurate, personalized, and actionable feedback. We tested MOSAIC-F in the context of improving oral presentation skills.

M2LADS Demo: A System for Generating Multimodal Learning Analytics Dashboards

Feb 21, 2025

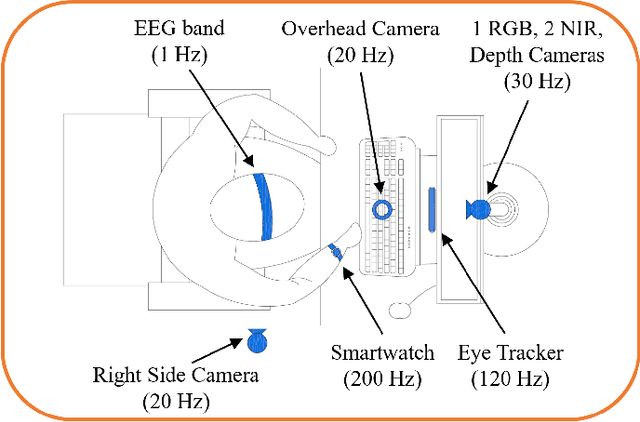

Abstract:We present a demonstration of a web-based system called M2LADS ("System for Generating Multimodal Learning Analytics Dashboards"), designed to integrate, synchronize, visualize, and analyze multimodal data recorded during computer-based learning sessions with biosensors. This system presents a range of biometric and behavioral data on web-based dashboards, providing detailed insights into various physiological and activity-based metrics. The multimodal data visualized include electroencephalogram (EEG) data for assessing attention and brain activity, heart rate metrics, eye-tracking data to measure visual attention, webcam video recordings, and activity logs of the monitored tasks. M2LADS aims to assist data scientists in two key ways: (1) by providing a comprehensive view of participants' experiences, displaying all data categorized by the activities in which participants are engaged, and (2) by synchronizing all biosignals and videos, facilitating easier data relabeling if any activity information contains errors.

IMPROVE: Impact of Mobile Phones on Remote Online Virtual Education

Dec 13, 2024

Abstract:This work presents the IMPROVE dataset, designed to evaluate the effects of mobile phone usage on learners during online education. The dataset not only assesses academic performance and subjective learner feedback but also captures biometric, behavioral, and physiological signals, providing a comprehensive analysis of the impact of mobile phone use on learning. Multimodal data were collected from 120 learners in three groups with different phone interaction levels. A setup involving 16 sensors was implemented to collect data that have proven to be effective indicators for understanding learner behavior and cognition, including electroencephalography waves, videos, eye tracker, etc. The dataset includes metadata from the processed videos like face bounding boxes, facial landmarks, and Euler angles for head pose estimation. In addition, learner performance data and self-reported forms are included. Phone usage events were labeled, covering both supervisor-triggered and uncontrolled events. A semi-manual re-labeling system, using head pose and eye tracker data, is proposed to improve labeling accuracy. Technical validation confirmed signal quality, with statistical analyses revealing biometric changes during phone use.

Visual Attention Analysis in Online Learning

May 31, 2024

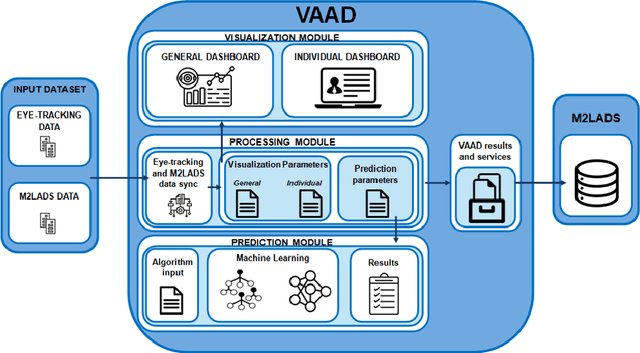

Abstract:In this paper, we present an approach in the Multimodal Learning Analytics field. Within this approach, we have developed a tool to visualize and analyze eye movement data collected during learning sessions in online courses. The tool is named VAAD (an acronym for Visual Attention Analysis Dashboard). These eye movement data have been gathered using an eye-tracker and subsequently processed and visualized for interpretation. The purpose of the tool is to conduct a descriptive analysis of the data by facilitating its visualization, enabling the identification of differences and learning patterns among various learner populations. Additionally, it integrates a predictive module capable of anticipating learner activities during a learning session. Consequently, VAAD holds the potential to offer valuable insights into online learning behaviors from both descriptive and predictive perspectives.

Biometrics and Behavioral Modelling for Detecting Distractions in Online Learning

May 24, 2024

Abstract:In this article, we explore computer vision approaches to detect abnormal head pose during e-learning sessions and we introduce a study on the effects of mobile phone usage during these sessions. We utilize behavioral data collected from 120 learners monitored while participating in MOOC learning sessions. Our study focuses on the influence of phone-usage events on behavior and physiological responses, specifically attention, heart rate, and meditation, before, during, and after phone usage. Additionally, we propose an approach for estimating head pose events using images taken by the webcam during the MOOC learning sessions to detect phone-usage events. Our hypothesis suggests that head posture undergoes significant changes when learners interact with a mobile phone, contrasting with the typical behavior seen when learners face a computer during e-learning sessions. We propose an approach designed to detect deviations in head posture from the average observed during a learner's session, operating as a semi-supervised method. This system flags events indicating alterations in head posture for subsequent human review and selection of mobile phone usage occurrences with a sensitivity over 90%.
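The idea of flagging frames whose head posture deviates from the session average can be illustrated with a minimal sketch. This is not the authors' method: the function name `flag_head_pose_events`, the use of a single pitch angle, and the threshold `k` (in standard deviations) are all assumptions made for illustration only.

```python
from statistics import mean, pstdev


def flag_head_pose_events(pitch_deg, k=2.0):
    """Return indices of frames whose pitch deviates strongly from the session mean.

    pitch_deg: per-frame head pitch in degrees for ONE learner session
               (hypothetical input; a real system would likely use yaw/pitch/roll).
    k:         assumed threshold, expressed in session standard deviations.
    """
    mu = mean(pitch_deg)        # session-average head posture
    sigma = pstdev(pitch_deg)   # spread of posture within the session
    if sigma == 0:
        return []               # no variation, nothing to flag
    # Flag frames for later human review; this step does not decide
    # whether a flagged frame is actually a phone-usage event.
    return [i for i, p in enumerate(pitch_deg) if abs(p - mu) > k * sigma]


# Toy session: the learner looks far down in frame 8.
session_pitch = [0, 1, -1, 0, 1, -1, 0, 0, -40, 0]
flagged = flag_head_pose_events(session_pitch)
```

In this toy session only the frame with the large downward pitch is flagged; a reviewer would then decide whether it corresponds to phone usage.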

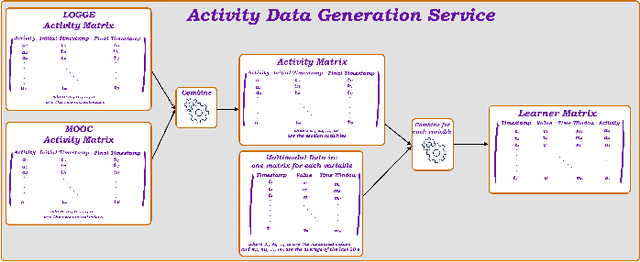

M2LADS: A System for Generating MultiModal Learning Analytics Dashboards in Open Education

May 21, 2023

Abstract:In this article, we present a Web-based System called M2LADS, which supports the integration and visualization of multimodal data recorded in learning sessions in a MOOC in the form of Web-based Dashboards. Based on the edBB platform, the multimodal data gathered contains biometric and behavioral signals including electroencephalogram data to measure learners' cognitive attention, heart rate for affective measures, and visual attention from the video recordings. Additionally, learners' static background data and their learning performance measures are tracked using LOGCE and MOOC tracking logs respectively, and both are included in the Web-based System. M2LADS provides opportunities to capture learners' holistic experience during their interactions with the MOOC, which can in turn be used to improve their learning outcomes through feedback visualizations and interventions, as well as to enhance learning analytics models and improve the open content of the MOOC.

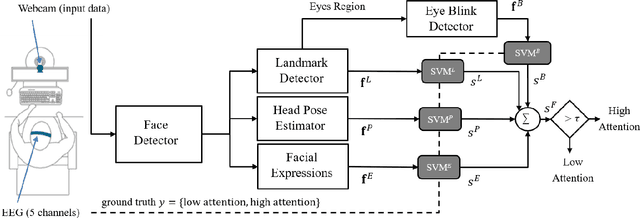

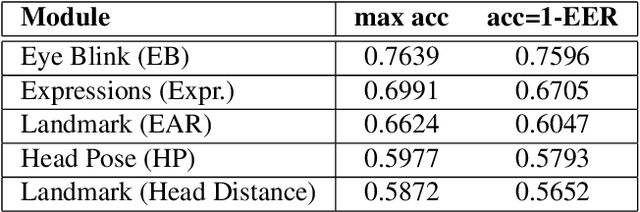

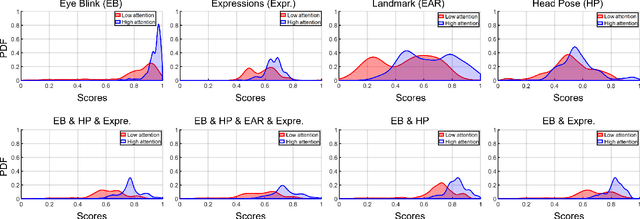

MATT: Multimodal Attention Level Estimation for e-learning Platforms

Jan 22, 2023

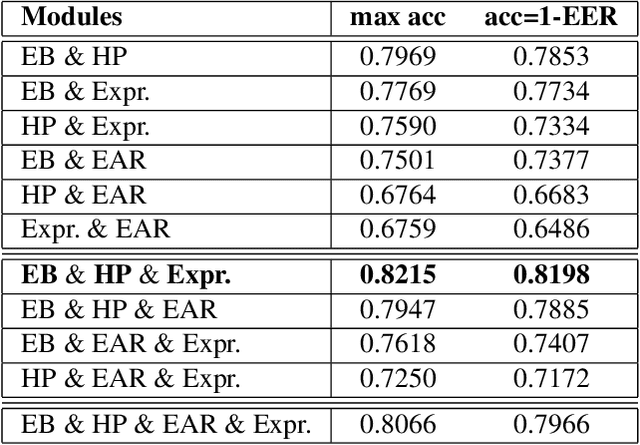

Abstract:This work presents a new multimodal system for remote attention level estimation based on multimodal face analysis. Our multimodal approach uses different parameters and signals obtained from the behavior and physiological processes that have been related to modeling cognitive load, such as face gestures (e.g., blink rate, facial action units) and user actions (e.g., head pose, distance to the camera). The multimodal system uses the following modules based on Convolutional Neural Networks (CNNs): eye blink detection, head pose estimation, facial landmark detection, and facial expression features. First, we individually evaluate the proposed modules in the task of estimating the student's attention level captured during online e-learning sessions. For that, we trained binary classifiers (high or low attention) based on Support Vector Machines (SVM) for each module. Second, we determine to what extent multimodal score-level fusion improves the attention level estimation. The mEBAL database is used in the experimental framework, a public multimodal database for attention level estimation obtained in an e-learning environment that contains data from 38 users while conducting several e-learning tasks of variable difficulty (creating changes in student cognitive loads).
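Score-level fusion, as mentioned in the abstract above, combines the output scores of separate per-module classifiers into one decision. The sketch below shows one common variant (min-max normalization followed by a weighted mean); the abstract does not state which fusion rule was used, so the normalization, the weights, the 0.5 threshold, and all function and module names here are assumptions.

```python
def min_max_normalize(scores):
    """Rescale a list of raw scores to the [0, 1] range."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.5] * len(scores)  # degenerate case: all scores identical
    return [(s - lo) / (hi - lo) for s in scores]


def fuse_scores(module_scores, weights=None):
    """Weighted-mean score-level fusion across modules.

    module_scores: dict mapping a module name to its per-sample raw scores
                   (e.g. SVM decision values); names are hypothetical.
    weights:       optional dict of per-module weights (defaults to equal).
    """
    names = list(module_scores)
    if weights is None:
        weights = {name: 1.0 for name in names}
    normalized = {name: min_max_normalize(module_scores[name]) for name in names}
    n_samples = len(normalized[names[0]])
    total_weight = sum(weights[name] for name in names)
    return [
        sum(weights[name] * normalized[name][i] for name in names) / total_weight
        for i in range(n_samples)
    ]


def classify_attention(fused, threshold=0.5):
    """Map fused scores to 'high' or 'low' attention (threshold is assumed)."""
    return ["high" if s >= threshold else "low" for s in fused]


# Toy example with two hypothetical modules and three samples.
scores = {"blink": [0.0, 2.0, 4.0], "head_pose": [-1.0, 0.0, 1.0]}
fused = fuse_scores(scores)
labels = classify_attention(fused)
```

Normalizing before fusing keeps a module with a wide raw score range from dominating the combined score.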

ALEBk: Feasibility Study of Attention Level Estimation via Blink Detection applied to e-Learning

Dec 16, 2021

Abstract:This work presents a feasibility study of remote attention level estimation based on eye blink frequency. We first propose an eye blink detection system based on Convolutional Neural Networks (CNNs), very competitive with respect to related works. Using this detector, we experimentally evaluate the relationship between the eye blink rate and the attention level of students captured during online sessions. The experimental framework is carried out using a public multimodal database for eye blink detection and attention level estimation called mEBAL, which comprises data from 38 students and multiple acquisition sensors, in particular, i) an electroencephalogram (EEG) band which provides the time signals coming from the student's cognitive information, and ii) RGB and NIR cameras to capture the students' face gestures. The results achieved suggest an inverse correlation between the eye blink frequency and the attention level. This relation is used in our proposed method called ALEBk for estimating the attention level as the inverse of the eye blink frequency. Our results open a new research line to introduce this technology for attention level estimation on future e-learning platforms, among other applications of this kind of behavioral biometrics based on face analysis.
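The relation described above, attention estimated as the inverse of the eye blink frequency, can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the one-minute window, and the smoothing term `eps` (added to avoid division by zero when no blinks occur) are not taken from the paper.

```python
def blink_rate_per_minute(blink_timestamps_s, window_start_s, window_end_s):
    """Count blinks inside [window_start_s, window_end_s) and convert to blinks/min."""
    duration_min = (window_end_s - window_start_s) / 60.0
    if duration_min <= 0:
        raise ValueError("window must have positive duration")
    count = sum(1 for t in blink_timestamps_s if window_start_s <= t < window_end_s)
    return count / duration_min


def attention_proxy(blink_rate, eps=1.0):
    """Inverse-blink-rate attention proxy; eps is an assumed smoothing constant."""
    return 1.0 / (blink_rate + eps)


# Hypothetical detector output: blink times in seconds from session start.
blinks = [1.0, 5.0, 20.0, 70.0]
rate = blink_rate_per_minute(blinks, 0.0, 60.0)  # three blinks fall in the first minute
proxy = attention_proxy(rate)
```

A higher blink rate yields a lower proxy value, which matches the inverse correlation reported in the abstract; the absolute scale of the proxy has no meaning without calibration against a reference signal such as EEG.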
