Álvaro Becerra

Visual Attention Analysis in Online Learning

May 31, 2024

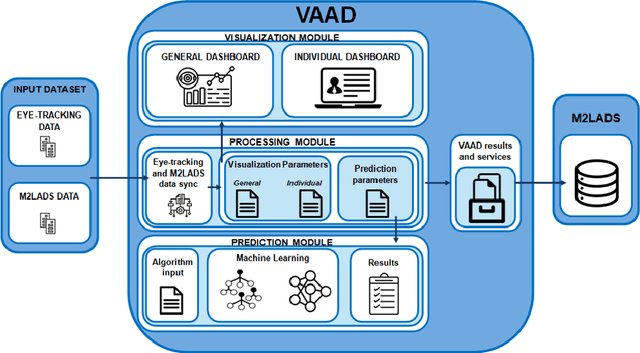

Abstract: In this paper, we present an approach in the Multimodal Learning Analytics field. Within this approach, we have developed a tool to visualize and analyze eye movement data collected during learning sessions in online courses. The tool is named VAAD (an acronym for Visual Attention Analysis Dashboard). These eye movement data have been gathered using an eye-tracker and subsequently processed and visualized for interpretation. The purpose of the tool is to conduct a descriptive analysis of the data by facilitating its visualization, enabling the identification of differences and learning patterns among various learner populations. Additionally, it integrates a predictive module capable of anticipating learner activities during a learning session. Consequently, VAAD holds the potential to offer valuable insights into online learning behaviors from both descriptive and predictive perspectives.

Biometrics and Behavioral Modelling for Detecting Distractions in Online Learning

May 24, 2024

Abstract: In this article, we explore computer vision approaches to detect abnormal head pose during e-learning sessions and we introduce a study on the effects of mobile phone usage during these sessions. We utilize behavioral data collected from 120 learners monitored while participating in MOOC learning sessions. Our study focuses on the influence of phone-usage events on behavior and physiological responses, specifically attention, heart rate, and meditation, before, during, and after phone usage. Additionally, we propose an approach for estimating head pose events using images taken by the webcam during the MOOC learning sessions to detect phone-usage events. Our hypothesis is that head posture changes significantly when learners interact with a mobile phone, in contrast with the typical behavior seen when learners face a computer during e-learning sessions. We propose a semi-supervised approach designed to detect deviations in head posture from the average observed during a learner's session. This system flags events indicating alterations in head posture for subsequent human review and selection of mobile phone usage occurrences, with a sensitivity over 90%.
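The idea of flagging deviations from a learner's session-average head posture can be illustrated with a minimal sketch. The abstract does not state how deviation is measured, so the z-score threshold used here is an assumption, as are the function name `flag_head_pose_events`, the `(yaw, pitch)` input format, and the default `k=2.0`. It is not the authors' implementation.

```python
from statistics import mean, stdev


def flag_head_pose_events(angles, k=2.0):
    """Return indices of frames whose head pose deviates from the session average.

    angles: list of (yaw, pitch) tuples in degrees, one per webcam frame
            (hypothetical input format; needs at least two frames).
    k:      assumed threshold, in standard deviations from the session mean.
    """
    yaws = [a[0] for a in angles]
    pitches = [a[1] for a in angles]

    # Session-level statistics for this learner.
    mu_yaw, mu_pitch = mean(yaws), mean(pitches)
    sd_yaw, sd_pitch = stdev(yaws), stdev(pitches)

    flagged = []
    for i, (yaw, pitch) in enumerate(angles):
        # A zero standard deviation means the angle never changed,
        # so no frame can deviate on that axis.
        z_yaw = abs(yaw - mu_yaw) / sd_yaw if sd_yaw > 0 else 0.0
        z_pitch = abs(pitch - mu_pitch) / sd_pitch if sd_pitch > 0 else 0.0
        if max(z_yaw, z_pitch) > k:
            flagged.append(i)  # candidate event for human review
    return flagged


# Toy session: the learner faces the screen, except one frame looking down.
session = [(0.0, 0.0)] * 20 + [(5.0, -40.0)] + [(0.0, 0.0)] * 20
candidates = flag_head_pose_events(session)
```

In this sketch the flagged indices are only candidates. As in the abstract, a human reviewer would then decide which of them correspond to actual phone usage.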

M2LADS: A System for Generating MultiModal Learning Analytics Dashboards in Open Education

May 21, 2023

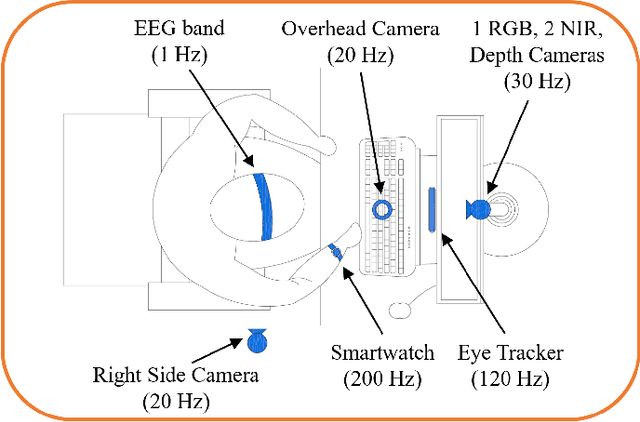

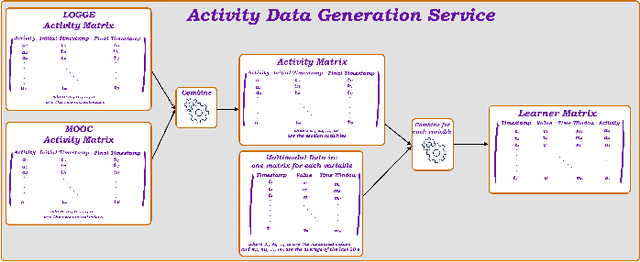

Abstract: In this article, we present a Web-based System called M2LADS, which supports the integration and visualization of multimodal data recorded in learning sessions in a MOOC in the form of Web-based Dashboards. Based on the edBB platform, the multimodal data gathered contains biometric and behavioral signals including electroencephalogram data to measure learners' cognitive attention, heart rate for affective measures, and visual attention from the video recordings. Additionally, learners' static background data and their learning performance measures are tracked using LOGCE and MOOC tracking logs respectively, and both are included in the Web-based System. M2LADS provides opportunities to capture learners' holistic experience during their interactions with the MOOC, which can in turn be used to improve their learning outcomes through feedback visualizations and interventions, as well as to enhance learning analytics models and improve the open content of the MOOC.