Runze Yu

Two-Bit RIS-Aided Communications at 3.5GHz: Some Insights from the Measurement Results Under Multiple Practical Scenes

May 19, 2023

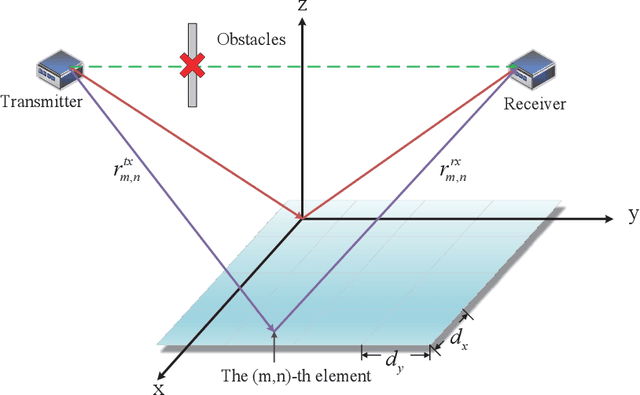

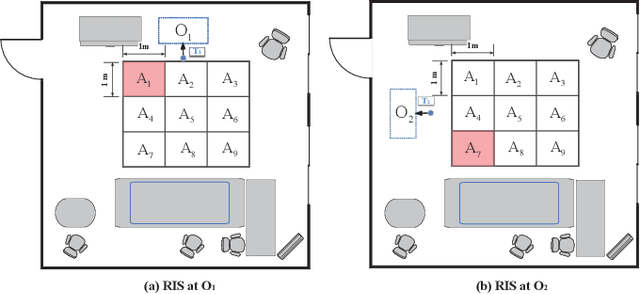

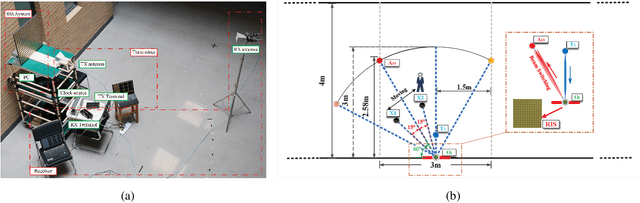

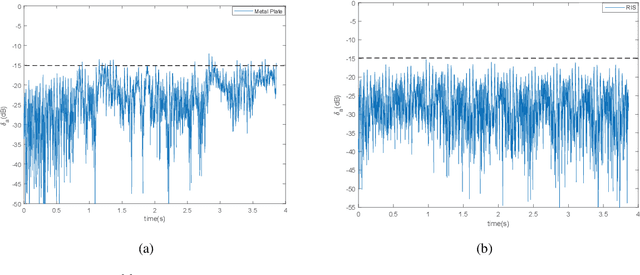

Abstract: In this paper, we propose a two-bit reconfigurable intelligent surface (RIS)-aided communication system, which mainly consists of a two-bit RIS, a transmitter and a receiver. A corresponding prototype verification system is designed to perform experimental tests in practical environments. The carrier frequency is set to 3.5 GHz, and the RIS array has 256 units, each of which adopts two-bit phase quantization. In particular, we adopt a self-developed broadband intelligent communication system 40MHz-Net (BICT-40N) terminal in order to fully acquire the channel information. The terminal mainly includes a baseband board and a radio frequency (RF) front-end board, where the latter can achieve 26 dB transmitting link gain and 33 dB receiving link gain. An orthogonal frequency division multiplexing (OFDM) signal is used for the terminal, with a bandwidth of 40 MHz and a subcarrier spacing of 625 kHz. The terminal also supports a series of modulation modes, including QPSK, QAM, etc. Through experimental tests, we validate several functions and properties of the RIS as follows. First, we validate a novel RIS power consumption model, which considers both the static and the dynamic power consumption. Second, we demonstrate the existence of imaging interference and find that the two-bit RIS can lower the imaging interference by about 10 dBm. Third, we verify that the RIS can outperform a metal plate in terms of beam focusing performance. Fourth, we find that the RIS can improve the channel stationarity. Fifth, we realize multi-beam reflection of the RIS using the pattern addition (PA) algorithm. Lastly, we validate the existence of mutual coupling between different RIS units.
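As a rough illustration of what two-bit phase quantization means for a single RIS unit, the sketch below rounds a desired phase to the nearest of four uniformly spaced levels. The uniform level spacing and the function name `quantize_phase` are assumptions made for illustration; the abstract does not state how the prototype maps phases to states. The last lines simply divide the stated bandwidth by the stated subcarrier spacing; the actual FFT size and number of used subcarriers are not given in the abstract.

```python
import math

def quantize_phase(phase_rad, bits=2):
    """Round a phase to the nearest of 2**bits uniformly spaced levels.

    Assumption: levels are uniform over [0, 2*pi), i.e. 0, 90, 180 and
    270 degrees for bits=2. Returns (state index, quantized phase).
    """
    levels = 2 ** bits
    step = 2 * math.pi / levels
    # Wrap into [0, 2*pi), round to the nearest level, wrap the top level to 0.
    index = round((phase_rad % (2 * math.pi)) / step) % levels
    return index, index * step

# Arithmetic implied by the stated OFDM parameters (not a claim about FFT size).
bandwidth_hz = 40e6
subcarrier_spacing_hz = 625e3
subcarriers_spanned = bandwidth_hz / subcarrier_spacing_hz
```

With two bits, the worst-case rounding error per unit is half a step, that is 45 degrees.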

A Robotic Auto-Focus System based on Deep Reinforcement Learning

Sep 05, 2018

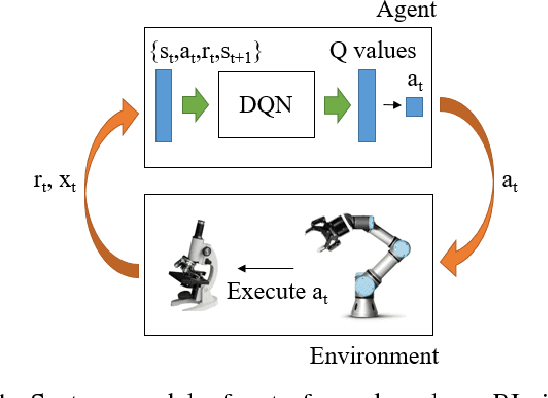

Abstract: Considering its advantages in dealing with high-dimensional visual input and learning control policies in discrete domains, Deep Q Network (DQN) could become an alternative to traditional auto-focus methods in the future. In this paper, based on Deep Reinforcement Learning, we propose an end-to-end approach that can learn auto-focus policies from visual input and finish at a clear spot automatically. We demonstrate that our method, which discretizes the action space with coarse-to-fine steps and applies DQN, is not only a solution to auto-focus but also a general approach to vision-based control problems. Separate phases of training in virtual and real environments are applied to obtain an effective model. Virtual experiments, which are carried out after the virtual training phase, indicate that our method can achieve 100% accuracy on a certain view with different focus ranges. Further training on real robots could eliminate the deviation between the simulator and the real scenario, leading to reliable performance in real applications.
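A minimal sketch of what a coarse-to-fine discretized action space could look like for a focus motor is given below. The step sizes, the position range, the explicit stop action and the names `ACTIONS` and `apply_action` are all hypothetical; the abstract does not specify them, and this is not the authors' implementation. A DQN would output one Q-value per entry of `ACTIONS` and pick the index with the highest value.

```python
# Hypothetical step sizes in motor units; the abstract does not give values.
COARSE_STEP = 100
FINE_STEP = 10

# Discrete action set: coarse moves, fine moves, and a stop action (delta 0).
ACTIONS = (+COARSE_STEP, -COARSE_STEP, +FINE_STEP, -FINE_STEP, 0)

def apply_action(position, action_index, lo=0, hi=1000):
    """Apply one discrete action to the focus position.

    Returns (new_position, done). The position is clamped to [lo, hi];
    done is True only when the stop action is chosen.
    """
    delta = ACTIONS[action_index]
    new_position = min(hi, max(lo, position + delta))
    done = delta == 0
    return new_position, done
```

Coarse steps let the agent cross a long focus range in few actions, while fine steps allow it to settle near the sharpest position before stopping.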
