Runsheng Zhang

University of Southern California

DeepSORT-Driven Visual Tracking Approach for Gesture Recognition in Interactive Systems

May 11, 2025

Abstract:Based on the DeepSORT algorithm, this study explores the application of visual tracking technology in intelligent human-computer interaction, especially in gesture recognition and tracking. With the rapid development of artificial intelligence and deep learning technology, vision-based interaction has gradually replaced traditional input devices and become an important way for intelligent systems to interact with users. The DeepSORT algorithm can achieve accurate target tracking in dynamic environments by combining Kalman filters and deep learning feature extraction methods. It is especially suitable for complex scenes with multi-target tracking and fast movements. This study experimentally verifies the superior performance of DeepSORT in gesture recognition and tracking. It can accurately capture and track the user's gesture trajectory and is superior to traditional tracking methods in terms of real-time performance and accuracy. In addition, this study uses gesture recognition experiments to evaluate the recognition ability and feedback response of the DeepSORT algorithm under different gestures (such as sliding, clicking, and zooming). The experimental results show that DeepSORT can not only deal effectively with target occlusion and motion blur but also track stably in a multi-target environment, achieving a smooth user interaction experience. Finally, this paper looks ahead to the future development of intelligent human-computer interaction systems based on visual tracking and proposes future research focuses such as algorithm optimization, data fusion, and multimodal interaction in order to promote a more intelligent and personalized interactive experience.

Keywords: DeepSORT, visual tracking, gesture recognition, human-computer interaction

Dynamic User Interface Generation for Enhanced Human-Computer Interaction Using Variational Autoencoders

Dec 19, 2024

Abstract:This study presents a novel approach for intelligent user interaction interface generation and optimization, grounded in the variational autoencoder (VAE) model. With the rapid advancement of intelligent technologies, traditional interface design methods struggle to meet the evolving demands for diversity and personalization, often lacking flexibility in real-time adjustments to enhance the user experience. Human-Computer Interaction (HCI) plays a critical role in addressing these challenges by focusing on creating interfaces that are functional, intuitive, and responsive to user needs. This research leverages the RICO dataset to train the VAE model, enabling the simulation and creation of user interfaces that align with user aesthetics and interaction habits. By integrating real-time user behavior data, the system dynamically refines and optimizes the interface, improving usability and underscoring the importance of HCI in achieving a seamless user experience. Experimental findings indicate that the VAE-based approach significantly enhances the quality and precision of interface generation compared to other methods, including autoencoders (AE), generative adversarial networks (GAN), conditional GANs (cGAN), deep belief networks (DBN), and VAE-GAN. This work contributes valuable insights into HCI, providing robust technical solutions for automated interface generation and enhanced user experience optimization.

Emotion-Aware Interaction Design in Intelligent User Interface Using Multi-Modal Deep Learning

Nov 10, 2024

Abstract:In an era where user interaction with technology is ubiquitous, the importance of user interface (UI) design cannot be overstated. A well-designed UI not only enhances usability but also fosters more natural, intuitive, and emotionally engaging experiences, making technology more accessible and impactful in everyday life. This research addresses this growing need by introducing an advanced emotion recognition system to significantly improve the emotional responsiveness of UI. By integrating facial expressions, speech, and textual data through a multi-branch Transformer model, the system interprets complex emotional cues in real-time, enabling UIs to interact more empathetically and effectively with users. Using the public MELD dataset for validation, our model demonstrates substantial improvements in emotion recognition accuracy and F1 scores, outperforming traditional methods. These findings underscore the critical role that sophisticated emotion recognition plays in the evolution of UIs, making technology more attuned to user needs and emotions. This study highlights how enhanced emotional intelligence in UIs is not only about technical innovation but also about fostering deeper, more meaningful connections between users and the digital world, ultimately shaping how people interact with technology in their daily lives.

Investigation and Evaluation of Adaptive Algorithms for Multichannel Active Noise Control System

Aug 09, 2023

Abstract:This dissertation focuses on the investigation and evaluation of adaptive algorithms for multichannel active noise control systems. The aim of the research is to investigate the effectiveness of the FxLMS algorithm and the pre-trained control filter in attenuating various types of noise. The study begins with a comprehensive review of the existing literature on active noise control, highlighting the significance of noise reduction in different applications. The theoretical foundations of the FxLMS algorithm and the pre-trained control filter are then presented, including their underlying principles and mathematical formulations. Through a comprehensive analysis, a clear understanding of these methods is established. To assess the performance of the FxLMS algorithm and the pre-trained control filter, extensive simulation experiments are conducted using real-world noise signals. The experiments include scenarios such as aircraft noise, traffic noise, and mixed noise. The results of the simulations are used to compare the noise reduction capabilities of the two methods and to evaluate their effectiveness under different noise conditions. The findings indicate that the FxLMS algorithm exhibits remarkable noise reduction performance. It demonstrates a strong ability to track and respond quickly to varying noise patterns. In the initial stages of noise reduction, the pre-trained control filter shows better performance. However, as time progresses, the FxLMS algorithm consistently achieves higher average noise reduction levels compared to the pre-trained control filter. Additionally, the FxLMS algorithm shows faster responsiveness during transitional periods when noise characteristics change. These findings contribute to the field of noise control and provide valuable insights for designing efficient noise reduction systems.

Learning from Pixel-Level Label Noise: A New Perspective for Semi-Supervised Semantic Segmentation

Mar 26, 2021

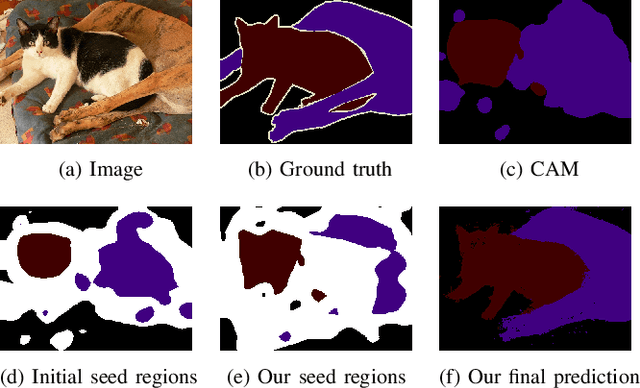

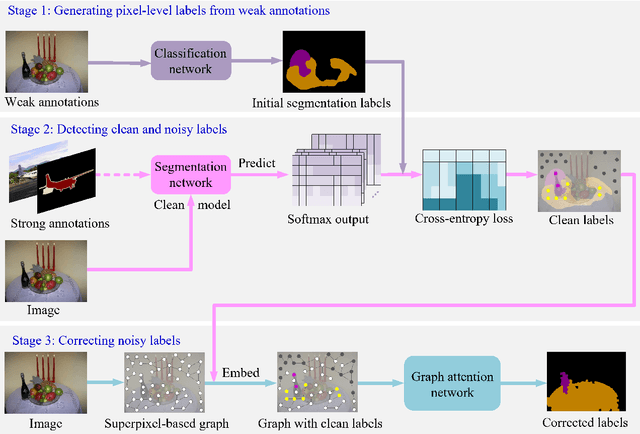

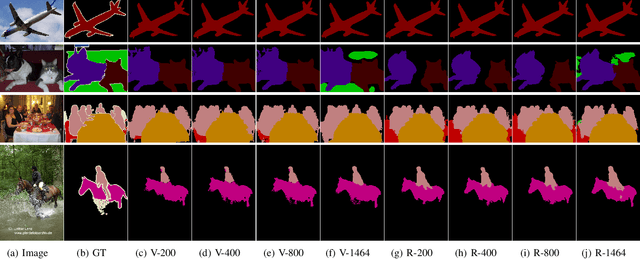

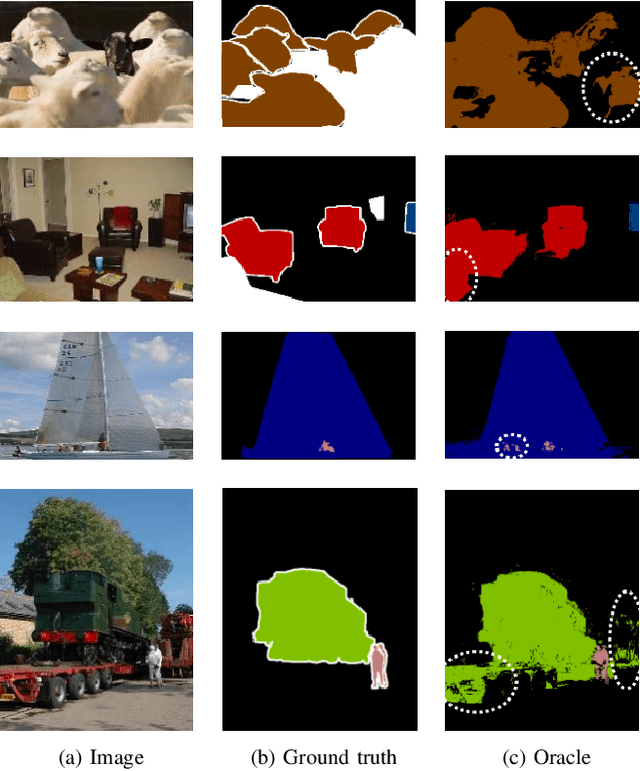

Abstract:This paper addresses semi-supervised semantic segmentation by exploiting a small set of images with pixel-level annotations (strong supervisions) and a large set of images with only image-level annotations (weak supervisions). Most existing approaches aim to generate accurate pixel-level labels from weak supervisions. However, we observe that those generated labels still inevitably contain noisy labels. Motivated by this observation, we present a novel perspective and formulate this task as a problem of learning with pixel-level label noise. Existing noisy label methods, nevertheless, mainly aim at image-level tasks, which cannot capture the relationship between neighboring labels in one image. Therefore, we propose a graph-based label noise detection and correction framework to deal with pixel-level noisy labels. In particular, for the pixel-level noisy labels generated from weak supervisions by Class Activation Map (CAM), we train a clean segmentation model with strong supervisions to detect the clean labels among these noisy labels according to the cross-entropy loss. Then, we adopt a superpixel-based graph to represent the relations of spatial adjacency and semantic similarity between pixels in one image. Finally, we correct the noisy labels using a Graph Attention Network (GAT) supervised by the detected clean labels. We conduct comprehensive experiments on the PASCAL VOC 2012, PASCAL-Context and MS-COCO datasets. The experimental results show that our proposed semi-supervised method achieves state-of-the-art performance and even outperforms the fully-supervised models on the PASCAL VOC 2012 and MS-COCO datasets in some cases.
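As a rough illustration of the clean-label detection step, the sketch below scores each noisy pixel label by its cross-entropy loss under a clean model's predicted class probabilities and keeps the labels whose loss is small. The abstract only says detection is done "according to the cross-entropy loss"; the fixed `loss_threshold` rule, the function names, and the toy data are assumptions made for illustration, not the paper's procedure.

```python
import math

def pixel_cross_entropy(probs, label, eps=1e-12):
    """Cross-entropy of one pixel: -log of the probability given to its label."""
    return -math.log(max(probs[label], eps))

def detect_clean_pixels(prob_map, noisy_labels, loss_threshold):
    """Return a boolean mask marking pixels whose noisy label looks clean.

    prob_map[y][x] is a list of class probabilities from the clean model.
    The threshold rule is an assumed stand-in for the paper's criterion.
    """
    clean_mask = []
    for prob_row, label_row in zip(prob_map, noisy_labels):
        clean_mask.append([
            pixel_cross_entropy(probs, label) < loss_threshold
            for probs, label in zip(prob_row, label_row)
        ])
    return clean_mask

# Toy 1x3 image with two classes (hypothetical values).
prob_map = [[[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]]
noisy_labels = [[0, 0, 1]]
print(detect_clean_pixels(prob_map, noisy_labels, loss_threshold=0.5))
```

Only the first pixel is kept here, because the clean model assigns its label a high probability; the other two labels disagree with the model and would be passed on for correction.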

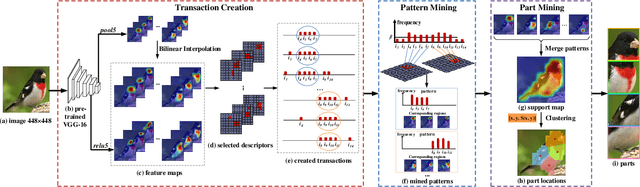

Mining Objects: Fully Unsupervised Object Discovery and Localization From a Single Image

Feb 26, 2019

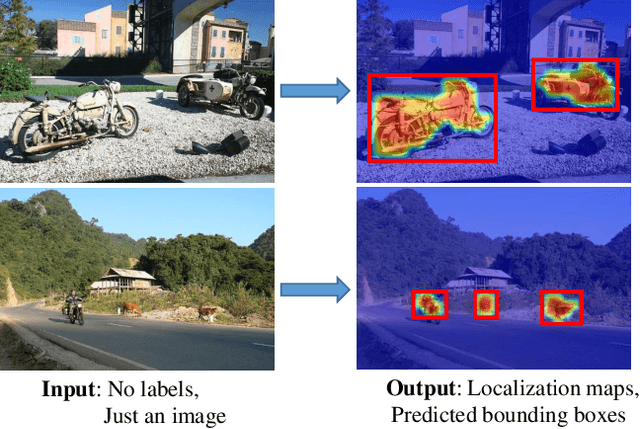

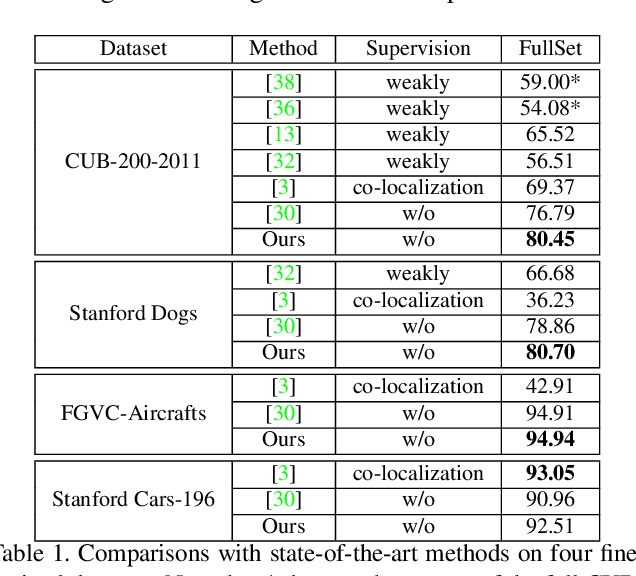

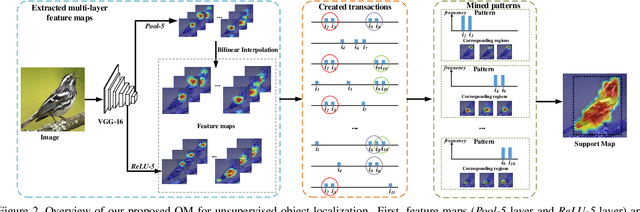

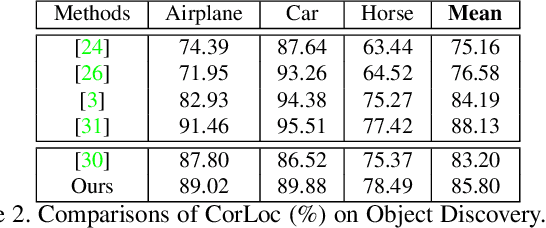

Abstract:The goal of our work is to discover dominant objects without using any annotations. We focus on performing unsupervised object discovery and localization in a strictly general setting where only a single image is given. This is far more challenging than typical co-localization or weakly-supervised localization tasks. To tackle this problem, we propose a simple but effective pattern mining-based method, called Object Mining (OM), which exploits the advantages of data mining and feature representation of pre-trained convolutional neural networks (CNNs). Specifically, Object Mining first converts the feature maps from a pre-trained CNN model into a set of transactions, and then frequent patterns are discovered from the transaction database through pattern mining techniques. We observe that those discovered patterns, i.e., co-occurrence highlighted regions, typically hold appearance and spatial consistency. Motivated by this observation, we can easily discover and localize possible objects by merging relevant meaningful patterns in an unsupervised manner. Extensive experiments on a variety of benchmarks demonstrate that Object Mining achieves competitive performance compared with the state-of-the-art methods.
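The conversion from feature maps to transactions can be pictured with a minimal sketch. It assumes that each spatial position forms one transaction whose items are the channels activated above a threshold, and it mines frequent patterns by brute-force counting of small itemsets. The thresholding rule, the function names, and the toy values are hypothetical, since the abstract does not specify these details.

```python
from collections import Counter
from itertools import combinations

def feature_maps_to_transactions(fmaps, threshold):
    """Build one transaction per spatial position (assumed encoding).

    fmaps is a list of C feature maps, each an H x W nested list.
    A transaction holds the channel indices active at that position.
    """
    height, width = len(fmaps[0]), len(fmaps[0][0])
    transactions = {}
    for y in range(height):
        for x in range(width):
            transactions[(y, x)] = frozenset(
                c for c, fmap in enumerate(fmaps) if fmap[y][x] > threshold
            )
    return transactions

def frequent_patterns(transactions, min_support, max_size=2):
    """Count itemsets of up to max_size items and keep the frequent ones."""
    counts = Counter()
    for items in transactions.values():
        for size in range(1, max_size + 1):
            for pattern in combinations(sorted(items), size):
                counts[pattern] += 1
    return {p: n for p, n in counts.items() if n >= min_support}

def pattern_region(transactions, pattern):
    """Positions whose transaction contains every item of the pattern."""
    return sorted(pos for pos, items in transactions.items()
                  if set(pattern) <= items)

# Toy input: 3 channels over a 2x3 grid (hypothetical activations).
fmaps = [
    [[0.9, 0.8, 0.1], [0.7, 0.2, 0.0]],
    [[0.8, 0.9, 0.0], [0.1, 0.1, 0.0]],
    [[0.0, 0.0, 0.9], [0.0, 0.0, 0.1]],
]
tx = feature_maps_to_transactions(fmaps, threshold=0.5)
print(frequent_patterns(tx, min_support=2))
print(pattern_region(tx, (0, 1)))
```

In this toy case channels 0 and 1 fire together at two neighboring positions, so the pattern `(0, 1)` marks a small co-occurrence region of the kind the abstract describes merging into object candidates.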

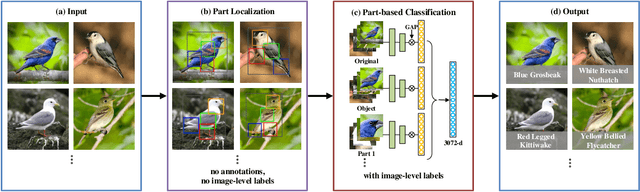

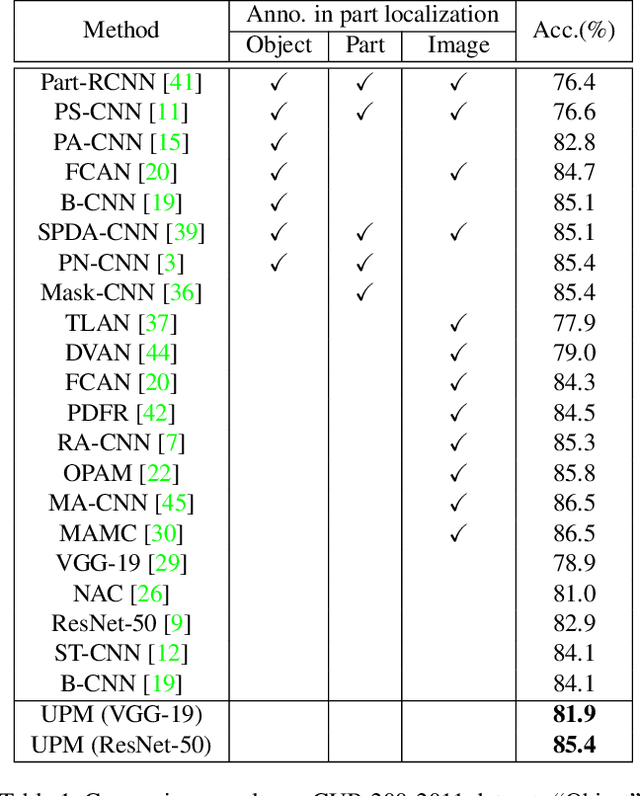

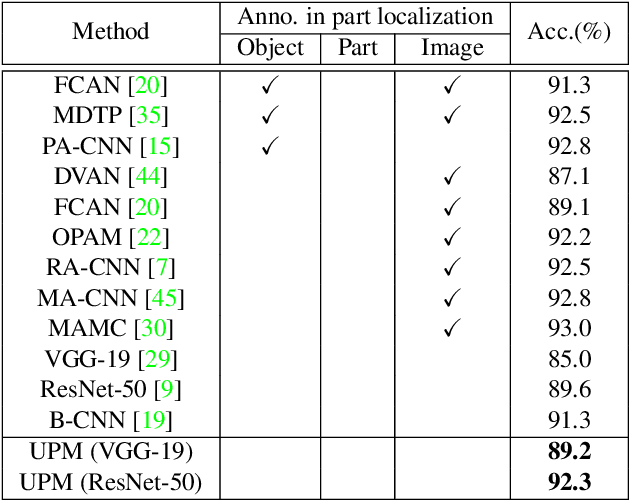

Unsupervised Part Mining for Fine-grained Image Classification

Feb 26, 2019

Abstract:Fine-grained image classification remains challenging due to the large intra-class variance and small inter-class variance. Since the subtle visual differences among subcategories lie only in local regions of discriminative parts, part localization is a key issue for fine-grained image classification. Most existing approaches localize objects or parts in an image with object or part annotations, which are expensive and labor-intensive. To tackle this issue, we propose a fully unsupervised part mining (UPM) approach to localize the discriminative parts without even image-level annotations, which largely improves the fine-grained classification performance. We first utilize pattern mining techniques to discover frequent patterns, i.e., co-occurrence highlighted regions, in the feature maps extracted from a pre-trained convolutional neural network (CNN) model. Inspired by the fact that these relevant meaningful patterns typically hold appearance and spatial consistency, we then cluster the mined regions to obtain the cluster centers, and the discriminative parts surrounding the cluster centers are generated. Importantly, no annotations or sophisticated training procedures are used in our proposed part localization approach. Finally, a multi-stream classification network is built for aggregating the original, object-level and part-level features simultaneously. Compared with other state-of-the-art approaches, our UPM approach achieves competitive performance.
