Ruffin White

SROS2: Usable Cyber Security Tools for ROS 2

Aug 04, 2022

Abstract: ROS 2 is rapidly becoming a standard in the robotics industry. Built upon DDS as its default communication middleware and used in safety-critical scenarios, adding security to robots and ROS computational graphs is increasingly becoming a concern. The present work introduces SROS2, a series of developer tools and libraries that facilitate adding security to ROS 2 graphs. Focusing on a usability-centric approach in SROS2, we present a methodology for securing graphs systematically while following the DevSecOps model. We also demonstrate the use of our security tools by presenting an application case study that considers securing a graph using the popular Navigation2 and SLAM Toolbox stacks applied in a TurtleBot3 robot. We analyse the current capabilities of SROS2 and discuss the shortcomings, which provides insights for future contributions and extensions. Ultimately, we present SROS2 as usable security tools for ROS 2 and argue that without usability, security in robotics will be greatly impaired.

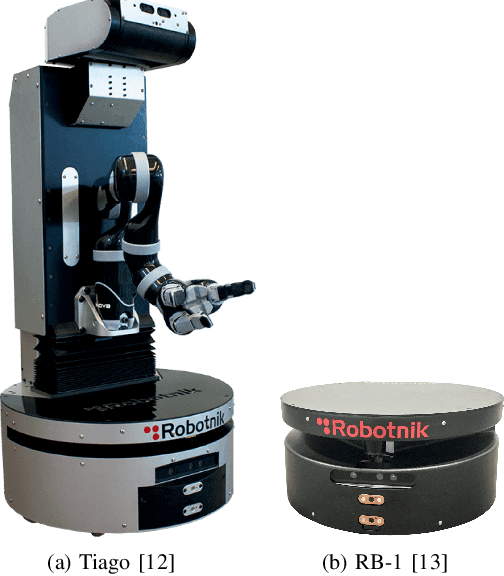

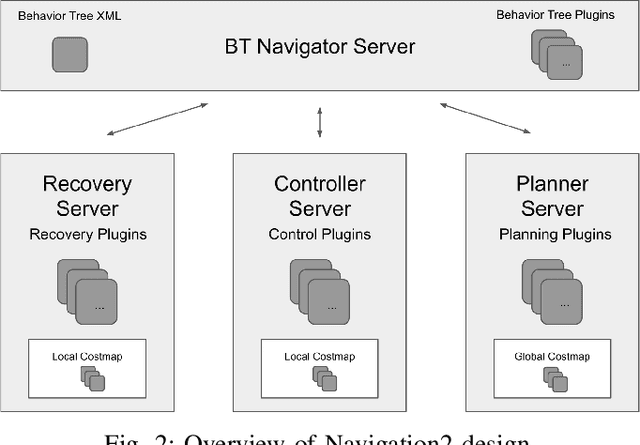

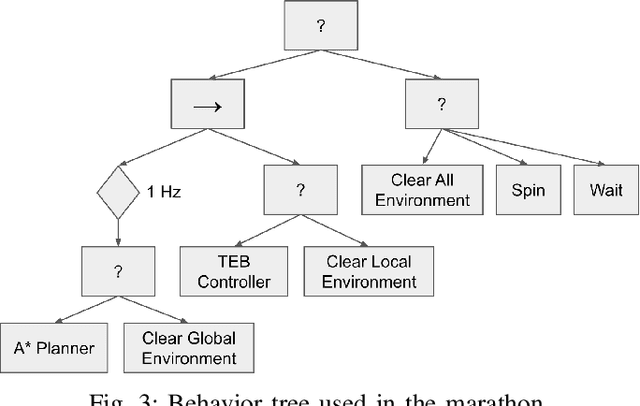

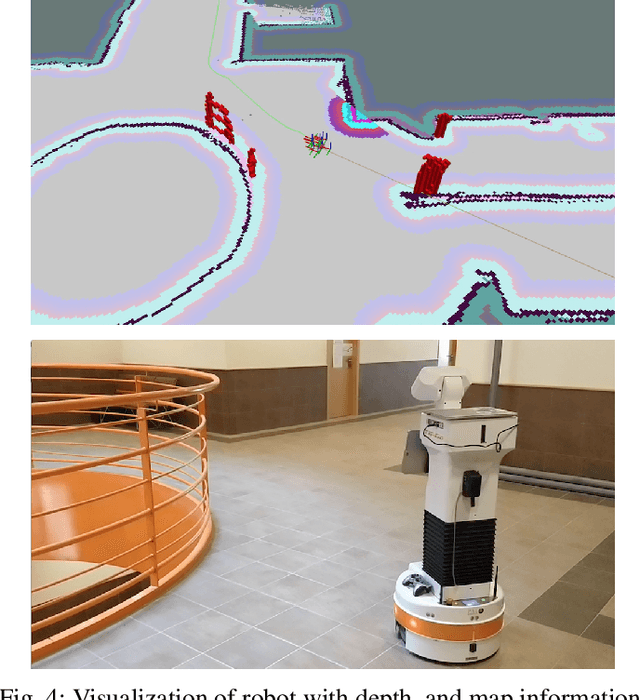

The Marathon 2: A Navigation System

Mar 01, 2020

Abstract: Developments in mobile robot navigation have enabled robots to operate in warehouses, retail stores, and on sidewalks around pedestrians. Various navigation solutions have been proposed, though few as widely adopted as ROS Navigation. 10 years on, it is still one of the most popular navigation solutions. Yet, ROS Navigation has failed to keep up with modern trends. We propose the new navigation solution, Navigation2, which builds on the successful legacy of ROS Navigation. Navigation2 uses a behavior tree for navigator task orchestration and employs new methods designed for dynamic environments applicable to a wider variety of modern sensors. It is built on top of ROS2, a secure message passing framework suitable for safety critical applications and program lifecycle management. We present experiments in a campus setting utilizing Navigation2 to operate safely alongside students over a marathon as an extension of the experiment proposed in Eppstein et al. The Navigation2 system is freely available at https://github.com/ros-planning/navigation2 with a rich community and instructions.

Procedurally Provisioned Access Control for Robotic Systems

Oct 18, 2018

Abstract: Security of robotics systems, as well as of the related middleware infrastructures, is a critical issue for industrial and domestic IoT, and it needs to be continuously assessed throughout the whole development lifecycle. The next generation open source robotic software stack, ROS2, is now targeting support for Secure DDS, providing the community with valuable tools for secure real world robotic deployments. In this work, we introduce a framework for procedural provisioning access control policies for robotic software, as well as for verifying the compliance of generated transport artifacts and decision point implementations.

SROS: Securing ROS over the wire, in the graph, and through the kernel

Nov 21, 2016

Abstract: SROS is a proposed addition to the ROS API and ecosystem to support modern cryptography and security measures. An overview of current progress will be presented, rationalizing each major advancement, including: over-the-wire cryptography for all data transport, namespaced access control enforcing graph policies/restrictions, and finally process profiles using Linux Security Modules to harden a node's resource access. By making the community aware of the vulnerabilities in ROS, as well as the proposed solutions provided by SROS, we intend to improve the state of security for future robotics subsystems.

* Workshop contribution presented at IEEE-RAS International Conference on Humanoid Robots (HUMANOIDS). 2016

Multi-modal Tracking for Object based SLAM

Mar 14, 2016

Abstract: We present an on-line 3D visual object tracking framework for monocular cameras by incorporating spatial knowledge and uncertainty from semantic mapping along with high frequency measurements from visual odometry. Using a combination of vision and odometry that are tightly integrated we can increase the overall performance of object based tracking for semantic mapping. We present a framework for integration of the two data-sources into a coherent framework through information based fusion/arbitration. We demonstrate the framework in the context of OmniMapper[1] and present results on 6 challenging sequences over multiple objects compared to data obtained from a motion capture system. We are able to achieve a mean error of 0.23m for per frame tracking, showing 9% less relative error than the state of the art tracker.
