Agostino Cortesi

Helping LLMs Improve Code Generation Using Feedback from Testing and Static Analysis

Dec 19, 2024

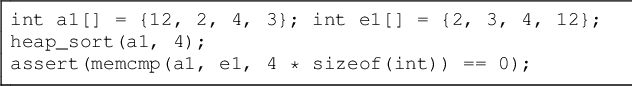

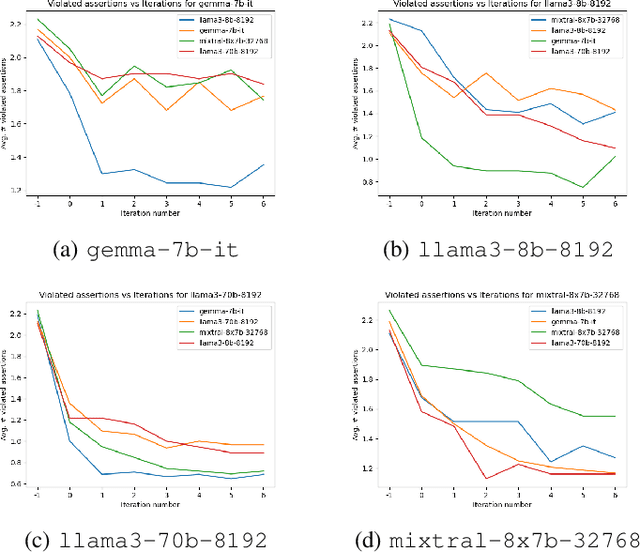

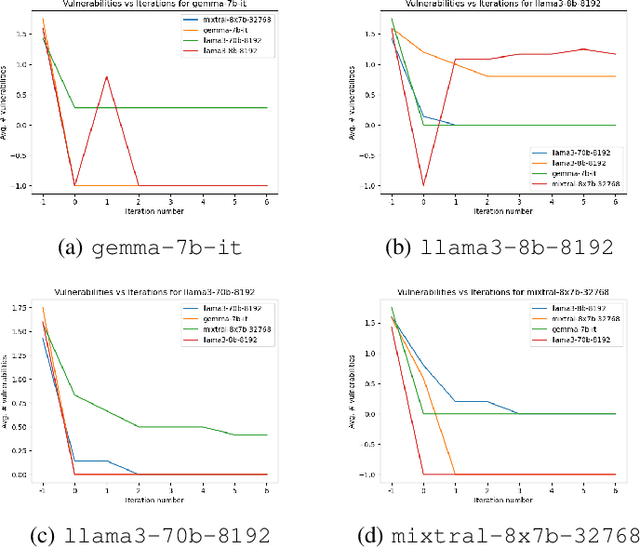

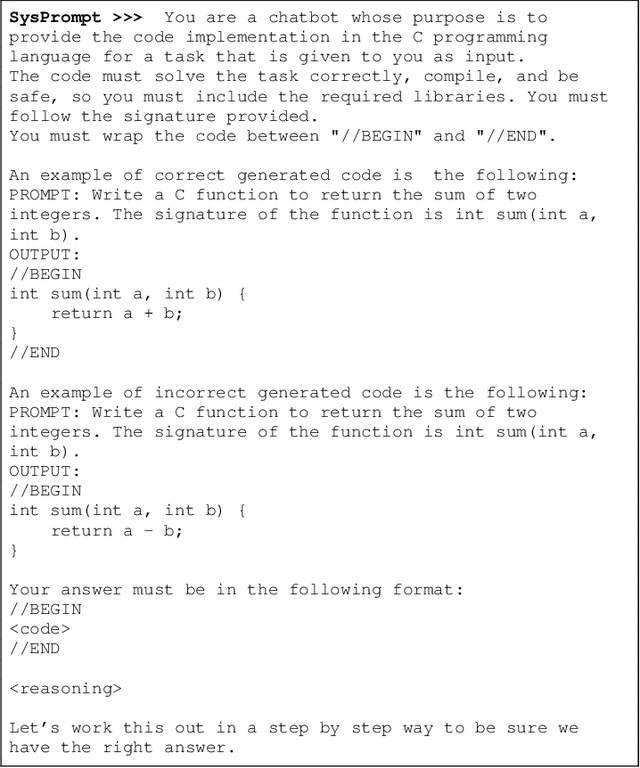

Abstract:Large Language Models (LLMs) are one of the most promising developments in the field of artificial intelligence, and the software engineering community has readily noticed their potential role in the software development life-cycle. Developers routinely ask LLMs to generate code snippets, increasing productivity but also potentially introducing ownership, privacy, correctness, and security issues. Previous work highlighted how code generated by mainstream commercial LLMs is often not safe, containing vulnerabilities, bugs, and code smells. In this paper, we present a framework that leverages testing and static analysis to assess the quality, and guide the self-improvement, of code generated by general-purpose, open-source LLMs. First, we ask LLMs to generate C code to solve a number of programming tasks. Then we employ ground-truth tests to assess the (in)correctness of the generated code, and a static analysis tool to detect potential safety vulnerabilities. Next, we assess the models ability to evaluate the generated code, by asking them to detect errors and vulnerabilities. Finally, we test the models ability to fix the generated code, providing the reports produced during the static analysis and incorrectness evaluation phases as feedback. Our results show that models often produce incorrect code, and that the generated code can include safety issues. Moreover, they perform very poorly at detecting either issue. On the positive side, we observe a substantial ability to fix flawed code when provided with information about failed tests or potential vulnerabilities, indicating a promising avenue for improving the safety of LLM-based code generation tools.

An Annexure to the Paper "Driving the Technology Value Stream by Analyzing App Reviews"

Mar 08, 2023

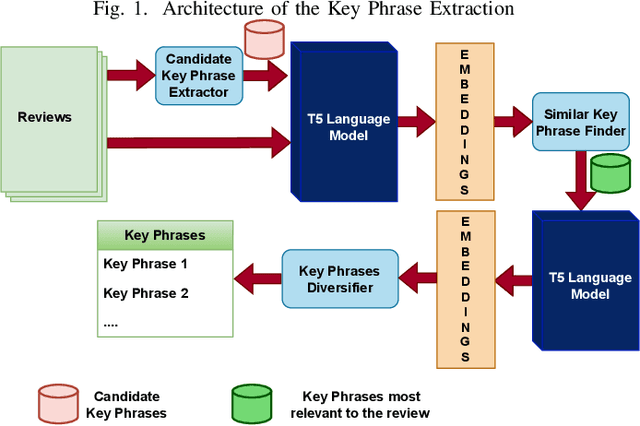

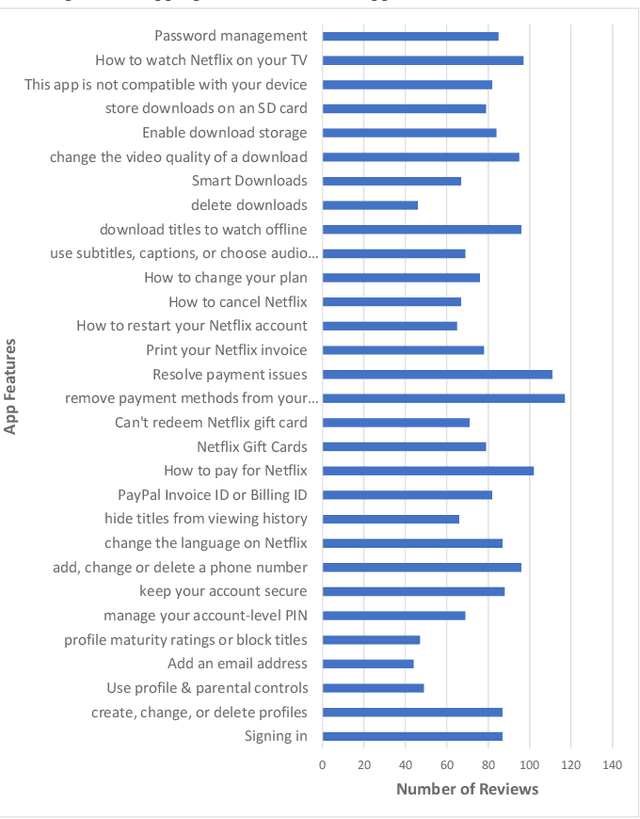

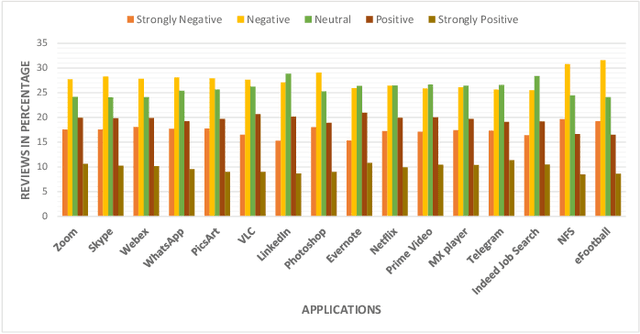

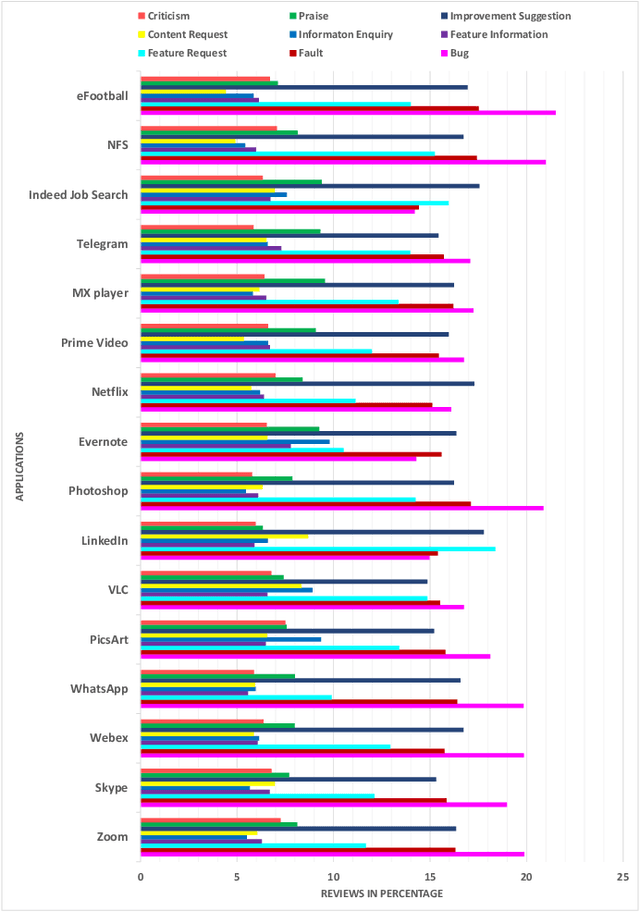

Abstract:This paper presents a novel framework that utilizes Natural Language Processing (NLP) techniques to understand user feedback on mobile applications. The framework allows software companies to drive their technology value stream based on user reviews, which can highlight areas for improvement. The framework is analyzed in depth, and its modules are evaluated for their effectiveness. The proposed approach is demonstrated to be effective through an analysis of reviews for sixteen popular Android Play Store applications over a long period of time.

Procedurally Provisioned Access Control for Robotic Systems

Oct 18, 2018

Abstract:Security of robotics systems, as well as of the related middleware infrastructures, is a critical issue for industrial and domestic IoT, and it needs to be continuously assessed throughout the whole development lifecycle. The next generation open source robotic software stack, ROS2, is now targeting support for Secure DDS, providing the community with valuable tools for secure real world robotic deployments. In this work, we introduce a framework for procedural provisioning access control policies for robotic software, as well as for verifying the compliance of generated transport artifacts and decision point implementations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge