Eleonora Iotti

Helping LLMs Improve Code Generation Using Feedback from Testing and Static Analysis

Dec 19, 2024

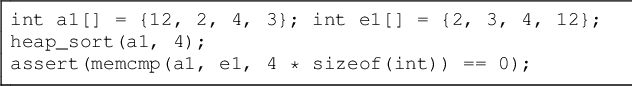

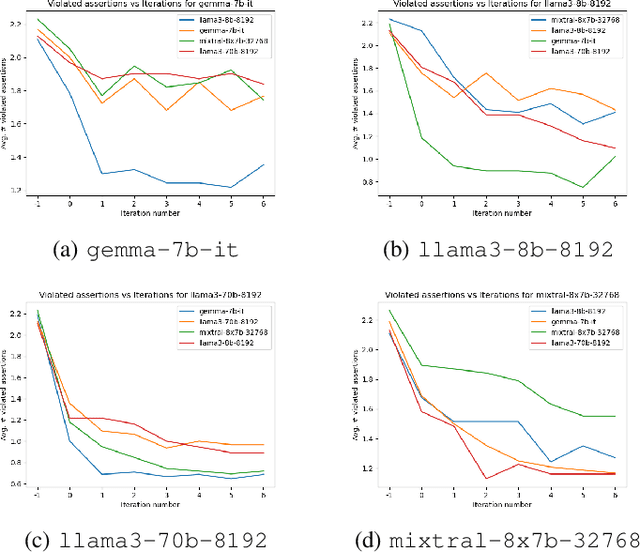

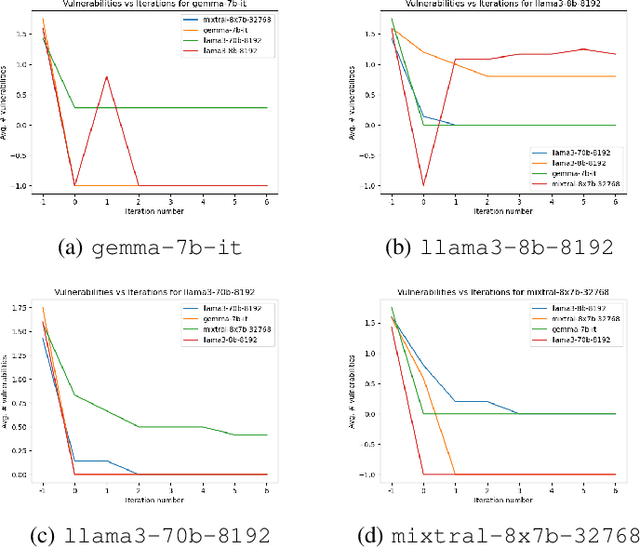

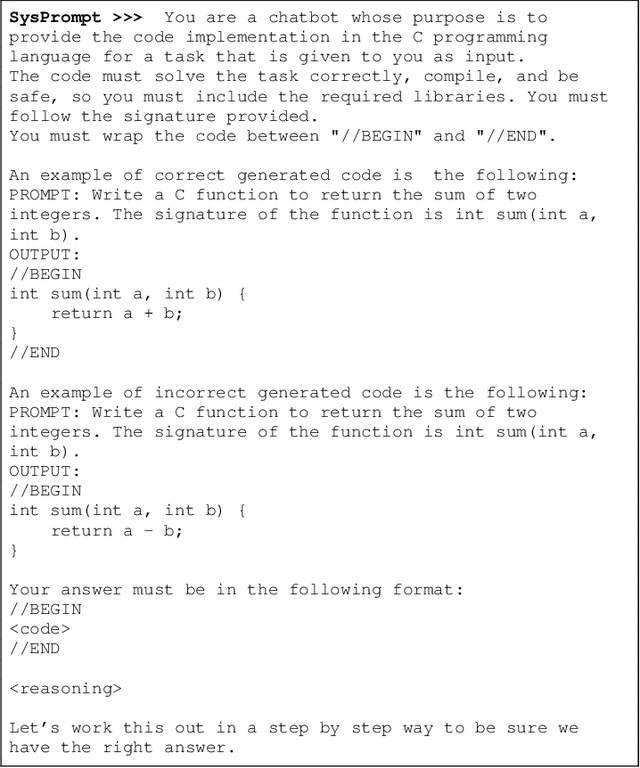

Abstract: Large Language Models (LLMs) are one of the most promising developments in the field of artificial intelligence, and the software engineering community has readily noticed their potential role in the software development life-cycle. Developers routinely ask LLMs to generate code snippets, increasing productivity but also potentially introducing ownership, privacy, correctness, and security issues. Previous work highlighted how code generated by mainstream commercial LLMs is often not safe, containing vulnerabilities, bugs, and code smells. In this paper, we present a framework that leverages testing and static analysis to assess the quality, and guide the self-improvement, of code generated by general-purpose, open-source LLMs. First, we ask LLMs to generate C code to solve a number of programming tasks. Then we employ ground-truth tests to assess the (in)correctness of the generated code, and a static analysis tool to detect potential safety vulnerabilities. Next, we assess the models' ability to evaluate the generated code, by asking them to detect errors and vulnerabilities. Finally, we test the models' ability to fix the generated code, providing the reports produced during the static analysis and incorrectness evaluation phases as feedback. Our results show that models often produce incorrect code, and that the generated code can include safety issues. Moreover, they perform very poorly at detecting either issue. On the positive side, we observe a substantial ability to fix flawed code when provided with information about failed tests or potential vulnerabilities, indicating a promising avenue for improving the safety of LLM-based code generation tools.
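The generate, evaluate, and fix-with-feedback stages described in this abstract can be illustrated with a minimal Python sketch. It is not the authors' framework: `Report`, `build_feedback_prompt`, `repair_loop`, the prompt wording, and the `max_rounds` retry limit are all hypothetical names and choices introduced here, and `fake_generate` and `fake_evaluate` are stand-ins for a real LLM call and for a real test harness plus static analyzer.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Report:
    """Hypothetical container for the two kinds of feedback named in the abstract."""
    failed_tests: List[str]
    warnings: List[str]

    @property
    def clean(self) -> bool:
        # True when no test failed and no static analysis warning was raised.
        return not self.failed_tests and not self.warnings


def build_feedback_prompt(task: str, code: str, report: Report) -> str:
    """Turn the evaluation report into a repair request (wording is an assumption)."""
    lines = [f"Task: {task}", "Your previous code:", code]
    if report.failed_tests:
        lines.append("Failed tests:")
        lines += [f"- {t}" for t in report.failed_tests]
    if report.warnings:
        lines.append("Static analysis warnings:")
        lines += [f"- {w}" for w in report.warnings]
    lines.append("Please return a corrected version.")
    return "\n".join(lines)


def repair_loop(
    task: str,
    generate: Callable[[str], str],
    evaluate: Callable[[str], Report],
    max_rounds: int = 3,  # assumed retry budget, not taken from the paper
) -> Tuple[str, Report, int]:
    """Generate code, evaluate it, and re-prompt with feedback until it is clean."""
    code = generate(task)
    report = evaluate(code)
    rounds = 0
    while not report.clean and rounds < max_rounds:
        code = generate(build_feedback_prompt(task, code, report))
        report = evaluate(code)
        rounds += 1
    return code, report, rounds


def fake_generate(prompt: str) -> str:
    # Stand-in for an LLM: buggy C at first, a fix once feedback is present.
    if "Failed tests:" in prompt:
        return "int add(int a, int b) { return a + b; }"
    return "int add(int a, int b) { return a - b; }"


def fake_evaluate(code: str) -> Report:
    # Stand-in for ground-truth tests and a static analyzer.
    failed = ["add(2, 3) == 5"] if "a - b" in code else []
    return Report(failed_tests=failed, warnings=[])


if __name__ == "__main__":
    final_code, final_report, used = repair_loop(
        "Write add(a, b) in C.", fake_generate, fake_evaluate
    )
    print(used, final_report.clean)
```

In a real setup, `evaluate` would compile the generated C code, run the ground-truth tests, and invoke a static analysis tool, while `generate` would call the model under study. Keeping both as plain callables lets the loop be exercised without either dependency.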

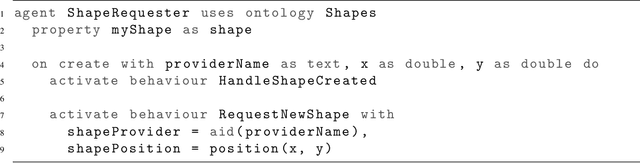

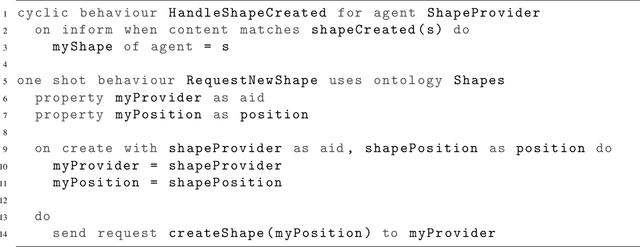

Exploratory Experiments on Programming Autonomous Robots in Jadescript

Jul 23, 2020

Abstract: This paper describes exploratory experiments to validate the possibility of programming autonomous robots using an agent-oriented programming language. Proper perception of the environment, by means of various types of sensors, and timely reaction to external events, by means of effective actuators, are essential to provide robots with a sufficient level of autonomy. The agent-oriented programming paradigm is relevant in this respect because it offers language-level abstractions to process events and to command actuators. A recent agent-oriented programming language called Jadescript is presented in this paper together with its new features specifically designed to handle events. Exploratory experiments on a simple case-study application are presented to show the validity of the proposed approach and to exemplify the use of the language to program autonomous robots.

* In Proceedings AREA 2020, arXiv:2007.11260

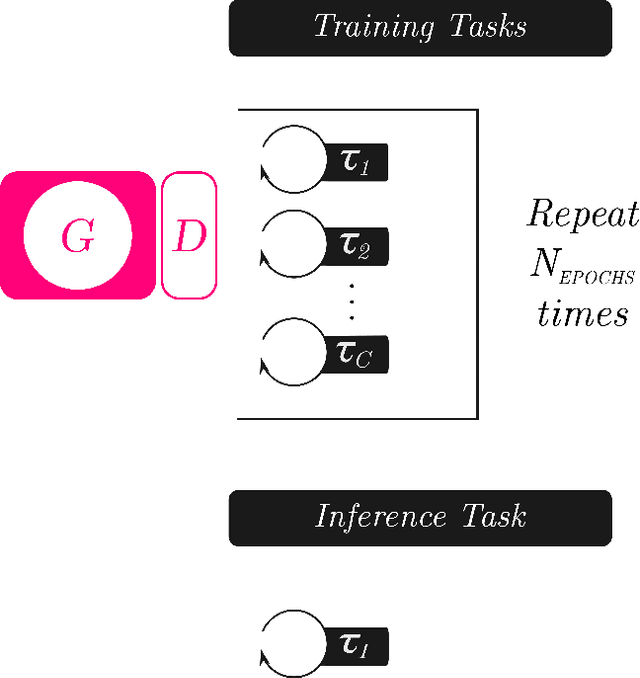

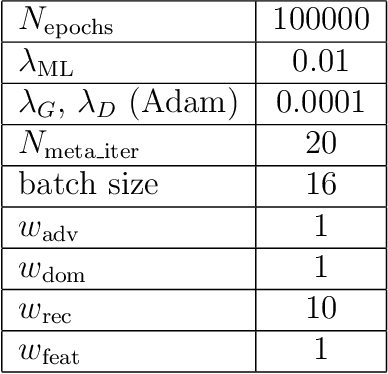

MetalGAN: Multi-Domain Label-Less Image Synthesis Using cGANs and Meta-Learning

Dec 05, 2019

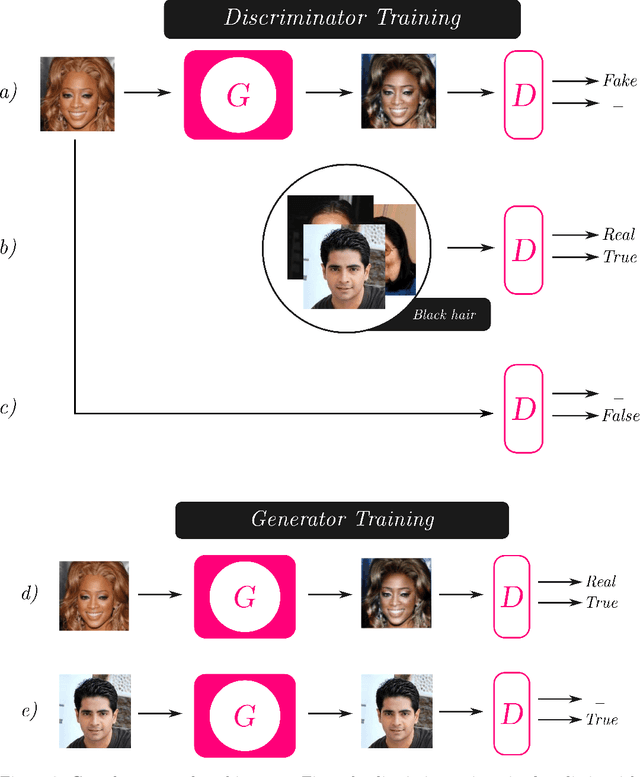

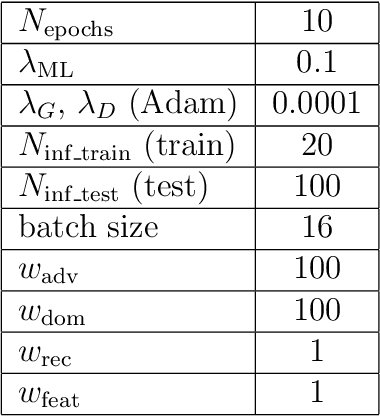

Abstract: Image synthesis is currently one of the most addressed image processing topics in the computer vision and deep learning fields. Researchers have tackled this problem by focusing on its several challenging aspects, e.g. image quality and size, domain and pose changing, architecture of the networks, and so on. Above all, producing images belonging to different domains by using a single architecture is a very relevant goal for image generation. In fact, a single multi-domain network would allow greater flexibility and robustness in the image synthesis task than other approaches. This paper proposes a novel architecture and a training algorithm, which are able to produce multi-domain outputs using a single network. A small portion of a dataset is intentionally used, and there are no hard-coded labels (or classes). This is achieved by combining a conditional Generative Adversarial Network (cGAN) for image generation and a Meta-Learning algorithm for domain switch; we call our approach MetalGAN. The approach has proved to be appropriate for solving the multi-domain problem and it is validated on facial attribute transfer, using the CelebA dataset.

MetalGAN: a Cluster-based Adaptive Training for Few-Shot Adversarial Colorization

Sep 17, 2019

Abstract: In recent years, the majority of works on deep-learning-based image colorization have focused on how to make good use of the enormous datasets currently available. What about when the available data are scarce? The main objective of this work is to prove that a network can be trained and can provide excellent colorization results even without a large quantity of data. The adopted approach is a mixed one, which uses an adversarial method for the actual colorization, and a meta-learning technique to enhance the generator model. Also, an a priori clustering of the training dataset ensures a task-oriented division useful for meta-learning, and at the same time reduces the per-step number of images. This paper describes in detail the method and its main motivations, and a discussion of results and future developments is provided.
