Ruben Rodriguez Torrado

Bootstrapping Conditional GANs for Video Game Level Generation

Oct 03, 2019

Abstract: Generative Adversarial Networks (GANs) have shown impressive results for image generation. However, GANs face challenges in generating content with certain types of constraints, such as game levels. Specifically, it is difficult to generate levels that are aesthetically appealing and playable at the same time. Additionally, because training data is usually limited, it is challenging to generate unique levels with current GANs. In this paper, we propose a new GAN architecture named Conditional Embedding Self-Attention Generative Adversarial Network (CESAGAN) and a new bootstrapping training procedure. CESAGAN is a modification of the self-attention GAN that incorporates an embedding feature vector input to condition the training of the discriminator and generator. This allows the network to model non-local dependencies between game objects and to count objects. Additionally, to reduce the number of levels needed to train the GAN, we propose a bootstrapping mechanism in which playable generated levels are added to the training set. The results demonstrate that the new approach not only generates a larger number of playable levels but also generates fewer duplicate levels than a standard GAN.
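The bootstrapping idea (grow the training set with generated levels that pass a playability check, skipping duplicates) can be sketched as a small loop. This is a minimal illustration, not the paper's implementation: `bootstrap_training_set`, `toy_generate` and `toy_playable` are hypothetical names, the random 3x3 grids stand in for GAN samples, and the "one avatar, at least one goal" rule stands in for a real playability test (for example, running an agent on the level).

```python
import random
from typing import Callable, List, Set, Tuple

# A level is a tuple of row strings, so levels are hashable and duplicates are easy to spot.
Level = Tuple[str, ...]


def bootstrap_training_set(
    training_set: List[Level],
    generate: Callable[[List[Level]], List[Level]],
    is_playable: Callable[[Level], bool],
    rounds: int,
) -> List[Level]:
    """Grow the training set with generated levels that are playable and not already present."""
    seen: Set[Level] = set(training_set)
    data = list(training_set)
    for _ in range(rounds):
        # In the real setting the GAN would be retrained on `data` before sampling;
        # here that step is folded into the hypothetical `generate` callback.
        for level in generate(data):
            if level not in seen and is_playable(level):
                seen.add(level)
                data.append(level)
    return data


# --- Toy usage (all hypothetical): random 3x3 grids stand in for GAN samples. ---
rng = random.Random(0)


def toy_generate(data: List[Level]) -> List[Level]:
    # Ignores `data`; a trained generator would reflect it through training.
    return [
        tuple("".join(rng.choice(".AG") for _ in range(3)) for _ in range(3))
        for _ in range(20)
    ]


def toy_playable(level: Level) -> bool:
    # Stand-in playability test: exactly one avatar ('A') and at least one goal ('G').
    flat = "".join(level)
    return flat.count("A") == 1 and "G" in flat


seed_levels: List[Level] = [("A..", "...", "..G")]
grown = bootstrap_training_set(seed_levels, toy_generate, toy_playable, rounds=5)
print(len(seed_levels), "->", len(grown))
```

Using a set of already-seen levels is one simple way to keep duplicates out of the growing training set; the abstract reports fewer duplicates as an outcome but does not say how (or whether) exact duplicates are filtered during bootstrapping.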

Evolving Agents for the Hanabi 2018 CIG Competition

Sep 26, 2018

Abstract: Hanabi is a cooperative card game with hidden information that has won important industry awards and received some recent academic attention. A two-track competition of agents for the game will take place at the 2018 CIG conference. In this paper, we develop a genetic algorithm that builds rule-based agents by determining the best sequence of rules from a fixed rule set to use as a strategy. In three separate experiments, we remove human assumptions regarding the ordering of rules, add new, more expressive rules to the rule set, and independently evolve agents specialized for specific game sizes. As a result, we achieve scores superior to previously published research for both mirror and mixed evaluation of agents.
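Searching for "the best sequence of rules from a fixed rule set" amounts to evolving a permutation of rule indices. The sketch below assumes a standard permutation GA (truncation selection with elitism, order crossover, swap mutation); the abstract does not name the operators, so these are illustrative choices. `toy_fitness` is hypothetical: in practice fitness would be the agent's mean score over many simulated Hanabi games.

```python
import random
from typing import Callable, List, Optional, Sequence

RuleOrder = List[int]  # a permutation of rule indices; earlier rules get priority


def order_crossover(p1: Sequence[int], p2: Sequence[int], rng: random.Random) -> RuleOrder:
    """Order crossover (OX): keep a slice of p1, fill remaining slots in p2's order."""
    n = len(p1)
    i, j = sorted(rng.sample(range(n), 2))
    child: List[Optional[int]] = [None] * n
    child[i : j + 1] = p1[i : j + 1]
    kept = set(p1[i : j + 1])
    fill = iter(g for g in p2 if g not in kept)
    return [g if g is not None else next(fill) for g in child]


def swap_mutation(order: Sequence[int], rng: random.Random, rate: float) -> RuleOrder:
    """With probability `rate`, swap the positions of two rules in the ordering."""
    mutated = list(order)
    if rng.random() < rate:
        a, b = rng.sample(range(len(mutated)), 2)
        mutated[a], mutated[b] = mutated[b], mutated[a]
    return mutated


def evolve_rule_order(
    n_rules: int,
    fitness: Callable[[RuleOrder], float],
    generations: int = 50,
    pop_size: int = 30,
    mutation_rate: float = 0.2,
    seed: int = 0,
) -> RuleOrder:
    """Evolve a rule ordering with truncation selection, OX crossover and swap mutation."""
    rng = random.Random(seed)
    population = [rng.sample(range(n_rules), n_rules) for _ in range(pop_size)]
    for _ in range(generations):
        # Keep the better half unchanged (elitism), refill with mutated offspring.
        elite = sorted(population, key=fitness, reverse=True)[: pop_size // 2]
        children = []
        while len(elite) + len(children) < pop_size:
            p1, p2 = rng.sample(elite, 2)
            children.append(swap_mutation(order_crossover(p1, p2, rng), rng, mutation_rate))
        population = elite + children
    return max(population, key=fitness)


# Hypothetical fitness: rewards orderings close to 0..n-1. A real fitness would
# play many games of Hanabi with the rule-based agent and average the scores.
def toy_fitness(order: RuleOrder) -> float:
    return -sum(abs(pos - rule) for pos, rule in enumerate(order))


best = evolve_rule_order(n_rules=8, fitness=toy_fitness)
print(best, toy_fitness(best))
```

Because the elite half survives unchanged, the best fitness in the population never decreases from one generation to the next, which matters when fitness is a noisy game score.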

Illuminating Generalization in Deep Reinforcement Learning through Procedural Level Generation

Sep 07, 2018

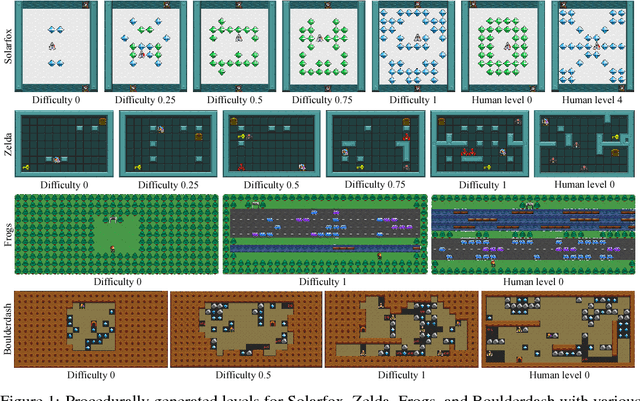

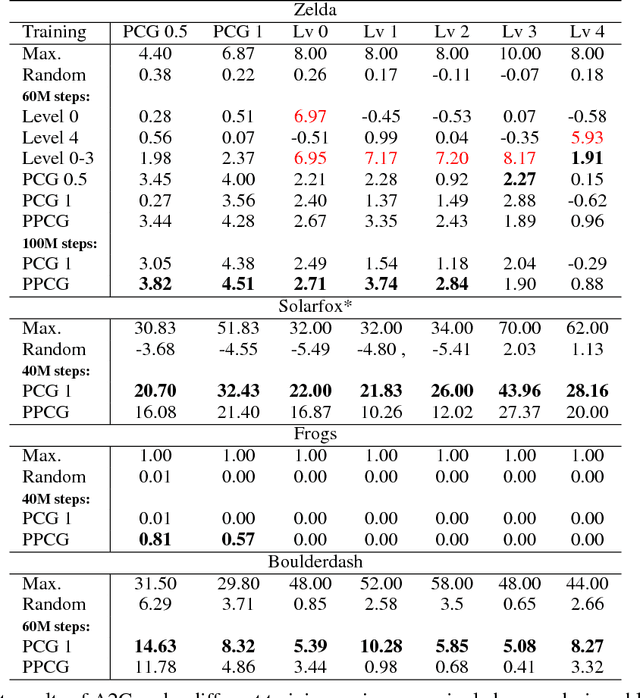

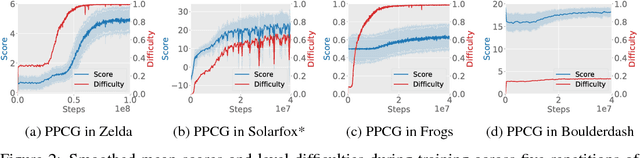

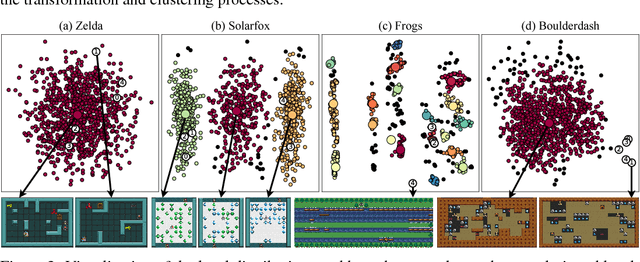

Abstract: Deep reinforcement learning (RL) has shown impressive results in a variety of domains, learning directly from high-dimensional sensory streams. However, when neural networks are trained in a fixed environment, such as a single level in a video game, they usually overfit and fail to generalize to new levels. When RL models overfit, even slight modifications to the environment can result in poor agent performance. In this paper, we explore how training on procedurally generated levels increases generality. We show that for some games procedural level generation enables generalization to new levels within the same distribution. Additionally, it is possible to achieve better performance with less data by adjusting the difficulty of the levels in response to the agent's performance. The generality of the learned behaviors is also evaluated on a set of human-designed levels. Our results show that the ability to generalize to human-designed levels depends heavily on the design of the level generators. We apply dimensionality reduction and clustering techniques to visualize the generators' distributions of levels and analyze to what degree they can produce levels similar to those designed by humans.
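Adjusting level difficulty in response to the agent's performance can be sketched as a scalar difficulty that rises after wins and falls after losses, feeding a parameterized generator. The abstract does not give the exact update rule, so the fixed-step, clamped update below is an assumption, and `DifficultyController`, `generate_level` and the one-row "enemy" levels are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class DifficultyController:
    """Track a difficulty value in [0, 1] and nudge it after every training episode."""

    difficulty: float = 0.0
    step: float = 0.01  # hypothetical adjustment size per episode

    def update(self, agent_won: bool) -> float:
        # Harder after a win, easier after a loss, clamped to [0, 1].
        delta = self.step if agent_won else -self.step
        self.difficulty = min(1.0, max(0.0, self.difficulty + delta))
        return self.difficulty


def generate_level(difficulty: float, width: int = 10, max_enemies: int = 5,
                   rng: Optional[random.Random] = None) -> str:
    """Hypothetical one-row generator: higher difficulty places more enemies ('E')."""
    rng = rng or random.Random()
    n_enemies = round(difficulty * max_enemies)
    cells = ["."] * width
    for pos in rng.sample(range(1, width - 1), n_enemies):
        cells[pos] = "E"
    cells[0], cells[-1] = "A", "G"  # avatar start and goal
    return "".join(cells)


# Toy training loop: the fixed outcomes stand in for real RL episodes.
controller = DifficultyController(step=0.1)
rng = random.Random(0)
for won in [True, True, True, False]:
    level = generate_level(controller.difficulty, rng=rng)
    controller.update(won)
print(round(controller.difficulty, 2))  # 0.2
```

The appeal of this kind of controller is that the agent keeps training near the edge of its ability: levels get harder only once it starts winning, instead of being sampled uniformly from the generator's full difficulty range.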

Deep Reinforcement Learning for General Video Game AI

Jun 06, 2018

Abstract: The General Video Game AI (GVGAI) competition and its associated software framework provide a way of benchmarking AI algorithms on a large number of games written in a domain-specific description language. While the competition has attracted plenty of interest, it has so far focused on online planning, providing a forward model that allows the use of algorithms such as Monte Carlo Tree Search. In this paper, we describe how we interface GVGAI to the OpenAI Gym environment, a widely used way of connecting agents to reinforcement learning problems. Using this interface, we characterize how widely used implementations of several deep reinforcement learning algorithms fare on a number of GVGAI games. We further analyze the results to provide a first indication of the relative difficulty of these games, both relative to each other and relative to those in the Arcade Learning Environment under similar conditions.
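The point of a Gym-style interface is that every game exposes the same `reset()`/`step()` contract, so one agent loop works across games. The sketch below is a self-contained toy that follows the classic Gym convention (`step` returns `(observation, reward, done, info)`) without importing Gym; `ToyGridGame`, its action names and its one-hot observations are hypothetical and are not the actual GVGAI wrapper's API.

```python
import random
from typing import Callable, Dict, List, Tuple

Observation = List[int]


class ToyGridGame:
    """Hypothetical stand-in for a GVGAI game wrapped in a Gym-style interface.

    Uses the classic Gym convention: reset() returns an observation and
    step(action) returns (observation, reward, done, info).
    """

    ACTIONS = ("NIL", "LEFT", "RIGHT")  # hypothetical discrete action set

    def __init__(self, width: int = 5, max_steps: int = 20):
        self.width = width
        self.max_steps = max_steps
        self.pos = 0
        self.t = 0

    def reset(self) -> Observation:
        self.pos, self.t = 0, 0
        return self._observe()

    def _observe(self) -> Observation:
        # One-hot row marking the avatar; a real wrapper would return screen pixels.
        return [1 if i == self.pos else 0 for i in range(self.width)]

    def step(self, action: int) -> Tuple[Observation, float, bool, Dict]:
        name = self.ACTIONS[action]
        if name == "LEFT":
            self.pos = max(0, self.pos - 1)
        elif name == "RIGHT":
            self.pos = min(self.width - 1, self.pos + 1)
        self.t += 1
        won = self.pos == self.width - 1
        done = won or self.t >= self.max_steps
        return self._observe(), (1.0 if won else 0.0), done, {"winner": won}


def run_episode(env: ToyGridGame, policy: Callable[[Observation], int]) -> float:
    """Generic agent loop: any environment with reset()/step() can be plugged in."""
    obs, done, total = env.reset(), False, 0.0
    while not done:
        obs, reward, done, _info = env.step(policy(obs))
        total += reward
    return total


rng = random.Random(0)
print(run_episode(ToyGridGame(), lambda obs: 2))  # always RIGHT: prints 1.0
print(run_episode(ToyGridGame(), lambda obs: rng.randrange(3)))  # random agent
```

Since `run_episode` only depends on `reset()` and `step()`, swapping the toy class for a real Gym-compatible environment would leave the agent loop unchanged, which is what lets off-the-shelf deep RL implementations be benchmarked on GVGAI games.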