Rounak Meyur

Cyber Knowledge Completion Using Large Language Models

Sep 24, 2024

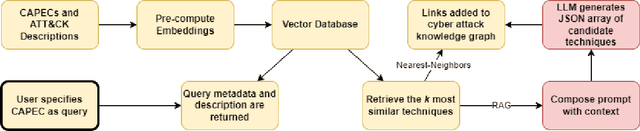

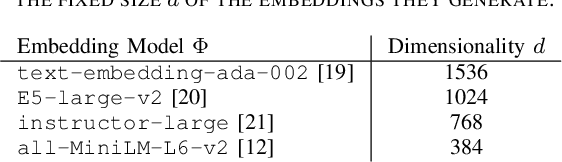

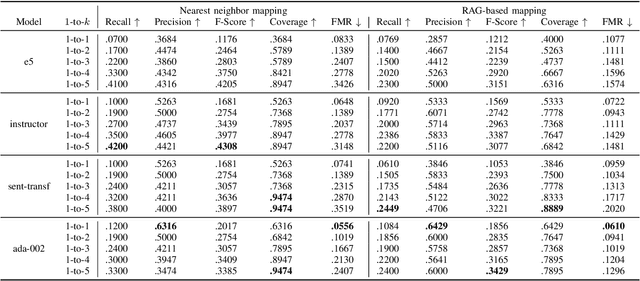

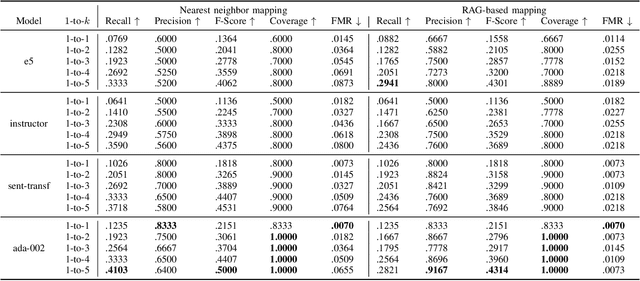

Abstract: The integration of the Internet of Things (IoT) into Cyber-Physical Systems (CPSs) has expanded their cyber-attack surface, introducing new and sophisticated threats with the potential to exploit emerging vulnerabilities. Assessing the risks of CPSs is increasingly difficult due to incomplete and outdated cybersecurity knowledge. This highlights the urgent need for better-informed risk assessments and mitigation strategies. While previous efforts have relied on rule-based natural language processing (NLP) tools to map vulnerabilities, weaknesses, and attack patterns, recent advancements in Large Language Models (LLMs) present a unique opportunity to enhance cyber-attack knowledge completion through improved reasoning, inference, and summarization capabilities. We apply embedding models to encapsulate information on attack patterns and adversarial techniques, generating mappings between them using vector embeddings. Additionally, we propose a Retrieval-Augmented Generation (RAG)-based approach that leverages pre-trained models to create structured mappings between different taxonomies of threat patterns. Further, we use a small hand-labeled dataset to compare the proposed RAG-based approach to a baseline standard binary classification model. Thus, the proposed approach provides a comprehensive framework to address the challenge of cyber-attack knowledge graph completion.
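
As a rough illustration of the embedding-based mapping step described in this abstract, the sketch below links each attack pattern to its nearest adversarial technique by cosine similarity. The three-dimensional vectors, the identifiers (`pattern_A`, `technique_X`, and so on), and the helper `map_by_embedding` are hypothetical placeholders; the paper's actual embedding model and matching rule are not specified here.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def map_by_embedding(sources, targets, top_k=1):
    """For each source item, return the ids of the top_k most similar target items."""
    mapping = {}
    for source_id, source_vec in sources.items():
        ranked = sorted(
            targets,
            key=lambda target_id: cosine_similarity(source_vec, targets[target_id]),
            reverse=True,
        )
        mapping[source_id] = ranked[:top_k]
    return mapping


# Toy embeddings (assumed values; real ones would come from an embedding model).
attack_patterns = {"pattern_A": [0.9, 0.1, 0.0], "pattern_B": [0.0, 0.2, 0.9]}
techniques = {"technique_X": [1.0, 0.0, 0.0], "technique_Y": [0.0, 0.0, 1.0]}

mapping = map_by_embedding(attack_patterns, techniques)
print(mapping)
```

In practice a similarity threshold or a larger `top_k` would likely be needed, since one attack pattern may correspond to several techniques or to none.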

PermitQA: A Benchmark for Retrieval Augmented Generation in Wind Siting and Permitting domain

Aug 21, 2024

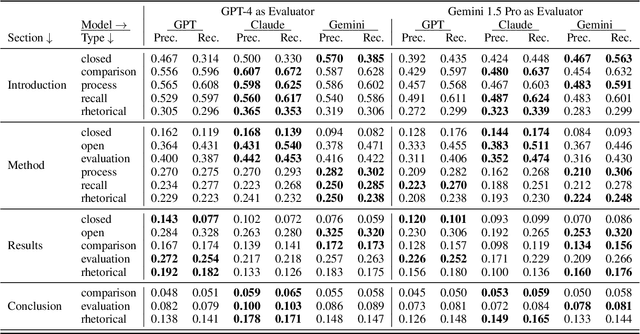

Abstract: In the rapidly evolving landscape of Natural Language Processing (NLP) and text generation, the emergence of Retrieval Augmented Generation (RAG) presents a promising avenue for improving the quality and reliability of generated text by leveraging information retrieved from a user-specified database. Benchmarking is essential to evaluate and compare the performance of different RAG configurations in terms of retriever and generator, providing insights into their effectiveness, scalability, and suitability for specific domains and applications. In this paper, we present a comprehensive framework to generate a domain-relevant RAG benchmark. Our framework is based on automatic question-answer generation with Human (domain experts)-AI Large Language Model (LLM) teaming. As a case study, we demonstrate the framework by introducing PermitQA, a first-of-its-kind benchmark on the wind siting and permitting domain, which comprises multiple scientific documents/reports related to the environmental impact of wind energy projects. Our framework systematically evaluates RAG performance using diverse metrics and multiple question types with varying complexity levels. We also demonstrate the performance of different models on our benchmark.

Hy-DAT: A Tool to Address Hydropower Modeling Gaps Using Interdependency, Efficiency Curves, and Unit Dispatch Models

Mar 05, 2024

Abstract: As the power system continues to be flooded with intermittent resources, it becomes more important to accurately assess the role of hydro and its impact on the power grid. While hydropower generation has been studied for decades, the dependency of power generation on water availability and the constraints in hydro operation are not well represented in the power system models used for planning and operation studies of large-scale interconnections. There are still multiple modeling gaps that need to be addressed; if not, they can lead to inaccurate operation and planning reliability studies, and consequently to unintentional load shedding or even blackouts. As a result, it is very important that hydropower is represented correctly in both steady-state and dynamic power system studies. In this paper, we discuss the development and use of the Hydrological Dispatch and Analysis Tool (Hy-DAT), an interactive graphical user interface that uses a novel methodology to address hydropower modeling gaps such as water availability and interdependency, using a database and algorithms to generate accurate representative models for power system simulation.

Fortify Your Defenses: Strategic Budget Allocation to Enhance Power Grid Cybersecurity

Dec 20, 2023

Abstract: The abundance of cyber-physical components in the modern-day power grid, with their diverse hardware and software vulnerabilities, has made it difficult to protect them from advanced persistent threats (APTs). An attack graph depicting the propagation of potential cyber-attack sequences from the initial access point to the end objective is vital to identify critical weaknesses of any cyber-physical system. Cybersecurity personnel can accordingly plan preventive mitigation measures for the identified weaknesses, addressing the cyber-attack sequences. However, limitations on the available cybersecurity budget restrict the choice of mitigation measures. We address this aspect through our framework, which solves the following problem: given potential cyber-attack sequences for a cyber-physical component in the power grid, find the optimal manner to allocate an available budget to implement necessary preventive mitigation measures. We formulate the problem as a mixed integer linear program (MILP) to identify the optimal budget partition and set of mitigation measures which minimize the vulnerability of cyber-physical components to potential attack sequences. We assume that the allocation of budget affects the efficacy of the mitigation measures. We show how altering the budget allocation for tasks such as asset management, cybersecurity infrastructure improvement, incident response planning and employee training affects the choice of the optimal set of preventive mitigation measures and modifies the associated cybersecurity risk. The proposed framework can be used by cyber policymakers and system owners to allocate optimal budgets for various tasks required to improve the overall security of a cyber-physical system.

Fault location in High Voltage Multi-terminal dc Networks Using Ensemble Learning

Jan 20, 2022

Abstract: Precise location of faults in long-distance power transmission networks is essential for a faster repair and restoration process. High Voltage direct current (HVdc) networks using modular multi-level converter (MMC) technology have gained prominence for interconnected multi-terminal networks. This allows for long-distance bulk power transmission at lower costs. However, they face the challenge of dc faults. Fast and efficient methods to isolate the network under dc faults have been widely studied and investigated. After successful isolation, it is essential to precisely locate the fault. The post-fault voltage and current signatures are a function of multiple factors, and thus accurately locating faults on a multi-terminal network is challenging. In this paper, we discuss a novel data-driven ensemble learning based approach for accurate fault location. Here we utilize the eXtreme Gradient Boosting (XGB) method for accurate fault location. The sensitivity of the proposed algorithm to measurement noise, fault location, resistance and current limiting inductance is evaluated on a radial three-terminal MTdc network designed in Power System Computer Aided Design (PSCAD)/Electromagnetic Transients including dc (EMTdc).

DeVLearn: A Deep Visual Learning Framework for Localizing Temporary Faults in Power Systems

Nov 09, 2019

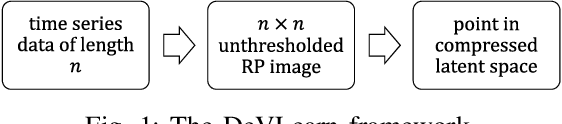

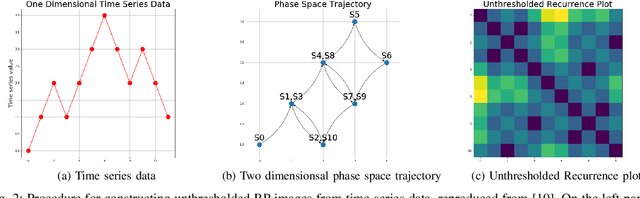

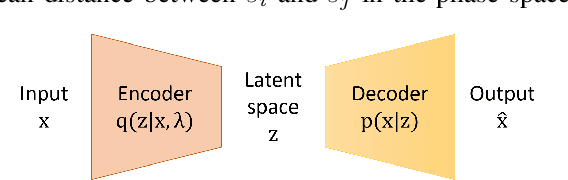

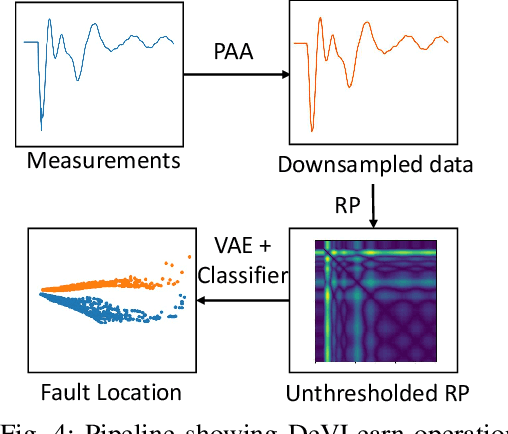

Abstract: Frequently recurring transient faults in a transmission network may be indicative of impending permanent failures. Hence, determining their location is a critical task. This paper proposes a novel image-embedding-aided deep learning framework called DeVLearn for faulted line location using PMU measurements at generator buses. Inspired by breakthroughs in computer vision, DeVLearn represents measurements (one-dimensional time series data) as two-dimensional unthresholded Recurrence Plot (RP) images. These RP images preserve the temporal relationships present in the original time series and are used to train a deep Variational Auto-Encoder (VAE). The VAE learns the distribution of latent features in the images. Our results show that for faults on two different lines in the IEEE 68-bus network, DeVLearn is able to project PMU measurements into a two-dimensional space such that data for faults at different locations separate into well-defined clusters. This compressed representation may then be used with off-the-shelf classifiers for determining fault location. The efficacy of the proposed framework is demonstrated using local voltage magnitude measurements at two generator buses.
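
To make the time-series-to-image step concrete, here is a minimal sketch of an unthresholded recurrence plot for a scalar series, where pixel (i, j) holds the distance |x_i - x_j|. The function name `recurrence_plot` and the sample `signal` are illustrative assumptions; the paper may use a different distance measure, embedding dimension, or normalization before feeding the images to the VAE.

```python
def recurrence_plot(series):
    """Unthresholded recurrence plot: entry [i][j] is |series[i] - series[j]|."""
    n = len(series)
    return [[abs(series[i] - series[j]) for j in range(n)] for i in range(n)]


# Hypothetical four-sample measurement window (not data from the paper).
signal = [0.0, 1.0, 0.0, -1.0]
rp = recurrence_plot(signal)

for row in rp:
    print(row)
```

The resulting matrix is symmetric with a zero diagonal, and samples with equal values (here the first and third) produce zero entries off the diagonal, which is how recurring states in the signal show up as structure in the image.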
